This guide is meant for two audiences:

- Companies focused on just loading a cloud data warehouse

- Companies also interested in other data integration projects

Key Data Integration Alternatives to Consider

There are three data integration alternatives to consider:

- ELT (Extract, Load, and Transform) - Most ELT technologies are newer, built within the last 10-15 years. ELT is focused on running transforms using dbt or SQL in cloud data warehouses. It’s mostly multi-tenant SaaS, with some open source options as well. Comparing ELT vendors is the focus of this guide.

- ETL (Extract, Transform, and Load) - ETL started to become popular 30 years ago as the preferred way to load data warehouses. Over time it was also used for data integration and data migration. For more, read The Data Engineer’s Guide to ETL Alternatives.

- CDC (Change Data Capture) - CDC is really a subset of ETL and ELT, but technologies like Debezium can be used to build your own custom data pipeline. If you’re interested in CDC you can read The Data Engineer’s Guide to CDC for Analytics, Ops, and AI Pipelines.

ELT has been widely adopted for loading cloud data warehouses. That’s in part because modern ELT was built specifically for loading BigQuery, Snowflake, and Redshift originally. Pay-as-you-go cloud data warehouses, and pay-as-you-go ELT with data warehouses running the “T” as dbt or SQL, made it easy to get started.

But ELT SaaS has rarely been used for other projects. Data integration with other destinations usually requires ETL and real-time data movement. Companies who have regulatory and security requirements require private cloud or on premises deployments. Most have historically opted for ETL for these reasons.

Top ELT Vendors to Consider in 2024

This guide evaluates vendors who support ELT, listed in alphabetical order to avoid bias.

- Airbyte (ELT)

- Estuary (ELT/ETL)

- Fivetran (ELT)

- Hevo (ELT)

- Meltano (ELT)

The ETL guide will compare the following ETL vendors:

- Estuary

- Informatica

- Matillion

- Rivery (ELT/ETL)

- Talend

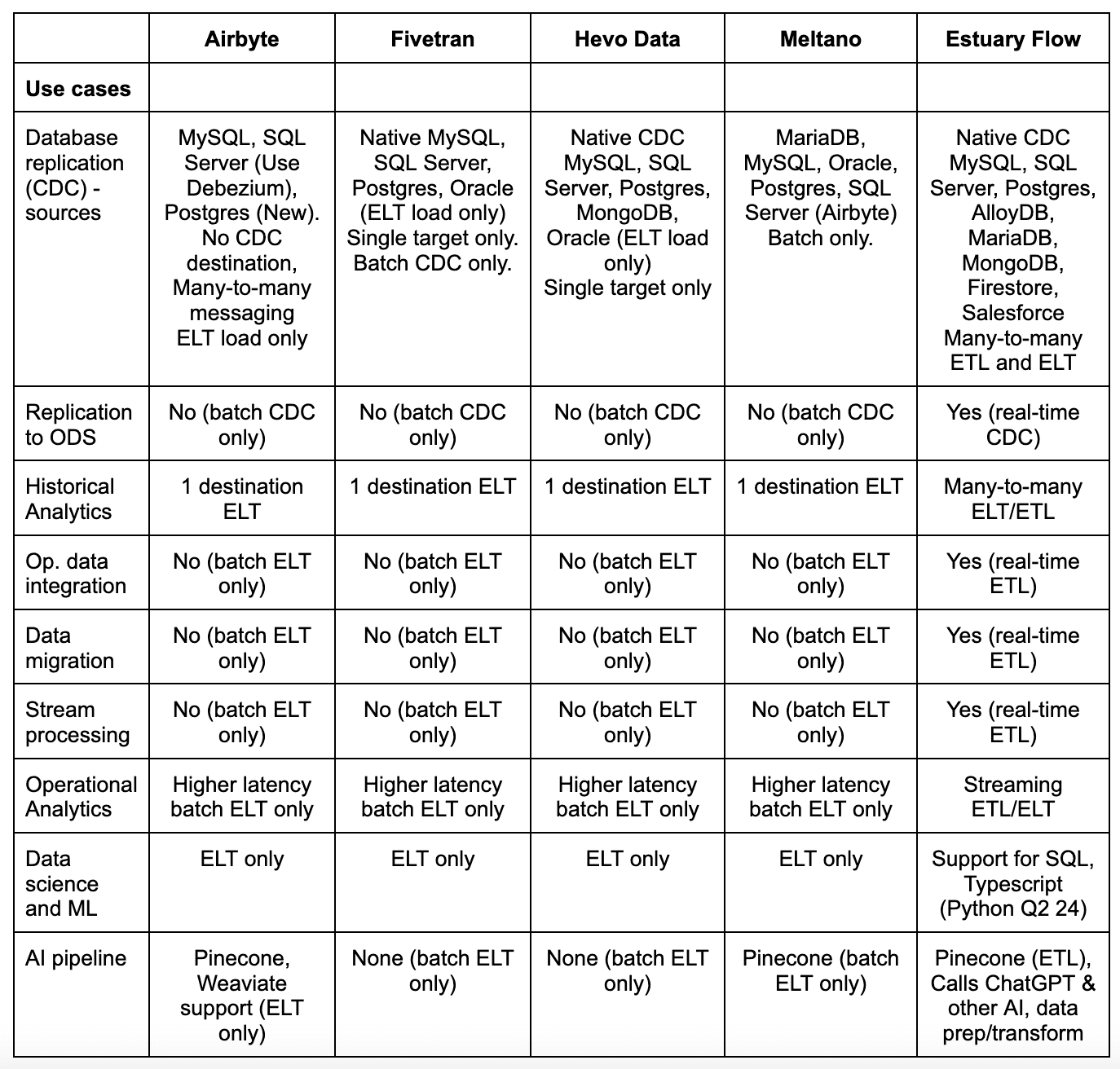

While there is a summary of each vendor, an overview of their architecture, and when to consider them, the detailed comparison is best seen in the comparison matrix, which covers the following categories:

- 9 Use cases - database replication, replication for operational data stores (ODS), historical analytics, data integration, data migration, stream processing, operational analytics, data science and ML, and AI

- Connectors - packaged, CDC, streaming, 3rd party support, SDK, and APIs

- Core features - full/incremental snapshots, backfilling, transforms, languages, streaming+batch modes, delivery guarantees, time travel, data types, schema evolution, DataOps

- Deployment options - public (multi-tenant) cloud, private cloud, and on-premises

- The “abilities” - performance, scalability, reliability, and availability

- Security - including authentication, authorization, and encryption

- Costs - Ease of use, vendor and other costs you need to consider

Over time, we will also be adding more information about other vendors that at the very least deserve honorable mention, and at the very best should be options you consider for your specific needs. Please speak up if there are others to consider.

It’s OK to choose a vendor that’s good enough. The biggest mistakes you can make are:

- Choosing a good vendor for your current project but the wrong vendor for future needs

- Not understanding your future needs

- Not insulating yourself from a vendor

Make sure to understand and evaluate your future needs and design your pipeline for modularity so that you can replace components, including your ELT/ETL vendor, if necessary.

Hopefully by the end of this guide you will understand the relative strengths and weaknesses of each vendor, and how to evaluate these vendors based on your current and future needs.

Comparison Criteria

This guide starts with the detailed comparison before moving into an analysis of each vendor’s strengths, weaknesses, and when to use them.

The comparison matrix covers the following categories:

Use cases - Over time, you will end up using data integration for most of these use cases. Make sure you look across your organization for current and future needs. Otherwise you might end up with multiple data integration technologies, and a painful migration project.

- Replication - Read-only and read-write load balancing of data from a source to a target for operational use cases. CDC vendors are often used in cases where built-in database replication does not work.

- Operational data store (ODS) - Real-time replication of data from many sources into an ODS for offloading reads or staging data for analytics.

- Historical analytics - The use of data warehouses for dashboards, analytics, and reporting. CDC-based ETL or ELT is used to feed the data warehouse.

- Operational data integration - Synchronizing operational data across apps and systems, such as master data or transactional data, to support business processes and transactions.

NOTE: none of the vendors in this evaluation support many apps as destinations. They only support data synchronization for the underlying databases.

- Data migration - This usually involves extracting data from multiple sources, building the rules for merging data and data quality, testing it out side-by-side with the old app, and migrating users over time. The data integration vendor used becomes the new operational data integration vendor.

NOTE: ELT vendors only support database destinations for data migration.

- Stream processing - Using streams to capture and respond to specific events.

- Operational analytics - The use of data in real-time to make operational decisions. It requires specialized databases with sub-second query times, and usually also requires low end-to-end latency with sub-second ingestion times as well. For this reason the data pipelines usually need to support real-time ETL with streaming transformations.

- Data science and machine learning - This generally involves loading raw data into a data lake that is used for data mining, statistics and machine learning, or data exploration including some ad hoc analytics. For data integration vendors this is very similar to loading data lakes/lakehouses/warehouses.

- AI - the use of large language models (LLM) or other artificial intelligence and machine learning models to do anything from generating new content to automating decisions. This usually involves different data pipelines for model training and model execution including Retrieval Augmented Generation (RAG).

Connectors - The ability to connect to sources and destinations in batch and real-time for different use cases. Most vendors have so many connectors that the best way to evaluate vendors is to pick your connectors and evaluate them directly in detail.

- Number of connectors - The number of source and destination connectors. What’s important is the number of high-quality and real-time connectors, and that the connectors you need are included. Make sure to evaluate each vendor’s specific connectors and their capabilities for your projects. The devil is in the details.

- Streaming (CDC, Kafka) - All vendors support batch. The biggest difference is how much each vendor supports CDC and streaming sources and destinations.

- Destinations - Does the vendor support all the destinations that need the source data, or will you need to find another way to load for select projects?

- Support for 3rd party connectors - Is there an option to use 3rd party connectors?

- CDK - Can you build your own connectors using a connector development kit (CDK)?

- API - Is an admin API available to help integrate and automate pipelines?

Core features - How well does each vendor support core data features required to support different use cases? Source and target connectivity are covered in the Connectors section.

- Batch and streaming support - Can the product support streaming, batch, and both together in the same pipeline?

- Transformations - What level of support is there for streaming and batch ETL and ELT? This includes streaming transforms, and incremental and batch dbt support in ELT mode. What languages are supported? How do you test?

- Delivery guarantees - Is delivery guaranteed to be exactly once and in order?

- Data types - Support for structured, semi-structured, and unstructured data types.

- Store and replay - The ability to add historical data during integration, or later additions of new data in targets.

- Time travel - The ability to review or reuse historical data without going back to sources.

- Schema evolution - support for tracking schema changes over time, and handling it automatically.

- DataOps - Does the vendor support multi-stage pipeline automation?

Deployment options - does the vendor support public (multi-tenant) cloud, private cloud, and on-premises (self-deployed)?

The “abilities” - How does each vendor rank on performance, scalability, reliability, and availability?

- Performance (latency) - what is the end-to-end latency in real-time and batch mode?

- Scalability - Does the product provide elastic, linear scalability?

- Reliability - How does the product ensure reliability for real-time and batch modes? One of the biggest challenges, especially with CDC, is ensuring reliability.

Security - Does the vendor implement strong authentication, authorization, RBAC, and end-to-end encryption from sources to targets?

Costs - the vendor costs, and total cost of ownership associated with data pipelines

- Ease of use - The degree to which the product is intuitive and straightforward for users to learn, build, and operate data pipelines.

- Vendor costs - including total costs and cost predictability

- Labor costs - Amount of resources required and relative productivity

- Other costs - Including additional source, pipeline infrastructure or destination costs

Comparison Matrix

Below is a detailed comparison of the top ELT and ETL vendors across various categories, including use cases, connectors, core features, deployment options, and more.

Comparison by Use Cases

| | Airbyte | Fivetran | Hevo Data | Meltano | Estuary Flow |

|---|---|---|---|---|---|

| Use cases | |||||

| Database replication (CDC) - sources | MySQL, SQL Server (Use Debezium), Postgres (New). No CDC destination, Many-to-many messaging ELT load only | Native MySQL, SQL Server, Postgres, Oracle Single target only. Batch CDC only. | Native CDC MySQL, SQL Server, Postgres, MongoDB, Oracle (ELT load only) Single target only | MariaDB, MySQL, Oracle, Postgres, SQL Server (Airbyte) | Native CDC MySQL, SQL Server, Postgres, AlloyDB, MariaDB, MongoDB, Firestore, Salesforce Many-to-many ETL and ELT |

| Replication to ODS | No (batch CDC only) | No (batch CDC only) | No (batch CDC only) | No (batch CDC only) | Yes (real-time CDC) |

| Historical Analytics | 1 destination ELT | 1 destination ELT | 1 destination ELT | 1 destination ELT | Many-to-many ELT/ETL |

| Op. data integration | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | Yes (real-time ETL) |

| Data migration | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | Yes (real-time ETL) |

| Stream processing | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | No (batch ELT only) | Yes (real-time ETL) |

| Operational Analytics | Higher latency batch ELT only | Higher latency batch ELT only | Higher latency batch ELT only | Higher latency batch ELT only | Streaming ETL/ELT |

| Data science and ML | ELT only | ELT only | ELT only | ELT only | Support for SQL, Typescript |

| AI pipeline | Pinecone, Weaviate support (ELT only) | None (batch ELT only) | None (batch ELT only) | Pinecone (batch ELT only) | Pinecone (ETL), Calls ChatGPT & other AI, data prep/transform |

Comparison by Connectors

| | Airbyte | Fivetran | Hevo Data | Meltano | Estuary Flow |

|---|---|---|---|---|---|

| Connectors | |||||

| Number of connectors | 50+ maintained connectors, 300 marketplace connectors | <300 connectors | 150+ connectors built by Hevo | 200+ Singer tap connectors | 150+ high performance connectors built by Estuary |

| Streaming connectors | Batch CDC only. Batch Kafka, Kinesis (destination only) | Batch CDC only. | Batch CDC, Kafka batch (source only). | Batch CDC, Batch Kafka source, Batch Kinesis destination | Streaming CDC, Kafka, Kinesis (source only) |

| Support for 3rd party connectors | No | No | No | 350+ Airbyte connectors (via Meltano SDK wrapper) | Support for 500+ Airbyte, Stitch, and Meltano connectors |

| Custom SDK | None | Yes | None | Yes | Yes |

| API (for admin) | Yes | Yes | Yes | Yes, but deprecated | Yes Estuary API docs |

Comparison by Core Features

| | Airbyte | Fivetran | Hevo Data | Meltano | Estuary Flow |

|---|---|---|---|---|---|

| Core features | |||||

| Batch and streaming | Batch only | Batch only | Batch only | Batch only | Streaming to batch Batch to streaming |

| ETL Transforms | None | None | Python scripts. Drag-and-drop row-level transforms in beta. | None | SQL & TypeScript (Python Q124). |

| Workflow | None | None | None (Deprecated) | Airflow support | Many-to-many pub-sub ETL |

| ELT transforms | dbt and SQL. Separate orchestration | ELT only with | Dbt. | Dbt (can also migrate into Meltano projects) | Dbt. Integrated orchestration. |

| Delivery guarantee | Exactly once batch, | Exactly once (batch only) | Exactly once (batch only) | At least once (Singer-based) | Exactly once (streaming, batch, mixed) |

| Load write method | Append only (soft deletes) | Append only or update in place (soft deletes) | Append only (soft deletes) | Append only (soft deletes) | Append only or update in place (soft or hard deletes) |

| Store and replay | No storage. Requires new extract for each backfill or CDC restart. | No storage. Requires new extract for each backfill or CDC restart. | No storage. Requires new extract for each backfill or CDC restart. | No storage. Requires new extract for each backfill or CDC restart. | Yes. Can backfill multiple targets and times without requiring new extract. |

| Time travel | No | No | No | No | Yes |

| Schema inference and drift | No schema evolution for CDC | Good schema inference, automating schema evolution | Does schema inference and some evolution with auto mapping but no support for fully automating schema evolution | No | Good schema inference, automating schema evolution |

| DataOps support | No CLI, | CLI, | No CLI, | CLI, API | CLI, API, |

Comparison by Deployment Options, Abilities & Security

| | Airbyte | Fivetran | Hevo Data | Meltano | Estuary Flow |

|---|---|---|---|---|---|

| Deployment options | Open source, public cloud | Cloud, limited private cloud (5 sources, 4 destinations), self-hosted HVR | Public cloud | Open source | Open source, Public cloud, private cloud |

| The abilities | |||||

| Performance (minimum latency) | 1 hour min for Airbyte Cloud, one source at a time. 5 minutes (CDC and batch connectors) for open source. | Theoretically 15 minutes enterprise, 1 minute business critical. But most deployments are in the 10s of minutes to hour intervals | 5 minutes (CDC and batch) in theory. In reality intervals are similar to others - 10s of minutes to 1 hour+ intervals. | Can be reduced to seconds. But it is batch by design, scales better with longer intervals. Typically 10s of minutes to 1+ hour intervals. | < 100 ms (in streaming mode) Supports any batch interval as well and can mix streaming and batch in 1 pipeline. |

| Scalability | Low-Medium | Medium-High | Low-Medium | Low-medium. | High |

| Reliability | Medium | Medium-High. Issues with CDC. | Medium | Medium | High |

| Security | |||||

| Data Source Authentication | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth / API Keys | OAuth / API Keys | OAuth 2.0 / API Tokens |

| Encryption | Encryption at rest, in-motion | Encryption at rest, in-motion | Encryption at rest, in-motion | Encryption at rest, in-motion | Encryption at rest, in-motion |

Comparison of Costs

| | Airbyte | Fivetran | Hevo Data | Meltano | Estuary Flow |

|---|---|---|---|---|---|

| Support | Low-Medium | Medium | Medium | Low-Medium | High |

| Costs | |||||

| Vendor costs | Low-medium | High | Medium-high | Low-medium | Low |

| Data engineering costs | Med-High | Low-Med | Med | Med-High | Low-Med |

| Admin costs | Med | Med-High | Low-Med | Med-High (self-managed open source) | Low |

Airbyte

Airbyte was founded in 2020 as an open source data integration company, and launched its cloud service in 2022.

While this section is about Airbyte, you could include Stitch and Meltano here because they all support the Singer framework. Stitch created the Singer open source ETL project and built their offering around it. Stitch then got acquired by Talend, which in turn was acquired by Qlik. This left Singer without a major company driving its innovation. Instead, there are several companies who use the connectors. Meltano is one of those. They have built on the Stitch taps (connectors) and other open source projects.

Airbyte started as a Singer-based ELT tool, but has since moved to its own protocol and connectors. Airbyte has kept Singer compatibility so that it can support Singer taps as needed. Airbyte has also kept many of the same principles, including being batch-based. This is ultimately where Airbyte’s limitations come from.

If you go by pricing calculators and customers, Airbyte is the second lowest cost vendor in the evaluation after Estuary. Most of the companies we’ve talked to were considering cloud options, so we’ll focus on Airbyte cloud.

- Latency: While Airbyte has CDC source connectors mostly built on Debezium (except for a new Postgres CDC connector), and also has Kafka and Kinesis source connectors, everything is loaded in intervals of 5 minutes or more. There is no staging or storage, so if something goes wrong with either source or target the pipeline stops. Airbyte is pulling from source connectors in batch intervals. When using CDC, this can put a load on the source databases. In addition, because all Airbyte CDC connectors (other than the new Postgres connector) use Debezium, it is not exactly-once, but at-least-once guaranteed delivery. Latency is also made worse with batch ELT because you need to wait for loads and transforms in the target data warehouse.

- Reliability: There are some issues with reliability you will need to manage. Most CDC sources, because they’re built on Debezium, only ensure at-least-once delivery. This means you will need to deduplicate (dedup) at the target. Airbyte does have both incremental and deduped modes you can use; you just need to remember to turn them on. Because Debezium uses Kafka, it puts less of a load on a source than Fivetran CDC. A bigger reliability issue is failure of under-sized workers. There is no scale-out option. Once a worker gets overloaded you will have reliability issues (see Scalability). There is also no staging or storage within an Airbyte pipeline to preserve state. If you need the data again, you’ll have to re-extract from the source.

- Scalability: Airbyte is not known for scalability. It has scalability issues that may make it unsuitable for your larger workloads. For example, each Airbyte operation of extracting from a source or loading into a target is done by one worker. The source worker is generally the most important component, and its most important resource is memory. The source worker will read up to 10,000 records into memory, which could lead to GBs of RAM. By default only 25% of each instance’s memory is allocated to the worker container, which you have little control over in Airbyte Cloud.

Airbyte is working on scalability. The new PostgreSQL CDC connector does have improved performance. Its latest benchmark as of the time of this writing produced 9MB/sec throughput, higher than Fivetran’s (non HVR) connector. But this is still only 0.5TB a day or so depending on how loads vary throughout the day.

- ELT only: Airbyte cloud supports dbt cloud. This is different from dbt core used by Fivetran. If you have implemented on dbt core in a way that makes it portable (which you should) the move can be relatively straightforward. But if you want to implement transforms outside of the data warehouse, Airbyte does not support that.

- DataOps: Airbyte provides UI-based replication designed for ease of use. It does not give you an “as code” mode that helps with automating end-to-end pipelines, adding tests, or managing schema evolution.
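The at-least-once delivery described in the reliability bullet above means the same change can arrive more than once, so the target has to deduplicate. Below is a minimal Python sketch of that idea. It assumes each record carries a primary key and a monotonically increasing cursor; the field names `id` and `lsn` are hypothetical and are not Airbyte's actual schema or its deduped sync mode.

```python
from typing import Dict, Iterable, List


def dedupe_latest(records: Iterable[dict], key: str = "id", cursor: str = "lsn") -> List[dict]:
    """Keep only the newest version of each key and drop redelivered duplicates."""
    latest: Dict[object, dict] = {}
    for rec in records:
        seen = latest.get(rec[key])
        # Replace only when strictly newer, so an exact redelivery is ignored.
        if seen is None or rec[cursor] > seen[cursor]:
            latest[rec[key]] = rec
    return sorted(latest.values(), key=lambda r: r[key])


# Hypothetical change events; the third one is a redelivery of the first.
events = [
    {"id": 1, "lsn": 10, "status": "new"},
    {"id": 2, "lsn": 11, "status": "new"},
    {"id": 1, "lsn": 10, "status": "new"},
    {"id": 1, "lsn": 12, "status": "paid"},  # later update to the same row
]
deduped = dedupe_latest(events)
```

In a warehouse the same logic is usually expressed as a SQL merge or a window function over the key and cursor columns; the in-memory version here only shows the rule being applied.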

Fivetran

Fivetran was founded in 2012 by data scientists who wanted an integrated stack to capture and analyze data. The name was a play on Fortran and meant to refer to a programming language for big data. After a few years the focus shifted to providing just the data integration part because that’s what so many prospects wanted. Fivetran was designed as an ELT (Extract, Load, and Transform) architecture because in data science you don’t usually know what you’re looking for, so you want the raw data.

In 2018, Fivetran raised their series A, and then added more transformation capabilities in 2020 when it released Data Build Tool (dbt) support. That year Fivetran also started to support CDC. Fivetran has since continued to invest more in CDC with its HVR acquisition.

Fivetran’s design worked well for many companies adopting cloud data warehouses starting a decade ago. While all ETL vendors also supported “EL” and it was occasionally used that way, Fivetran was cloud-native, which helped make it much easier to use. The “EL” is mostly configured, not coded, and the transformations are built on dbt core (SQL and Jinja), which many data engineers are comfortable using.

But today Fivetran often comes up in conversations as a vendor customers are trying to replace. Understanding why can help you understand Fivetran’s limitations.

The most common points that come up in these conversations and online forums are about needing lower latency, improved reliability, and lower, more predictable costs:

- Latency: While Fivetran uses change data capture at the source, it is batch CDC, not streaming. Enterprise-level is guaranteed to be 15 minutes of latency. Business critical is 1 minute of latency, but costs more than 2x the standard edition. Its ELT architecture can also be slowed down by the target load and transformation times.

- Costs: Another major complaint is Fivetran’s high vendor costs, which have been 5x the cost of Estuary as stated by customers. Fivetran costs are based on monthly active rows (MAR) that change at least once per month. This may seem low, but for several reasons (see below) it can quickly add up.

Lower latency is also very expensive. To reduce latency from 1 hour to 15 minutes can cost you 33-50% more (1.5x) per million MAR, and 100% (2x) or more to reduce latency to 1 minute. Even then, you still have the latency of the data warehouse load and transformations. The additional costs of frequent ingestions and transformations in the data warehouse can also be expensive and take time. Companies often keep latency high to save money.

- Unpredictable costs: Another major reason for high costs is that MARs are based on Fivetran’s internal representation of rows, not rows as you see them in the source.

For some data sources you have to extract all the data across tables, which can mean many more rows. Fivetran also converts data from non-relational sources such as SaaS apps into highly normalized relational data. Both make MARs and costs unexpectedly soar. This also does not account for the initial load where all rows count.

- Reliability: Another reason for replacing Fivetran is reliability. Customers have struggled with a combination of alerts of load failures, and subsequent support calls that result in a longer time to resolution. There have been several complaints about reliability with MySQL and Postgres CDC, which is due in part to Fivetran’s use of batch CDC. Fivetran also had a 2.5 day outage in 2022. Make sure you understand Fivetran’s current SLA in detail. Fivetran has had an “allowed downtime interval” of 12 hours before downtime SLAs start to go into effect on the downtime of connectors. They also do not include any downtime from their cloud provider.

- Deployment options: While Fivetran claims private cloud as an option, it’s not really an option. Its private cloud deployment requires some installation work and only supports 5 databases and 4 data warehouses. There is also a self-hosted option for HVR only.

- Support: Customers also complain about Fivetran support being slow to respond. Combined with reliability issues, this can lead to a substantial amount of data engineering time being lost to troubleshooting and administration.

- DataOps: Fivetran does not provide much control or transparency into what they do with data and schema. They alter field names and change data structures and do not allow you to rename columns. This can make it harder to migrate to other technologies. Fivetran also doesn’t always bring in all the data depending on the data structure, and does not explain why.

- Roadmap: Customers frequently comment that Fivetran does not reveal as much of a future direction or roadmap as the other vendors in this comparison, and does not adequately address many of the above points.
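The latency and cost bullets above describe how lower latency multiplies the price per million MAR. The following Python sketch works through that arithmetic. The multipliers are taken from the ranges quoted above (1.5x is the upper end of the 33-50% range, and 2x is the lower bound for 1 minute), and the base price is a made-up figure for illustration only. It is not Fivetran's price list, and real pricing is tiered rather than flat.

```python
# Illustrative multipliers based on the ranges quoted in this guide.
LATENCY_MULTIPLIER = {
    60: 1.0,  # 1 hour sync interval, used as the baseline
    15: 1.5,  # upper end of the quoted 33-50% increase
    1: 2.0,   # quoted as "100% (2x) or more"
}


def estimated_monthly_cost(
    monthly_active_rows: int,
    base_price_per_million: float,
    interval_minutes: int,
) -> float:
    """Flat-rate estimate: millions of MAR x base price x latency multiplier."""
    millions = monthly_active_rows / 1_000_000
    return millions * base_price_per_million * LATENCY_MULTIPLIER[interval_minutes]


# Hypothetical workload: 20 million MAR at an assumed 100.0 per million MAR.
hourly = estimated_monthly_cost(20_000_000, 100.0, 60)
fifteen_minutes = estimated_monthly_cost(20_000_000, 100.0, 15)
one_minute = estimated_monthly_cost(20_000_000, 100.0, 1)
```

Under these assumptions the same workload costs 1.5 times as much at 15 minutes and twice as much at 1 minute, before counting the extra warehouse compute for more frequent loads and transforms.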

Hevo Data

Hevo is a cloud-based ETL/ELT service for building data pipelines that, unlike Fivetran, started as a cloud service in 2017, which makes it more mature than Airbyte. Like Fivetran, Hevo is designed for “low code”, though it does provide a little more control to map sources to targets, or add simple transformations using Python scripts or a new drag-and-drop editor (currently in Beta) in ETL mode. Stateful transformations such as joins or aggregations, like Fivetran, should be done using ELT with SQL or dbt.

While Hevo is a good option for someone getting started with ELT, as one user put it, “Hevo has its limits.”

- Connectivity: Hevo has the lowest number of connectors at slightly over 150. While this is a lot, it means you need to think about what sources and destinations you need for your current and future projects to make sure it will support your needs.

- Latency: Hevo is still mostly batch-based connectors on a streaming Kafka backbone. While data is converted into “events” that are streamed, and streams can be processed if scripts are written for any basic row-level transforms, Hevo connectors to sources, even when CDC is used, are batch. There are starting to be a few exceptions. For example, you can use the streaming API in BigQuery, not just the Google Cloud Storage staging area. But you still have a 5 minute or more delay at the source. Also, there is currently no common scheduler. Each source and target frequency is different. So end-to-end latency can be longer than either the source or target interval when they operate at different intervals.

- Costs: Hevo can be comparable to Estuary for low data volumes in the low GBs per month. But it becomes more expensive than Estuary and Airbyte as you reach 10s of GBs a month. Costs will also be much more as you lower latency because several Hevo connectors do not fully support incremental extraction. As you reduce your extract interval you capture more events multiple times, which can make costs soar.

- Reliability: CDC is batch mode only, with the minimum interval being 5 minutes. This can load the source and even cause failures. Customers have complained about Hevo bugs that make it into production and cause downtime.

- Scalability: Hevo has several limitations around scale. Some are adjustable. For example, you can get the 50MB Excel, and 5GB CSV/TSV file limits increased by contacting support.

But most limitations are not adjustable, like column limits. MongoDB can hit limits more often than others. A standalone MongoDB instance without replicas is not supported. You need 72 hours or more of oplog retention. And there is a 4090-column limit that is more easily hit with MongoDB documents.

There are ingestion limits that cause issues, like a 25 million row limit per table on initial ingestion. In addition there are scheduling limits that customers hit, like not being able to have more than 24 custom times.

For API calls, you cannot make more than 100 API calls per minute.

- DataOps: Like Airbyte, Hevo is not a great option for those trying to automate data pipelines. There is no CLI or “as code” automation support with Hevo. You can map to a destination table manually, which can help. But while there is some built-in schema evolution that happens when you turn on auto mapping, you cannot fully automate schema evolution or control the rules. There is no schema testing or evolution control. New tables can be passed through, but many column changes can lead to data not getting loaded in destinations and moved to a failed events table that must be fixed within 30 days or the data is permanently lost. Hevo used to support a concept of internal workflows, but it has been discontinued for new users. You cannot modify folder names for the same “events”.
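The latency bullet above notes that source and destination schedules run independently. The Python sketch below models why that can make end-to-end delay longer than either interval. It is a simplified model under stated assumptions, not Hevo's actual scheduler: both schedules start at minute 0, a change is picked up at the next source poll, it is loaded at the next destination run at or after that poll, and processing time is ignored.

```python
import math


def delivery_delay(change_minute: float, source_interval: int, destination_interval: int) -> float:
    """Minutes between a change happening and it being loaded at the destination."""
    # Next source poll at or after the change.
    picked_up = math.ceil(change_minute / source_interval) * source_interval
    # Next destination load at or after the poll that picked the change up.
    loaded = math.ceil(picked_up / destination_interval) * destination_interval
    return loaded - change_minute


# Hypothetical schedules: source every 10 minutes, destination every 15 minutes.
delay = delivery_delay(10.5, source_interval=10, destination_interval=15)
```

Here a change at minute 10.5 is picked up at minute 20 and loaded at minute 30, a delay of 19.5 minutes, which is longer than both the 10 minute and the 15 minute interval.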

One final note. Hevo does have a way to support reverse ETL. I did not include it here because it is a very specific use case where you write modified data back directly into the source. That is not how updates work in most cases for applications. The better answer is to have a pipeline back to the sources, which is not supported by most modern ETL/ELT vendors. It is supported by iPaaS vendors.

Meltano

Meltano was founded in 2018 as an open source project within GitLab to support their data and analytics team. It’s a Python framework built on the Singer protocol. The Singer framework was originally created by the founders of Stitch, but their contribution slowly declined following the acquisition of Stitch by Talend (which in turn was later acquired by Qlik.)

Compared to the other vendors, Meltano is focused on configuration based ELT using YAML and the CLI. Even with Estuary, which fully supports a configuration-based approach, most users prefer the no-code approach to pipeline development.

- Connectivity: Meltano and Airbyte collectively have the most connectors, which makes sense given their open source history with Singer. Meltano supports 200+ Singer connectors (taps) and has a wrapper SDK for Airbyte (350+ connectors). This is why they claim 600+ open source connectors total. But open source connectors have their limits, so it’s important to test them carefully based on your needs.

- Latency: Meltano is batch-only. It does not support streaming. While you can reduce polling intervals down to seconds, there is no staging area. The extract and load intervals need to be the same. Meltano is best suited for supporting historical analytics for this reason.

- Reliability: Some will say Meltano has fewer issues when compared to Airbyte. But it is open source. If you have issues you can only rely on the open source community for support.

- Scalability: There isn’t as much documentation to help with scaling Meltano, and it’s not generally known for scalability, especially if you need low latency. Various benchmarks show that larger batch sizes deliver much better throughput. But it’s still not the level of throughput of Estuary or Fivetran. It’s generally minutes even in batch mode for 100K rows.

- ELT only: Meltano supports open source dbt and can import existing dbt projects. Its support for dbt is considered good. It also has the ability to extract data from dbt cloud. Meltano does not support ETL.

- Deployment options: Meltano is deployed as self-hosted open source. There is no Meltano Cloud, though Arch is offering a broader service with consulting.

- DataOps: Data engineers generally automate using the CLI or the Meltano API. While it is straightforward to automate pipelines, there isn’t much support for schema evolution and automating responses to schema changes.

Overall, if you are focused on open source, Airbyte and Meltano are two good ELT options. If you prefer simplicity you might consider Airbyte. Estuary Flow is also open source, but most people choose the public cloud offering today.

Estuary

Estuary was founded in 2019. But the core technology, the Gazette open source project, has been evolving for a decade within the Ad Tech space, which is where many other real-time data technologies have started.

Estuary Flow is the only real-time and ETL data pipeline vendor in this comparison. There are some other ETL and real-time vendors in the honorable mention section, but those are not as viable a replacement for Fivetran.

While Estuary Flow is also a great option for batch sources and targets, where it really shines is any combination of change data capture (CDC), real-time and batch ETL or ELT, and loading multiple destinations with the same pipeline. Estuary Flow is currently the only vendor to offer a private cloud deployment, which is the combination of a dedicated data plane deployed in a private customer account and a shared control plane that manages it as SaaS. It combines the security and dedicated compute of on prem with the simplicity of SaaS.

CDC works by reading record changes from the write-ahead log (WAL), which records each change exactly once as part of each database transaction. It is the easiest, lowest-latency, and lowest-load way to extract all changes, including deletes, which otherwise are not captured by default from sources. Unfortunately Airbyte, Fivetran, and Hevo all rely on batch mode for CDC. This puts a load on a CDC source by requiring the write-ahead log to hold onto older data until the next batch reads it. This is not the intended use of CDC and can put a source in distress, or lead to failures.
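
As a rough illustration of why log-based capture sees deletes that periodic queries miss, here is a minimal sketch in Python. It is a toy in-memory model, not any vendor’s implementation: the `Change` record, the `lsn` field, and `apply_changes` are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    """One entry in a simulated write-ahead log (hypothetical shape)."""
    lsn: int                      # log sequence number: position in the log
    op: str                       # "insert", "update", or "delete"
    key: str
    value: Optional[dict] = None  # None for deletes


def apply_changes(replica: dict, log: list, last_lsn: int) -> int:
    """Apply every change after last_lsn to the replica.

    Returns the new checkpoint so the next call resumes where this one stopped.
    """
    for change in log:
        if change.lsn <= last_lsn:
            continue  # already applied in an earlier run
        if change.op == "delete":
            replica.pop(change.key, None)
        else:
            replica[change.key] = change.value
        last_lsn = change.lsn
    return last_lsn


# A simulated log: row "b" is inserted and later deleted.
log = [
    Change(1, "insert", "a", {"qty": 1}),
    Change(2, "insert", "b", {"qty": 5}),
    Change(3, "update", "a", {"qty": 2}),
    Change(4, "delete", "b"),
]

replica = {}
checkpoint = apply_changes(replica, log, last_lsn=0)
print(replica, checkpoint)
```

Because the delete of `b` is an entry in the log, the replica drops it. A query-based batch extract would simply stop seeing `b` and would need extra logic to detect that it was removed. Re-running `apply_changes` with the returned checkpoint applies nothing twice.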

Estuary Flow has a unique architecture where it streams and stores streaming or batch data as collections of data, which are transactionally guaranteed to deliver exactly once from each source to the target. With CDC it means any (record) change is immediately captured once for multiple targets or later use. Estuary Flow uses collections for transactional guarantees and for later backfilling, restreaming, transforms, or other compute. The result is the lowest load and latency for any source, and the ability to reuse the same data for multiple real-time or batch targets across analytics, apps, and AI, or for other workloads such as stream processing, or monitoring and alerting.
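
The capture-once, deliver-to-many idea can be sketched as follows. This is a simplified model under stated assumptions, not Estuary Flow’s actual API: `Collection`, `Target`, and `sync` are hypothetical names, and a real system would commit the offset and the delivered data together in one transaction to get exactly-once delivery.

```python
class Collection:
    """Append-only store of captured documents (hypothetical stand-in)."""

    def __init__(self):
        self._docs = []

    def append(self, doc):
        self._docs.append(doc)

    def read_from(self, offset):
        # Return everything stored at or after the given offset.
        return self._docs[offset:]


class Target:
    """A destination that tracks how far it has read the collection."""

    def __init__(self, name):
        self.name = name
        self.offset = 0
        self.rows = []

    def sync(self, collection):
        # Deliver only documents this target has not seen yet.
        new_docs = collection.read_from(self.offset)
        self.rows.extend(new_docs)
        self.offset += len(new_docs)
        return len(new_docs)


orders = Collection()
orders.append({"id": 1})
orders.append({"id": 2})

warehouse = Target("warehouse")
first = warehouse.sync(orders)     # reads both stored documents

orders.append({"id": 3})
second = warehouse.sync(orders)    # reads only the new document

# A target added later backfills from the stored collection,
# without reading the source again.
vector_db = Target("vector_db")
backfill = vector_db.sync(orders)
```

Each change is read from the source once and stored. Every target keeps its own offset, so adding a destination or replaying history puts no additional load on the source.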

Estuary Flow also supports the broadest packaged and custom connectivity. It has 150+ native connectors. While that may seem low, these are connectors built for low latency and scale. In addition, Estuary is the only vendor to support Airbyte, Meltano, and Stitch connectors as well, which easily adds 500+ more connectors. Getting official support for one of these connectors is a quick “request-and-test” with Estuary to make sure it supports the use case in production. Most of these connectors are not as scalable as Estuary-native or Fivetran connectors, so it’s important to confirm they will work for you. Its support for TypeScript and SQL also enables ETL.

Of the various ELT vendors, Estuary is the lowest total cost option. From lowest to highest, costs tend to rank as Estuary, Airbyte, Meltano, Hevo, and Fivetran, especially for higher volume use cases. Estuary is the lowest cost mostly because it only charges $0.50 per GB of data moved from each source or to each target, which is much less than the roughly $10-$50 per GB that others charge. Your biggest cost is generally your data movement.
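
To see what these per-GB rates mean at volume, here is a back-of-the-envelope sketch. The monthly volume of 1,000 GB is an assumption for illustration, and the calculation treats “GB moved” as a single billed quantity. It ignores connector fees, minimums, and discounts, so treat it as rough arithmetic rather than a quote.

```python
def monthly_cost(gb_moved: float, rate_per_gb: float) -> float:
    """Data movement cost for one month at a flat per-GB rate."""
    return gb_moved * rate_per_gb


gb_per_month = 1_000  # assumed volume, for illustration only

estuary = monthly_cost(gb_per_month, 0.50)      # $0.50 per GB
others_low = monthly_cost(gb_per_month, 10.0)   # low end of the $10-$50 range
others_high = monthly_cost(gb_per_month, 50.0)  # high end of the $10-$50 range

print(f"Estuary: ${estuary:,.0f}")
print(f"Others:  ${others_low:,.0f} to ${others_high:,.0f}")
```

At this assumed volume the gap is between $500 and $10,000 to $50,000 per month, which is why data movement tends to dominate total cost.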

While open source is free, and for many it can make sense, you need to add in the cost of support from the vendor, specialized skill sets, implementation, and maintenance to your total costs to compare. Without these skill sets, you will put your time to market and reliability at risk. Many companies will not have access to the right skill sets to make open source work for them.

How to Choose the Right ELT/ETL Solution for Your Business

For the most part, if you are interested in a cloud option, and the connectivity options exist, you may choose to evaluate Estuary.

- Lowest latency: Estuary is the only ELT/ETL vendor in this comparison with sub-second latency.

- Highest scale: It may not be obvious until you run your own benchmarks. But Estuary is the most scalable, especially with CDC. It is the only vendor capable of doing incremental snapshots and has demonstrated 5-10x the scale of Airbyte, Fivetran, and Hevo. If you expect to move a terabyte a day from a source, Estuary is the only option.

- Most efficient: Estuary alone has the fastest and most efficient CDC connectors. It is also the only vendor to enable exactly-and-only-once capture, which puts the least load on a system, especially when you’re supporting multiple destinations including a data warehouse, high performance analytics database, and AI engine or vector database.

- Private cloud: Estuary is currently the only ELT vendor to support private cloud deployments, which involves a data plane deployed in a customer private account managed by a shared SaaS control plane. It’s the privacy of on prem with the simplicity of SaaS. Your next-best option is self-hosted open source.

- Most reliable: Estuary’s exactly-once transactional delivery and durable stream storage are partly what make it the most reliable data pipeline vendor as well.

- Lowest cost: For data at any volume, Estuary is the clear low-cost winner.

- Great support: Customers consistently cite great support as one of the reasons for adopting Estuary.

Ultimately the best approach for evaluating your options is to identify your current and future needs for connectivity, key data integration features, performance, scalability, reliability, and security, and use this information to choose a good short-term and long-term solution for you.

Getting Started with Estuary

Getting started with Estuary is simple. Sign up for a free account.

Make sure you read through the documentation, especially the get started section.

I highly recommend you also join the Slack community. It’s the easiest way to get support while you’re getting started.

If you want an introduction and walk-through of Estuary you can watch the Estuary 101 Webinar.

Questions? Feel free to contact us any time!

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
