Data pipelines keep data flowing throughout businesses. They’re critical to the daily operations of companies in many industries. But how do you build a real-time data pipeline?

There’s no one-size-fits-all answer: every business is unique, as are its data needs. As a result, the way every business chooses to build data pipelines will be different.

If you’re reading this article, you’ve likely been tasked with creating a real-time data pipeline for your company. Perhaps you just joined a data or IT team.

Or perhaps you’re trying to decide whether your company should hire a data engineer (or a team of them) to complete this task, outsource it, or purchase a pre-made solution.

Whatever the case, this article is for you.

We’ll walk through the planning process you must complete before you build a real-time data pipeline — because thorough planning and adherence to best practices will make it much easier to build the exact pipeline you need.

By the end, you’ll have an idea of the work involved and the next steps you’ll need to get started.

Let’s dive in.

What Are Data Pipelines?

Before diving deep into building a data pipeline, let’s first define what it is. A data pipeline is a process involving a series of steps that moves data from a source to a destination.

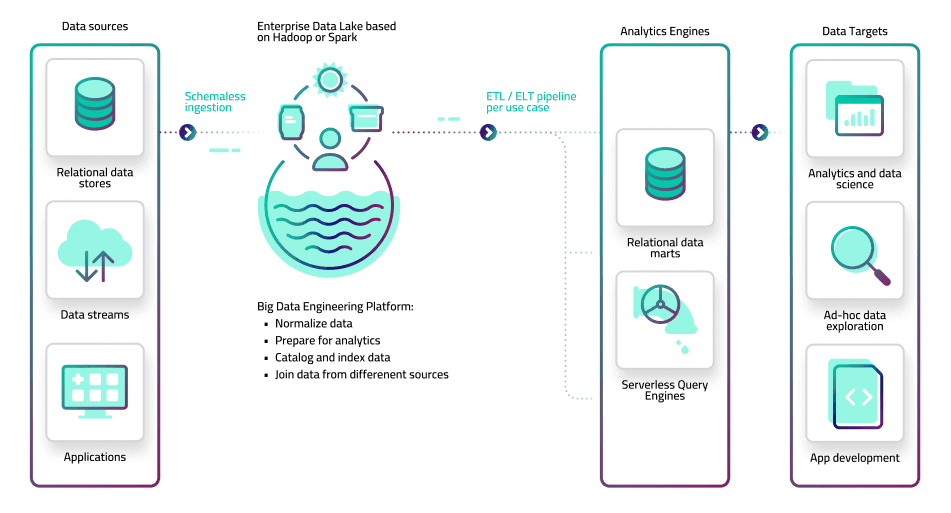

In a common use case, that destination is a data warehouse. The pipeline’s job is to collect data from a variety of sources, lightly process it to conform to a schema, and land it in the warehouse, which acts as the staging area for analysis.
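
As a rough illustration of that collect, process, and land flow, here is a minimal sketch in plain Python. The source records, the field names, and the in-memory list standing in for a warehouse are all hypothetical; a real pipeline would read from live systems and write to an actual warehouse.

```python
from typing import Iterable, Iterator

# Hypothetical records from two sources with slightly different shapes.
CRM_ROWS = [{"Email": "a@example.com", "Plan": "pro"}]
SHOP_ROWS = [{"email": "b@example.com", "plan": "free", "coupon": "X1"}]

SCHEMA_FIELDS = ("email", "plan")  # assumed target schema


def collect(*sources: Iterable[dict]) -> Iterator[dict]:
    """Collect: yield every record from every source."""
    for source in sources:
        yield from source


def conform(record: dict) -> dict:
    """Process: lowercase the keys and keep only the schema's fields."""
    lowered = {key.lower(): value for key, value in record.items()}
    return {field: lowered.get(field) for field in SCHEMA_FIELDS}


def run_pipeline(warehouse: list, *sources: Iterable[dict]) -> None:
    """Land: append each conformed record to the warehouse stand-in."""
    for record in collect(*sources):
        warehouse.append(conform(record))


warehouse: list = []
run_pipeline(warehouse, CRM_ROWS, SHOP_ROWS)
```

After the run, both records share the same two fields, and the extra `coupon` field has been dropped because it is not part of the assumed schema.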

Analytical tools connected to the data warehouse then allow team members to perform analytical data transformation, machine learning, data science workflows, and more.

But data pipelines can also be operational in nature. This means they extract data from the source, skip the analysis step, re-shape the data, and move it directly to business-critical apps, like business intelligence systems, CRMs, and others.

Most businesses will have a complex, interconnected network of data pipelines. Building pipelines is a vital step in the automation of enterprise-wide dataflow — especially as big data becomes the norm in just about every industry.

To be successful, a company’s data pipelines must be scalable, organized, usable by the right stakeholders, and above all, crafted to align with business goals.

A Comprehensive Guide To Building Real-Time Data Pipelines

Data pipelines must be engineered. Doing so requires a highly technical skill set. Your organization can accomplish this in three main ways:

- You hire an outside consultancy to build the pipeline.

- An in-house data engineer or data engineering team builds the pipeline.

- You purchase a tool or platform to minimize the engineering work of building the pipeline.

We’ll cover these options in the final section of this article. But first, we’ll discuss the important planning steps and principles that are often overlooked.

It’s easy to get lost in the engineering problem and forget important aspects of why you’re building a data pipeline in the first place. (The answer is always more complicated than “We want to get data from point A to point B.”)

The points below will help you set that important groundwork.

1. What’s Your Goal In Building A Real-Time Data Pipeline?

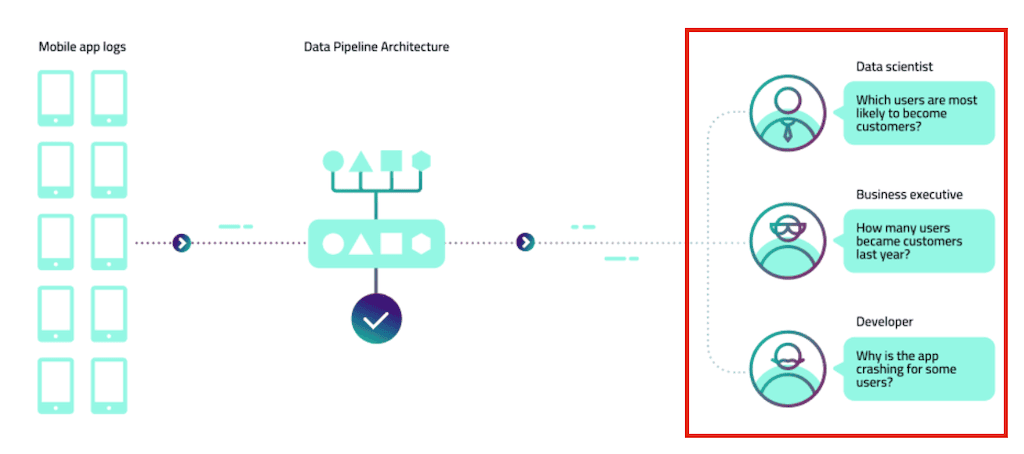

Right off the bat, it is important to know what your goal is. Ask yourself: Why are you trying to create and implement a data pipeline for your operations? Try to identify the benefits it will bring to your business.

Start with a few questions from the business perspective:

- What business outcome will be met by this data pipeline?

- Who are the pipeline stakeholders, and how much control do they need to have?

- Once the pipeline is deployed, how do we measure its success in business terms?

Keep these answers in mind to guide the entire process from here on out.

Next, ask questions that will determine the course of the project in practical terms:

- Are there time and budget constraints? If so, what are they?

- Do we require real-time data (aka streaming data) or will batch processing suffice?

2. Determine Your Data Sources

Data sources are the data’s point of origin. Often, a pipeline will have several, each of which contains different types of data. These could be relational databases, APIs, SaaS apps, or cloud storage repositories.

The data sources dictate what pipeline architectures and methods are possible, so you need a comprehensive list from the outset.

Start by clarifying what question the pipeline will answer, and what datasets could be helpful. You may be overlooking one or more potential sources, and you don’t want to have to add them retroactively.
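
One lightweight way to keep that list is as structured data rather than a loose document. The sketch below is only an illustration: the source names, owners, and the `emits_changes` flag are hypothetical, and you would record whatever attributes matter for your own architecture decisions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str            # hypothetical label for the source
    kind: str            # "relational_db", "api", "saas_app", or "cloud_storage"
    owner: str           # team to contact when the source changes
    emits_changes: bool  # True if the source can push or stream changes


# Hypothetical inventory for illustration only.
SOURCES = [
    DataSource("orders_db", "relational_db", "platform", emits_changes=True),
    DataSource("billing_api", "api", "finance", emits_changes=False),
    DataSource("support_exports", "cloud_storage", "support", emits_changes=False),
]


def polling_only(sources: list[DataSource]) -> list[str]:
    """Names of sources that can only be read by polling on a schedule."""
    return [s.name for s in sources if not s.emits_changes]
```

A helper like `polling_only(SOURCES)` makes it easy to see early which sources might limit your options later on.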

3. Identify Your Ingestion Strategy

Now that you’ve determined the objective of your data pipeline and what data sources you are collecting data from, it’s time to determine how you will integrate the pipeline with the source system.

Earlier, you spent some time thinking about whether you need real-time data, or if batch processing is sufficient. This depends on the latency that’s acceptable for your data pipeline outcome.

For example, some delay might be acceptable for a long-term data science project. But if you need customer behavior data to inform a chatbot on your website, real-time data is a necessity.
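
To turn "acceptable latency" into something you can check, it can help to estimate how stale data gets under a batch schedule. The sketch below assumes a simple model in which each batch run picks up everything that arrived before it started; the function names and the example numbers are hypothetical.

```python
def worst_case_staleness(interval_s: float, run_duration_s: float) -> float:
    """Oldest a record can be when it lands, for a batch job on a fixed interval.

    A record that arrives just after one run starts waits a full interval for
    the next run, then waits for that run to finish.
    """
    return interval_s + run_duration_s


def batch_is_sufficient(
    acceptable_latency_s: float, interval_s: float, run_duration_s: float
) -> bool:
    """True if the worst-case staleness fits within the acceptable latency."""
    return worst_case_staleness(interval_s, run_duration_s) <= acceptable_latency_s


# Hypothetical hourly batch whose runs take five minutes.
HOURLY = {"interval_s": 3600, "run_duration_s": 300}

ok_for_daily_report = batch_is_sufficient(24 * 3600, **HOURLY)
ok_for_chatbot = batch_is_sufficient(2, **HOURLY)
```

Under these assumed numbers, the hourly batch fits a use case that tolerates a day of delay but not one that needs data within a couple of seconds.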

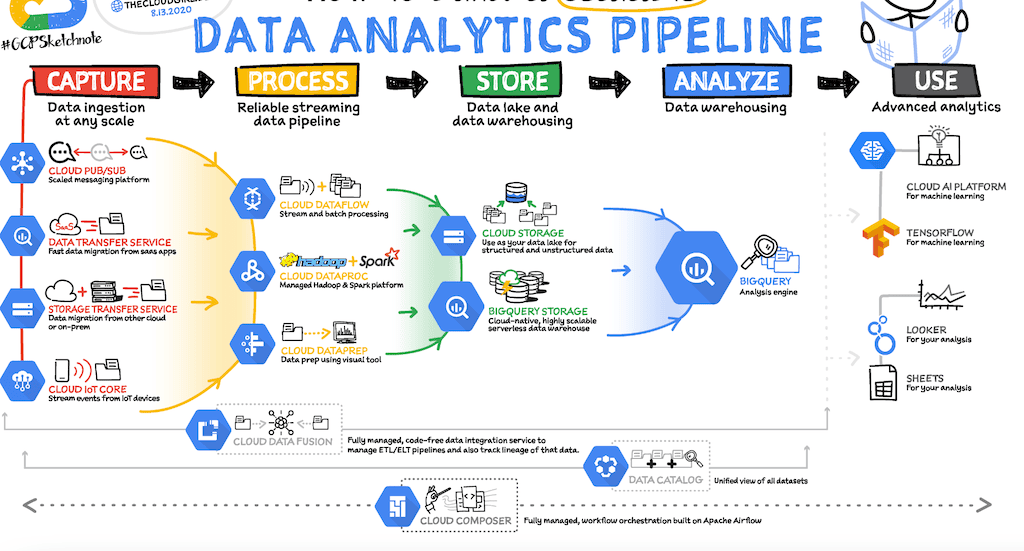

The batch vs real-time distinction is important because the two have fundamentally different architectures.

This architecture must be implemented starting at the ingestion step, and can then be carried through the rest of the pipeline.

And the relative difficulty of the two will likely determine your engineering strategy.

3.1 Batch data ingestion

Batch data pipelines usually follow a classic extract, transform, load (ETL) framework. The pipeline scans the data source at a preset interval and collects all the new data that has appeared during that time.

ETL can be relatively easy to hand-code and has been popular since the early days of small-scale on-premises infrastructure. Many batch ETL tools are available for purchase today.
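
A minimal sketch of that interval-based scan is shown below. It uses an in-memory SQLite database as a stand-in for the source system and tracks a "watermark" (the highest ID already collected) so each run picks up only new rows. The `events` table and its columns are hypothetical, and a scheduler would normally trigger each run.

```python
import sqlite3


def extract_new_rows(conn: sqlite3.Connection, last_seen_id: int):
    """Return rows added since the previous run, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, payload FROM events WHERE id > ? ORDER BY id",
        (last_seen_id,),
    ).fetchall()
    new_watermark = rows[-1][0] if rows else last_seen_id
    return rows, new_watermark


# In-memory database standing in for the source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany("INSERT INTO events (payload) VALUES (?)", [("a",), ("b",)])

first_batch, watermark = extract_new_rows(conn, last_seen_id=0)

# New data appears between runs; the next run picks up only that row.
conn.execute("INSERT INTO events (payload) VALUES (?)", ("c",))
second_batch, watermark = extract_new_rows(conn, watermark)
```

Note that a watermark on an increasing ID only catches inserted rows. Updates and deletes need a different approach, such as a last-modified timestamp.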

3.2 Real-time data ingestion

Real-time pipelines detect new data as soon as it appears in a source. The change is encoded as a message and sent immediately to the destination. Ideally, these pipelines also transform data and perform validation in real time.

Real-time pipelines are considered challenging to engineer. They are typically built on top of a message bus, which can be difficult to work with (Apache Kafka is a popular example).

Fortunately, in recent years, managed solutions for real-time pipelines have become available.
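
To make the message-based pattern concrete, here is a toy sketch that uses Python’s standard-library `queue.Queue` as a stand-in for a message bus. It is not how Kafka or any managed service works internally; the message shape (`op`, `key`, `value`) and the `STOP` sentinel are assumptions made for the example.

```python
import json
import queue
import threading

bus = queue.Queue()            # stand-in for a message bus topic
destination: list[dict] = []   # stand-in for the destination system
STOP = "__stop__"              # hypothetical sentinel used to end the demo


def publish_change(op: str, key: str, value: dict) -> None:
    """Encode a change as a JSON message and send it immediately."""
    bus.put(json.dumps({"op": op, "key": key, "value": value}))


def consume() -> None:
    """Apply each message to the destination as soon as it arrives."""
    while True:
        message = bus.get()  # blocks until a message is available
        if message == STOP:
            break
        destination.append(json.loads(message))


consumer = threading.Thread(target=consume)
consumer.start()
publish_change("insert", "user:1", {"plan": "free"})
publish_change("update", "user:1", {"plan": "pro"})
bus.put(STOP)
consumer.join()
```

The consumer never polls on a schedule. It simply waits for the next message, which is the key difference from the batch approach.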

4. Design The Data Processing Phase

Before data is used in the destination system, it should be validated or transformed into data that’s compatible with that system. Each dataset should conform to a schema so that it is easy to understand, query, and work with.

Operational systems, like business intelligence apps, typically have a rigid format of what they can accept.

Data warehouses and data lakes are more flexible, so it may be technically possible to load raw data to these systems. But it’s rarely worthwhile. Doing so shifts the burden onto data scientists and engineers, who can spend weeks reshaping the data into something the business can use, assuming they can locate the data they need in the first place.

Without a data processing phase, data analysis would be either extremely difficult and time-consuming or impossible. The benefit of the data pipeline would be completely lost.
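
The validation part of this phase can be sketched in a few lines. The example below checks each record against a small, assumed schema and separates records that conform from those that do not. The field names and types are hypothetical, and production pipelines often rely on a dedicated schema library instead.

```python
SCHEMA = {"user_id": int, "email": str, "amount": float}  # assumed schema


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for field, expected_type in SCHEMA.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


def process(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into those that conform and those that are rejected."""
    valid, rejected = [], []
    for record in records:
        (rejected if validate(record) else valid).append(record)
    return valid, rejected
```

Keeping rejected records, rather than silently dropping them, makes it easier to diagnose problems in the source later.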

You may have heard of ETL and ELT frameworks for data pipelines. The “T” in the acronym refers to the crucial transformation step. (“E” is for extraction, otherwise known as ingestion. “L” is for loading the data to its destination.)

As these frameworks suggest, the transformation can occur at different points in the pipeline. In fact, most pipelines will have multiple transformation stages. Regardless, the transformation must occur before the data is analyzed.

In general, ETL is a safer framework than ELT because it keeps your warehouse free of raw data altogether.
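
To make the difference in ordering concrete, the sketch below runs the same small cleanup both ways, using in-memory SQLite as a stand-in for a warehouse. The `RAW` rows and table names are hypothetical. In the ETL version the cleanup happens in Python before loading; in the ELT version the raw rows are loaded first and cleaned with SQL inside the "warehouse".

```python
import sqlite3

RAW = [("  Alice ", "10"), ("BOB", "5")]  # hypothetical raw (name, amount) rows


def etl(conn: sqlite3.Connection) -> None:
    """Transform in the pipeline, then load only the clean rows."""
    clean = [(name.strip().lower(), int(amount)) for name, amount in RAW]
    conn.execute("CREATE TABLE sales (name TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", clean)


def elt(conn: sqlite3.Connection) -> None:
    """Load the raw rows first, then transform inside the warehouse with SQL."""
    conn.execute("CREATE TABLE raw_sales (name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", RAW)
    conn.execute(
        "CREATE TABLE sales AS "
        "SELECT lower(trim(name)) AS name, CAST(amount AS INTEGER) AS amount "
        "FROM raw_sales"
    )


def sales(conn: sqlite3.Connection) -> list[tuple]:
    """Read the final table so the two approaches can be compared."""
    return conn.execute("SELECT name, amount FROM sales ORDER BY name").fetchall()
```

Both functions produce the same `sales` table. The difference is that the ELT version also leaves a `raw_sales` table behind in the warehouse.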

5. Where To Store Data

Now that you’ve extracted data and processed it, it’s time to load it to the destination. Usually, this is fairly obvious, because the destination is the system where you’ll achieve your data goal — whether that’s analysis, real-time insights, or something else.

Still, it’s important to spend time thinking about the requirements here. They will inform the transformation phase, which we discussed previously. And like the data source system, the destination might influence the engineering challenge of your pipeline.

Ask questions like:

- How does the destination influence the transformation strategy?

- If the destination is an operational system like an app, do we want to back up the data elsewhere?

- How might our needs from the data change in the future? How can we optimize the destination now so we can pivot more easily?

6. Monitoring Framework

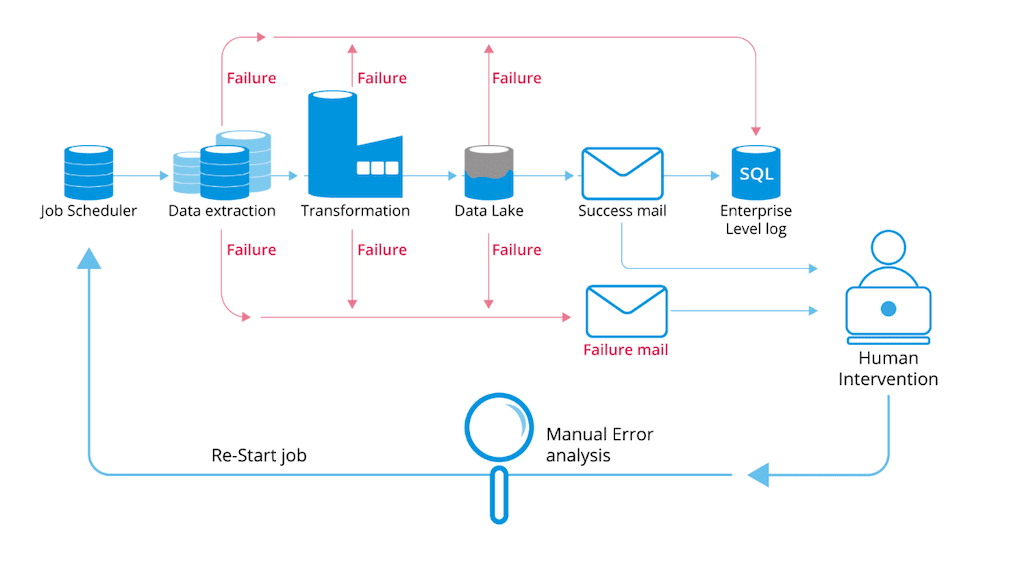

The final step is to implement a monitoring framework for the pipeline as a whole. Data pipelines in action involve a lot of components and dependencies. Errors are likely, so you’ll want to catch them early.

If you purchase a pipeline solution, it should include some sort of monitoring capability. Otherwise, you’ll need to create your own. Either way, your monitoring framework should keep you informed about system health and help protect data quality.

The following assets should be worked into your monitoring tools in some way:

6.1 Metrics

Metrics are quantifiable measurements of your pipeline systems. They indicate the overall system health.

These could include:

- Latency: How long things take

- Traffic: How much demand is being placed on the system

- Error frequency and type: What is failing, when, and why

- Saturation: How much of the available compute or storage is being used
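
If you end up building your own monitoring, a small collector like the sketch below shows one way these four metrics could be gathered for a single pipeline stage. The class name, the `capacity` parameter, and the idea of measuring saturation as buffer usage are assumptions for illustration; dedicated monitoring tools offer far more.

```python
import time
from collections import Counter


class PipelineMetrics:
    """Collects the four kinds of metrics listed above for one pipeline stage."""

    def __init__(self, capacity: int):
        self.capacity = capacity   # assumed max records the stage can buffer
        self.latencies_s = []      # latency: how long each record took
        self.records_seen = 0      # traffic: how many records arrived
        self.errors = Counter()    # errors: count per exception type

    def observe(self, handler, record):
        """Run handler on record, recording latency, traffic, and errors."""
        self.records_seen += 1
        start = time.perf_counter()
        try:
            return handler(record)
        except Exception as exc:
            self.errors[type(exc).__name__] += 1
            return None
        finally:
            self.latencies_s.append(time.perf_counter() - start)

    def saturation(self, buffered_records: int) -> float:
        """Saturation: fraction of the buffer capacity currently in use."""
        return buffered_records / self.capacity
```

For example, wrapping a parsing step as `metrics.observe(int, "oops")` would count one record, one `ValueError`, and one latency sample without stopping the pipeline.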

6.2 Logs

Just about every type of technology system outputs logs for normal activity and errors alike. They are a lower-level informational asset with much more technical detail. These are especially useful for diagnosing and debugging performance issues and errors.
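
As a small illustration, the sketch below uses Python’s standard `logging` module to record both normal activity and errors in a hypothetical load step. The logger name, record fields, and message wording are made up for the example.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline.load")  # hypothetical logger name


def load_record(record: dict, destination: list) -> bool:
    """Load one record, logging normal activity and errors alike."""
    try:
        destination.append({"id": record["id"], "total": float(record["total"])})
    except (KeyError, ValueError) as exc:
        # logger.exception logs at ERROR level and includes the traceback.
        logger.exception("failed to load record %r: %s", record, exc)
        return False
    logger.info("loaded record id=%s", record["id"])
    return True
```

The traceback attached to the error entry is the kind of technical detail that makes logs useful for debugging.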

6.3 Traces

Tracing is an application of logging that gets even more detailed. It captures all the events that happen in the system in real time, often without lots of context.

In the case of a pipeline, you may be “tracing” a single dataset’s journey from start to finish, but that’s not necessarily the case.
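
Here is a minimal sketch of the "single journey" style of tracing. Each record gets a unique trace ID, every stage emits an event tagged with that ID, and the events can later be stitched back together. The in-memory `TRACE_EVENTS` list stands in for a tracing backend, and all names are hypothetical.

```python
import time
import uuid

TRACE_EVENTS: list[dict] = []  # stand-in for a tracing backend


def new_trace(record: dict) -> dict:
    """Attach a unique trace ID so later events can be tied to this record."""
    return {**record, "trace_id": uuid.uuid4().hex}


def record_event(record: dict, stage: str) -> None:
    """Emit one trace event for a record passing through a stage."""
    TRACE_EVENTS.append(
        {"trace_id": record["trace_id"], "stage": stage, "at": time.time()}
    )


def journey(trace_id: str) -> list[str]:
    """Reconstruct the ordered list of stages a single record passed through."""
    return [e["stage"] for e in TRACE_EVENTS if e["trace_id"] == trace_id]


order = new_trace({"order_id": 42})
for stage in ("ingest", "transform", "load"):
    record_event(order, stage)
```

Calling `journey(order["trace_id"])` then returns the stages in the order they were recorded, even if events from many other records are interleaved in the backend.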

Taken together, metrics, logs, and traces provide different levels of granularity, so you can keep an eye on your data pipeline, detect issues quickly, and debug them.

3 Best Practices When Building And Implementing A Data Pipeline

I. Save And Store The Raw Data In Your Pipeline

Don’t delete the raw data you’ve gotten from a data source. You may use it for another purpose or need it to recover from failure elsewhere in the system. Backing up your raw data will save you time when you need to create a new pipeline or fix your existing one.

Cloud data storage is an affordable and practical way to back up the data in your pipeline. For example, Estuary Flow stores pipeline data as JSON in a cloud storage bucket.
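
The general idea can be sketched as follows: write the untouched source records to storage before any transformation, so they can be replayed later. This is not Estuary Flow’s implementation. A local directory stands in for a cloud bucket, and the function names and JSON Lines layout are assumptions for the example.

```python
import json
import tempfile
from pathlib import Path


def archive_raw(records: list[dict], bucket: Path, batch_name: str) -> Path:
    """Write untouched source records as JSON Lines before any transformation."""
    bucket.mkdir(parents=True, exist_ok=True)
    path = bucket / f"{batch_name}.jsonl"
    with path.open("w", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")
    return path


def replay(path: Path) -> list[dict]:
    """Read an archived batch back, for example to rebuild after a failure."""
    with path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle]
```

If a transformation bug is discovered later, `replay` gives you the original records to reprocess instead of having to re-extract them from the source.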

II. Monitor And Observe

It’s easy to get a bit overconfident in your automated data pipeline and think it can take care of itself. This isn’t the case. Data pipelines are more complex than ever, meaning there are even more places where they can break.

In addition to monitoring, you’ll want to make sure you test and validate your data regularly. Even in the most well-architected pipeline, errors can be introduced from changes in the source data format, updates to the component libraries in the pipeline, configuration changes in the source and destination, and system outages.

Monitoring and observation also allow you to identify where your pipeline can be further improved. You can optimize the cost, latency, and usability of your pipeline over time.
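
One of the failure modes mentioned above, a change in the source data format, is fairly easy to test for. The sketch below compares the fields seen in a batch against an expected set. The `EXPECTED_FIELDS` contract is hypothetical, and a real check would likely cover types and value ranges too.

```python
EXPECTED_FIELDS = {"id", "email", "plan"}  # assumed contract with the source


def detect_drift(batch: list[dict]) -> dict:
    """Compare the fields seen in a batch against the expected set."""
    seen = set()
    for record in batch:
        seen.update(record)  # adds the record's keys
    return {
        "missing": sorted(EXPECTED_FIELDS - seen),
        "unexpected": sorted(seen - EXPECTED_FIELDS),
    }
```

Running a check like this on every batch, and alerting when either list is non-empty, can surface a renamed or dropped field before it causes problems downstream.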

III. Complexity Doesn’t Result In Efficiency

A complex data pipeline with a lot of components isn’t necessarily the best. When you run through every stage and component in your planned pipeline, take note of places where it could be tightened up, or where redundant components could be removed.

You may find that a simpler data pipeline could produce the same results as your current complex system. If this is the case, then look for ways to reduce the complexity of the process, and thus increase efficiency.

The Engineering Work of Data Pipelines (and Alternatives)

By now, you know where the data is coming from, where it’s going, and what transformations are required. You understand the business questions and outcomes your pipeline must address, and know its required budget, timeline, and output.

You have best practices in mind and a general plan. Your next challenge is to actually build the pipeline. Use these questions to guide you.

A. Does our company have the engineering staff to build this from scratch?

Depending on whether you need a simple ETL pipeline or a full streaming infrastructure, your answer may vary. Consult with the data engineers at your organization, and see if they have the time and skills required for this particular task.

Regardless of whether you said yes or no, go on to the next question.

B. Are there tools available that can make this easier?

There are a huge number of data pipeline tools to consider. These range from open-source frameworks that engineers can build on, all the way to no-code services that put pipeline building within reach even for people with no engineering experience.

As you evaluate your choices:

- Make sure the platform supports the source and destination systems your pipeline needs.

- Make sure critical features (for example, real-time data streaming and built-in testing) are supported.

- Evaluate whether the tool will be usable by the team you have today and whether it will make their jobs easier.

- Weigh the pricing of these tools against the potential time saved from building the pipeline from scratch yourself.

Today, most organizations take a hybrid approach: purchasing some sort of pipeline tool, while customizing it to meet their particular needs.

C. Would it be better to hire someone from outside?

If your data infrastructure is a mess, you have no data engineers on staff, and you simply have no idea where to start, hiring a consultant may be for you. This is often the most costly way to build a data pipeline, but you might find it worthwhile if it helps you establish a data-savvy culture at your organization going forward.

Conclusion

Building data pipelines is not an easy task, but it’s critical. Data pipelines form your data integration backbone — they hold your data infrastructure together. They empower you to answer business questions and implement intelligent operation practices in all domains of your company.

By now, you’re clear on how to think about and plan optimal data pipelines, and you’ve considered the options for engineering implementation.

Estuary Flow is a platform designed to empower smaller teams of engineers and non-engineers alike to accomplish more with their data pipeline. Flow is designed to make real-time pipelines easy, and includes integrations with popular databases, warehouses, SaaS, and cloud storage.
