Sales conversations are weird. Each individual conversation is specific to its participants and is filled with all sorts of personal, human nuance. On the other hand, we’re expected to look at these conversations in aggregate and answer the all-important question:

“Will these conversations lead to new customers?”

This is a very valuable but surprisingly difficult metric to calculate accurately. Even ignoring incentives to self-report positively and inherent human bias, just think about Goodhart’s Law: when a measure becomes a target, it ceases to be a good measure.

Lucky for us, this sort of grey area is exactly where ChatGPT shines. So let’s get that work off our shoulders…and finally find some answers!

We’re going to need 3 things:

- A feed of conversation data (We’ll use Hubspot today, but this works with other CRMs)

- A means to transform that data into measurable information (ChatGPT, with help from Estuary Flow)

- Some place to put that measurable information (We’ll use a Google Sheet)

At the end, I’ll leave you with a GitHub repo, so you can recreate this pipeline for your team.

But first, a bit about what this demo is (and what it isn’t).

How we use ChatGPT for sales

These days, there’s no shortage of information on how to use ChatGPT for sales, or how to generate leads with ChatGPT. It’s probably fair to say you’re drowning in articles like these.

Most headlines you click will nudge you toward a plug-and-play chatbot (especially if you’re trying to use ChatGPT for Hubspot data). In essence, you’re encouraged to replace your human sales reps… with a bot that might not actually be designed to evolve your sales strategy long-term.

This is not one of those articles.

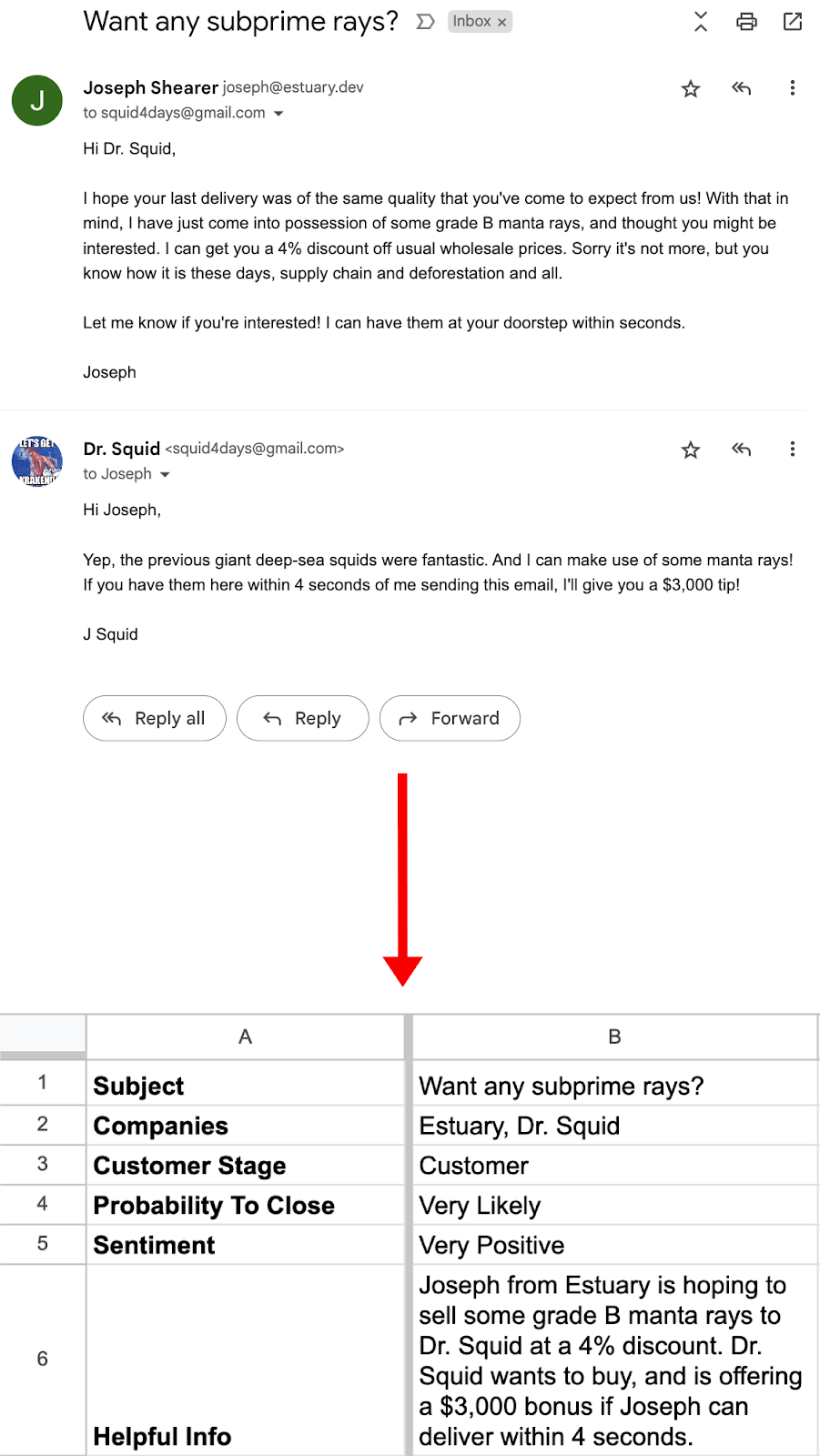

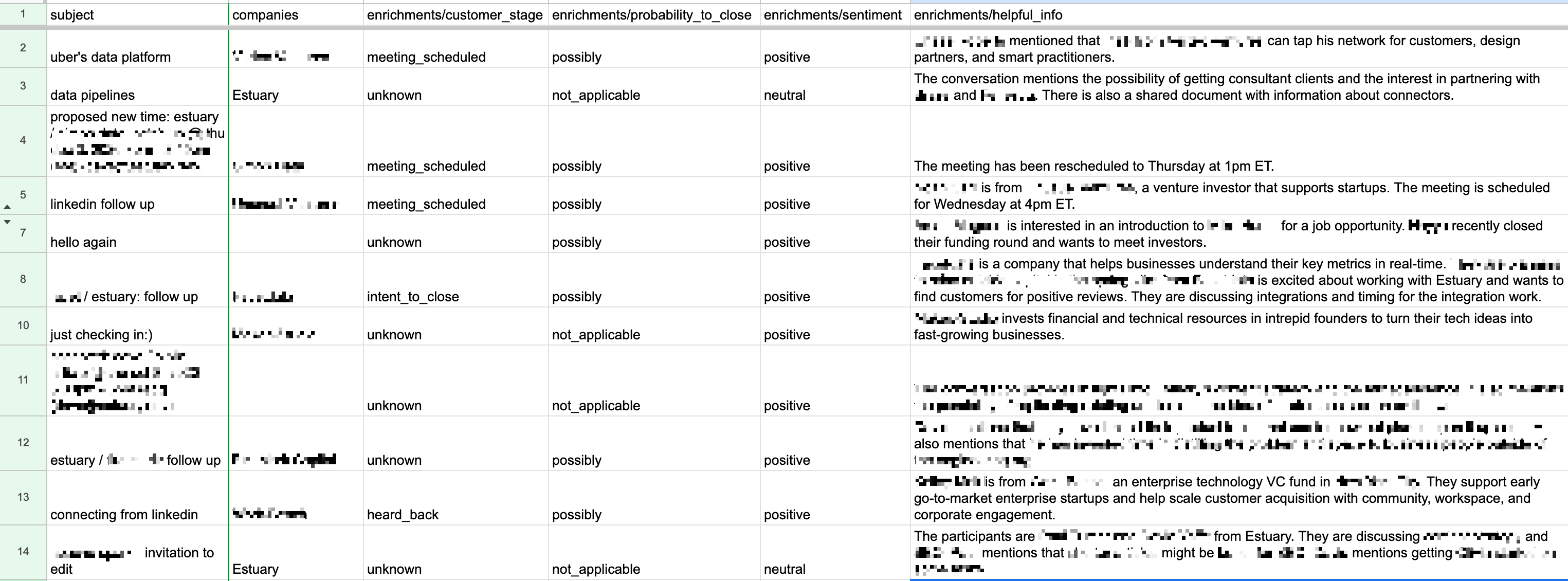

Here, we’ll set up ChatGPT to monitor your active Hubspot sales conversations. We’ll let it draw conclusions — sentiment, stage, and the likelihood of closing a sale — and display it in a compact dashboard in Google Sheets.

So if you’re interested in harnessing the power of ChatGPT to help manage all your sales conversations (and maybe your whole sales team), but you don’t want to replace your real, human sales pros with bots, you’re in the right place.

It’s a great way to start using ChatGPT for sales without reinventing the wheel.

It’s also a super-doable DIY process… so if you’re not the most tech-savvy member of your team, consider forwarding this article to them! We’re about to get into the weeds.

Connecting Hubspot and ChatGPT with Estuary Flow

We know our data source and dashboard destination — Hubspot and Google Sheets, respectively. And of course, ChatGPT does the heavy lifting of analyzing the sales conversations.

But we’re missing the connective tissue — we need to integrate all these components. Also, we need to roll up each conversation in Hubspot by subject and sender. In other words, we need a stateful transformation of an ever-evolving dataset.

To get all this done, we’ll use Estuary Flow. If you’re not familiar with Flow, here’s a quick rundown:

- Flow is a UI-forward tool that lets you build and manage real-time ETL pipelines.

- With Flow, you can create on-the-fly stateful transformations with SQL or TypeScript. They’re called derivations.

- It’s free to start using Flow (if you want to try this demo out, say).

Side note: Flow supports a wide range of data sources that contain conversation data. We went with Hubspot because it’s a super-popular CRM and we happen to already have a lot of sales conversations there. But you can just as easily set up a similar pipeline from a different source.

Capturing sales conversation data from a source like Hubspot is pretty straightforward. Check out the Flow docs for help.

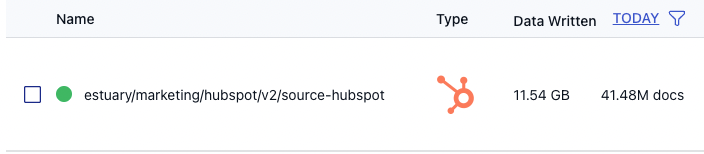

Once we’ve captured data from Hubspot, we end up with a row in our Flow dashboard that looks something like this:

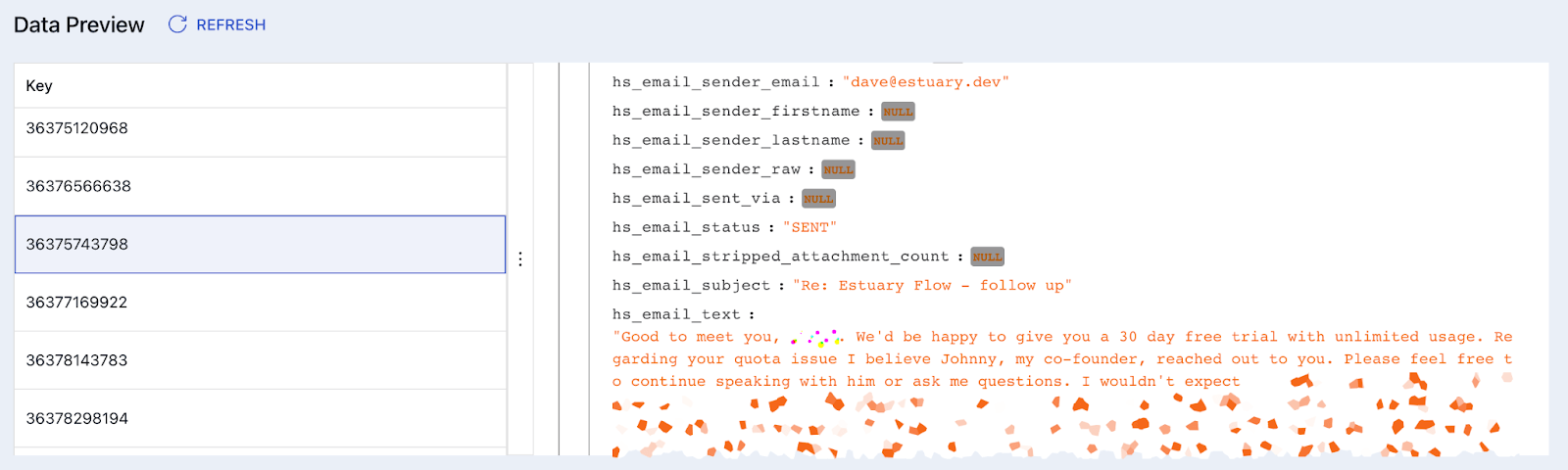

Flow captures data as collections of JSON documents. These are schematized representations of data objects in Hubspot — we’ll use the current data structure as a starting point for our transformation.

So let’s look at a sample of the data to see what we’re working with. We can take a look at a preview in the Flow dashboard:

Now that we have some data and we know what it looks like, we can move on to step two.

Transforming Hubspot data for use with ChatGPT

We’re going to use OpenAI’s ChatGPT to turn these human conversations into data points, but we have two problems.

First, while ChatGPT may appear to learn from the conversation you have with it, all that’s really happening is that the full conversation history is being sent in the completion request every time you type a message. This means that we’ll need to group together all of the relevant information for a conversation and send it over in one go (making sure to keep it under the token limit of 4k or 16k, depending on how much money you want to spend).
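To make that concrete, here’s a minimal TypeScript sketch of what “sending everything in one go” implies for a token budget. The `ChatMessage` shape, the `fitToBudget` helper, and the four-characters-per-token estimate are all assumptions for illustration; a real tokenizer would give exact counts.

```typescript
// Hypothetical message shape: a role plus text, as chat-style APIs expect.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Rough heuristic (an assumption): about 4 characters per token of English text.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Nothing is remembered between requests, so each request carries the system
// prompt plus as much of the most recent history as fits under maxTokens.
function fitToBudget(
  system: ChatMessage,
  history: ChatMessage[],
  maxTokens: number
): ChatMessage[] {
  let used = estimateTokens(system.content);
  const kept: ChatMessage[] = [];
  // Walk from newest to oldest and stop at the first message that would overflow.
  for (const message of history.slice().reverse()) {
    const cost = estimateTokens(message.content);
    if (used + cost > maxTokens) break;
    kept.unshift(message);
    used += cost;
  }
  return [system, ...kept];
}
```

Dropping the oldest messages first is only one possible policy. For sales threads, you might prefer to summarize older messages instead of discarding them.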

Second, Hubspot’s API provides us with a stream of individual emails, so we have some work to do before ChatGPT can meaningfully step in:

- Grouping emails into threads

- Joining together other relevant information such as company name or message sender name.

To do this, we’re going to take advantage of two features of Flow: stateful and stateless derivations (in-flight data transformations).

Roll up those threads

In order to group a stream of emails together into threads, we first need to keep track of all the emails we’ve seen, and index them by subject and sender. We’ll use a stateful derivation for this:
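As a rough illustration of the indexing idea (not the actual Flow derivation, which lives in the repo linked at the end), here’s a plain TypeScript sketch. The `Email` shape, the `threadKey` and `indexEmail` helpers, and the rule for stripping “Re:” and “Fwd:” prefixes are assumptions made for this example.

```typescript
// Hypothetical, simplified email shape; real Hubspot documents carry more fields.
interface Email {
  id: string;
  subject: string;
  sender: string;
  sentAt: string; // ISO 8601 timestamp
  body: string;
}

// Strip reply/forward prefixes so "Re: Hello" and "hello" share a thread.
function normalizeSubject(subject: string): string {
  return subject.replace(/^\s*((re|fwd?)\s*:\s*)+/i, "").trim().toLowerCase();
}

// Index key: normalized subject plus sender, as described above.
function threadKey(email: Email): string {
  return normalizeSubject(email.subject) + "|" + email.sender.trim().toLowerCase();
}

// The state a stateful transformation would persist:
// every email seen so far, grouped by thread key.
type ThreadIndex = Record<string, Email[]>;

// Record one email and return everything currently known about its thread.
function indexEmail(index: ThreadIndex, email: Email): Email[] {
  const key = threadKey(email);
  const seen = index[key] || (index[key] = []);
  // Ignore redeliveries of an email that is already stored.
  if (!seen.some((e) => e.id === email.id)) seen.push(email);
  return seen;
}
```

In a real pipeline, this state would live in the derivation’s persistent store rather than in an in-memory object.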

After that, every time we get a new email we then need to find all of the other emails in that thread, and emit a derived document containing all of the messages in that thread rolled up:
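The roll-up step might look something like the sketch below. Again, this is an illustrative stand-in written in plain TypeScript, not the derivation from the repo: the `ThreadDoc` shape, the `rollUp` function, and the idea of joining company names by email domain are assumptions.

```typescript
// Hypothetical, simplified shapes; real Hubspot documents carry more fields.
interface Email {
  id: string;
  subject: string;
  sender: string;
  sentAt: string; // ISO 8601 timestamp in UTC
  body: string;
}

interface ThreadDoc {
  subject: string;
  companies: string[];
  messages: { sender: string; sentAt: string; body: string }[];
}

// Look up a company name by the sender's email domain (an assumed join key).
function companyFor(sender: string, companiesByDomain: Record<string, string>): string | null {
  const domain = sender.slice(sender.indexOf("@") + 1).toLowerCase();
  return Object.prototype.hasOwnProperty.call(companiesByDomain, domain)
    ? companiesByDomain[domain]
    : null;
}

// Roll every email in one thread up into a single derived document.
function rollUp(emails: Email[], companiesByDomain: Record<string, string>): ThreadDoc | null {
  if (emails.length === 0) return null;
  // ISO 8601 UTC timestamps in one format sort correctly as plain strings.
  const ordered = emails
    .slice()
    .sort((a, b) => (a.sentAt < b.sentAt ? -1 : a.sentAt > b.sentAt ? 1 : 0));
  const companies: string[] = [];
  for (const email of ordered) {
    const company = companyFor(email.sender, companiesByDomain);
    if (company !== null && companies.indexOf(company) === -1) companies.push(company);
  }
  return {
    subject: ordered[0].subject,
    companies,
    messages: ordered.map((e) => ({ sender: e.sender, sentAt: e.sentAt, body: e.body })),
  };
}
```

Because the whole thread is re-emitted each time a new email arrives, downstream steps always see the latest complete version of the conversation.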

We also joined other useful metadata that Hubspot provides, such as participant company information. This would be useful if you wanted to, for example, query ChatGPT for the market segment of the companies involved in the conversation.

Make the magic happen

Now that we have a stream of documents representing whole email threads, we need to:

- Transform it into a form that we can provide to OpenAI’s Completions API

- Call that API

- Derive a second collection with the responses.

Since we’ve already done all of the aggregation, we are free to use a stateless derivation for this.

OpenAI recently announced the ability for ChatGPT to return its responses as valid JSON documents matching a specific schema. This makes connecting ChatGPT and Flow easy — we don’t have to figure out how to reliably parse text coming from the LLM.
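Here’s a hedged sketch of what such a request could look like. It assumes the `response_format` / `json_schema` shape from OpenAI’s Structured Outputs feature for chat completions; the pipeline in the repo may use a different mechanism. The model name, the prompt wording, and the `buildRequest` and `parseEnrichment` helpers are assumptions, and the sentiment values other than `positive` are hypothetical.

```typescript
interface Enrichment {
  customer_stage: string;
  helpful_info: string;
  is_relevant: boolean;
  overview: string;
  probability_to_churn: string;
  probability_to_close: string;
  sentiment: string;
}

const REQUIRED_FIELDS = [
  "customer_stage", "helpful_info", "is_relevant", "overview",
  "probability_to_churn", "probability_to_close", "sentiment",
];

// JSON Schema for the response. Strict mode expects every property to be
// listed in "required" and additionalProperties to be false.
const enrichmentSchema = {
  type: "object",
  additionalProperties: false,
  required: REQUIRED_FIELDS,
  properties: {
    customer_stage: { type: "string" },
    helpful_info: { type: "string" },
    is_relevant: { type: "boolean" },
    overview: { type: "string" },
    probability_to_churn: { type: "string" },
    probability_to_close: { type: "string" },
    // "neutral" and "negative" are hypothetical values for illustration.
    sentiment: { type: "string", enum: ["positive", "neutral", "negative"] },
  },
};

// Build the JSON body for a chat completion request (no network call here).
function buildRequest(threadText: string) {
  return {
    model: "gpt-4o-mini", // assumption: any model that supports structured outputs
    messages: [
      { role: "system", content: "You analyze sales email threads and fill in the requested fields." },
      { role: "user", content: threadText },
    ],
    response_format: {
      type: "json_schema",
      json_schema: { name: "thread_enrichment", strict: true, schema: enrichmentSchema },
    },
  };
}

// Parse the message content of a response and check that every field is present.
function parseEnrichment(content: string): Enrichment {
  const parsed = JSON.parse(content);
  if (typeof parsed !== "object" || parsed === null) throw new Error("expected a JSON object");
  for (const key of REQUIRED_FIELDS) {
    if (!(key in parsed)) throw new Error("missing field: " + key);
  }
  return parsed as Enrichment;
}
```

Even with schema-constrained output, a light check like `parseEnrichment` is cheap insurance before writing anything into a collection.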

First, we transform each thread document into a blob of text that ChatGPT can understand. The end result looks something like this. Lines corresponding to a particular participant are all prefixed with the same ID, which improves the quality of results.
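One way to produce that kind of text is sketched below. The `[P1]`-style prefix, the `Message` shape, and the `renderThread` function are assumptions for illustration; the exact format used in the pipeline may differ.

```typescript
// Hypothetical, simplified message shape.
interface Message {
  sender: string;
  body: string;
}

// Render a thread as plain text, prefixing every line with a stable
// per-participant ID so the model can tell who said what.
function renderThread(subject: string, messages: Message[]): string {
  const ids: Record<string, string> = {};
  let next = 1;
  const lines = ["Subject: " + subject];
  for (const message of messages) {
    const who = message.sender.trim().toLowerCase();
    // Assign IDs in order of first appearance: P1, P2, ...
    if (!Object.prototype.hasOwnProperty.call(ids, who)) {
      ids[who] = "P" + next;
      next += 1;
    }
    for (const line of message.body.split(/\r?\n/)) {
      if (line.trim() !== "") lines.push("[" + ids[who] + "] " + line.trim());
    }
  }
  return lines.join("\n");
}
```

Short IDs also cost fewer tokens than repeating full names or email addresses on every line.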

Then, we send that text along with a JSON schema representing the shape of the response we want to OpenAI, and get a response back. This is the enrichment for the current state of this thread.

```json
{
  "companies": "Redacted",
  "enrichments": {
    "customer_stage": "heard_back",
    "helpful_info": "Jane Doe is from Redacted, an enterprise technology VC fund in Antarctica. They support early go-to-market enterprise startups and help scale customer acquisition with community, workspace, and corporate engagement.",
    "is_relevant": true,
    "overview": "Jane Doe from Redacted reached out to schedule a meeting. They suggested several times for the meeting, including Tuesday, October 20 - 1pm-2pm ET, Thursday, October 21 - 12pm-1pm, 5pm-6pm ET, Friday, October 22 - 10am-12pm ET, and Thursday, October 29 - 10am-1pm ET.",
    "probability_to_churn": "not_applicable",
    "probability_to_close": "possibly",
    "sentiment": "positive"
  },
  "subject": "connecting from linkedin"
}
```

At this point, we have a stream of email threads and associated enrichment metadata, and we have to send it somewhere to do something useful with it. For the purposes of this demo, we’re going to send it to Google Sheets, but you could also send it to any of the other destinations that Flow supports.

Where to go from here

Now we come back around to where we started: sales conversations. At this point, we have a live feed of sales conversations and where in the pipeline ChatGPT thinks they are, automatically fed into a spreadsheet in roughly real-time.

The results aren’t perfect, and there are a few things I can think of that could improve them. I’ll leave those as an exercise for you.

Context

One problem is that we’re asking ChatGPT to analyze only the information contained in a single email thread at once.

In reality, you would consider everything you know about a particular customer, while still focusing on the particular email thread. In fact, it would be fantastic if we could take everything we know about every customer into account when determining, for example, the probability to close. You could try to jam every email thread with that customer into a single completion call, but that would be very expensive, and it would likely stop working once a customer has enough emails to exceed the token limit.

One possibility is to calculate the embeddings of every thread, and then use a vector database like Pinecone to load relevant information and provide it as context to the LLM when computing the enrichments for a particular thread.
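The retrieval step boils down to a nearest-neighbor search over embedding vectors. The sketch below does it in memory with cosine similarity as a stand-in for what a vector database would do at scale; the `EmbeddedThread` shape and the `topK` helper are assumptions, and the vectors themselves would come from an embedding model.

```typescript
// Hypothetical shape: a thread ID plus its embedding vector.
interface EmbeddedThread {
  id: string;
  vector: number[];
}

// Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal).
function cosine(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vector length mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Return the k stored threads most similar to the query vector.
function topK(query: number[], threads: EmbeddedThread[], k: number): EmbeddedThread[] {
  return threads
    .map((thread) => ({ thread, score: cosine(query, thread.vector) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((scored) => scored.thread);
}
```

The text of the top matches would then be added to the prompt as extra context for the thread being enriched, subject to the same token budget as everything else.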

Flow has support for materializing to Pinecone. Here’s another blog post with an example of how to do this.

Multi-Shot

One other trick that is often used to improve the quality of LLM responses is to simply ask the model to please correct any mistakes it made previously.

This pattern is the basis for tools like Langchain, which allow you to define complex multi-shot interactions with the LLM, which can dramatically improve results. Of course, this comes at the expense of multiple calls to the API per thread.
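A single correction pass can be as simple as appending the model’s first answer and a review instruction to the original messages, then sending the whole thing again. In this sketch, the `ChatMessage` shape, the `buildReviewMessages` helper, and the wording of the instruction are assumptions for illustration.

```typescript
// Hypothetical message shape: a role plus text, as chat-style APIs expect.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Build the messages for a second call that asks the model to review
// and correct the answer it gave on the first call.
function buildReviewMessages(firstPass: ChatMessage[], firstAnswer: string): ChatMessage[] {
  const followUp: ChatMessage[] = [
    { role: "assistant", content: firstAnswer },
    {
      role: "user",
      content:
        "Review your previous answer against the email thread above. " +
        "Correct any mistakes and return the full corrected JSON document.",
    },
  ];
  return firstPass.concat(followUp);
}
```

Each extra pass resends the entire thread, so the cost per thread grows with every round.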

…or use this example as-is

Possible improvements aside, the pipeline we’ve outlined here is a great place to start introducing ChatGPT to your Hubspot workflow, or your sales process in general.

When you use ChatGPT to monitor sales interactions (even without much context), you counteract the very human tendency to let things slip through the cracks or lose sight of the big picture, without compromising the human-ness of each conversation.

A repository containing all of the Flow specs required to run this pipeline yourself is available here. Get started with Flow here (it’s free to start).

And if you want to talk about this use case — or anything else you’re cooking up with Flow and ChatGPT — you can find me and our whole engineering team on Slack.
