You’re done for the day.

You stifle a yawn, as you look through your emails to see if there are any last-minute requests.

Just as you’re about to leave, you feel a gentle tap on your shoulder.

“We onboarded Cloud SQL as a source, and need the data for analysis in BigQuery. And we need this data, like yesterday.”

What do you do?

Do you look for the easiest ways to move your data from Cloud SQL to BigQuery Dataflow?

If so, you’ve come to the right place.

In this blog, you’ll find detailed instructions and expert advice on how to connect Cloud SQL to BigQuery. We’ll also look at the pros and cons of each method, so you can make an informed decision on which one is best for you. So, if you’re ready to get started, let’s dive in!

What is Cloud SQL?

Google Cloud SQL is Google’s fully managed relational database service that stands out for its rich extension connections, developer ecosystems, high performance, and seamless scalability.

With Cloud SQL, you can ensure the security and reliability of your databases which allows your business to run without a hitch. It also automates your replication, backups, capacity increases, and encryption patches.

Cloud SQL does all this and more — while ensuring 99.5% availability in every nook and cranny of the world! With Google’s Database Migration Service (DMS) you can easily migrate your production databases to Cloud SQL with minimal downtime.

What is BigQuery?

Google BigQuery is a managed, serverless data warehouse that is fully scalable and built on Google’s cloud infrastructure. It is an enterprise data warehouse that allows businesses to store and query large amounts of data quickly and cost-effectively.

It also offers a powerful SQL-like query language to help data engineers easily access, analyze and transform data without having to write complex code. Its scalability and performance enable data engineers to run queries on large datasets quickly and cost-effectively.

Additionally, BigQuery integrates with other Google Cloud services, such as Cloud Dataproc and Google Data Studio, to enable data engineers to easily combine data from multiple sources, analyze it, and gain insights.

What are the Benefits of Migrating Data from Cloud SQL to BigQuery?

- With BigQuery, you no longer have to provision and forecast compute and storage resources beforehand. BigQuery allocates all the resources based on usage, dynamically.

- BigQuery provides ridiculously fast analytics on a petabyte scale through its unique capabilities and architecture.

- Since BigQuery uses a columnar data store, you can enjoy the highest data compression with minimized data scanning in the usual data warehouse deployments.

Why Integrate Cloud SQL to BigQuery using Dataflow?

Google Cloud Dataflow is a game-changer when it comes to migrating data from Cloud SQL to BigQuery. Let’s go over a couple of reasons why this method is recommended:

- Automatic Scaling: Google Cloud Dataflow’s automatic scaling of virtual machines for pipeline jobs lets you process large volumes of data simultaneously. Say goodbye to sitting around for days, waiting for the confirmation of migration results. Google Cloud Dataflow gets the job done in minutes!

- Hands-Off: Since Google provides Cloud Dataflow as a fully-managed service, it takes the management and deployment of pipeline jobs off your hands. This means you can effortlessly repeat the procedure to obtain quick feedback as the jobs are run and deployed together as a single step.

- Rapid Migration: With the ‘auto scaling’ option enabled, your pipeline jobs will carry out the data processing in parallel.

How to Migrate from Cloud SQL to BigQuery Dataflow?

Cloud SQL isn’t built for heavy analytical operations, which makes migration of data to a centralized data repository like BigQuery pivotal.

Now that you’ve seen the benefits of migrating data from Cloud SQL to BigQuery, let’s dive into the easiest methods you can use to migrate data from Cloud SQL to BigQuery.

Method 1: Building a Data Pipeline Using GCP

To set up a connection between Cloud SQL and BigQuery using GCP, follow along:

- Step 1: First, enable the BigQuery Connection API.

- Step 2: For the connection to work, you need to ensure that the Cloud SQL is set to the public network. Scroll to your SQL instance > Connection tab. Now all you gotta do is enable the public IP and click on Save. But the connection isn’t complete without providing an authorized address. Let’s tackle that in the next step.

- Step 3: Scroll to the BigQuery interface and add an external data source. This opens up a prompt where you can enter the details about your Cloud SQL data. Enter all the requisite information to connect to Cloud SQL.

- Step 4: Finally, provide the role and email address for users to access this newly created external database by clicking on the ‘Share Connection’ option.

Now you can query your Cloud SQL data in BigQuery using federated queries. To send these queries, you can use the EXTERNAL_QUERY function. Let’s take an example here to illustrate this.

Suppose you have a customer table in BigQuery, while the sales table is present in Cloud SQL. Now, to join the two in a single query, you can make a federated query to a Cloud SQL table named deliveries and joins the results with a BigQuery table named demo.customers:

plaintextSelect d.customer_id, d.name, bq.first_order_date FROM demo.customers AS d

LEFT OUTER JOIN EXTERNAL_QUERY(

'us.connection_id',

'''SELECT customer_id, MIN(order_date) AS first_order_date

FROM deliveries

GROUP BY customer_id''') AS bq.customer_id = d.customer_id

GROUP BY d.customer_id, d.name, bq.first_order_date; Key Points to Remember

- To obtain the permissions to query a Cloud SQL instance, you can ask your administrator to give you the BigQuery Connection User (

roles/ bigquery.connection) IAM role on your project. - Not all Cloud SQL instance regions support federated queries. So, you might have to move your SQL instance to where federated query support is available.

- A lot of the data types of SQL aren’t supported by BigQuery (like the UUID generator type). For these scenarios, convert the data type to BigQuery readable data type in the federated query.

Method 2: Building a Data Pipeline Using Cloud Dataflow

This method will have two steps. First, you’ll create a routine/connector that publishes changes from Cloud SQL to Pub/Sub. This’ll be followed by writing a Dataflow pipeline that consumes those changes from Pub/Sub and migrates them to BigQuery.

Migration of Cloud SQL data to BigQuery using Dataflow works best when you have relational source data that needs to be aggregated or denormalized before migration.

The only downside here is that transporting OLTP/transactional data will need a strong versioning schema, to track all the changes.

Workflow

Using Cloud Dataflow to connect Cloud SQL to BigQuery will look different for each use case, depending on the shape of your data in your source database, and thus, the transformations required.

But in general, you'll need to follow these steps:

- Create a Cloud Dataflow job: First, you need to create a Cloud Dataflow job that reads data from Cloud SQL, transforms it, and writes it to BigQuery. You can create a job using the Cloud Dataflow UI, the gcloud command-line tool, or the Dataflow API.

- Specify the input source: In the job configuration, you need to specify the input source for the pipeline. In this case, you would specify the Cloud SQL instance and database that contains the data you want to transfer.

- Define the data transformation: Next, you need to define the transformation that will be applied to the data. This might include filtering, aggregating, or joining data to prepare it for analysis in BigQuery.

- Specify the output destination: Finally, you need to specify the output destination for the pipeline. In this case, you would specify the BigQuery dataset and table where you want to write the transformed data.

- Run the job: Once you have configured the job, you can run it in the Cloud Dataflow service. Cloud Dataflow will automatically provision the necessary resources, such as compute instances and storage, to execute the job.

- Monitor the job: While the job is running, you can monitor its progress using the Cloud Dataflow UI or the gcloud command-line tool. You can view metrics such as job duration, data throughput, and errors to ensure that the job is running smoothly.

- Verify the output: Once the job has been completed, you can verify that the transformed data has been written to BigQuery. You can query the BigQuery dataset and table using the BigQuery UI, the gcloud command-line tool, or the BigQuery API.

Key Points to Remember

- BigQuery doesn’t let you modify existing records. Since all the writes are considered immutable, you need to have a clear strategy to avoid duplication and errors if your application demands it.

- BigQuery has a 4 GB/file limit. This means if you are extracting Cloud SQL data in JSON format, you need to fragment your file into chunks before migrating to BigQuery.

- If the underlying data in Cloud SQL is changed during a federated query/transfer, it could lead to data inconsistency.

Alternative To Google Cloud Dataflow to Connect Cloud SQL to BigQuery

Although Google Cloud dataflow is a well-managed solution that's UI-based, a keen eye will quickly pick up a few major stumbling blocks that can pack a huge engineering punch:

- You need to manually apply a transformation to get the tables from your relational database to adhere to a schema that can be used by BigQuery.

- You need to fragment large files to avoid hitting BigQuery's file limits.

With these limitations, it's hard to provide a one-size-fits-all guide for using Dataflow to connect Cloud SQL and BigQuery.

But in the next section, we'll provide an alternative method to integrate Cloud SQL and BigQuery in real-time... with the correct schema, all in a UI.

Method 3: Building a Pipeline With Estuary Flow.

Estuary Flow is a real-time data integration platform that allows you to connect Cloud SQL to BigQuery and other data sources. Estuary is streaming native and has an intuitive no-code UI that’s quick to use once your data systems meet the prerequisites. Like Dataflow, it's also highly scaleable and hands-off once the initial setup is done.

To connect Cloud SQL to BigQuery using Estuary, you'll need to meet the following requirements:

- Google Cloud SQL instance: You need to have a running Cloud SQL instance that contains the data you want to transfer to BigQuery.

- Allow connections from Estuary Flow: You'll need to enable public IP on your database and add the IP address of Estuary Flow (currently 34.121.207.128) as an authorized IP address.

- Depending on whether your Cloud SQL instance is MySQL, Postgres, or SQL Server, you’ll have to meet a few more requirements to prepare your database. See the guides below:

- A Google Cloud Storage bucket in the same region as the BigQuery dataset.

- A Google Service account with

roles/bigquery.dataEditor,roles/bigquery.jobUser, androles/storage.objectAdmin; and a service account key generated. See this guide for help.

Once you've met these requirements, you can follow these steps to connect Cloud SQL to BigQuery using Estuary Flow:

- Log in to your Estuary account, or sign up to get started for free.

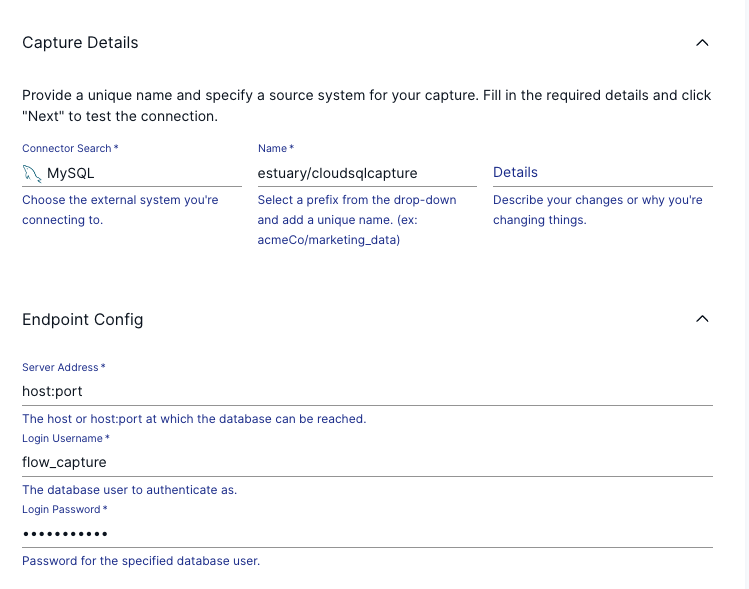

- Go to the create a new capture page of the Estuary web app and select either the MySQL, PostgreSQL, or SQL Server connector, depending on your Cloud SQL database type.

- Add a unique name for the capture. Provide the Cloud SQL server address, database username (this should be “flow_capture” if you followed the prerequisite steps), and a password.

- Click the Next button. Flow lists all the tables in your database, which it will convert into Flow data collections described by JSON schema. You can remove any tables you don’t want to capture.

- Click Save and Publish.

- On the dialog box showing your capture was successful, click the Materialize Collections button to continue.

- Choose the BigQuery connector.

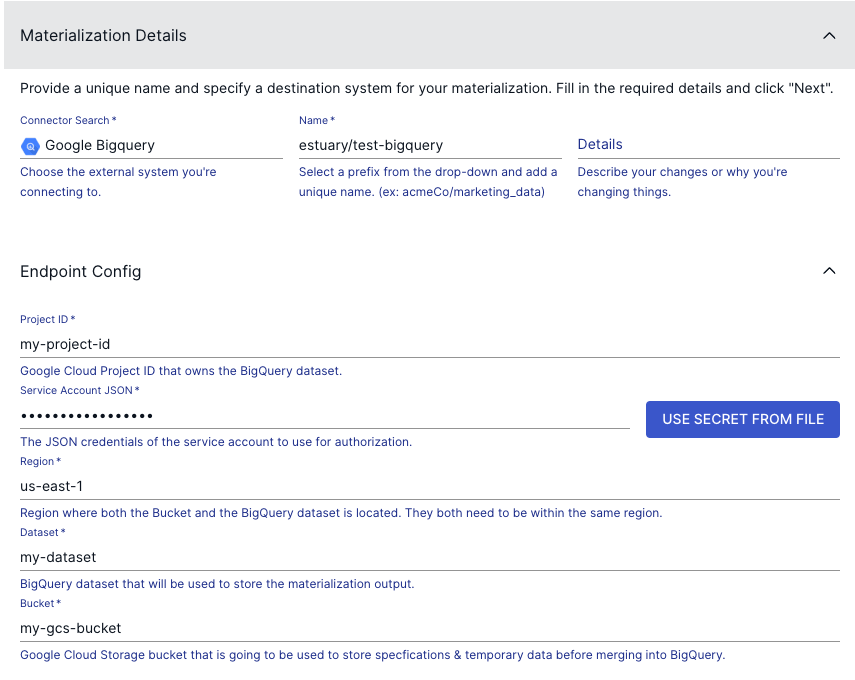

- Add a unique name for the materialization.

- Provide the following details for your BigQuery dataset:

- Google Cloud project ID

- Service account JSON credentials (which you generated per the prerequisites)

- The project’s Google Cloud Region

- Dataset name

- Staging Google Cloud Storage bucket name

- Scroll down to the Collection Selector. Each table you just captured from Cloud SQL will be mapped to a new table in BigQuery. Provide a name for each (you might choose to use the same names).

Optionally, you can modify the collection's schema, determining how it'll be mapped to BigQuery, but that shouldn't be necessary: Flow will output the data in a queryable format in BigQuery tables. - Click Next.

- Click Save and Publish.

All historical data from your Cloud SQL database will be copied to BigQuery. Any new data that appears in Cloud SQL will also be copied to BigQuery in real-time. Along the way, data will be cleaned and re-formatted to adhere to BigQuery's data types and valid schema options.

Using this method requires minimal technical expertise, and your data pipeline is backed up securely with schema validation and exactly-once semantics. Additionally, a single data pipeline can sync many (or all) tables in your Cloud SQL database into equivalent BigQuery tables.

Introducing Salesforce CDC

Before we conclude, it's essential to introduce Salesforce Change Data Capture, or Salesforce CDC, a vital tool in your data integration arsenal. Salesforce CDC enables real-time tracking of changes in your Salesforce data and seamless replication of these changes to BigQuery, enhancing your data analysis capabilities.Conclusion

BigQuery is an incredibly powerful and popular cloud-based data warehouse that can store and analyze large datasets quickly and easily. Cloud SQL is a fully managed database service that makes it easy to set up, manage, and maintain your data. When connected, Cloud SQL and BigQuery can be used together to store and analyze your data, enabling you to access new insights and make informed decisions.

Give Estuary Flow a try to create real-time data pipelines, for free today.

It integrates with an ecosystem of open-source connectors to extract data from sources including Google Cloud SQL to destinations like BigQuery with low latency. This’ll allow you to replicate that data to various systems for both operational and analytical purposes in real time.

Author

Popular Articles