Data lake architecture is the foundation for data analytics, reporting, and decision-making. The architecture's ability to store and process data from a variety of sources is changing the way businesses use data. Even though data lakes are an important component of data management, they are not always easy to implement.

Not every data engineering team is equipped to navigate the layers of a data lake or to understand how the surrounding technologies improve data processing and analysis.

That’s what this guide aims to simplify for you. Our focus is on unpacking the concept of a data lake, its architectural layers, and its interaction with different technologies. We’ll also discuss data lake architecture diagrams for AWS and Azure and give you insight into Estuary Flow and how it can make your present data lake architecture more effective.

By the end of this guide, you will have a comprehensive understanding of the complexities and benefits of a data lake, and how it relates to your data stack.

What Is a Data Lake?

A data lake is a large-scale storage system that holds a significant amount of raw data in its native format until it’s needed. A key characteristic of a data lake is that it allows you to store data as-is without first transforming it into a specific format. This data can be structured, semi-structured, or unstructured.

Data lakes can store enormous volumes of data, so storage can scale as your needs grow. Also, because they accommodate all types of data, they offer a high degree of flexibility. This makes them especially useful for diverse data analysis and machine learning applications.

However, data lakes present certain challenges. Without proper management and governance, a data lake can turn into a "data swamp" where it's difficult to locate and retrieve information. Ensuring data protection and access control can also become complex because of the volume and diversity of the data.

Now that we’ve covered the basics of data lakes, let’s discuss how they differ from data warehouses. This will not only clarify the differences between the two but also provide insights into choosing the most suitable data storage and management approach for your business needs.

Data Lake vs. Data Warehouse: Key Differences and Use Cases

While both data lakes and data warehouses are data storage systems, they have distinct features and serve different purposes, which follow from how each one treats data.

A data warehouse is a structured storage system that houses processed data, making it best suited for business reporting and analytics.

The main advantage of a data warehouse is its schema-on-write approach, where the data is structured before it’s stored, so everything in the warehouse is consistently organized. This method also streamlines data retrieval, which is beneficial when the questions and the structure of the data are known in advance.

On the other hand, a data lake is more accommodating of unstructured data and stores it in raw form. This flexibility makes it an excellent choice for data exploration, data discovery, and machine learning where questions aren't fully known in advance.

A key feature of a data lake is its schema-on-read approach where data is structured when it’s read. This characteristic offers flexibility for data exploration and accommodates ingesting data of various forms.
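The contrast between the two approaches can be sketched in a few lines of Python. This is a minimal illustration, not how any particular warehouse or lake is implemented: the `SCHEMA` mapping, the field names, and the in-memory lists standing in for storage are all assumptions made for the example.

```python
import json

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"user_id": int, "amount": float}


def conforms(record: dict) -> bool:
    """Return True if the record has every schema field with the expected type."""
    return all(isinstance(record.get(name), typ) for name, typ in SCHEMA.items())


def write_to_warehouse(table: list, record: dict) -> None:
    """Schema-on-write: reject a non-conforming record before it is stored."""
    if not conforms(record):
        raise ValueError(f"record does not match schema: {record}")
    table.append(record)


def write_to_lake(lake: list, raw_line: str) -> None:
    """Schema-on-read: store the raw text as-is, with no validation."""
    lake.append(raw_line)


def read_from_lake(lake: list) -> list:
    """Apply the schema only at read time, skipping lines that do not fit."""
    rows = []
    for line in lake:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(record, dict) and conforms(record):
            rows.append(record)
    return rows
```

In this sketch the lake accepts every line, including malformed ones, and the cost of interpreting the data is paid by each reader. The warehouse pays that cost once, at write time.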

Both data lakes and data warehouses pose unique challenges when it comes to data protection and data governance. For businesses that need to access data in real time, data lakes are often a better fit. On the other hand, if a business knows what kind of reports it wants to generate, then a data warehouse could be a more suitable option.

Now let’s move towards understanding the core components of Data Lake architecture that will lay a solid foundation for designing, implementing, and maintaining a robust data lake infrastructure.

5 Core Components Of Data Lake Architecture

A well-designed data lake has a multi-layered architecture with each layer having a distinct role in processing the data and delivering insightful and usable information.

Let’s dissect these layers in depth to understand the integral roles they play.

Raw Data Layer (Ingestion Layer)

The raw data layer, often called the ingestion layer, is the first checkpoint where data enters the data lake. This layer ingests raw data from various external sources such as IoT devices, data streaming devices, social media platforms, wearable devices, and many more.

It can handle a diverse array of data types like video streams, telemetry data, geolocation data, and even data from health monitoring devices. This data is ingested in real-time or in batches depending on the source and requirement, maintaining its native format without any alterations or modifications. The ingested data is then organized into a logical folder structure for easy navigation and accessibility.
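One common way to get a logical folder structure is to partition raw objects by source, dataset, and ingestion date. The sketch below assumes a top-level `raw` zone and a `year=/month=/day=` naming convention. Both are illustrative choices, not something this guide prescribes.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def raw_object_path(source: str, dataset: str, ingested_at: datetime, filename: str) -> str:
    """Build a date-partitioned object path under a hypothetical 'raw' zone.

    The timestamp is converted to UTC so that paths do not depend on the
    time zone of the machine doing the ingestion. Pass a timezone-aware
    datetime; a naive one would be interpreted as local time.
    """
    ts = ingested_at.astimezone(timezone.utc)
    return str(
        PurePosixPath("raw")
        / source
        / dataset
        / f"year={ts:%Y}"
        / f"month={ts:%m}"
        / f"day={ts:%d}"
        / filename
    )
```

For example, a telemetry file ingested on 5 March 2024 from a source named `iot` would land at `raw/iot/telemetry/year=2024/month=03/day=05/part-0001.json`. The file content itself is left untouched.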

Standardized Data Layer

The standardized data layer, although optional in some implementations, becomes crucial as your data lake grows in size and complexity. This layer acts as an intermediary between the raw and curated data layers and improves the performance of data transfer between the two.

Here, the raw data from the ingestion layer undergoes a format transformation, converting it into a standardized form best suited for further processing and cleansing. This transformation includes changing the data structure, encoding, and file formats to enhance the efficiency of subsequent layers.
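As a rough sketch of what such a format transformation can look like, the function below converts a CSV file in a legacy encoding into UTF-8 JSON Lines with normalized column names. The choice of Latin-1 as the source encoding and JSON Lines as the target format is an assumption for illustration, and the code assumes well-formed rows. Real pipelines often target a columnar format instead.

```python
import csv
import io
import json


def standardize_csv(raw_bytes: bytes, source_encoding: str = "latin-1") -> bytes:
    """Convert CSV bytes in a source encoding into UTF-8 JSON Lines."""
    text = raw_bytes.decode(source_encoding)
    reader = csv.DictReader(io.StringIO(text, newline=""))
    lines = []
    for row in reader:
        # Normalize header names: trimmed, lowercase, underscores for spaces.
        clean = {k.strip().lower().replace(" ", "_"): v.strip() for k, v in row.items()}
        lines.append(json.dumps(clean, ensure_ascii=False))
    # One JSON document per line, each terminated by a newline.
    return "".join(line + "\n" for line in lines).encode("utf-8")
```

The structure (header names), encoding (Latin-1 to UTF-8), and file format (CSV to JSON Lines) all change, but the values themselves are not cleansed yet. That is left to the next layer.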

Cleansed Data Layer (Curated Layer)

As we descend deeper into the architecture, we reach the cleansed data layer or the curated layer. This is where the data is transformed into consumable datasets, ready for analysis and insights generation. The layer executes data processing tasks that include data cleansing, denormalization, and consolidation of different objects.

The resulting data is stored in files or tables, which makes it accessible and ready for consumption. It is in this layer that data is standardized in terms of format, encoding, and data type to ensure uniformity across the board.
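The cleansing and denormalization steps can be sketched with plain Python dictionaries. The `order_id`, `customer_id`, `name`, and `country` fields are hypothetical, and a real curated layer would typically run this logic in a distributed engine rather than in memory.

```python
def cleanse(rows: list[dict]) -> list[dict]:
    """Drop rows with no order_id, remove duplicate order_ids, and trim strings."""
    seen = set()
    out = []
    for row in rows:
        order_id = row.get("order_id")
        if order_id is None or order_id in seen:
            continue
        seen.add(order_id)
        out.append({k: v.strip() if isinstance(v, str) else v for k, v in row.items()})
    return out


def denormalize(orders: list[dict], customers: dict[int, dict]) -> list[dict]:
    """Fold customer attributes into each order row so readers need no join."""
    result = []
    for order in orders:
        customer = customers.get(order["customer_id"], {})
        result.append(
            {
                **order,
                "customer_name": customer.get("name"),
                "customer_country": customer.get("country"),
            }
        )
    return result
```

Calling `denormalize(cleanse(orders), customers)` consolidates two source objects into a single consumable dataset.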

Application Data Layer (Trusted Layer)

The application data layer, also known as the trusted layer, adds a tier of business logic to the cleansed and curated data. It ensures that the data aligns perfectly with business requirements and is ready for deployment in various applications.

Specific mechanisms like surrogate keys and row-level security are implemented here which provide additional safeguards to the data. This layer also prepares the data for any machine learning models or AI applications that your organization uses.
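To make these two mechanisms concrete, here is a small sketch. The `SurrogateKeys` class and the `region`-based filter are hypothetical names chosen for the example. In practice, surrogate keys and row-level security are usually provided by the database or query engine rather than written by hand.

```python
import itertools


class SurrogateKeys:
    """Assign a stable integer key to each natural (business) key."""

    def __init__(self) -> None:
        self._next = itertools.count(1)
        self._keys: dict[str, int] = {}

    def key_for(self, natural_key: str) -> int:
        # The same natural key always maps to the same surrogate key.
        if natural_key not in self._keys:
            self._keys[natural_key] = next(self._next)
        return self._keys[natural_key]


def visible_rows(rows: list[dict], allowed_regions: set[str]) -> list[dict]:
    """Row-level security: return only rows whose region the caller may see."""
    return [row for row in rows if row["region"] in allowed_regions]
```

The surrogate key decouples downstream tables from identifiers owned by source systems, while the row filter lets one dataset serve users with different access rights.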

Sandbox Data Layer

Finally, the sandbox data layer, an optional but highly valuable layer, serves as an experimental playground for data scientists and analysts. This layer provides a controlled environment for advanced analysts to explore the data, identify patterns, test hypotheses, and derive insights.

Analysts can safely experiment with data enrichment from additional sources, all while ensuring that the main data lake remains uncompromised.

Data Lake Architecture Diagrams: AWS and Azure Cloud Solutions

Practically, data lakes can be implemented on many different platforms and can utilize many different components. Let’s look at how data lakes can be implemented in the cloud on 2 of the biggest cloud platforms available: AWS and Microsoft Azure.

AWS Cloud Data Lakes

Amazon Web Services (AWS) offers advanced and scalable solutions for building comprehensive data lakes, tailored to the diverse needs of organizations.

Let’s explore 3 unique data lake architecture patterns on AWS.

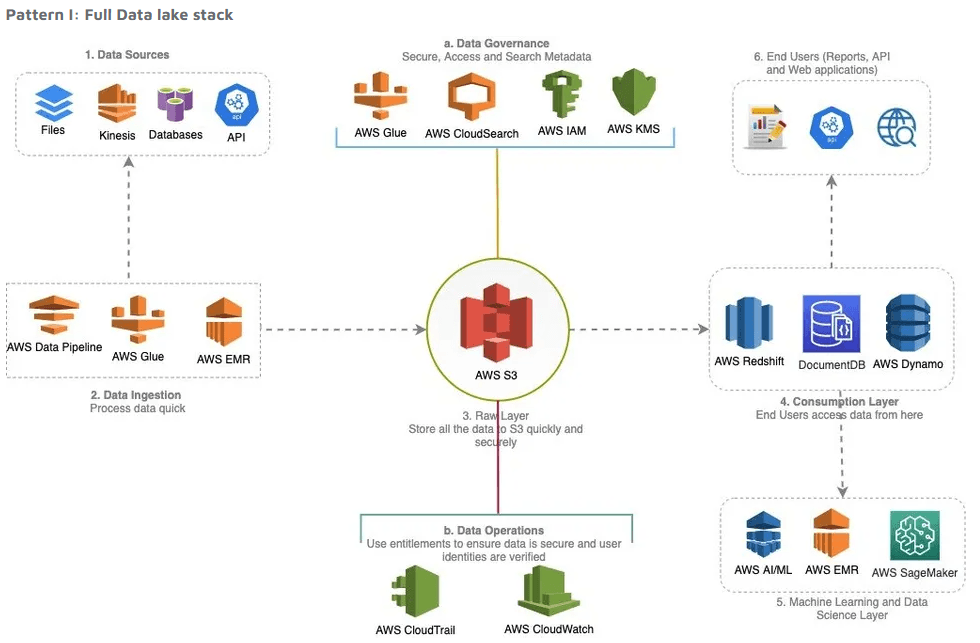

Comprehensive Data Lake Stack

The full data lake stack on AWS integrates various data sources, using services such as AWS Data Pipeline, AWS Glue, and Amazon EMR for data ingestion. Amazon S3 serves as the scalable raw data storage layer, while the consumption layer uses Amazon DynamoDB, Amazon Redshift, and Amazon DocumentDB for processed data.

Machine learning and data science integration occur through Amazon SageMaker and Amazon ML. The AWS Glue Data Catalog and AWS IAM ensure data governance while AWS CloudTrail and Amazon CloudWatch manage operations.

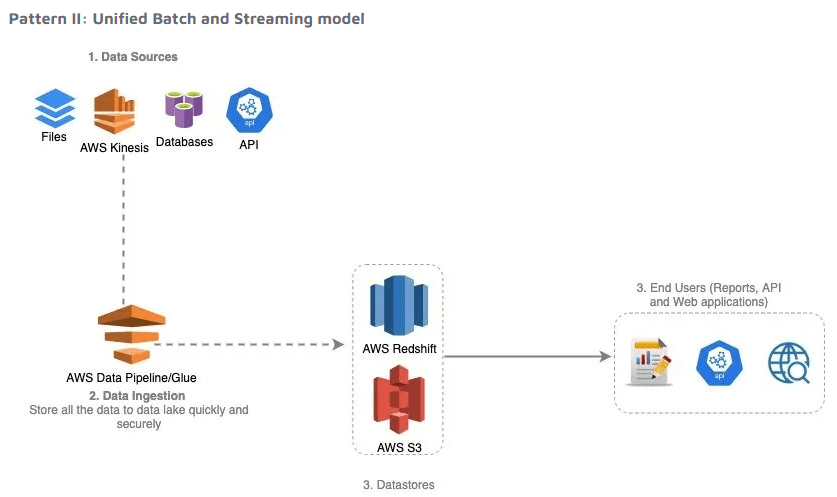

Consolidated Batch & Streaming Model

The unified batch and streaming model on AWS processes both real-time and historical data. It employs AWS Data Pipeline for managing the data flow, Amazon S3 for raw data storage, and Amazon DocumentDB for the consumption layer.

Amazon SageMaker and Amazon ML support machine learning and data science with data governance and operations managed similarly to the full data lake stack.

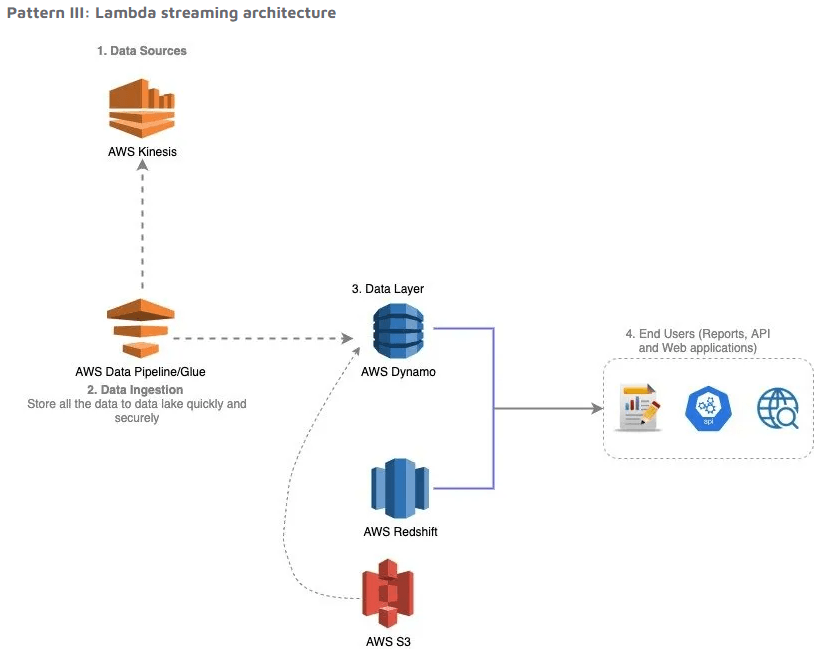

Lambda Streaming Architecture

The lambda streaming architecture on AWS handles large volumes of real-time data. It uses Amazon DynamoDB for the consumption layer and AWS Glue and Amazon EMR for data pipeline creation.

Machine learning models are built, trained, and deployed rapidly at scale with Amazon SageMaker, and data governance and operations align with the other two patterns.

Azure Cloud Data Lakes

Microsoft Azure's cloud platform lets you build robust, scalable data lakes tailored to an organization's specific requirements. Below, we illustrate 3 distinct data lake architecture patterns on Azure.

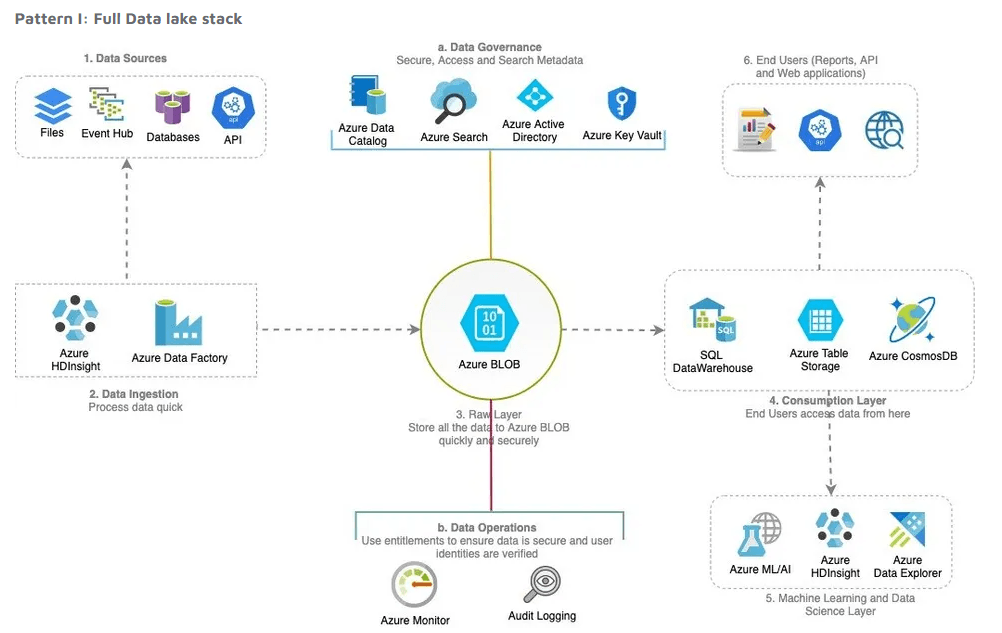

Full Data Lake Stack

The full data lake stack on Azure manages varying data structures and sources, utilizing Azure Data Factory for data pipeline creation. The consumption layer includes Azure Synapse Analytics (formerly Azure SQL Data Warehouse), Azure Cosmos DB, and Azure Table Storage, catering to diverse end-user needs.

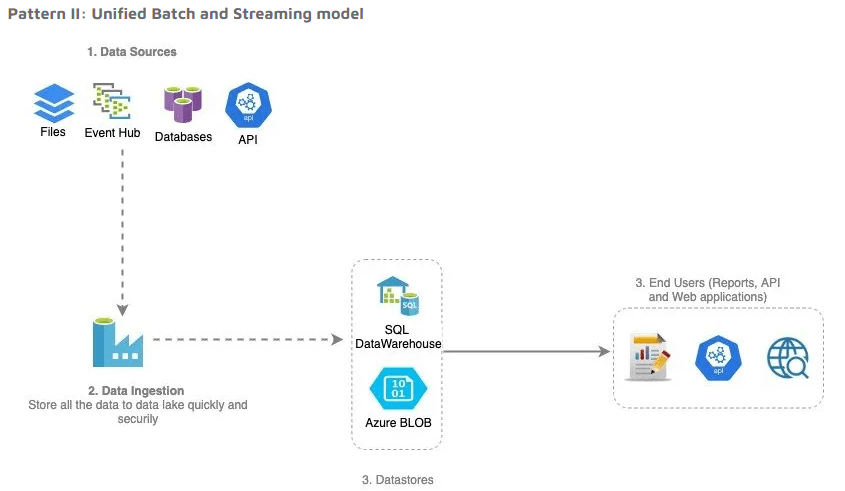

Unified Batch & Streaming Model

This architecture hinges on Azure Data Factory for managing data pipelines. Azure Blob Storage and Azure Data Lake Storage form the raw data layer, providing secure and accessible storage. The consumption layer primarily relies on Azure Cosmos DB, known for low latency and high throughput, meeting high-performance application demands.

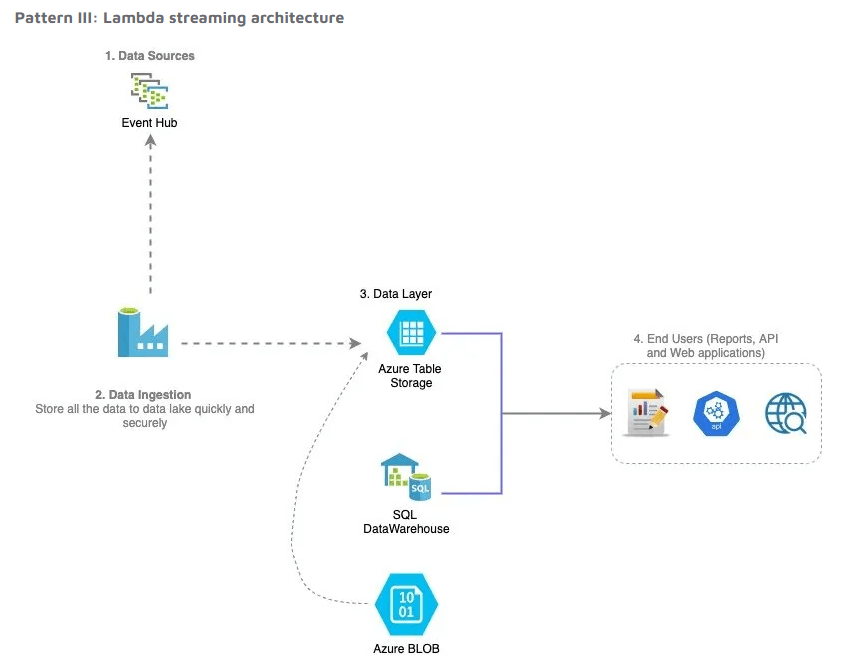

Lambda Streaming Architecture

The lambda streaming architecture leverages Azure HDInsight for data pipeline creation. HDInsight, offering Apache Hadoop and Apache Spark in a fully managed cloud environment, facilitates the transition from on-premises environments to the cloud, especially when migrating large amounts of code.

Hybrid Data Lake Architectures: What is Databricks?

Today, cutting-edge data lake technologies aren’t pure data lakes but hybrid data storage architectures. These systems combine the flexibility of a data lake with the control and reliability of other storage systems.

Let’s take a look at a system you might be familiar with: Databricks Delta Lake, a storage layer that combines the advantages of data warehouses and data lakes.

Delta Lake is an open-source storage layer that brings enhanced reliability and performance to data lakes.

It introduces several features that transform how you handle big data:

- ACID transactions: Delta Lake adds transactional integrity to data lakes which ensures that concurrent reads and writes result in consistent and reliable data.

- Time travel (data versioning): Delta Lake keeps a record of every change made to the data, allowing users to access older versions of data for audits or to fix mistakes.

- Unified batch and stream processing: With Delta Lake, you can merge batch and streaming data processing, simplifying your data architecture and reducing latency for insights.

- Schema enforcement and evolution: Delta Lake enforces the table's schema on write, which protects the data from unexpected changes. Schema evolution can be enabled when necessary to accommodate changes in the data structure.

Delta Lake Architecture: Unifying Data Lakes and Data Warehouses

Delta Lake operates on an advanced architecture designed to optimize data processing and ensure data reliability. Let’s break down the architecture into its main components:

Data Storage

Delta Lake is built on top of your existing data lake, which means it’s compatible with file systems supported by Apache Spark like HDFS, Amazon S3, and Azure Data Lake Storage. It stores data in the Apache Parquet format, which provides efficient compression and encoding schemes.

Delta Log

The delta log is the transaction log that records all changes made to the data. It’s crucial for implementing ACID transactions and enabling Time Travel for data versioning. The log keeps track of every transaction, which includes added, removed, or modified data files.
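The idea of reconstructing a table from a transaction log can be shown with a toy model. This is not Delta Lake's API or on-disk format. It is a simplified sketch in which each commit is a dictionary listing the data files it adds and removes, and the list index plays the role of the table version.

```python
def files_at_version(log: list[dict], version: int) -> set[str]:
    """Replay commits 0..version and return the data files that are live."""
    live: set[str] = set()
    for commit in log[: version + 1]:
        live -= set(commit.get("remove", []))
        live |= set(commit.get("add", []))
    return live


# Each entry is one commit; the list index is the table version.
log = [
    {"add": ["part-0.parquet"]},
    {"add": ["part-1.parquet"]},
    {"add": ["part-2.parquet"], "remove": ["part-0.parquet"]},  # rewrite of part-0
]
```

Reading the table "as of" version 1 means replaying only the first two commits, so `part-0.parquet` is still visible even though version 2 later replaced it. Because a modification is recorded as a remove plus an add rather than an in-place edit, older versions stay readable.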

Schema Enforcement & Evolution

Delta Lake stores the schema of the data in the Delta Log. This allows Delta Lake to enforce the schema when writing data, ensuring data integrity. If the schema evolves, Delta Lake can safely handle it by recording the schema change in the Delta Log.
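The behavior described here can be imitated with a small in-memory table. The sketch below is a toy, not Delta Lake code: the `table` dictionary and the `merge_schema` flag are assumptions for illustration. The flag is loosely modeled on the opt-in nature of schema evolution.

```python
class SchemaMismatch(Exception):
    """Raised when a write contains columns the table does not know about."""


def append_rows(table: dict, rows: list[dict], merge_schema: bool = False) -> None:
    """Append rows, rejecting unknown columns unless merge_schema is set."""
    known = table["schema"]
    new_columns = []
    for row in rows:
        for name in row:
            if name not in known and name not in new_columns:
                new_columns.append(name)
    if new_columns and not merge_schema:
        raise SchemaMismatch(f"unexpected columns: {new_columns}")
    # Schema evolution: record the added columns before writing the data.
    table["schema"] = known + new_columns
    table["rows"].extend(rows)
```

A write with an unexpected `email` column fails by default and leaves the table untouched. The same write with `merge_schema=True` succeeds and extends the recorded schema.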

Checkpoint

A checkpoint in Delta Lake is a snapshot of the current state of the Delta Log. This is also a Parquet file that contains the state of all the data files of a table at a specific version. Checkpoints help speed up the reading of the Delta Log for large tables.
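Why a checkpoint speeds up reads can be seen in a simplified model where each commit lists the files it adds and removes. The function names and the dictionary-based log below are hypothetical and do not reflect Delta Lake's actual file layout.

```python
def apply_commit(live: set[str], commit: dict) -> set[str]:
    """Return the live file set after applying one commit."""
    return (live - set(commit.get("remove", []))) | set(commit.get("add", []))


def make_checkpoint(log: list[dict], version: int) -> dict:
    """Snapshot the live file set at the given version."""
    live: set[str] = set()
    for commit in log[: version + 1]:
        live = apply_commit(live, commit)
    return {"version": version, "files": live}


def state_from_checkpoint(checkpoint: dict, log: list[dict], version: int) -> set[str]:
    """Start from the checkpoint and replay only the commits after it."""
    live = set(checkpoint["files"])
    for commit in log[checkpoint["version"] + 1 : version + 1]:
        live = apply_commit(live, commit)
    return live
```

With a checkpoint at version 2, a reader that wants version 3 applies a single commit instead of replaying the log from the beginning. On a table with thousands of commits, that difference is what keeps log reads fast.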

Optimization Techniques

Delta Lake uses multiple techniques to optimize query performance, including:

- Compaction: Reducing the number of small files

- Data skipping: Skipping irrelevant data during a read operation

- Z-Ordering: A multi-dimensional clustering technique that co-locates related data
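Two of these techniques are easy to illustrate in isolation. The sketch below shows bit interleaving, which is the standard way to compute a Z-order (Morton) value for two integer columns, and a min/max check of the kind data skipping relies on. The function names and the shape of `file_stats` are assumptions for the example, not Delta Lake internals.

```python
def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of x and y into a single Morton (Z-order) value."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z


def files_to_scan(file_stats: dict[str, tuple[int, int]], low: int, high: int) -> list[str]:
    """Data skipping: keep only files whose [min, max] range overlaps [low, high]."""
    return [name for name, (lo, hi) in file_stats.items() if hi >= low and lo <= high]
```

Sorting rows by `z_value` before writing them tends to place rows that are close in both columns into the same files. That narrows each file's min/max range on both columns, so a filter on either column lets `files_to_scan` rule out more files.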

Estuary Flow: Optimizing Data Lake Performance with Real-Time Pipelines

Our innovative real-time data pipeline solution, Estuary Flow, modernizes data lake architecture in a different way.

While Databricks combines data warehouse advantages with a data lake and focuses on big data analytics, Flow simplifies the management of streaming data using real-time data lakes. You can use Flow to ingest new data into your data lakes, read data back out of them, and transform and process that data for use in your business applications.

Flow itself uses data lakes to build a fault-tolerant streaming ETL architecture. These data lakes, which Flow calls collections, store JSON documents that are either captured from an external system or derived from one or more other collections. Collections function as single sources of truth for data and live in a cloud storage bucket owned by you.

So data lakes in Estuary Flow have 2 essential roles:

- Facilitating the routing of data to different storage endpoints in near-real-time, thereby ensuring quick data availability at all points of use.

- Supporting the backfilling of data from various storage endpoints which allows the integration of historical data across different systems.

Key Features Of Estuary Flow

Let’s look into some key features of Estuary Flow.

- Diverse Range of Connectors: Connectors are used to maintain a continuous and consistent flow of data between various data systems and the Flow platform. The platform's open protocol makes it easy to add new connectors.

- Real-Time Data Processing: The platform provides real-time SQL and TypeScript transformations. This enables the modification and refinement of data as it navigates through the data lake, thus supporting immediate decision-making.

- Scalability and Resilience: Estuary Flow is designed to grow with your data, with a record of handling data volumes up to 7 GB/s. It offers robust resilience across regions and data centers, ensuring data accuracy while keeping the load on your systems minimal.

- Robust Data Products: Every collection in Estuary Flow has a globally unique name, a JSON schema, metadata, and a key. The application of a key allows for data reduction based on precise rules, leading to highly controllable and predictable behavior. Meanwhile, each document in a collection needs to validate against its schema, ensuring the quality of your data products and the reliability of your derivations and materializations.
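The role of a collection key can be pictured with a toy reduction. The function below is not Flow's API and does not use Flow's configuration format. It is a hypothetical sketch of one possible rule, in which documents sharing a key are merged and later fields overwrite earlier ones.

```python
def reduce_by_key(documents: list[dict], key: str) -> dict:
    """Combine documents that share a key; later fields overwrite earlier ones."""
    state: dict = {}
    for doc in documents:
        state[doc[key]] = {**state.get(doc[key], {}), **doc}
    return state
```

Given three documents where two share the key `"a"`, the result holds one merged document per key. Because the rule is deterministic, replaying the same documents in the same order always yields the same state, which is the kind of predictable behavior the key is meant to provide.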

Estuary Flow's Approach To Data Lake Management

Estuary Flow simplifies data lake management by automatically applying different schemas to data collections as they move through the pipeline. This ensures an organized storage structure, transforming the data lake into a well-organized repository rather than a chaotic "data swamp." By streamlining the management process, Estuary Flow optimizes data accessibility and usability.

With Flow, you can even build custom storage solutions that combine the strengths of transactional databases, data warehouses, and data lakes. By using these storage options together, you can unlock the full potential of your data stack.

Conclusion

Data lake architecture can be challenging to understand and to optimize. Success lies in understanding your unique data requirements, selecting appropriate tools, and executing a strong strategy.

Implementing Estuary Flow can enrich your Data Lake architecture, integrating a variety of data sources effortlessly, thereby revolutionizing your data operations.

If you’re prepared to enhance your data processes, sign up for Estuary Flow or get in touch with us for personalized assistance.
