Data is essential for modern businesses, and turning it into meaningful information is crucial to succeed in today's data-driven world. But how can businesses efficiently handle vast data sets? The solution is found in data transformation and automation tools. These tools automate the data transformation process, allowing businesses to process large volumes of data quickly.

In this guide, we will dive into what data transformation is and explore the top 10 data transformation tools available in the industry to help streamline your data processes.

What is Data Transformation?

Data transformation is the process of converting data from one format to another so that it can be used effectively for analysis and decision-making. This involves tasks such as cleaning, filtering, validating, and combining data from different sources. The ultimate goal is to ensure that the transformed data is accurate, consistent, and relevant for the intended use.

The data transformation process involves the following key steps:

- Data Discovery: This involves identifying and collecting relevant data from different sources such as databases, spreadsheets, and files. Explore top Data Discovery Tools that can help you streamline this process.

- Data Mapping: Once the data is collected, it needs to be mapped to ensure compatibility with the desired format for analysis. This step involves identifying relationships between different data elements and creating a map to transform them into the desired format.

- Code Generation: After mapping the data, the next step is to create executable code to convert the input data into the desired output format.

- Code Execution: Once the code is generated and tested, it can be executed on the input data to transform it into the desired format.

- Data Review: The transformed data is then reviewed to ensure it is accurate, consistent, and appropriate for the intended use. This step involves data profiling, quality checks, and validation.

- Data Delivery: Finally, the transformed data is sent to the target system, where it can be used for analysis and decision-making.
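To make these steps concrete, here is a minimal Python sketch of a mapping-driven transformation. Everything in it is hypothetical: the sample rows, the field names, and the `build_transform` and `review` helpers are invented for illustration and do not come from any particular tool.

```python
from typing import Any, Callable

# Discovery: hypothetical source rows, as they might arrive from a CSV export.
source_rows = [
    {"cust_id": "17", "full_name": "  Ada Lovelace ", "signup": "2024-01-05"},
    {"cust_id": "18", "full_name": "Grace Hopper", "signup": "2024-02-11"},
]

# Mapping: target field -> (source field, conversion function).
FIELD_MAP: dict[str, tuple[str, Callable[[str], Any]]] = {
    "customer_id": ("cust_id", int),
    "name": ("full_name", str.strip),
    "signup_date": ("signup", str),
}


def build_transform(field_map):
    """Code generation: turn the mapping into an executable function."""
    def transform(row):
        return {
            target: convert(row[source])
            for target, (source, convert) in field_map.items()
        }
    return transform


def review(rows):
    """Review: return a list of problems found in the transformed rows."""
    problems = []
    for i, row in enumerate(rows):
        if row["customer_id"] <= 0:
            problems.append(f"row {i}: non-positive customer_id")
        if not row["name"]:
            problems.append(f"row {i}: empty name")
    return problems


transform = build_transform(FIELD_MAP)
transformed = [transform(row) for row in source_rows]  # code execution
issues = review(transformed)                           # data review
target_table = transformed if not issues else []       # delivery (stand-in)
print(target_table)
```

In a real pipeline the delivery step would write to a warehouse or another target system; the list assignment here only marks where that would happen.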

Top 10 Data Transformation Tools in the Industry

Let’s explore the 10 best data transformation tools in the industry, including ETL tools with strong transformation capabilities, robust modeling and analytic tools that integrate with the data warehouse, and BI tools.

1. Estuary Flow

Estuary Flow is one of the best data integration and transformation tools in the market. It offers features for data ingestion, storage, transformation, and materialization, making it an all-in-one platform for managing data pipelines. Estuary Flow is built on top of a streaming broker called Gazette. It can regularly back up data by storing it in GCS or AWS buckets, providing a reliable and fault-tolerant architecture.

If you need to apply a stateful transformation to real-time data in motion, Flow is a great fit. You can write your transformations with simple SQL or TypeScript in a cloud environment built off of Flow’s intuitive web app. The platform takes care of the rest. For an in-depth technical perspective, see Flow’s docs.
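If "stateful transformation" is unfamiliar, the idea is that the output for each event depends on events seen earlier. The sketch below illustrates the concept in plain Python with a running total per account. It is not Flow's API (Flow transformations are written in SQL or TypeScript), and the event shape and the `running_totals` function are assumptions made for the example.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def running_totals(events: Iterable[dict]) -> Iterator[dict]:
    """Yield each event enriched with the running total for its account."""
    totals: dict[str, float] = defaultdict(float)  # state carried across events
    for event in events:
        totals[event["account"]] += event["amount"]
        yield {**event, "running_total": totals[event["account"]]}


# Hypothetical events arriving one at a time.
stream = [
    {"account": "a", "amount": 10.0},
    {"account": "b", "amount": 5.0},
    {"account": "a", "amount": 2.5},
]

results = list(running_totals(stream))
for result in results:
    print(result)
```

A stateless transformation, by contrast, could process each event without the `totals` dictionary.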

Key features of Estuary Flow

- Automation: Flow automates schema management and data deduplication, eliminating the need for scheduling or orchestration tools.

- Scalability: Flow can handle active workloads at up to 7GB/s change data capture (CDC) from databases of any size.

- Accuracy & Control: Users can customize their pipelines with built-in schema controls to ensure accuracy and consistency. The platform offers idempotent, exactly-once semantics.

- Real-time Database Replication: Flow can create exact copies of data in real time. It supports databases of 10 TB+ storage.

- Pre-Built Connectors: Flow provides over 200 pre-built connectors for various data sources and destinations, making it easy to integrate with different systems.

- Streaming ETL: Flow provides real-time ETL (extract, transform, load) capabilities, ensuring that the data in the warehouse is always up-to-date and readily available.
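Terms like change data capture, deduplication, and idempotency can feel abstract, so here is a small conceptual sketch in Python. It applies a stream of change events to an in-memory replica and skips any event that is delivered twice, so replaying the stream leaves the replica unchanged. This is an illustration only, not how Flow implements these guarantees; the event format and the `apply_change` helper are hypothetical.

```python
def apply_change(replica: dict, applied: set, event: dict) -> bool:
    """Apply one change event; return False if it was already applied."""
    if event["event_id"] in applied:
        return False  # duplicate delivery: skip so the result stays the same
    if event["op"] == "delete":
        replica.pop(event["key"], None)
    else:  # "insert" and "update" are both treated as upserts
        replica[event["key"]] = event["row"]
    applied.add(event["event_id"])
    return True


# Hypothetical change events captured from a source table.
events = [
    {"event_id": 1, "op": "insert", "key": 1, "row": {"name": "Ada"}},
    {"event_id": 2, "op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"event_id": 2, "op": "update", "key": 1, "row": {"name": "Ada L."}},  # redelivered
    {"event_id": 3, "op": "insert", "key": 2, "row": {"name": "Grace"}},
    {"event_id": 4, "op": "delete", "key": 2, "row": None},
]

replica, applied = {}, set()
outcomes = [apply_change(replica, applied, event) for event in events]
print(replica)   # the replica after all events
print(outcomes)  # which deliveries were actually applied
```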

Pricing

Estuary Flow offers three pricing tiers—Free, Cloud, and Enterprise. Please refer to Estuary Flow pricing details for more information. Check out the Estuary Flow pricing calculator to estimate the cost based on your specific needs.

There's no credit card required to sign up: you can start for free!

2. IBM DataStage

IBM DataStage is a robust data transformation tool for data movement and transformation tasks. It supports both ETL and ELT patterns, delivering transformed data to various destinations such as data warehouses, web services, and messaging systems. With a graphical framework, IBM DataStage provides an intuitive way to develop jobs and manage data.

Key features of IBM DataStage

- Full Spectrum of Data and AI Services: IBM DataStage offers a range of data and AI services to manage the data and analytics lifecycle on the IBM Cloud Pak for Data platform. These services include data science, event messaging, data virtualization, and data warehousing.

- Automated Delivery Pipelines for Production: Automates continuous integration/continuous delivery (CI/CD) job pipelines from development to testing and production. This helps to reduce development costs.

- Distributed Data Processing: IBM DataStage allows users to execute cloud runtimes remotely wherever the data resides while maintaining data sovereignty and minimizing costs.

Pricing

IBM DataStage pricing is based on the computing resources your jobs consume per hour of runtime.

3. Matillion

Matillion is a cloud-based tool that helps you transform your data quickly. It provides a simple graphical interface to connect to different data sources, load data into your cloud warehouse, and transform data as you like. Matillion also offers the flexibility to write Python code for advanced transformation tasks. This tool is ideal for users in small and medium-sized businesses.

Key features of Matillion

- Pre-Built Connectors: Provides over 150 pre-built connectors for various data sources and destinations, making it easy to integrate with different systems.

- GUI: Offers a graphical user interface to create orchestration jobs and sophisticated ETL pipelines. This enables you to manage your data efficiently.

- Custom Python Scripts: Uses custom Python scripts for complex transformations, allowing you to customize your data processing as needed.

Pricing

Matillion's pricing starts at $1,000 per month for 500 credits, with each credit representing one virtual core-hour. Since each task uses two cores per hour, costs can quickly increase, especially with more tasks and resources, making it an expensive choice for larger data workloads.
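To see how quickly credits add up, here is a rough back-of-the-envelope calculation in Python. It uses only the figures quoted above ($1,000 for 500 credits, two cores per task-hour). The example workload is hypothetical, and the sketch makes no assumption about what Matillion charges once the included credits are used up.

```python
MONTHLY_PRICE_USD = 1000
CREDITS_INCLUDED = 500
CORES_PER_TASK = 2  # per the figures above: each task uses two cores per hour

price_per_credit = MONTHLY_PRICE_USD / CREDITS_INCLUDED   # dollars per core-hour
cost_per_task_hour = price_per_credit * CORES_PER_TASK    # dollars per task-hour
task_hours_included = CREDITS_INCLUDED / CORES_PER_TASK   # task-hours per month


def monthly_credits(tasks: int, hours_per_day: float, days: int = 30) -> float:
    """Credits consumed by `tasks` tasks each running `hours_per_day` hours daily."""
    return tasks * CORES_PER_TASK * hours_per_day * days


# Hypothetical workload: 4 tasks, each running 3 hours a day.
needed = monthly_credits(tasks=4, hours_per_day=3)

print(f"Price per credit: ${price_per_credit:.2f}")
print(f"Cost per task-hour: ${cost_per_task_hour:.2f}")
print(f"Task-hours included: {task_hours_included:.0f}")
print(f"Credits needed: {needed:.0f} (included: {CREDITS_INCLUDED})")
```

Under these figures, the entry plan covers 250 task-hours a month, and the example workload of 4 tasks at 3 hours a day would already need more credits than the plan includes.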

Also read: Top Matillion Alternatives

4. Datameer

Datameer is a SaaS data transformation solution explicitly designed for Snowflake Cloud. It provides end-to-end data lifecycle management, including discovery, transformation, deployment, and documentation. You can manipulate datasets using SQL, No Code, or both, making it suitable for both SQL-savvy teams and those who prefer a no-code interface.

Key features of Datameer

- Data Catalog: Built-in data catalog with quick access to documentation and asset metadata for easy movement.

- Advanced Data Pipelines: Offers advanced capabilities for versioning, deploying, and monitoring your work. It enables you to use a developer’s capabilities without writing any code.

- Full Access: Provides complete control of metadata, including tags, descriptions, and attributes. It also includes rigorous data lineage and audit trails.

Pricing

Datameer offers a 14-day free trial, but there are no pricing details on the website. The Datameer team can prepare a custom quote.

5. dbt (Data Build Tool)

dbt is a command-line data transformation tool designed for SQL and Python-proficient data analysts and engineers. It follows software engineering best practices such as portability, modularity, continuous integration and delivery, and documentation. dbt integrates with cloud data warehouses like BigQuery, Snowflake, and Redshift, as well as local databases.

For robust data analytics beyond ETL — on the back end of the warehouse — dbt is an industry favorite.

Key features of dbt

- SQL-based Data Transformation: Uses SQL code to build and manage data models, test data quality, and document work, enabling easy integration with SQL-supporting data sources.

- Automatic Dependency Graph Generation: Automatically generated dependency graphs help you track data flow through the pipeline and execute each step in the correct order.

- Scheduling, Logging, and Alerting: Features like scheduling, logging, and alerting enable pipeline automation and issue notification.
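The dependency graph is easier to picture with an example. In dbt, a model refers to another model with `{{ ref('model_name') }}`, and those references determine the run order. The Python sketch below imitates that idea with a regular expression and the standard library's `graphlib`. It is a simplified illustration, not dbt's actual implementation, and the four model names and their SQL are made up.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models: name -> SQL text containing dbt-style ref() calls.
models = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_orders": """
        select c.id, count(o.id) as order_count
        from {{ ref('stg_customers') }} c
        left join {{ ref('stg_orders') }} o on o.customer_id = c.id
        group by c.id
    """,
    "top_customers": (
        "select * from {{ ref('customer_orders') }} where order_count > 10"
    ),
}

# Matches {{ ref('name') }} or {{ ref("name") }} and captures the name.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")

# Each model maps to the set of models it depends on.
graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}

# static_order() yields every model after all of its dependencies.
run_order = list(TopologicalSorter(graph).static_order())
print(run_order)
```

The two staging models have no dependencies, so either may come first; what matters is that `customer_orders` runs after both and `top_customers` runs last.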

Pricing

dbt offers a free-forever tier that includes one developer seat. The paid plans start at $100/developer seat/month. For enterprise plans, you must contact the dbt team.

6. Denodo

Denodo is one of the best data integration and transformation tools that enables you to transform data without physically moving it. It supports structured and unstructured sources. Denodo provides flexible options for coding data transformations, including SQL and other services. Alternatively, you can use a graphical interface to create virtual views that combine data from various sources. Denodo is suitable for both business users and data experts.
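The idea of a virtual view, a query that combines sources without copying their data, can be sketched with Python's built-in `sqlite3` module. This is only an analogy: SQLite stands in for a virtualization layer, two tables in one database stand in for two separate source systems, and all table and column names are hypothetical. It does not use Denodo's own tooling.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Two tables standing in for two separate source systems.
    create table crm_customers (id integer primary key, name text);
    create table billing_invoices (customer_id integer, amount real);
    insert into crm_customers values (1, 'Ada'), (2, 'Grace');
    insert into billing_invoices values (1, 120.0), (1, 30.0), (2, 75.5);

    -- The view stores only the query, not a copy of the data.
    create view customer_revenue as
        select c.name as name, sum(i.amount) as revenue
        from crm_customers c
        join billing_invoices i on i.customer_id = c.id
        group by c.id, c.name;
""")


def revenue_by_customer() -> dict:
    """Query the view and return {customer name: total revenue}."""
    rows = conn.execute("select name, revenue from customer_revenue order by name")
    return dict(rows.fetchall())


before = revenue_by_customer()
conn.execute("insert into billing_invoices values (2, 24.5)")  # source changes
after = revenue_by_customer()  # the view reflects it with no reload step

print(before)
print(after)
```

Because the view is evaluated at query time, a change in a source table shows up immediately, which is the property that lets a virtualization tool transform data without physically moving it.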

Key features of Denodo

- Support for Various Data Sources: Denodo supports various data sources, including flat files, cloud-based databases, and on-premises databases. This allows users to access and transform data from various sources in a single platform.

- Meets Security/Privacy Requirements: Denodo offers centralized control of data query executions, ensuring data security and privacy. It complies with various regulations and standards, such as GDPR and HIPAA.

- Legacy Replacement and Cloud Migration: Denodo simplifies data migration from legacy systems to cloud-based platforms. It supports multi-cloud deployments, reducing the complexity of managing data across different cloud platforms.

Pricing

Denodo offers a 30-day free trial. After the free trial, the platform is priced at $6.27/hour.

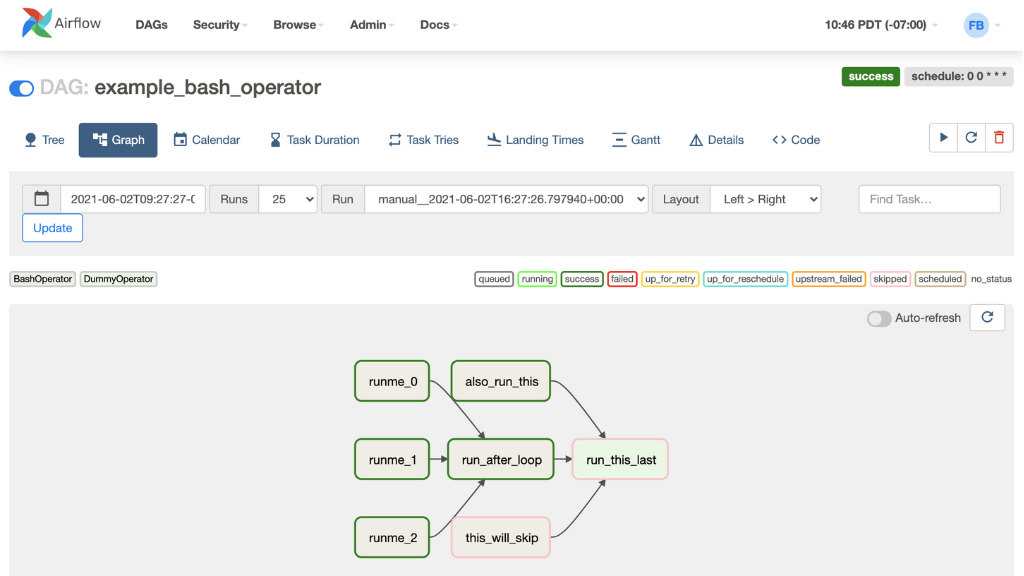

7. Apache Airflow

Apache Airflow is an open-source workflow orchestration platform that helps you author, schedule, and monitor your data engineering pipelines. Because workflows are defined in Python, it simplifies complex data flows and works well alongside Python ETL tools.

Key features of Apache Airflow

- User-Friendly Workflow Management: Apache Airflow monitors, schedules, and manages workflows using an intuitive UI. You stay in control by checking the status and logs of completed and ongoing tasks.

- Plug-and-Play Integrations: Provides plug-and-play operators for popular platforms like Amazon Web Services, Google Cloud Platform, and Microsoft Azure.

- DAG: Organize, monitor, and schedule complicated data operations using directed acyclic graphs (DAG), a topological representation of data flows inside the system.
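Two properties make a DAG useful for scheduling: tasks with no unmet dependencies can run at the same time, and a cycle makes the workflow impossible to order. The sketch below shows both using Python's standard `graphlib` module. It is plain Python rather than Airflow's own API, and the task names and the `execution_waves` helper are invented for the example.

```python
from graphlib import CycleError, TopologicalSorter


def execution_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave has all dependencies met."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run in parallel
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical pipeline: task -> set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "load": {"join"},
}
waves = execution_waves(pipeline)
print(waves)

# A graph where two tasks depend on each other cannot be scheduled.
try:
    execution_waves({"a": {"b"}, "b": {"a"}})
    cycle_rejected = False
except CycleError:
    cycle_rejected = True
print(cycle_rejected)
```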

Pricing

Since Apache Airflow is an open-source project, it is completely free to use.

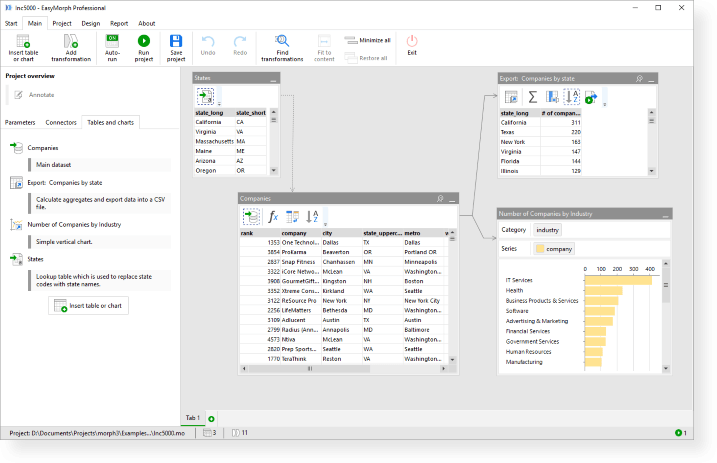

8. Easymorph

Easymorph is a data preparation and ETL tool designed to simplify the process for non-technical individuals. The platform provides a simple and visual UI for automating complex data transformations, enabling easy and controlled self-service for data organization. Easymorph enables you to extract data from multiple sources, including databases, spreadsheets, emails, and web API endpoints.

Key features of Easymorph

- No-Code Automation: Easymorph allows you to automate your ETL processes without any programming or SQL knowledge. Visual workflows can be used to automate data retrieval, transformation, and visualization.

- Built-in Actions: The program includes over 150 built-in actions for visual data preparation and ETL. These actions can be used to pull data from various sources, automate and alter visual data, and schedule ETL processes.

- Data Transformation: Easymorph allows you to transform your data efficiently without having to deal with SQL or custom scripts. The program includes native support for data transformations using an intuitive and visual interface.

Pricing

Easymorph has two pricing tiers—Free and Professional. The Professional tier costs $75 per month.

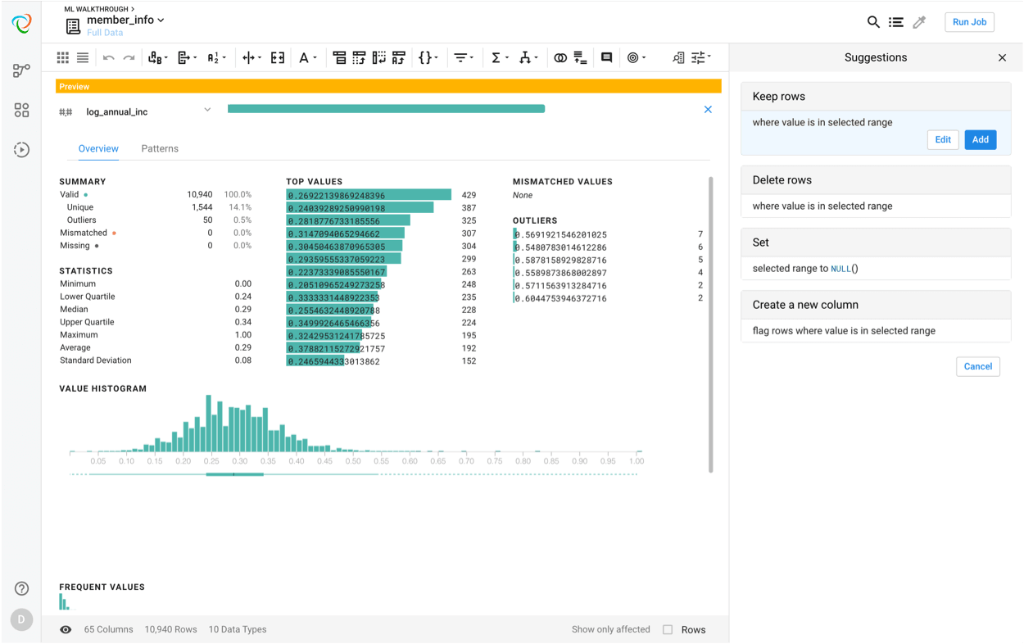

9. Designer Cloud by Trifacta

Designer Cloud by Trifacta is a powerful big data transformation tool that allows data engineers to clean, prepare, transform, and report on unstructured data with ease. With Designer Cloud, you can generate your data pipelines using your preferred tool like SQL, Spark, Python, or even dbt. It also offers built-in governance to ensure the quality and reliability of your data pipelines, making it a reliable choice for any organization.

Key features of Designer Cloud by Trifacta

- Data Wrangling: The platform provides an intuitive visual interface for data wrangling tasks such as data cleaning, transformation, and aggregation, which helps accelerate the time-to-insight.

- Multi-Cloud Support: Designer Cloud can be deployed on all major cloud platforms, including Google Cloud Platform, Amazon Web Services, and Microsoft Azure.

- Scalability: Designer Cloud is designed to be highly scalable, ensuring that performance is never an issue, even with large amounts of data.

Pricing

Designer Cloud by Trifacta offers three pricing tiers: Starter, Professional, and Enterprise. Pricing starts at $80 per user/month for the Starter plan.

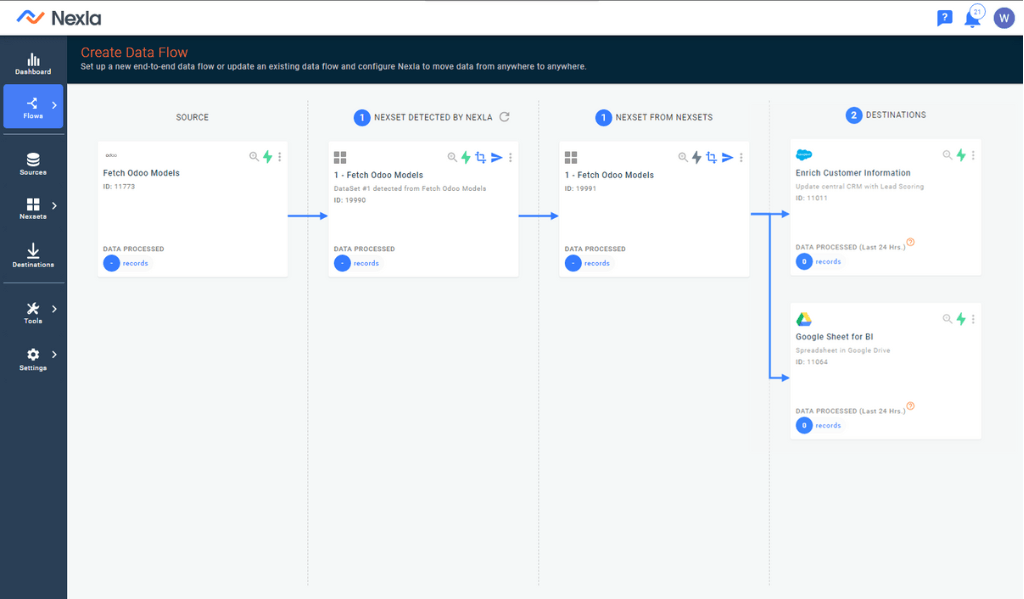

10. Nexla

Nexla is a data transformation tool that simplifies data preparation with its no-code interface. It allows business users to conduct data transformation with its extensive library of transformation functions. Nexla provides automated versioning and logging, so you can see how data sets have changed and who made those changes, which simplifies compliance and increases data reliability.

Key features of Nexla

- All-in-One Integration Process: Nexla combines various processes such as streaming, ETL, ELT, reverse ETL, and API integration into a single unified process.

- Data Quality and Governance: Nexla offers data quality and governance features to ensure your data is accurate and compliant. This includes schema validation, data type validation, and data profiling.

- Real-Time Data Processing: Nexla provides real-time data processing capabilities for streaming and batch data. This allows you to analyze and act on data insights as they happen, leading to better business outcomes.
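For readers new to data quality checks, the sketch below shows what schema validation, data type validation, and a very basic form of profiling can look like in plain Python. It is a generic illustration, not Nexla's interface; the expected schema, the sample records, and the `validate` and `profile` functions are all hypothetical.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"order_id": int, "email": str, "total": float}


def validate(record: dict) -> list[str]:
    """Return schema and type errors for one record (nulls are allowed)."""
    errors = []
    for field, expected_type in EXPECTED_SCHEMA.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif record[field] is not None and not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return errors


def profile(records: list[dict]) -> dict[str, int]:
    """Count, per field, how many records have it missing or null."""
    return {
        field: sum(1 for record in records if record.get(field) is None)
        for field in EXPECTED_SCHEMA
    }


records = [
    {"order_id": 1, "email": "ada@example.com", "total": 19.99},
    {"order_id": "2", "email": None, "total": 5.0},  # wrong type, null email
    {"order_id": 3, "total": 12.5},                  # email field missing
]

report = [validate(record) for record in records]
null_counts = profile(records)
print(report)
print(null_counts)
```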

Pricing

Nexla offers a free trial for 14 days. After the trial period, pricing is based on the number of active data sources and the amount of data processed per month.

Conclusion: Choose the Right Data Transformation Tool for 2024

The world of data transformation tools is constantly evolving and improving, with new and innovative solutions emerging every year. The 10 tools highlighted in this discussion represent some of the best options available in 2024. Each tool offers unique features and capabilities to help organizations transform data with greater speed, accuracy, and efficiency. So, evaluate your needs carefully, and choose the tools that best fit your specific goals and objectives.

Are you looking for a data transformation tool that can assist in cleaning, manipulating, and formatting your data for more effective analysis? Why not try Estuary Flow? To get started for free, register here!

FAQs on Data Transformation Tools

1. What is the most cost-effective data transformation tool?

While tools like Apache Airflow and Easymorph offer free versions, Estuary Flow provides a powerful and affordable real-time transformation solution with flexible pricing options. Its pay-as-you-grow model, combined with automation features, makes it a cost-effective choice for both small and large enterprises.

2. Which data transformation tool is best for real-time processing?

Estuary Flow excels in real-time data transformation, offering change data capture (CDC) with minimal latency. Its real-time database replication and built-in schema management make it ideal for users requiring instant access to transformed data. It also supports 200+ connectors for seamless integration.

3. Can I automate data transformations without coding?

Yes, tools like Easymorph and Estuary Flow allow you to perform data transformations with minimal coding. Estuary enables users to write transformations using simple SQL or TypeScript, but its automation features significantly reduce manual coding efforts, making it accessible for non-technical users as well.
