Most modern applications require complex search capabilities, such as full-text search across large datasets, filtering based on multiple criteria, or near real-time analysis. DynamoDB is well suited to low-latency, high-throughput applications, but its query model is built around key-based lookups (Query operations on keys and Scan operations with filter expressions). That is not enough for the complex search queries of modern applications.

A DynamoDB stream to ElasticSearch pipeline can help mitigate this drawback. This involves allowing DynamoDB to remain as the primary data storage while ElasticSearch becomes the dedicated search and analytics index. Such a combination unlocks the ability to provide lightning-fast search results that are tailored toward user queries, derive valuable insights in real-time, and ultimately create a more engaging and data-driven user experience.

In this post, we’ll look at two reliable methods to stream data from DynamoDB to ElasticSearch.

What Is DynamoDB?

DynamoDB is a fully managed NoSQL database service by Amazon Web Services (AWS), suitable for all types of applications. The service is also known for its consistent performance and scalability.

With its serverless nature, DynamoDB scales automatically with the application’s needs, ensuring efficient performance and data handling at any scale. It eliminates the need to manage hardware or software, allowing developers to focus on building their applications. Additionally, DynamoDB encrypts data at rest, which helps protect stored data.

Here are some key features of DynamoDB.

- Performance: DynamoDB handles over 10 trillion requests per day and can support tables of any size with automated scaling. This ensures consistent single-digit millisecond response times and up to 99.999% availability.

- Flexible Data Models: It supports both key-value and document data models, allowing for a flexible schema. With no servers to manage and a pay-as-you-go pricing model, DynamoDB auto-scales and effectively manages tables to maintain performance.

- ACID Transactions: DynamoDB supports atomic, consistent, isolated, and durable (ACID) transactions, enabling complex business logic for critical workloads.

- Global Tables: Global tables provide active-active replication across AWS Regions, improving application resiliency and reducing latency for globally distributed applications.

What Is ElasticSearch?

ElasticSearch is an open-source, distributed search and analytics engine that is built on top of Apache Lucene. It is widely recognized for its comprehensive features, scalability, and ease of use.

ElasticSearch speeds up search operations by looking terms up in an index instead of scanning the text directly. It organizes data into JSON documents rather than the tables of a traditional relational database. This structure allows for complex searches, including full-text searches across massive amounts of data, with near-instant response times. ElasticSearch is commonly used for log and event data analysis, data exploration, and machine learning.
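To make the idea of index-based lookup concrete, here is a minimal sketch of an inverted index in plain Python. It is a toy illustration only, not how ElasticSearch or Lucene is actually implemented; the sample documents and the function names `build_inverted_index` and `search` are made up for this example.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase token to the set of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return the IDs of documents that contain every token in the query."""
    tokens = query.lower().split()
    if not tokens:
        return set()
    # Start from the first token's postings and intersect with the rest
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


# Hypothetical sample documents
docs = {
    "1": "fast search across large datasets",
    "2": "low latency key value store",
    "3": "full text search with low latency",
}
index = build_inverted_index(docs)
matches = search(index, "low latency search")
```

A query only touches the entries for its own tokens, so the cost depends on the query terms rather than on the total amount of text stored.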

Let’s look into some of the key features of ElasticSearch.

- Distributed Architecture: ElasticSearch is built to scale horizontally, allowing you to increase capacity by adding more nodes to a cluster. Its distributed nature supports automatic rebalancing of shards across data nodes, ensuring high availability and performance.

- Real-time Analytics: It provides near real-time search and analytics capabilities. Newly indexed data becomes searchable after the next index refresh, which happens every second by default, making ElasticSearch suitable for time-sensitive applications that require up-to-date information.

- Flexible Data Ingestion: ElasticSearch supports various data types, including text, numbers, dates, and geo-spatial data. This makes ElasticSearch a powerful tool for diverse use cases.
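As a rough illustration of the data types mentioned above, the following sketch builds an index mapping that declares text, numeric, date, and geo-spatial fields. The field names (`title`, `price`, `created_at`, `location`) are hypothetical, and the typeless mapping layout shown here assumes Elasticsearch 7 or later.

```python
import json


def build_index_mapping():
    """Return an index-creation body with one field per common data type."""
    return {
        "mappings": {
            "properties": {
                "title": {"type": "text"},          # analyzed for full-text search
                "price": {"type": "float"},         # numeric range queries
                "created_at": {"type": "date"},     # date math and histograms
                "location": {"type": "geo_point"},  # latitude/longitude queries
            }
        }
    }


# JSON body that could be sent when creating the index
mapping_body = json.dumps(build_index_mapping(), indent=2)
```

Declaring types up front is optional, since ElasticSearch can infer them dynamically, but an explicit mapping avoids surprises such as a geo-point being indexed as plain numbers.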

How to Stream Data From DynamoDB to ElasticSearch

There are two methods you can use to stream data from DynamoDB to ElasticSearch:

- Method 1: Using a No-Code SaaS Tool Like Estuary Flow for Streaming DynamoDB to ElasticSearch

- Method 2: Using AWS Lambda for DynamoDB Stream to ElasticSearch

Method 1: Using a No-Code SaaS Tool Like Estuary Flow for Streaming DynamoDB to ElasticSearch

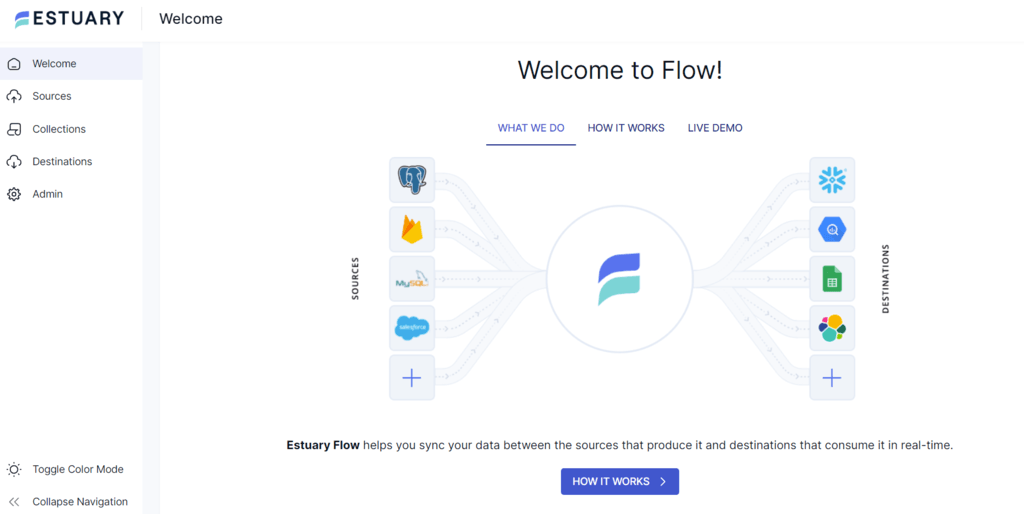

You can easily stream data from DynamoDB to ElasticSearch with user-friendly no-code ETL (extract, transform, load) tools, even without much technical expertise. Estuary Flow is one of the best no-code ETL tools, and it automates the DynamoDB-to-ElasticSearch streaming pipeline.

Before you start setting up the streaming pipeline for DynamoDB to ElasticSearch integration, here are the prerequisites you need to ensure are in place.

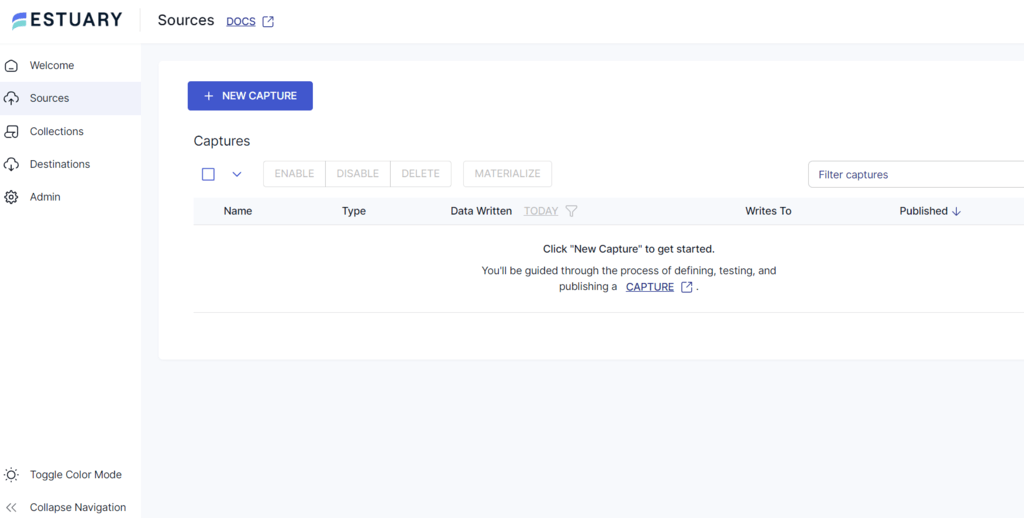

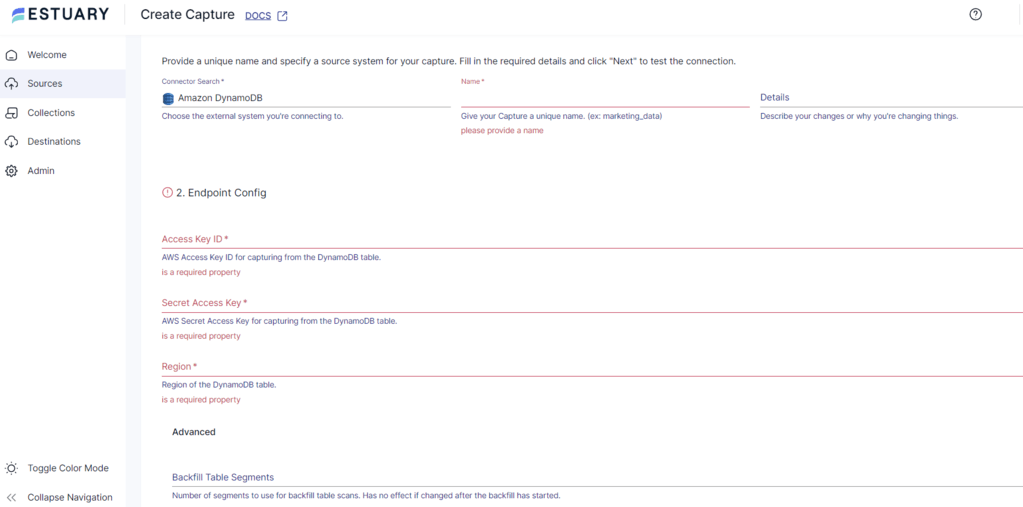

Step 1: Configure DynamoDB as the Source

- Log in to your Estuary Flow account.

- Click on the Sources tab on the left navigation pane.

- Click on the + NEW CAPTURE button.

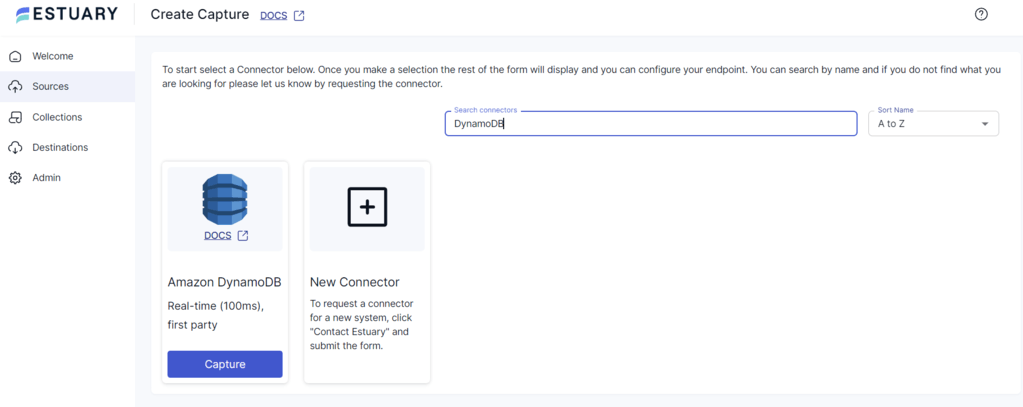

- Next, search for DynamoDB using the Search connectors field and click the connector’s Capture button to begin configuring it as the data source.

- On the Create Capture page, enter the specified details like Name, Access Key ID, Secret Access Key, and Region.

- After filling in the required fields, click on NEXT > SAVE AND PUBLISH. This will capture data from DynamoDB into Estuary Flow collections.

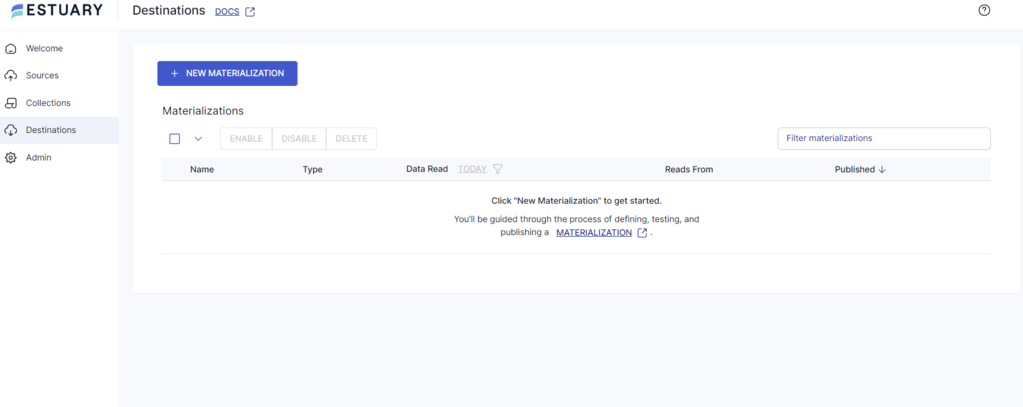

Step 2: Configure ElasticSearch as the Destination

- Once the source is set, click MATERIALIZE COLLECTIONS in the pop-up window or the Destinations option on the dashboard.

- Click on the + NEW MATERIALIZATION button on the Destinations page.

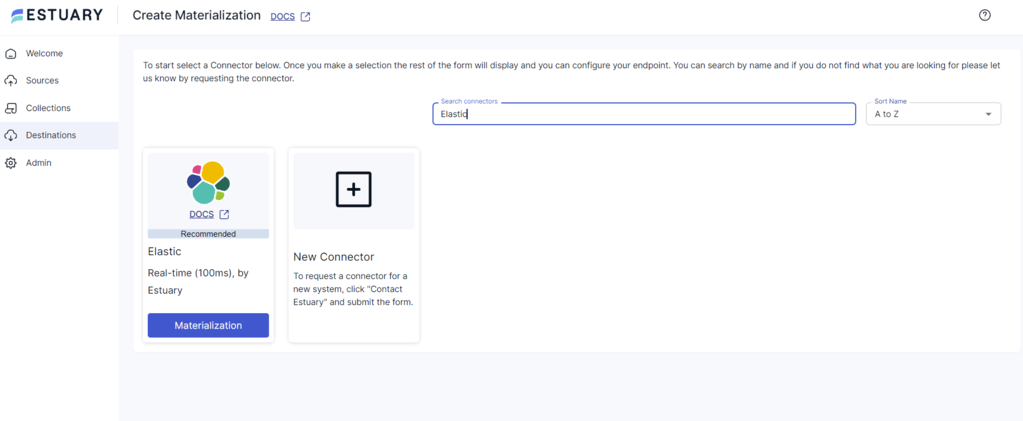

- Type Elastic in the Search connectors box and click on the Materialization button of the connector when you see it in the search results.

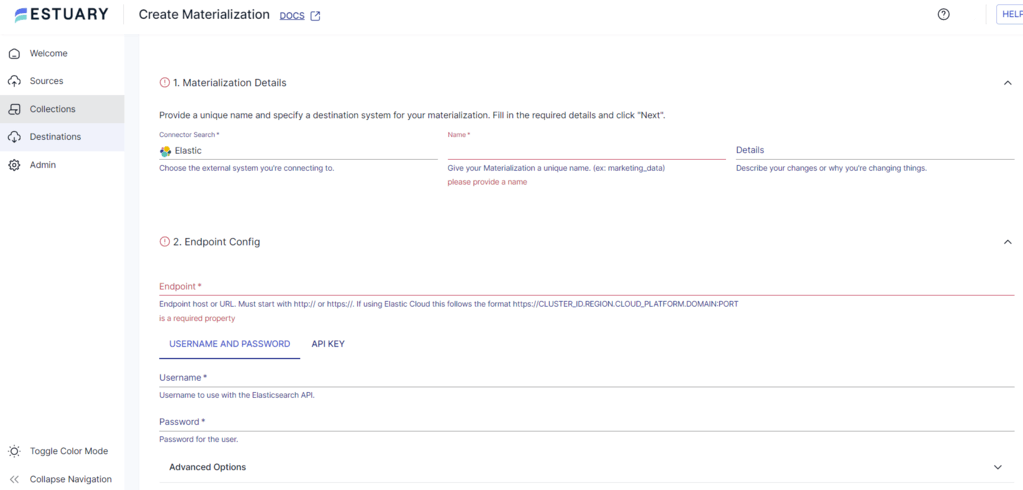

- On the Create Materialization page, enter the details like Name, Endpoint, Username, Password, and Index Replicas.

- If your collection of data from DynamoDB isn’t filled automatically, you can add it manually using the Link Capture button in the Source Collections section.

- Finally, click on NEXT > SAVE AND PUBLISH to materialize data from your Flow collections to ElasticSearch.

- With the source and destination configured, Estuary Flow will begin loading data from the Flow collections to ElasticSearch.

Benefits of Using Estuary Flow

Here are some of the benefits of Estuary Flow.

- No-code Configuration: Powerful no-code tools like Estuary Flow are designed to be user-friendly and do not require extensive technical expertise to configure the source and destination. Its library of over 200 connectors lets you set up a pipeline in just a few clicks.

- Real-time Data Processing With CDC: Estuary Flow leverages Change Data Capture (CDC) for real-time data processing. This helps maintain data integrity and reduces latency.

- Scalability: Estuary Flow is designed to handle large data flows and supports up to 7 GB/s. This throughput lets the pipeline scale as data usage in DynamoDB and ElasticSearch increases.

- Efficient Data Transformations: Estuary supports TypeScript and SQL transformations. By leveraging TypeScript, Estuary can prevent common pipeline failures and enable fully type-checked data pipelines, which is crucial for ensuring data integrity during migration. In addition, the platform’s native SQL transformations provide an easy-to-use alternative for reshaping, filtering, and rejoining data in real time, which is essential for maintaining data consistency and accuracy.

Method 2: Using AWS Lambda for DynamoDB Stream to ElasticSearch

Streaming data from DynamoDB to ElasticSearch can significantly enhance your application’s search capabilities. Here are the detailed steps involved in this method that uses AWS Lambda for the integration.

Step 1: Create Your DynamoDB Table With Streams Enabled

- Create a DynamoDB table in the AWS Management Console.

- Enable DynamoDB Streams on the table and set the stream view type to New image (NEW_IMAGE in the API), so that each stream record carries the item as it appears after the change.
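If you prefer to script this step instead of using the console, the same setup can be sketched with boto3. This is a minimal sketch under a few assumptions: the table name is hypothetical, on-demand billing is an arbitrary choice, and the partition key is a string attribute named `id`, matching the key that the sample Lambda function later in this post reads.

```python
def build_create_table_params(table_name):
    """Build create_table arguments for a table with a NEW_IMAGE stream."""
    return {
        "TableName": table_name,
        # Assumption: a single string partition key named "id"
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",
        "StreamSpecification": {
            "StreamEnabled": True,
            "StreamViewType": "NEW_IMAGE",
        },
    }


def create_table(table_name, region="us-east-1"):
    """Create the table and return the ARN of its stream, if reported."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("dynamodb", region_name=region)
    response = client.create_table(**build_create_table_params(table_name))
    return response["TableDescription"].get("LatestStreamArn")


params = build_create_table_params("products")  # hypothetical table name
```

Calling `create_table("products")` requires AWS credentials with permission to create tables. The returned stream ARN is what the Lambda trigger in Step 5 points at.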

Step 2: Create an IAM Role for Lambda Execution

- Your Lambda function needs permission to read from the DynamoDB stream and write to your ElasticSearch domain.

- Create an IAM role whose policy grants permissions for Amazon ElasticSearch Service (ES), DynamoDB Streams, and Lambda execution (CloudWatch Logs).

Here’s an example:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "es:ESHttpPost",
            "es:ESHttpPut",
            "es:ESHttpDelete",
            "dynamodb:DescribeStream",
            "dynamodb:GetRecords",
            "dynamodb:GetShardIterator",
            "dynamodb:ListStreams",
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": "*"
        }
      ]
    }
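The example policy applies to every resource in the account. As a hedged sketch of a tighter setup, the code below builds two documents: the trust policy that lets the Lambda service assume the role, and a permissions policy scoped to one stream and one domain. The function names are invented for this example, and the caller supplies the ARNs. `es:ESHttpDelete` is included on the assumption that the function issues delete requests for removed items, as the sample function in Step 4 does.

```python
import json


def build_trust_policy():
    """Trust policy that allows the Lambda service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_scoped_policy(stream_arn, domain_arn):
    """Permissions policy limited to a single stream and a single domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["es:ESHttpPost", "es:ESHttpPut", "es:ESHttpDelete"],
                "Resource": f"{domain_arn}/*",
            },
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:DescribeStream",
                    "dynamodb:GetRecords",
                    "dynamodb:GetShardIterator",
                ],
                "Resource": stream_arn,
            },
            # ListStreams is not tied to one stream, so it stays unscoped
            {"Effect": "Allow", "Action": "dynamodb:ListStreams", "Resource": "*"},
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
        ],
    }


# Hypothetical ARNs, for illustration only
policy = build_scoped_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/products/stream/2024-01-01T00:00:00.000",
    "arn:aws:es:us-east-1:123456789012:domain/ddb-to-es",
)
policy_json = json.dumps(policy, indent=2)
```

Scoping resources this way limits what the function can touch if its code or credentials are ever misused.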

Step 3: Create an ElasticSearch Domain

- In the AWS Management Console, navigate to the ElasticSearch service and create a new domain. This will be the destination for your indexed data.

Note: AWS has transitioned ElasticSearch service to Amazon OpenSearch Service. However, existing domains continue to be referred to as ElasticSearch domains.

- Configure the domain settings as needed, including access policies to allow the Lambda function to post data.

Step 4: Create a Lambda Function

- Create a Lambda function by choosing a runtime (e.g., Python or Node.js).

- Write the function code to process records from DynamoDB streams and post them to ElasticSearch.

Here is sample code in Python.

    import boto3
    import requests
    from requests_aws4auth import AWS4Auth

    region = 'us-east-1'
    service = 'es'
    credentials = boto3.Session().get_credentials()
    awsauth = AWS4Auth(credentials.access_key, credentials.secret_key,
                       region, service, session_token=credentials.token)

    # The Amazon ES domain endpoint, including https://
    host = 'https://search-ddb-to-es-r7dcdoy4caeoklst3yseumqmre.us-east-1.es.amazonaws.com'
    index = 'lambda-index'
    # Custom mapping types are deprecated in Elasticsearch 7 and removed in 8
    doc_type = '_doc'
    url = host + '/' + index + '/' + doc_type + '/'
    headers = {"Content-Type": "application/json"}


    def handler(event, context):
        count = 0
        for record in event['Records']:
            # Use the table's primary key as the Elasticsearch document ID
            doc_id = record['dynamodb']['Keys']['id']['S']

            if record['eventName'] == 'REMOVE':
                r = requests.delete(url + doc_id, auth=awsauth)
            else:
                # NewImage is in DynamoDB JSON, e.g. {"title": {"S": "..."}}
                document = record['dynamodb']['NewImage']
                r = requests.put(url + doc_id, auth=awsauth, json=document, headers=headers)

            # Raise on HTTP errors so Lambda retries the batch instead of
            # silently dropping it; a 404 on delete means it was already gone
            if not (record['eventName'] == 'REMOVE' and r.status_code == 404):
                r.raise_for_status()
            count += 1
        return str(count) + ' records processed.'
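One thing to be aware of: the sample function indexes `NewImage` as-is, and stream records carry items in DynamoDB's typed JSON format (for example `{"price": {"N": "19.99"}}`). If you would rather index plain JSON, a small conversion step can be added. The sketch below is a hand-rolled converter for the common type tags. The helper names are made up for this example, binary types are deliberately left out, and boto3 also ships a `TypeDeserializer` class in `boto3.dynamodb.types` that serves the same purpose.

```python
from decimal import Decimal


def from_dynamodb_json(value):
    """Convert one DynamoDB-typed attribute value into a plain Python value."""
    (type_tag, data), = value.items()  # each value has exactly one type tag
    if type_tag == "S":
        return data
    if type_tag == "N":
        number = Decimal(data)
        # Keep whole numbers as int, everything else as float
        return int(number) if number == number.to_integral_value() else float(number)
    if type_tag == "BOOL":
        return data
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb_json(item) for item in data]
    if type_tag == "M":
        return {key: from_dynamodb_json(item) for key, item in data.items()}
    if type_tag == "SS":
        return list(data)
    if type_tag == "NS":
        return [from_dynamodb_json({"N": item}) for item in data]
    raise ValueError(f"Unsupported DynamoDB type tag: {type_tag}")


def unmarshal_image(image):
    """Convert a whole NewImage mapping into a plain dictionary."""
    return {name: from_dynamodb_json(value) for name, value in image.items()}


# Hypothetical stream image
new_image = {
    "id": {"S": "42"},
    "price": {"N": "19.99"},
    "stock": {"N": "3"},
    "tags": {"L": [{"S": "audio"}, {"S": "wireless"}]},
    "active": {"BOOL": True},
    "meta": {"M": {"note": {"NULL": True}}},
}
document = unmarshal_image(new_image)
```

With this in place, the handler could pass `unmarshal_image(record['dynamodb']['NewImage'])` as the document, so fields are searchable as `price` rather than `price.N`.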

Step 5: Configure The DynamoDB Stream Trigger

- In the Lambda function’s configuration, add a new trigger.

- Select DynamoDB as the trigger type and choose the DynamoDB table created in Step 1.
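The console trigger corresponds to an event source mapping in the Lambda API, so this step can also be scripted. Here is a minimal sketch using boto3's `create_event_source_mapping`. The batch size, retry count, and starting position are illustrative choices rather than recommendations, and the ARN and function name are hypothetical.

```python
def build_event_source_mapping_params(stream_arn, function_name):
    """Build arguments that connect a DynamoDB stream to a Lambda function."""
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        # TRIM_HORIZON starts from the oldest record still in the stream
        "StartingPosition": "TRIM_HORIZON",
        "BatchSize": 100,
        # Stop retrying a failing batch after a few attempts
        "MaximumRetryAttempts": 3,
        # Split a failing batch in two to isolate a bad record
        "BisectBatchOnFunctionError": True,
    }


def create_trigger(stream_arn, function_name, region="us-east-1"):
    """Create the event source mapping and return its identifier."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("lambda", region_name=region)
    response = client.create_event_source_mapping(
        **build_event_source_mapping_params(stream_arn, function_name)
    )
    return response["UUID"]


mapping_params = build_event_source_mapping_params(
    "arn:aws:dynamodb:us-east-1:123456789012:table/products/stream/2024-01-01T00:00:00.000",
    "ddb-to-es",  # hypothetical function name
)
```

Capping retries keeps one bad record from blocking its shard until the record expires, at the cost of skipping that record, so pair it with logging or a failure destination if you cannot afford to lose changes.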

Step 6: Test the Setup

- After the setup, make changes to your Amazon DynamoDB table and verify that the changes are reflected in your ElasticSearch domain.

- You can use Kibana or the ElasticSearch API to query or visualize the data and ensure it matches the changes made in DynamoDB.
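For a quick check through the API, you can send a full-text `match` query to the index's `_search` endpoint. The sketch below only builds the URL and JSON body. The helper name, host, and field name are hypothetical, and the request itself would still need to be signed the same way as in the Lambda function.

```python
import json


def build_search_request(host, index, field, text, size=10):
    """Return the _search URL and JSON body for a full-text match query."""
    url = f"{host.rstrip('/')}/{index}/_search"
    body = {"size": size, "query": {"match": {field: text}}}
    return url, json.dumps(body)


# The sample function indexes NewImage in DynamoDB's typed format,
# so a string attribute named "title" is stored under "title.S"
url, body = build_search_request(
    "https://example-domain.us-east-1.es.amazonaws.com",  # placeholder host
    "lambda-index",
    "title.S",
    "wireless headphones",
)
```

If an item you just wrote to DynamoDB does not show up in the results, check the function's CloudWatch logs first, since indexing errors surface there.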

These are the steps for streaming data from DynamoDB to ElasticSearch using AWS Lambda. However, this method has several limitations.

- DynamoDB Streams 24-hour Processing Limit: A DynamoDB stream retains records for only 24 hours. If the Lambda function fails to process records within this time frame, those records are lost permanently.

- Lambda Function Code and Dependencies: As your data streaming requirements evolve, you’ll need to update your Lambda function to handle schema changes, add error handling, etc., which can add extra operational overhead.

- Technical Expertise: Building custom Lambda functions requires extensive knowledge of both programming and the AWS ecosystem, which can be a barrier for non-technical users.
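One way to reduce the risk of losing records is to tell Lambda which record in a batch failed, so that it retries from that point instead of replaying or dropping the whole batch. The sketch below assumes the trigger has the `ReportBatchItemFailures` function response type enabled. `index_record` stands in for whatever code writes a single record to ElasticSearch, and both function names are invented for this example.

```python
def process_batch(records, index_record):
    """Process stream records in order and report the first failure.

    Stream records are ordered, so processing stops at the first failure:
    Lambda retries the batch starting from the reported sequence number.
    """
    for record in records:
        try:
            index_record(record)
        except Exception:
            sequence_number = record["dynamodb"]["SequenceNumber"]
            return {"batchItemFailures": [{"itemIdentifier": sequence_number}]}
    # An empty list tells Lambda the whole batch succeeded
    return {"batchItemFailures": []}


# Local dry run with a fake indexer that fails on the second record
processed = []


def fake_index_record(record):
    if record["dynamodb"]["SequenceNumber"] == "2":
        raise RuntimeError("simulated indexing failure")
    processed.append(record["dynamodb"]["SequenceNumber"])


sample_records = [{"dynamodb": {"SequenceNumber": str(n)}} for n in (1, 2, 3)]
result = process_batch(sample_records, fake_index_record)
```

In a real handler you would return `process_batch(event['Records'], index_record)` directly. This does not extend the 24-hour retention window, but it does keep one failing record from forcing records that already succeeded to be reprocessed.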

Key Takeaways

Streaming DynamoDB data to ElasticSearch adds full-text search and analytics on top of DynamoDB without giving up its performance and scalability. An AWS Lambda function can implement this, but building and maintaining it is time-consuming, requires extensive technical expertise, and is prone to errors.

Estuary Flow is an excellent solution for those who want an easy and automated way to stream data from DynamoDB to ElasticSearch without the need for extensive technical knowledge. The method you choose depends on your needs and level of expertise.

Estuary Flow provides an extensive and growing list of connectors, robust functionalities, and a user-friendly interface. Sign up today to simplify and automate DynamoDB stream to ElasticSearch.

FAQs

- Can we integrate Elasticsearch with DynamoDB?

Yes, you can integrate Elasticsearch with DynamoDB to enable full-text search queries on your DynamoDB data. AWS provides a plugin called the Amazon DynamoDB Logstash Plugin that makes it easy to set up this integration.

- Is DynamoDB stream a Kinesis stream?

No, DynamoDB Streams and Kinesis Data Streams are separate services, but they have similar APIs. You can use the DynamoDB Streams Kinesis Adapter to process DynamoDB Streams using the Kinesis Client Library (KCL).
