Rigid, siloed application architectures can no longer keep up with today's data-intensive business environments. Event-driven architecture (EDA) examples from leading companies show how industries are adopting flexible, responsive systems to get the real-time insights they need to grow.

Event-driven architectures let you react dynamically to a constantly changing flow of data. With the global microservices architecture market expected to reach $21.61 billion by 2030, companies are quickly catching on to the advantages of this approach for streamlining their business processes.

If everyone else is benefiting from it, why should you miss out? Through different real-world examples, we will see how companies use event-driven architecture to seize opportunities and overcome challenges in an increasingly complex business landscape.

What Is Event-Driven Architecture?

Event-driven architecture (EDA) is a software design pattern or architectural style that focuses on the flow of events within a system or between different systems, often in the form of messages or notifications. Various components of a system communicate and react to events asynchronously, rather than through direct, synchronous method calls. Events can represent various occurrences, changes in state, or triggers within a system.

Event-driven architecture is commonly used in various applications, including:

- Real-time data processing

- Microservices-based systems

- IoT (Internet of Things) applications

- Systems that require high levels of concurrency and responsiveness

For a detailed explanation of event-driven architecture, including its models and components, read our in-depth guide on event-driven architecture basics.

What Are the Components of Event-Driven Architecture?

Let’s explore some of the key components of event-driven architecture to gain a better understanding of how it all works.

Event

An event is a significant occurrence within the system or from an external source that triggers a specific action or response. Events can be categorized as either external events (e.g., user input, sensor data) or internal events (e.g., system notifications, timer events).

Event Source (Publisher)

The event source is the entity that generates or emits events. These sources can be anything from user interfaces and sensors to databases and external services. Event sources publish events to an event bus or message broker.

Event Bus (Message Broker)

The event bus is a central component in event-driven architecture. It acts as a communication channel that distributes events from event sources to event consumers. You can implement an event bus with technologies like Apache Kafka and Gazette, or with cloud-based services like Amazon EventBridge.

Event Consumer (Subscriber)

Event consumers are components or services that subscribe to specific types of events from the event bus. When an event is published on the bus, the relevant consumers receive and process it. Consumers can be microservices, functions, or other parts of the system that need to respond to events.

Event Handler

Event handlers process and respond to events. They are part of the event consumers and contain the business logic to execute when a specific event is received. Event handlers decouple the processing of events from the event source, promoting loose coupling between components.
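To make these roles concrete, here is a minimal in-memory sketch of an event, an event source, an event bus, and two consumers with their handlers. The `Event` and `EventBus` classes, the `order.placed` event type, and the payload fields are all hypothetical names chosen for illustration. A real broker such as Kafka would deliver events asynchronously and durably, which this toy version does not attempt.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """Something that happened. The type and payload names are hypothetical."""
    type: str
    payload: Dict[str, Any] = field(default_factory=dict)


class EventBus:
    """Toy in-memory stand-in for a real broker (synchronous, not durable)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = {}

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event: Event) -> None:
        # The source only knows the bus; it never calls a consumer directly.
        for handler in self._handlers.get(event.type, []):
            handler(event)


bus = EventBus()
shipping_queue: List[str] = []
emails_sent: List[str] = []

# Two independent consumers, each with its own handler for the same event type.
bus.subscribe("order.placed", lambda e: shipping_queue.append(e.payload["order_id"]))
bus.subscribe("order.placed", lambda e: emails_sent.append(e.payload["order_id"]))

# The event source publishes once; the bus fans the event out.
bus.publish(Event("order.placed", {"order_id": "A-1"}))
bus.publish(Event("order.cancelled", {"order_id": "A-2"}))  # no subscribers, ignored
```

Because the publisher depends only on the bus, a third consumer can be added with one more `subscribe` call and no change to the code that publishes.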

Event Router

In more complex event-driven systems, you can have an event router that directs events to their respective event consumers based on predefined rules or routing logic. This component helps manage the flow of events within the system.
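As a rough illustration of rule-based routing, the sketch below pairs predicates with destination names and returns every destination whose rule matches. The rule set, event fields, and destination names (`alerts`, `billing`, `fraud-review`) are assumptions made up for the example, not taken from any particular product.

```python
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Rule = Tuple[Callable[[Event], bool], str]  # (predicate, destination name)


def route(event: Event, rules: List[Rule], default: str = "unrouted") -> List[str]:
    """Return every destination whose predicate matches the event."""
    matches = [destination for predicate, destination in rules if predicate(event)]
    # Events that match no rule go to a default destination instead of vanishing.
    return matches or [default]


# Hypothetical routing rules, evaluated in order.
rules: List[Rule] = [
    (lambda e: e["type"] == "payment.failed", "alerts"),
    (lambda e: e["type"].startswith("payment."), "billing"),
    (lambda e: e.get("amount", 0) > 10_000, "fraud-review"),
]

destinations = route({"type": "payment.failed", "amount": 50}, rules)
```

Sending unmatched events to a default destination is one design choice among several. It keeps routing mistakes visible rather than silently dropping events.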

Event Store

An event store is a data store that maintains a record of all events that have occurred in the system. This allows for event sourcing – a technique used to capture and store the state of an application as a sequence of events. Event stores are particularly useful for auditing, debugging, and replaying events.
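Event sourcing can be sketched in a few lines: the store only ever appends events, and the current state is derived by replaying them. The `AccountEvent` type, the event names, and the in-memory list are assumptions for illustration. A production event store would persist the log and handle concurrency.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class AccountEvent:
    kind: str    # "deposited" or "withdrew" (hypothetical event names)
    amount: int


class EventStore:
    """Append-only, in-memory log; a real store would persist to disk."""

    def __init__(self) -> None:
        self._log: List[AccountEvent] = []

    def append(self, event: AccountEvent) -> None:
        self._log.append(event)

    def read(self, upto: Optional[int] = None) -> Tuple[AccountEvent, ...]:
        # Returning a tuple keeps callers from mutating history.
        return tuple(self._log[:upto])


def balance(events: Iterable[AccountEvent]) -> int:
    """Rebuild state by replaying events from the beginning."""
    total = 0
    for event in events:
        if event.kind == "deposited":
            total += event.amount
        elif event.kind == "withdrew":
            total -= event.amount
    return total


store = EventStore()
store.append(AccountEvent("deposited", 100))
store.append(AccountEvent("withdrew", 30))
store.append(AccountEvent("deposited", 5))

current = balance(store.read())            # state after all three events
earlier = balance(store.read(upto=2))      # state as of the first two events
```

Replaying only a prefix of the log, as `earlier` does, is what makes auditing and debugging past states possible.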

Event-Driven Patterns

Event-driven architecture can involve various patterns like publish-subscribe, request-reply, and point-to-point communication. The choice of pattern depends on the specific use case and requirements of the system.
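The difference between two of these patterns is easy to see in a small sketch. In publish-subscribe, every subscriber gets its own copy of a message. In point-to-point, each message goes to exactly one receiver. The function names and the round-robin choice below are assumptions for illustration, and request-reply is not shown.

```python
from itertools import cycle
from typing import List


def publish_subscribe(message: str, subscribers: List[List[str]]) -> None:
    """Every subscriber inbox receives its own copy of the message."""
    for inbox in subscribers:
        inbox.append(message)


def point_to_point(messages: List[str], workers: List[List[str]]) -> None:
    """Each message is delivered to exactly one worker (round-robin here)."""
    for message, inbox in zip(messages, cycle(workers)):
        inbox.append(message)


audit: List[str] = []
billing: List[str] = []
publish_subscribe("invoice.created", [audit, billing])

worker_a: List[str] = []
worker_b: List[str] = []
point_to_point(["job-1", "job-2", "job-3"], [worker_a, worker_b])
```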

Error Handling & Retry Mechanisms

Advanced event-driven systems often include error-handling mechanisms and retry strategies to deal with failed event processing. This makes sure that events are not lost and that the system remains resilient in the face of failures.
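One common way to combine the two ideas is a bounded retry loop that parks events in a dead-letter list once retries are exhausted. The sketch below assumes a hypothetical `process_with_retry` helper and made-up handlers. Backoff delays and logging are left out to keep it short.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


def process_with_retry(
    event: Event,
    handler: Callable[[Event], None],
    dead_letters: List[Event],
    max_attempts: int = 3,
) -> bool:
    """Try the handler up to max_attempts times; park the event on failure."""
    for _attempt in range(max_attempts):
        try:
            handler(event)
            return True
        except Exception:
            # A production system would usually wait (back off) and log here.
            continue
    # Retries exhausted: keep the event for inspection instead of losing it.
    dead_letters.append(event)
    return False


calls = {"n": 0}


def flaky(event: Event) -> None:
    """Fails twice, then succeeds, simulating a transient error."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")


def broken(event: Event) -> None:
    """Always fails, simulating an event that can never be processed."""
    raise ValueError("bad payload")


dead_letter_queue: List[Event] = []
recovered = process_with_retry({"id": 1}, flaky, dead_letter_queue)
parked = process_with_retry({"id": 2}, broken, dead_letter_queue)
```

The dead-letter list keeps failed events for later inspection or replay, so a permanently bad event does not block the ones behind it.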

10 Practical Event-Driven Architecture Case Studies to Explore

Event-driven architecture allows systems to react to real-world events and makes communication asynchronous. This improves operations and makes them more agile and scalable so things can keep running smoothly no matter how big or complex they get.

From entertainment giants to logistics titans, event-driven architecture provides solutions that handle vast amounts of data in real time, adapt to changing conditions, and give feedback the moment an event occurs.

To understand the impact they have in real life, let’s delve into 10 event-driven architecture examples across various domains and see the transformative power of event stream processing.

Netflix

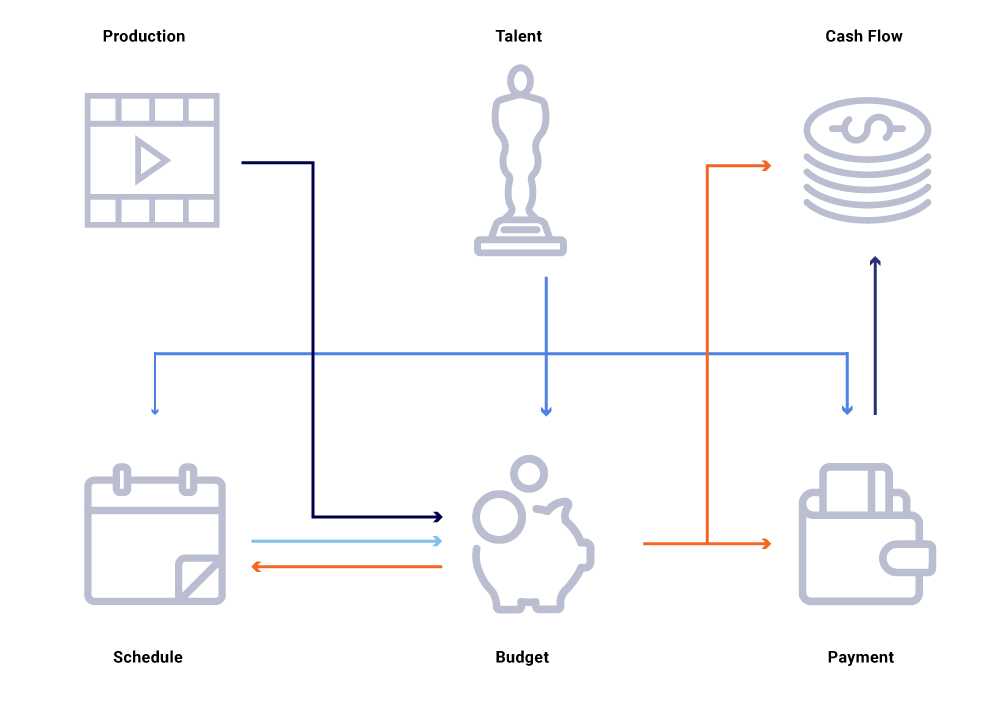

Netflix, a global streaming platform with over 180 million members worldwide, invests billions of dollars in producing original content. Netflix's Content Finance Engineering Team needed to plan and manage this content spending effectively, but faced challenges in tracking spending, programming catalogs, and producing financial reports. These crucial business logic concerns led them to rethink their approach.

To solve this, Netflix embraced a microservice-driven approach, which needed a scalable and reliable architecture for handling the complex interactions between services.

Solution

Netflix implemented an event-driven architecture powered by Apache Kafka to establish a scalable and reliable platform for finance data processing, thus becoming a significant event producer.

- Consumer microservices with Spring Boot: For effortless data consumption, Netflix used Spring Kafka Connectors.

- Data enrichment process: Data consumed from Kafka was integrated with other services to create comprehensive datasets.

- Event ordering: Netflix used keyed messages within Kafka to ensure that events remained in the correct order, guaranteeing data dependability.

- Guaranteed message delivery: Unique tracking methods, like UUID tracking in distributed caches, were implemented so that no event data goes missing.

- Centralized data contracts: Adopting Confluent Schema Registry and Apache Avro, Netflix developed a clear structure for stream versioning to ensure consistency.
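Keyed messages preserve order because every event with the same key lands in the same partition, and a partition is an ordered log. The sketch below shows the idea under stated assumptions: it is not Netflix's code, the keys and event names are invented, and CRC32 stands in for the murmur2 hash used by Kafka's default Java partitioner.

```python
import zlib
from collections import defaultdict
from typing import DefaultDict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash so the same key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 4
partitions: DefaultDict[int, List[Tuple[str, str]]] = defaultdict(list)

# Hypothetical finance events keyed by title ID.
events = [
    ("title-42", "budget_created"),
    ("title-7", "budget_created"),
    ("title-42", "budget_revised"),
    ("title-42", "budget_approved"),
]
for key, value in events:
    # Appending to a per-partition list mimics an ordered partition log.
    partitions[partition_for(key, NUM_PARTITIONS)].append((key, value))

title_42_partition = partition_for("title-42", NUM_PARTITIONS)
title_42_history = [v for k, v in partitions[title_42_partition] if k == "title-42"]
```

Ordering is only guaranteed within a key. Events for `title-7` and `title-42` may be processed in any relative order, which is usually acceptable when the key matches the entity whose state matters.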

Benefits

The transition to an event-driven architecture delivered valuable business impacts for Netflix's finance tracking and reporting:

- Improved traceability: Enhanced service traceability resulted in fewer data discrepancies across the system.

- Synchronized state: With the event-driven approach, different services were synchronized, ensuring real-time data uniformity.

- Efficient data processing: The new structure facilitated swifter data processing and empowered Netflix with instantaneous and accurate business insights.

- System flexibility: The architecture's decoupling provided increased resilience and adaptability which is crucial for a constantly evolving platform like Netflix.

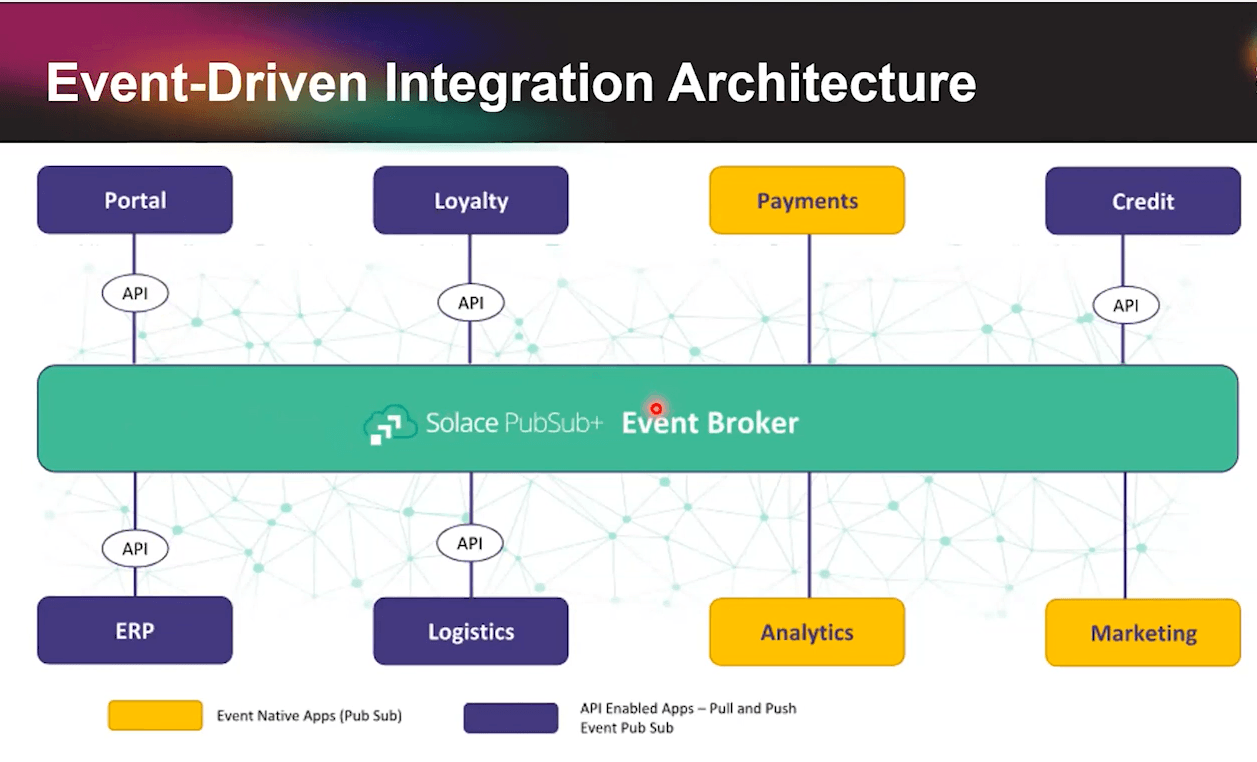

Unilever

Unilever is a renowned global consumer goods company that faced the challenge of merging various online touchpoints for their B2B platform, gro24/7. Over time, the technology evolved – microservices became popular, cloud hosting was the new norm, and specialized event-driven applications emerged. These advancements meant that the once straightforward eCommerce system now had a suite of applications, each demanding smooth integration.

Solution

Unilever adopted an event-driven architecture to streamline integration and establish a single source of truth:

- Accelerated data transfer: The MQTT protocol sped up data transfer to improve the customer experience.

- Intelligent event routing: A unique topic taxonomy directed events to appropriate applications based on specified criteria.

- Event broker connectivity: An event broker connects all ecosystem applications for direct or middleware communication through the event channel.

This EDA approach decoupled Unilever's systems while allowing real-time data publishing and subscription between applications and touchpoints. This not only helped them function as an effective event consumer but also strengthened the entire event-driven approach.
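Topic-based routing of this kind usually relies on hierarchical topic names and wildcard subscriptions. The sketch below implements MQTT-style topic filter matching, where `+` matches exactly one level and `#` matches all remaining levels. The topic names are invented for illustration and are not Unilever's actual taxonomy. The special handling of topics that begin with `$` is left out.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT-style topic filter matches a topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level of the filter,
            # and it also matches the parent level itself.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # No multi-level wildcard was used, so the depths must be equal.
    return len(filter_levels) == len(topic_levels)


# Hypothetical taxonomy: <domain>/<country>/<event>
matches_any_country = topic_matches("orders/+/created", "orders/nl/created")
matches_whole_domain = topic_matches("orders/#", "orders/nl/created")
```

With a taxonomy like this, an application interested in every order event subscribes once to `orders/#`, while a country-specific service narrows its filter to a single branch.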

Benefits

Event-driven architecture optimized Unilever's complex application ecosystem and delivered valuable business results:

- Real-time omnichannel: It delivered a real-time omnichannel experience via instant data updates.

- Integration simplification: It streamlined real-time data sharing to reduce integration complexity.

- Enhanced scalability: The solution eased additions/removals without disrupting existing systems.

- Flexible data distribution: The solution provided flexibility in data distribution based on application needs.

- Cost efficiency: The data transfer costs were minimized through the publish-once/subscribe model.

EDEKA

EDEKA Group is one of Germany’s leading supermarket chains. It was facing challenges with its traditional infrastructure: the systems relied on batch updates, which left data siloed, especially between its:

- Supply chain

- In-store systems

- Merchandise management applications

- Customer-facing platforms like web and mobile apps

Solution

EDEKA collaborated with a cloud consulting firm to implement an event-driven architecture that tore down legacy data silos. The event mesh enabled real-time master data streaming across EDEKA's enterprise systems and touchpoints.

- Flexible platform adoption: Adopted a versatile platform supporting various protocols and environments.

- Event-driven data sharing: Replaced batch updates with dynamic, event-driven data exchange.

- Enhanced data visibility: Provided insights into system data flows for event-driven microservices design.

- Systems integration partner: Introduced an event mesh streaming real-time master data across EDEKA's enterprise.

Benefits

EDEKA's shift to an event-driven architecture had a major impact on operations, customer experience, and strategic positioning.

- Operational efficiency gains: Improved reliability, robustness, and agility while lowering costs.

- Real-time product data distribution: Instantly distributes product details across the supply chain and stores.

- Improved customer experience: Streamlined processes via real-time data promote a superior shopping experience.

- Strategic brand differentiation: Insights from event-driven microservices helped tailor brand positioning as per customer expectations.

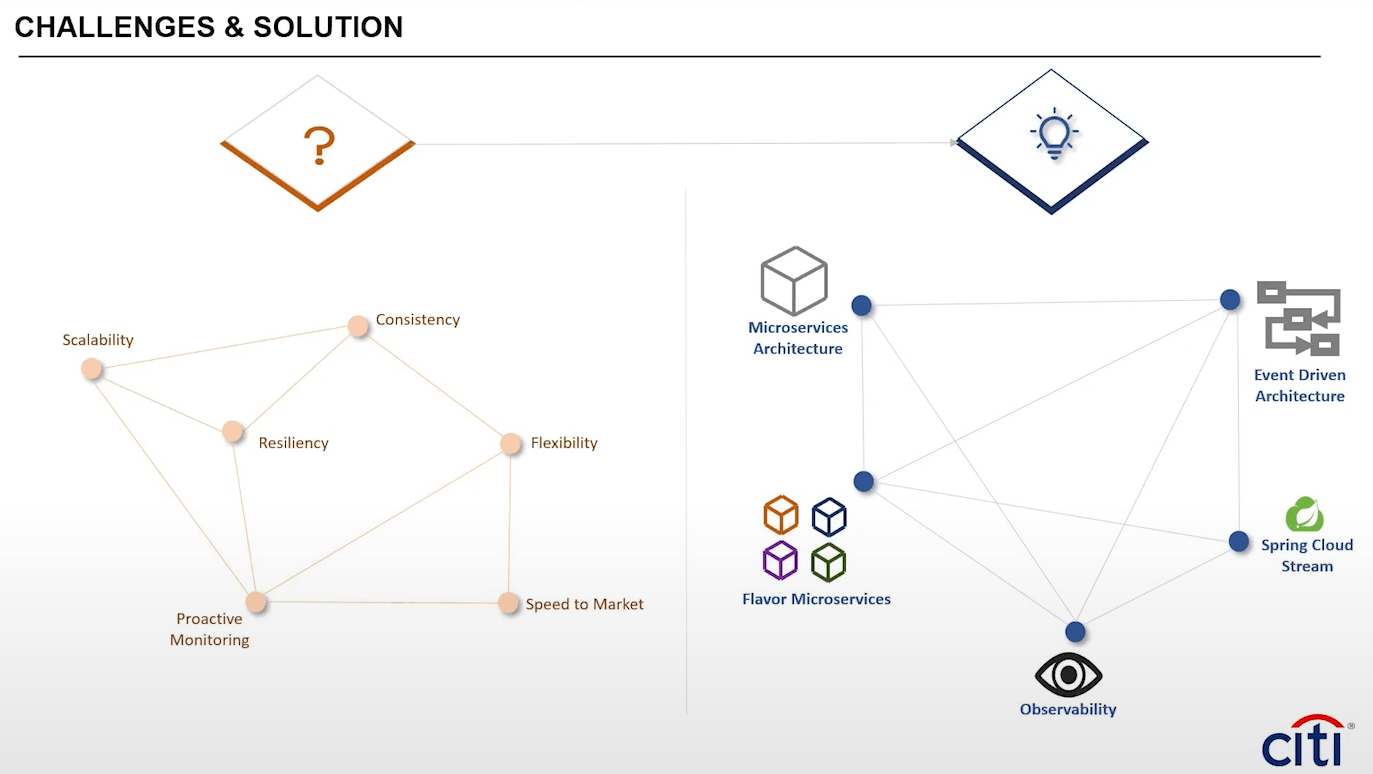

Citi Commercial

Citi Commercial Cards launched an innovative API Platform, but it struggled with the evolving demands of the commercial card industry. Citi’s traditional systems had difficulty handling the surge in B2B transactions, especially when events occurred in rapid succession.

Solution

Citi embraced modern architecture to scale and adapt to industry changes.

- Microservices architecture: Adopted a microservices approach for independent development, deployment, and resilience.

- Event-driven backbone: Used EDA to generate events and simplify complex processes into manageable event chains.

- Observability framework: Established monitoring of metrics, logs, and traces from the event producers for performance visibility.

- Abstraction layer: Deployed Spring Cloud Stream for abstraction in event broker interactions to minimize complexity exposure.

Benefits

Citi's architecture delivered valuable business outcomes:

- Massive scalability: The platform scaled from thousands to over 8 million records in 18 months.

- Proactive monitoring: The observability framework provided early warnings and faster issue resolution.

- Operational flexibility: Event-driven architecture provided flexibility to handle time-bound and deferred execution tasks.

- Faster delivery: The principles adopted accelerated development cycles and digital transformation projects.

- Consistent structure: Microservices approach resulted in consistent code for easier collaboration and maintenance.

Uber

Uber is a global leader in ride-sharing and food delivery. It had its fair share of problems when it introduced ads on Uber Eats. The company needed to process ad events swiftly and reliably, without data loss or overcounting.

Solution

Uber implemented an advanced event streaming architecture using:

- Dynamic event streaming with Kafka: Used Kafka for resilient and smooth streaming of high-volume ad events.

- Scalable storage with Pinot: Adopted Pinot for fast storage and access to processed events, guaranteeing data uniqueness.

- Stateful real-time processing with Flink: Incorporated Flink for validating and deduplicating events to reinforce data accuracy.

This combination provided the real-time throughput, stateful processing, and scalable storage Uber needed to manage ad events with precision and speed at scale.
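The two accuracy techniques named above, deduplication in the processing layer and keyed upserts in the storage layer, can be sketched as follows. This is a simplified illustration and not Uber's Flink job or Pinot schema. The `event_id` field, the in-memory `seen_ids` set, and the dictionary standing in for a table are all assumptions.

```python
from typing import Dict, Iterable, List, Set


def deduplicate(events: Iterable[dict], seen_ids: Set[str]) -> List[dict]:
    """Drop events whose ID has already been seen (state kept in seen_ids)."""
    unique = []
    for event in events:
        if event["event_id"] in seen_ids:
            continue
        seen_ids.add(event["event_id"])
        unique.append(event)
    return unique


def upsert(table: Dict[str, dict], rows: Iterable[dict], key: str = "event_id") -> None:
    """Insert or overwrite by key, so writing a row twice leaves one row."""
    for row in rows:
        table[row[key]] = row


seen: Set[str] = set()
table: Dict[str, dict] = {}

batch = [
    {"event_id": "e1", "ad_id": "ad-9", "kind": "click"},
    {"event_id": "e2", "ad_id": "ad-9", "kind": "click"},
    {"event_id": "e1", "ad_id": "ad-9", "kind": "click"},  # duplicate delivery
]

first_pass = deduplicate(batch, seen)
upsert(table, first_pass)

# Simulate a replay after a failure: keyed writes keep the count correct.
upsert(table, batch)
click_count = sum(1 for row in table.values() if row["kind"] == "click")
```

The two layers back each other up. Even if a duplicate slips past the stream processor during a restart, the keyed write overwrites the existing row instead of adding a second one, so clicks are not overcounted.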

Benefits

Some major advantages included:

- Scalability and flexibility: The solution's modular nature could efficiently scale and adapt to evolving requirements.

- Rapid and reliable processing: With Apache Kafka and Flink integrated, Uber could process ad events in real time with high reliability.

- Data accuracy: The system's architecture, including exactly-once semantics and upsert operations in Pinot, ensured that data was processed accurately without duplications.

JobCloud

JobCloud operates Switzerland's largest job portal. It relied on legacy systems that were difficult to maintain and limited their ability to meet modern data demands. JobCloud wanted to transition to an event-driven architecture without disrupting existing APIs. They sought a solution to bridge legacy systems and enable new capabilities.

Solution

After evaluating options, JobCloud selected a fully managed Apache Kafka service for its expansive ecosystem and integrations.

- Robust infrastructure: The service provided required features like ACLs and robust infrastructure.

- Operational simplicity: It eliminated Kafka complexities so JobCloud could focus on applications.

- Seamless integration: Kafka integration bridged new and legacy systems as a messaging layer.

The managed Kafka service formed the core of JobCloud's transition to an event-driven architecture.

Benefits

With managed Apache Kafka, JobCloud gained:

- Seamless modernization: JobCloud effectively transitioned to an event-driven architecture and bridged the gap between its legacy systems and new functionalities.

- Operational continuity: The new framework lets JobCloud enhance its platform without experiencing any service interruptions.

- Efficient system interaction: The event-driven setup eliminated direct interactions with legacy APIs and made system interactions smoother and more efficient.

- Reliability: With the new setup, JobCloud witnessed consistent and dependable performance even during periods that demanded multiple system updates.

PushOwl

PushOwl, a leading SaaS solution for eCommerce marketing, is a pioneer in complex event processing. Serving 22,000+ eCommerce businesses globally, PushOwl powers its marketing campaigns with important metrics like impressions, clicks, and revenue generation from push notifications.

However, as the company grew, it faced two problems: how to efficiently store and retrieve push notifications on demand, and how to give merchants easy-to-access campaign reports on their PushOwl dashboard.

Solution

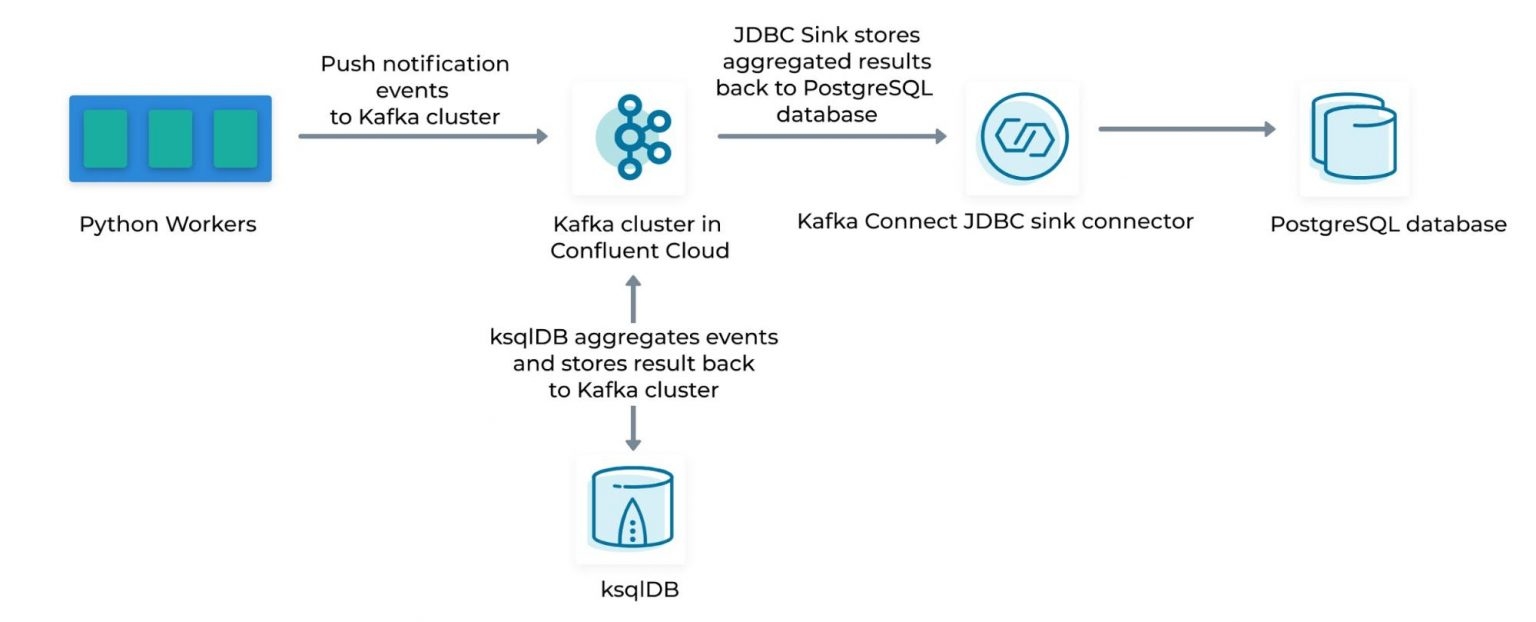

PushOwl implemented an event-driven architecture using:

- Event streaming with Kafka: Used Kafka and ksqlDB for scalable and fault-tolerant stream processing.

- Real-time stream aggregation: ksqlDB ingested notification events from Kafka and performed aggregations for generating campaign reports.

- Database synchronization: Kafka Connect synced aggregated reports from Kafka to the PostgreSQL database for merchant dashboard access.

This provided a robust platform for stream processing, aggregation, and storage at scale.
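The aggregation step amounts to grouping notification events by campaign and keeping running totals, which ksqlDB would express as a `GROUP BY` query over a stream. The plain-Python sketch below shows the same logic. The event fields, event types, and the use of integer cents for revenue are assumptions for illustration, not PushOwl's schema.

```python
from typing import Dict, Iterable


def aggregate_campaigns(events: Iterable[dict]) -> Dict[str, Dict[str, int]]:
    """Fold notification events into one running report row per campaign."""
    report: Dict[str, Dict[str, int]] = {}
    for event in events:
        row = report.setdefault(
            event["campaign_id"],
            {"impressions": 0, "clicks": 0, "revenue_cents": 0},
        )
        if event["type"] == "impression":
            row["impressions"] += 1
        elif event["type"] == "click":
            row["clicks"] += 1
        elif event["type"] == "purchase":
            # Integer cents avoid floating-point drift in money totals.
            row["revenue_cents"] += event["amount_cents"]
    return report


# Hypothetical notification events as they might arrive from a topic.
stream = [
    {"campaign_id": "c1", "type": "impression"},
    {"campaign_id": "c1", "type": "impression"},
    {"campaign_id": "c1", "type": "click"},
    {"campaign_id": "c2", "type": "impression"},
    {"campaign_id": "c1", "type": "purchase", "amount_cents": 2599},
]

report = aggregate_campaigns(stream)
```

Because the dashboard reads precomputed rows like these from the database rather than scanning raw events, report pages can load quickly even at high notification volumes.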

Benefits

The event-driven architecture helped PushOwl to:

- Operate cost-effectively: Handled peak loads without significant system upgrades.

- Achieve scalability: Efficiently handle large data volumes and prepare for future growth.

- Streamline data management: Simplified processing and storage of high notification volumes.

- Improve user experience: Faster dashboard loads via real-time processing enhanced merchant experience.

Wix

Wix faced difficulties in managing over 1,500 microservices with a team of 900 developers. The primary challenge was to transition from synchronous to asynchronous messaging for smoother operations and better scalability.

Solution

Wix implemented Kafka as a scalable messaging backbone between microservices:

- Message ordering: Kafka's partitioning provided ordered, reliable data streams.

- Observability: Monitoring tools gave visibility into Kafka usage and stream processing.

- Operational scaling: Proactive cluster management ensured stable performance under load.

- Asynchronous events: Kafka provided asynchronous event-driven communication between services.

- Easy consumption: Wix built client libraries in Java and Node.js to simplify consuming Kafka event streams.

Benefits

Wix's Kafka implementation had major advantages:

- Scalability: With the event streaming platform, Wix effectively managed and scaled its 1,500 microservices.

- Flexibility: Wix users had the freedom to design, manage, and develop their websites the way they wanted, and this pace of innovation kept Wix competitive with platforms such as Shopify.

- Efficiency: Transitioning to asynchronous messaging reduced bottlenecks and improved the overall performance of their system.

- Innovation: Open-source projects like Greyhound helped Wix to stay at the forefront of technological advancements in event-driven architectures.

Funding Circle

Funding Circle, a global lending platform, had challenges making sure its complicated system ran smoothly. It used a mix of technologies like Ruby on Rails, Apache Samza, Kafka Connect, and Kafka Streams, which all had to work together without any issues.

The company identified an alarming increase in release bugs that they could have avoided with more advanced integration tests. The existing tests, which spanned the entire stack, were prone to errors, unreliable, and slow.

Solution

To solve these issues, Funding Circle introduced "The Test Machine", a library for checking that all their subsystems operated cohesively. Its key features are:

- Tests as data: Instead of manually writing test data to Kafka, they used a data notation approach combined with a pure function.

- Portable tests: They created tests as a sequence of commands to run against multiple targets, from local to remote Kafka clusters.

- Test performance: Recognizing the challenges of full-stack testing, they streamlined their testing process to reduce overhead, boost parallelism, and minimize start/stop times.

- Journal everything: They developed a method where a single consumer subscribes to all output topics, ensuring all data gets consumed and added to a journal for easy verification. This method also helped to better process events.
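The "tests as data" and "journal everything" ideas can be illustrated with a short sketch. It is loosely inspired by the description above and is not The Test Machine's actual API. The command format, the `run_test` function, and the `loan_service` system under test are all hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Command = Tuple[str, str, Any]  # ("write" | "expect", topic, value)
System = Callable[[str, Any], List[Tuple[str, Any]]]


def run_test(commands: List[Command], system: System) -> bool:
    """Run a test described as data; every output is recorded in a journal."""
    journal: Dict[str, List[Any]] = {}
    for op, topic, value in commands:
        if op == "write":
            # Feed the input to the system and journal everything it emits.
            for out_topic, out_value in system(topic, value):
                journal.setdefault(out_topic, []).append(out_value)
        elif op == "expect":
            if value not in journal.get(topic, []):
                return False
        else:
            raise ValueError(f"unknown command: {op}")
    return True


def loan_service(topic: str, value: Any) -> List[Tuple[str, Any]]:
    """Hypothetical system under test: maps one input record to output records."""
    if topic == "loan-applications":
        decision = "approved" if value["amount"] <= 1000 else "referred"
        return [("loan-decisions", {"id": value["id"], "decision": decision})]
    return []


# The test itself is plain data, so it can be run against different targets.
approval_test: List[Command] = [
    ("write", "loan-applications", {"id": 1, "amount": 500}),
    ("expect", "loan-decisions", {"id": 1, "decision": "approved"}),
]

passed = run_test(approval_test, loan_service)
```

Because the test is a list of commands rather than code bound to one client, the same list could in principle be replayed against a local stub or a remote cluster by swapping the `system` argument.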

Benefits

The Test Machine had the following major benefits:

- Faster and more reliable testing: It simplified the testing structure, lowered the error chances, and improved test speed.

- Improved resource utilization: With more dependable tests, they reduced the time and resources spent on manual data fixes.

- Scalability: This solution also improved maintenance and upgrades to address new requirements, making it adaptable for future growth.

- Enhanced confidence: The Test Machine verified that all their different systems worked well together and reduced the risk of operational hitches.

Deutsche Bahn (DB)

Deutsche Bahn (DB) caters to 5.7 million passengers every day. For them, access to real-time, accurate, and consistent trip information on various platforms is crucial. A mismatch in information across these channels can create confusion. The challenge was to design a unified system that could act as the primary source for all passenger-related information.

Solution

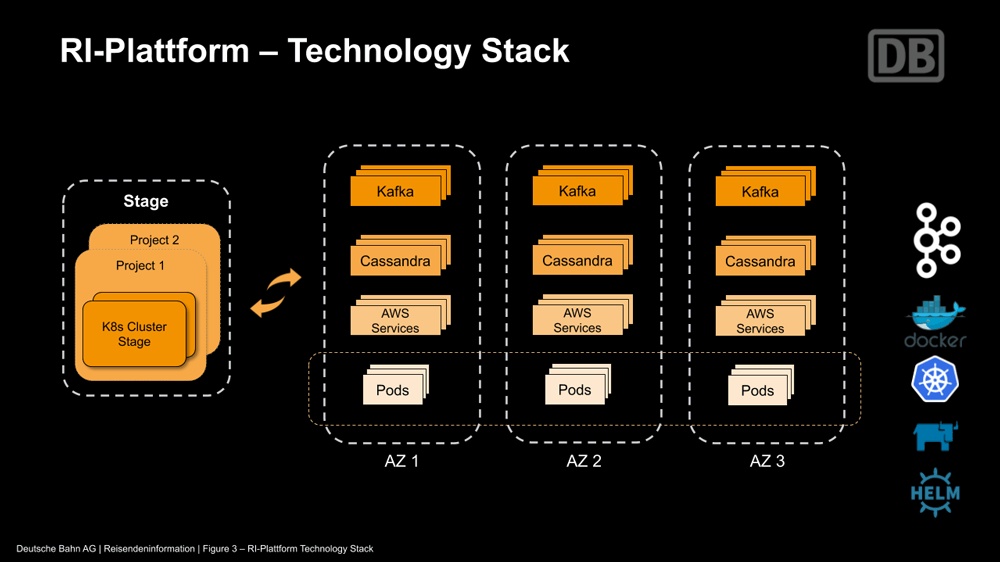

DB implemented an advanced real-time data streaming architecture:

- Advanced system architecture: Built a strong structure that included cloud hosting and a mix of open-source tools.

- Real-time data management: Used real-time event streaming technology for effective data handling and organization.

- Automated announcements: Brought in extra data sources, like platform sensor data, to automate accurate station alerts.

- Local data processing: Used local state stores to keep data operations close to the processing code, which sped up processing.

- Microservices integration: Launched 100 microservices to skillfully handle and distribute the platform's vast daily event load.

- Centralized information system: Created the RI-Plattform for up-to-date and consistent travel details across multiple DB points of contact.

- Agile streaming platform: Chose a nimble event streaming platform after initial trials with several open-source platforms, simplifying the development cycle.

Benefits

The real-time data streaming solution delivered:

- Consistency: Passengers now receive consistent and accurate information regardless of the medium they use.

- Scalability: Designed to handle increased workloads, the system remains future-proof as DB expands its services.

- Efficiency: Transitioning to local data processing helped the system reduce processing time and resulted in faster updates.

- Reliability: The system achieved impressive high availability rates and ensured minimal disruptions in information access for passengers.

- Improved user experience: The timely and reliable delivery of information has improved the everyday travel experience for millions of travelers in Germany.

As businesses increasingly adopt event-driven architecture, having a reliable stream processing platform becomes all the more important. This ensures real-time insights, synchronization, scalability, and streamlined operations. Here, streaming data pipeline tools, like Estuary Flow, play an integral role in simplifying the implementation of event-driven architecture and handling the complexities that come with EDA.

How Estuary Flow Supports Event-Driven Architecture

Estuary Flow is our fully managed, cutting-edge platform for real-time data tasks. Built on Gazette, a fast, highly scalable streaming broker, Flow supports streaming SQL, batch ETL, and automated real-time data pipelines. It's designed to work with the systems you already use, like databases, filestores, and pub-sub. With Estuary Flow, setting up an event-driven architecture becomes simple and effective. Here's how:

Versatile Data Movement

- Instantaneous processing: Data is moved and transformed from its source to the desired destination in milliseconds.

- Diverse data sources: Estuary Flow seamlessly connects with clouds, databases, and SaaS applications, ensuring varied and dynamic data inputs.

Scalable Operations

- Unified tools for all: Both technical and non-technical teams can use Estuary Flow's UI or CLI.

- Built for growth: The fault-tolerant architecture supports large-scale operations and manages active workloads at a rate of 7 GB/s.

- Uncompromised accuracy: With customizable schema controls, data integrity stays in check. The platform's "exactly-once" semantics ensures each event is uniquely processed.

Dynamic Analytics and Transformations

- Comprehensive customer views: Merge real-time and historical data to get a detailed current view of your customers.

- Operational analytics: Get insights into the performance impact of real-time analytical tasks to streamline your processes further.

- Real-time ETL: With immediate Extract, Transform, and Load processes, data warehouses stay consistently updated, and ready to react to every event.

Seamless Integrations and Sharing

- Effortless data sharing: Facilitate secure access to real-time data so you can incorporate data into your preferred systems.

- Bridge legacy and modern systems: Integrate legacy systems smoothly with modern cloud environments. Estuary Flow's compatibility with the Airbyte protocol further broadens the integration possibilities.

What Are the Benefits & Challenges of Event-Driven Architecture?

Event-driven architecture offers several benefits but it also comes with its own set of challenges. Here's a breakdown of the advantages and disadvantages of using EDA:

Benefits of Event-Driven Architecture

- Loose Coupling: EDA decouples components so they can interact without having to know the specifics of each other's implementations. This promotes system flexibility.

- Scalability: Events can be processed asynchronously which makes it easier to scale individual components independently to handle different workloads.

- Real-time Responsiveness: Event-driven architecture enables real-time processing and updates. This makes it ideal for applications requiring low latency, like IoT and real-time analytics.

- Flexibility: New components can be added or existing ones modified without major disruptions as long as they adhere to the event contract.

- Event Sourcing: EDA can support event sourcing where all changes to an application's state are captured as a sequence of immutable events. This gives you a reliable audit trail and simplifies debugging.

- Decoupled Services: Event-driven architecture encourages the creation of independently deployable services, which can lead to better code maintainability and easier team collaboration.

- Fault Tolerance: With decoupled components, failures in one part of the system are less likely to cascade and affect other parts.

Now let’s take a look at the major challenges of event-driven architecture.

Challenges of Event-Driven Architecture

- Event Loss: Events can be lost in transit which can cause data inconsistencies if not properly addressed.

- Latency: In some cases, event-driven systems may introduce latency because of the asynchronous nature of event processing.

- Complexity: Implementing EDA can be complex, especially when dealing with numerous events, event handlers, and message routing.

- Testing & Validation: Testing the interactions between different services and handling various event scenarios can be more challenging than testing a monolithic application.

- Message Broker Reliability: Event-driven architecture often relies on message brokers for event distribution. A broker can become a single point of failure, so its reliability and scalability are crucial.

- Event Ordering: Ensuring the correct order of events can be challenging in distributed systems. Some events may arrive out of sequence or with delays, which requires careful handling.
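One way to handle the ordering challenge is to attach a sequence number to each event and buffer early arrivals until the gap is filled. The `Resequencer` class below is a minimal sketch of that idea. It assumes the producer assigns consecutive sequence numbers, and it leaves out the timeout a real system would need for events that never arrive.

```python
from typing import Dict, List


class Resequencer:
    """Release events in sequence order, buffering any that arrive early."""

    def __init__(self, first_seq: int = 1) -> None:
        self._next = first_seq
        self._pending: Dict[int, str] = {}

    def accept(self, seq: int, payload: str) -> List[str]:
        if seq < self._next:
            # Already released earlier, so treat it as a duplicate.
            return []
        self._pending[seq] = payload
        released = []
        # Drain every consecutive event that is now available.
        while self._next in self._pending:
            released.append(self._pending.pop(self._next))
            self._next += 1
        return released


resequencer = Resequencer()
early = resequencer.accept(2, "b")     # held back: event 1 has not arrived
caught_up = resequencer.accept(1, "a") # releases 1, then the buffered 2
```

The trade-off is latency: an event that arrives early waits in the buffer until its predecessors show up.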

Why Should You Consider Using Event-Driven Architecture?

Event-driven architecture is a smart choice for organizations looking to process data in real-time, create more dependable systems, and stay nimble in today's ever-changing digital landscape. Here are a few compelling reasons why you should opt for EDA:

Quick Data Processing and Instant Insights: Event-driven architecture accelerates data processing. Real-time analysis allows you to immediately identify trends and anomalies, enabling growth and providing the flexibility to adapt to change.

Enhanced Resilience and Accountability: EDA adds a layer of resilience to systems, preventing a single failure from causing a complete breakdown. Additionally, it strengthens service traceability, reducing errors and ensuring accurate data alignment.

Efficiency, Scalability, and Simplified Integration: Event-driven architecture streamlines integration between applications and services, using a standardized approach to reduce complexity and overcome common challenges. It also optimizes system performance, which enables seamless growth and promotes cost-effective operations.

Conclusion

Event-driven architecture examples from giants like Netflix and Deutsche Bahn clearly show the transformative power of this design principle. These architectures are reshaping operations across industries by making data processing fast, flexible, and easy to scale.

However, mistakes while planning the architecture can hamper the very advantages that this architecture is supposed to bring. This is where Estuary Flow comes in. Flow provides a reliable and cost-effective event streaming platform to accelerate your transition to event-driven systems. With Flow, you get important event bus and storage capabilities without infrastructure headaches.

Sign up for free to experience Flow's unmatched event streaming capabilities. We also provide personalized assistance on your event-driven journey.
