How to sync Firestore data to Snowflake for data analytics

Until recently, connecting Google Cloud Firestore to Snowflake hasn’t been easy. Here’s why (and how) to integrate this powerful pair.

Google Cloud Firestore is an excellent cloud NoSQL database, and Snowflake is one of the most popular cloud data warehouses available today. Together, these two data storage systems could provide the backbone for all the operational and analytical workflows in your data stack.

But connecting Firestore to Snowflake hasn’t always been as easy as it should be. In fact, it’s been traditionally challenging enough that organizations often give up on putting this powerful pair together.

Fortunately, with new data pipeline technology, it doesn’t have to be this way.

In this article, we’ll cover the basics of the two systems, and why they’re both excellent choices for different workloads. Then, we’ll dig into the challenges of integrating the two and some available methods. We’ll finish off with a step-by-step guide to creating a real-time Firestore to Snowflake data pipeline with a no-code tool.

What is Google Cloud Firestore?

Google Cloud Firestore is a serverless, managed, cloud-native NoSQL database. It’s an excellent real-time database that’s becoming a popular choice to back all sorts of applications.

Google designed Firestore to blend the best traits of two of its other products: Firebase and Google Cloud Platform. The result is a database that’s great at handling real-time data and is massively scalable.

Other benefits of Firestore include:

- Though it’s a managed, cloud-native database, Firestore offers extensive offline features through local caching.

- Firestore is known for providing a smooth, simple developer experience — it’s easy to use.

- Firestore is a NoSQL database, which allows it to flexibly support unstructured data in a way that a relational database can’t. At the same time, Firestore uniquely stores data as JSON in nested collections, which enables highly efficient real-time data handling.

That last point, though a benefit, is part of what makes data analytics in Firestore so challenging. Generally speaking, we’re used to SQL-based relational databases that store tabular data. With these databases, tables have more defined structure, and even complex queries are relatively easy to set up. In the event that we need to get data out of a SQL database, data pipeline tools and Change Data Capture methods are well-established.

But what happens when you need to get data out of Firestore?

And — taking a step back — if it’s such a great database, why do we need to get data out of it, anyway?

How to analyze Firestore data

Simply put, to analyze Firestore data properly, it’s a good idea to move data out of the platform.

You can certainly perform queries within Firestore. In fact, queries in Firestore are highly performant and are exactly the reason why it’s a great choice to back your app. But they’re designed for operational workloads — that is, quickly leveraging data to power applications and user interactions.

For example, an e-commerce application needs to juggle information on the current status of its inventory and customers. Firestore is a great choice for this task.

On the other hand, Firestore is not a great place to ask questions of your data in aggregate — it’s not designed for data analytics. Especially as your data grows in size and complexity, you’re probably going to want to bring it elsewhere to answer big business questions.

For e-commerce, these are questions like:

- What are the demographic and geographic breakdowns of customers?

- What’s the lifetime value of the average customer, and how does this vary?

- What do our most popular products have in common, and how do customers generally interact with them?

That being said, it’s ultimately up to you to decide whether or not to migrate Firestore data for analysis. Let’s get a bit more specific with those two options:

Option 1: Query Firestore directly

You can use Firestore itself to write simple and compound queries and apply them directly.

This is a good option for early data exploration, but it quickly gets expensive and doesn’t scale well because:

- Firestore stores data as unstructured JSON, which isn’t optimized for large-scale querying. So, each query requires lots of aggregation to occur behind the scenes. For more mature data, this can take a huge toll on performance.

- You’re charged for all the components of this inefficient querying — documents read, memory, and network bandwidth used.

Option 2: Move data out of Firestore for analysis

For ongoing or large-scale analysis, your best bet is to move data from Firestore to a different data storage system designed for analytics: most likely, a data warehouse.

Like relational databases, data warehouses typically have tables and are SQL-based. But unlike transactional SQL databases (and unlike Firestore) data warehouses have specific architecture and features for efficient analytics at scale.

There’s no set list, but a typical cloud-based data warehouse will have:

- OLAP processing system to allow easy multi-dimensional analysis of data.

- Strong metadata support.

- Analytical or data access tools, either integrated with the data warehouse platform or provided as turnkey integrations.

What is Snowflake?

Snowflake is one of the (if not the) most popular data warehouses available today.

Snowflake is a powerful, fully-managed, cloud-based, scalable data warehouse featuring massively parallel processing (MPP). It has modern architecture featuring separate compute and storage. These features make it a great place from which to run your analytical workloads at just about any scale, and one of the most popular destinations for data pipelines.

But there’s another data warehouse to which that exact same description could be applied, and which I’d be remiss to not mention here: Google BigQuery.

Because it’s part of the Google ecosystem along with Firestore, integrating the two systems together isn’t terribly difficult.

So, all other considerations aside, it makes sense to pair BigQuery with Firestore.

But these decisions rarely occur in a vacuum. If you’re reading this article, you have a particular reason for combining Firestore with Snowflake. Maybe it’s one of the subtle-but-important advantages Snowflake has over BigQuery, for instance:

- Snowflake is known for having an excellent web UI with features catering to both analysts and engineers.

- According to some studies, Snowflake boasts better performance (and a greater performance-to-price ratio) than BigQuery.

- Snowflake’s zero-copy cloning and global caching lend efficiency when working with a team across dev and production.

Or perhaps you (or your company) already use Snowflake for other purposes, and Firestore is the newer addition. Maybe you just really like the idea of “fire and ice” as the theme for your core data infrastructure. I’m not here to judge you.

Whatever the case, your data warehouse choice should not be influenced by Google lock-in. It shouldn’t be prohibitively hard to pipe data between Firestore and Snowflake.

On that note, let’s get into two ways to sync data from Firestore to Snowflake: one that’s hard, and one that’s easy.

How to sync data from Firestore to Snowflake

Neither Google nor Snowflake provides us with an easy path to create a Firestore-to-Snowflake pipeline, but they do give us the tooling to build one on our own.

On the other end of the spectrum, third parties offer pipeline solutions focused on ease of use.

Let’s break that down:

Option 1: Write a Google Cloud Function

This is a developer workflow. To put it simply, you can hand-code a data pipeline in the cloud if you have the skills and the time. Google gives you a few tools to do this:

- The Firebase SDKs (most likely, you’ll use the Firebase Admin SDK)

- Google Cloud Functions for Firebase (a flavor of Google’s Node-based Cloud functions that allows you to use the Firebase SDKs)

From the linked documentation, developers won’t have much trouble setting up trigger function(s) that will detect change events in Firestore. The tricky part is then getting that data into Snowflake.

Snowflake is extensible through external functions. How to put these pieces together is beyond the scope of this article. And once you do get that function together, the compute costs on both the Google and Snowflake sides could get high.

Alternatively, for one-time migrations, you can export Firestore data to a Google Cloud Storage bucket and then load that data into Snowflake. This isn’t a recommended workflow for repeated use or to configure an ongoing data pipeline, however.

Option 2: Use a third-party tool

Today, there’s a growing ecosystem of data pipeline tools out there designed to provide a user-friendly path to integrate disparate data systems — like Firestore and Snowflake.

To use a third-party tool, the first thing you need to check is: does the platform provide access to Firestore as a data source and Snowflake as a destination? Most tools will list “integrations” or “connectors” on their website, so this is easy to find out quickly.

Now, you need to answer more nuanced questions, like:

- Are the integrations well-maintained? (And are they maintained by the company itself, an open-source community, or another entity?)

- Does the central runtime of the pipeline platform have the pricing, speed, and scalability to meet your needs? (When connecting two high-scale platforms like Firestore and Snowflake, this is of particular concern.)

From here on out, we’ll be focusing our discussion on Estuary Flow. Flow is a managed platform for building real-time data pipelines. It has a connector that captures data from Firestore, and another that writes data to Snowflake. These connectors are built and maintained by Estuary’s engineering team.

What makes Flow unique is that it has an event-based runtime instead of running pipelines as batch processes. Especially for high-scale data storage systems, this not only makes pipelines faster; it also makes them more efficient, thus reducing cost.

How Estuary Flow models Firestore data

When you go from Firestore to Snowflake, you’re going from unstructured JSON to tabular data. Part of your pipeline’s job is to handle this transition.

Flow also stores all data internally as JSON files, but with a more explicit structure. The way in which Flow remodels JSON data from Firestore determines the tables you see in Snowflake. It’s important to be aware of this as you plan your pipeline.

Flow’s JSON documents are made of multiple key-value pairs. These documents are grouped in collections, which share a JSON schema and a collection key. This keeps the data organized and allows you to map it to external system’s data resources, like Firestore collections and Snowflake tables.

Unlike Flow, Firestore models JSON in hierarchical, nested collections. Here’s an example (taken from the Estuary docs on the subject) in which we have a collection of users, and each user has its own collection of messages:

plaintextusers

├── alice

│ └── messages

│ ├── 1

│ └── 2

└── bob

└── messages

└── 1Given the hierarchical Firestore collection structure above, Flow would flatten it out into two Flow collections: users and users/*/messages.

users would contain a document identifying each user — Alice and Bob. users/*/messages would contain all three messages — two of Alice’s, and one of Bob’s. But you’d still be able to identify which message is whose because Flow adds a property called /_meta/path, which notes the original path in the Firestore hierarchy — for example, users/alice/messages.

With no further modifications, you could materialize these collections into analogous Snowflake tables: one for users and one for messages. In most cases, this default pattern is the one you want. However, if you’d rather model the data differently, you can transform your Flow collections with a derivation.

If the default pattern works for you (or you’re not terribly concerned with data modeling at this time) you can jump right into the tutorial without any more thought. Let’s get started.

Tutorial: Create a real-time data pipeline from Firestore to Snowflake

In this tutorial, you’ll set up a real-time data pipeline that will copy Firestore collections into Snowflake tables, and keep the two systems in sync going forward.

Prerequisites

To complete this tutorial, you’ll need:

- An Estuary Flow account. You can get started with a free trial — go to the web app to request yours. (For production deployments, contact the Estuary team).

- A Firestore database.

- A Google service account that has…

- Read access to your Firestore database, as provided by the roles/datastore.viewer role. Assign the role when you create the service account, or add it to an existing account.

- A JSON service account key. Learn how to generate one here.

- A Snowflake database that includes a schema.

- A user with access to the Snowflake database and schema. Use this script to get set up.

- Your Snowflake account’s host URL is formatted using your Snowflake account identifier. Have both the full URL and identifier handy; for example,

orgname-accountname.snowflakecomputing.comandorgname-accountname, respectively.

Capture data from Firestore

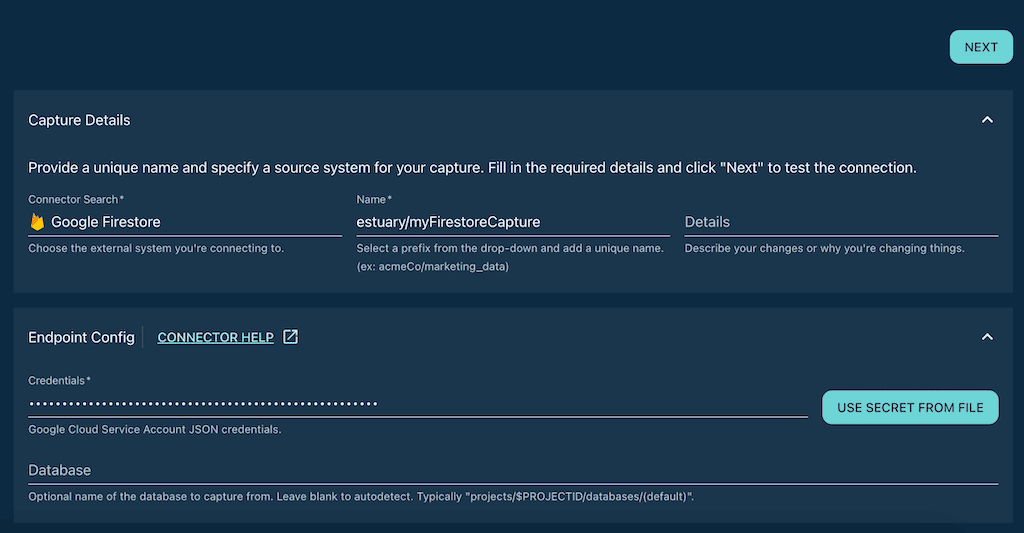

- Go to the captures page of the Estuary Flow web app and click New Capture.

- Choose the Google Firestore tile.

- In the form that appears, create a name for your capture. To do so, click inside the Name field and select a prefix from the drop-down menu. Then, append a unique identifier.

- Provide the JSON service account key by either pasting it into the form or uploading it from a file.

- Click Next. Flow initiates a connection to your Firestore database. It identifies the data collections and maps them to Flow collections (as discussed above). You can remove any collections you don’t want to capture.

- Click Save and Publish. Flow deploys the capture and begins ingesting data from Firestore, first copying all historical data, and then listening for any new updates that may appear in the future.

- Click the Materialize Collection button that appears following a successful capture.

Note: if you wanted to transform the Flow collections, you could pause and do so at this stage.

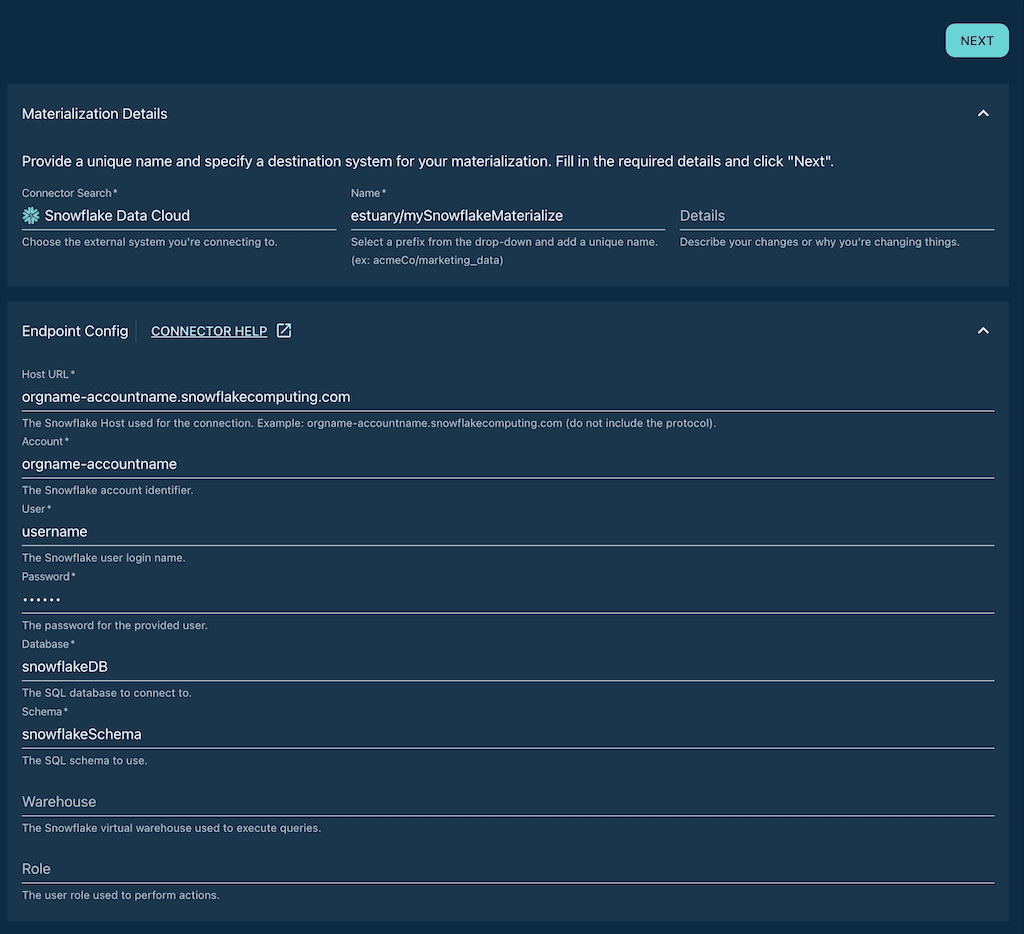

Materialize data to Snowflake

- You’re taken to the Create Materializations page. Browse to and select the Snowflake tile.

- In the form that appears, create a name for your materialization as you did for your capture.

- Fill in the required fields:

- Host URL

- Account identifier

- User

- Password

- Database name

- Schema

- Scroll down the Collection Selector section. Note that all the collections you captured from Firestore are mapped to new tables that will be created in Snowflake.

- Provide a name for each table. You can copy the names of the collections, or select different names that are meaningful to you.

- Click Next. Flow initiates a connection with your Snowflake database.

- Click Save and Publish. When publication is successful, you’ll see a notification. The historical data from your Firestore database has been copied to your Snowflake tables. From now on, any new documents that appear in Firestore collections will be reflected in Snowflake in milliseconds.

For more documentation on this workflow, see:

- Estuary’s guide to creating a dataflow.

- Documentation for the Firestore connector.

- Documentation for the Snowflake connector.

Wrapping up

As your company or project grows, so does your data. Suddenly, you have to solve new problems, like finding a cost-effective, reliable way to analyze Firestore data or connecting a new data source to your existing Snowflake warehouse.

There are surprisingly few options for syncing your Firestore and Snowflake data, but they do exist — and not all of them will take you days to set up. Hopefully, the tutorial section of this post made setting up your first pipeline relatively simple.

To chat all things real-time data integration, get help with more advanced pipelines, or just say hi, you can join the Estuary Slack community.

Learn more about Google Firestore in these in-depth guides:

- Firestore Query Best Practices: Dive deeper into optimizing your Firestore queries for better performance

- Analyze Google Firestore Data: Learn how to effectively analyze and derive insights from your Firestore data

- Extract & Export Data from Firestore: Explore methods to extract and export data from Firestore for various use cases

Author

Popular Articles