If you’re already using Google BigQuery as your data warehouse, it’s useful to understand the nuances of the diverse data types it offers. These data types serve as building blocks of your database, representing how your data is stored, processed, and analyzed. Gaining a thorough understanding of Google BigQuery Data Types will help you harness the full potential of the platform. This will allow you to optimize query performance, enhance data integrity, and generate more accurate insights.

In this article, you’ll uncover eight important BigQuery data types with practical examples and explanations. Additionally, we’ll shed light on functions specific to each data type.

BigQuery Overview

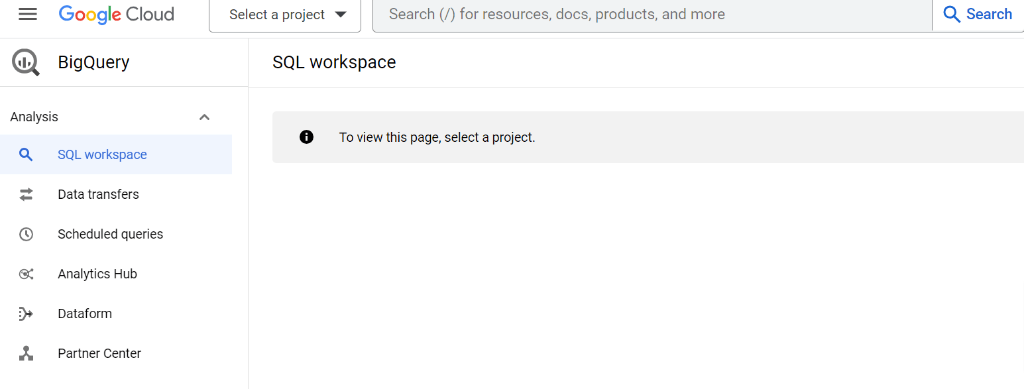

Developed by Google in 2010, BigQuery is a cloud-based, fully managed data warehousing and analytics service. It allows you to store and analyze large amounts of data using simple SQL queries.

What makes BigQuery a robust data warehouse is its ability to stream real-time data and support geospatial analysis. It also provides seamless integration with Google Cloud services, offering scalability and flexibility for diverse data processing needs. Its serverless architecture complements these features by eliminating the need for infrastructure management, allowing you to focus on data analysis tasks.

Understanding Google BigQuery Data Types

With BigQuery, you can handle vast amounts of data with SQL, making it a valuable tool for data-driven decision-making. But, to use it effectively, it's important to understand BigQuery data types. These data types form the fundamental structure of your data within BigQuery.

Following are the eight most important BigQuery data types.

BigQuery Data Types: STRING

In BigQuery, the STRING data type is used to represent variable-length character strings. You can use the STRING data type to store a sequence of characters or text of varying lengths.

For instance, you can store:

- Names of individuals

- Email addresses

- Textual descriptions or comments

- Identifiers or labels, and more

Syntax:

column_name STRING

Example:

Create table Employee (

Employee_first_name STRING,

Employee_last_name STRING,

Employee_email STRING);

Some of the functions supported by the STRING data type in BigQuery are:

- LENGTH: To get the total count of characters in a string

- CONCAT: To concatenate two or more strings

- SUBSTR: To get a substring from a string

- UPPER and LOWER: To convert a string to uppercase or lowercase

- REGEXP_EXTRACT: To extract substrings using regular expressions

Bytes

While string and byte are often used interchangeably and share common functions, they have distinct concepts. A byte is a group of 8 bits, and each bit can either be 0 or 1. It is the basic unit of data storage. Bytes can represent a wide range of data, including numbers, characters, binary data, and more.

When you store or manipulate strings in BigQuery, they are internally stored as sequences of bytes based on the UTF-8 (Unicode Transformation Format 8-bit) encoding. This means that each character in the string is represented by one or more bytes.

String and Byte data must be enclosed in single (‘) or double (“) quotation marks, or in triple-quotes with a group of single (‘ ‘ ‘) or double (“ “ “) quotation marks.

| Name | Size |

| String | 2 logical bytes + the UTF-8 encoded string size |

| Bytes | 2 logical bytes + the number of logical bytes in the value |

BigQuery Data Types: BOOLEAN

The BOOLEAN data type (also known as BOOL) represents a binary value with either a TRUE or FALSE state. The storage size of a BOOL column is very small, usually one bit per value or one logical byte. You can use the BOOLEAN data type when you need to represent binary values that have only two states.

For instance, you can track:

- Completion status of tasks or orders (‘TRUE’ if completed, ‘FALSE’ if not)

- Availability of the product (‘TRUE’ if available, ‘FALSE’ if not in stock)

- If a customer is active or not

Syntax:

column_name BOOL

Example:

You have a table called Employees with columns Employee_id and is_active. Employees with IDs 1 and 3 are active (TRUE), and 2 and 4 are inactive (FALSE).

To check the number of active employees in your table, you can use the following query.

SELECT * FROM Employees WHERE is_active = TRUE;

After running the above query, you’ll get the output as:

| Employee_id | is_active |

| 1 | TRUE |

| 3 | TRUE |

BigQuery Data Types: NUMERIC

The numeric data type is used to store and represent numeric values, such as integers or floating-point numbers. You can use numeric data types to perform calculations, financial data applications, or measurements on numeric data.

Syntax:

column _name NUMERIC

Example:

price NUMERIC

BigQuery has additional numeric data types with alternative names that can be used interchangeably to represent the same data types. For instance, you can either use NUMERIC or DECIMAL to define a column or variable with fixed-point decimal values.

- INT64 with aliases: INT, INTEGER, BIGINT, SMALLINT, TINYINT, BYTEINT

- FLOAT64

- NUMERIC with alias DECIMAL

- BIGNUMERIC with the alias BIGDECIMAL

Integer Type

The Integer type in BigQuery represents signed 64-bit integers. It’s used for whole numbers, both positive and negative, and provides a specific range for storing numerical data.

Example: 42, 0, 566

| Name | Aliases | Size | Range |

| INT64 (Integer) | INT, INTEGER, BIGINT, SMALLINT, TINYINT, BYTEINT.

| 8 bytes | -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807 |

Decimal Type

You can use decimal type when storing fixed decimal numeric values that require exact precision, such as measurements or financial prices.

Example: price DECIMAL(10,2)

Floating Point Type

You can use floating point data type to represent or store numbers with a fixed number of digits but with varying precision.

Example: temperature FLOAT

BigQuery Data Types: STRUCT

In BigQuery, STRUCT allows you to group multiple columns or fields, each with its own data type, into one logical unit.

You can use the STRUCT data type in some of the following scenarios:

- Capturing location details with latitude and longitude values

- Storing precise information about students or employees, including first name, last name, and other attributes

- Organizing data that contains product information, including name, amount, and description

STRUCT Declarations

The following four are examples of the STRUCT type declarations:

- STRUCT<INT64>: A simple struct with a single unnamed field of type INT64.

Query:

SELECT STRUCT(40) AS emp_struct;

Output:

This will create a struct with a single field containing the integer value 40.

- STRUCT<x STRING(10)>: This is a simple struct with a single parameterized string field named 'x' with a maximum length of 10 characters.

- STRUCT<x STRUCT<y INT64, z INT64>>: This is a nested struct. The outer struct is named ‘x’. Inside ‘x,’ there is another struct with two fields, ‘y’ and ‘z’, both of type INT64.

- STRUCT<inner_array ARRAY<INT64>>: This contains fields named ‘inner_array’, which is an array of 64-bit integer values.

BigQuery Data Types: ARRAY

You can use ARRAY as a data type to store and work with an ordered collection of values of the same data type. Here, the order of values or elements is important. ARRAY data types are represented using angle brackets < and >. These angle brackets are used in type declarations to indicate that a particular column is an array of similar values. The array index starts from 0, and each value is identified by an index number.

For example, you can use an array when you want to record the historical stock prices over time or to keep track of items in an order.

Syntax:

column_name ARRAY<datatype>

Example:

CREATE TABLE Orders (

order_id INT64,

product_names ARRAY<STRING>,

order_amount ARRAY<FLOAT64>

);

In this example, the product_names column is declared as an array of strings, and order_amount is declared as 64-bit floating point numbers.

ARRAY Declaration

These declarations will help you understand how to declare arrays with various levels of complexity and nested structures. Here’s an overview of some examples:

- ARRAY<INT64>

ARRAY<INT64> represents a single array of 64-bit integers. You can use this to store a single integer in an array.

Example: [1,2,3,4]

- ARRAY<ARRAY<INT64>>

This is not supported and is an invalid type of declaration. Arrays cannot contain other arrays in BigQuery.

- ARRAY<BYTES(5)>

You can use this while representing a simple array of parameterized bytes. In this, each element in the array is a byte string with a fixed length of 5 bytes.

Example: [“abcde”, “12345”, “fghij”,.....]

- ARRAY<STRUCT<INT64, INT64>>

You can use this declaration to represent an array of structs, and each struct contains two 64-bit integers. This allows you to have a collection of pairs of integers.

Example 1:

CREATE TABLE Graph(

id INT64,

x_and_y_points ARRAY<STRUCT<INT64, INT64>> );

Example 2:

INSERT INTO Graph(id, x_and_y_points )

VALUES

(1, [{20, 40}, {15, 55}, {30, 80}]),

(2, [{15, 100}, {10, 70}]),

(3, [{55, 65}, {65, 75}, {60, 70}, {75, 85}]);

In this example, x_and_y_points is defined as an array of structs, and each struct contains two INT64 fields of x and y points.

BigQuery Data Types: DATETIME

You can use the DATETIME data type in BigQuery to specify date and time together. The format for this data type is 'YYYY-MM-DD HH:MM:SS.SSSSSS'. This is useful for applications working with precise timing information, such as event scheduling, tracking, or performing time-based calculations.

Example 1:

Select * from Daily_logs WHERE datetime_col= “2020-09-21 12:30:00.45”

Example 2:

Here, DATETIME represents 12:30 p.m. on Aug 27, 2023: DateTime '2023-08-27 12:30:00.45'

BigQuery Data Types: JSON

JSON (JavaScript Object Notation) is a lightweight data-interchange format that is simple for humans to read and write and also for machines to parse and generate. You can use JSON to store semi-structured or unstructured data where the schema keeps changing depending on the records.

A few scenarios where you should consider using JSON data types are:

- Capturing daily attendance logs in JSON format

- Storing user-generated information with different fields like topic, description, and author

Syntax:

column _name JSON

Example:

This Product table includes a JSON column_name product_details:

| Id | product_details |

| 1 | {"name": "Laptop", "price": 20000, "brand": "ABC"} |

| 2 | {"name": "Smartphone", "price": 5000, "brand": "ABZ"} |

You can also use JSON to insert a query. For instance, adding employee information to an Employee table:

INSERT INTO Employee (employee_id, employee_info)

Values (1,’{“first_name”:John”, “last_name”:”Mark”}’);

BigQuery Data Types: GEOGRAPHY

You can use the BigQuery GEOGRAPHY data type to represent geographical shapes on the Earth’s surface, including points, polygons, or lines. A familiar scenario is when a pair of latitude and longitude coordinates is used to point to the Earth’s surface. You can use this data type to work on geographical data, such as location-based information, tracking locations, mapping, or spatial data analysis.

Geography Functions

These are various geographic functions grouped into different categories:

| Category | Description | Functions | Example |

| Constructors | These functions help you create geographic values from existing geographic values or coordinates. | ST_GEOGPOINT ST_MAKELINE ST_MAKEPOLYGON ST_MAKEPOLYGONORIENTED | ST_GEOGPOINT(-133.5414,34.8899) |

| Formatters | Formatters convert geography data to an external format, like Well-known text. | ST_ASBINARY ST_ASGEOJSON ST_ASTEXT ST_GEOHASH | ST_ASTEXT(geography_expression) to get WKT format |

| Parsers | These functions help to build geography data from external formats like GeoJSON, which is an open standard format designed for representing simple geographical features. | ST_GEOGFROM ST_GEOGFROMGEOJSON ST_GEOGFROMTEXT | ST_GEOGFROMTEXT('SRID=4267;POINT(-77.0092 38.889588)')) |

| Predicates | Using these functions, you can evaluate spatial relationships between two geographical entities or assess specific properties of geography. The return value of these functions is boolean. | ST_CONTAINS ST_COVERS ST_EQUALS ST_INTERSECTS ST_TOUCHES ST_WITHIN | ST_CONTAINS(geography_1, geography_2) |

| Transformations | These functions generate new geography data from existing data or input. | ST_UNION ST_BOUNDARY ST_BUFFER ST_INTERSECTION | ST_INTERSECTION(geography_1, geography_2) |

| Measures | Used to compute the measurement of one or more geography data types. | ST_ANGLE ST_AREA ST_DISTANCE ST_LENGTH ST_MAXDISTANCE | ST_DISTANCE(point 1, point2) to calculate the distance between two points |

The Takeaway

Understanding BigQuery data types and choosing the ones that best fit your data analytics requirements is crucial. Appropriate data type selection ensures data accuracy and consistency within the platform, but it becomes more critical if you’re working with multiple data sources and analytical tools. To reduce discrepancies when replicating your data with BigQuery, consider leveraging Estuary Flow. This tool is designed to ensure precise data management, ensuring the integrity and reliability of your data across various platforms.

FAQs

- What are the data types in BigQuery?

BigQuery supports several data types, each offering numerous functions and operations. Some of the common data types in BigQuery are String, Integer, Boolean, Struct, Array, Geography, DateTime, Numeric, and more.

- What data format does BigQuery support?

BigQuery supports various data formats, including CSV, JSON, Avro, ORC, and Parquet.

- In which format is data stored in BigQuery?

Data in BigQuery is stored in a columnar format, which is highly optimized for analytical queries.

Author

Popular Articles