In the era of data-driven insights, ETL (Extract, Transform, Load) operations form the cornerstone for unlocking the full potential of big data. These operations play a vital role in facilitating informed decision-making by enabling organizations to efficiently store high-quality data.

One of the leading tools for executing ETL processes is Google BigQuery. Known for its lightning-fast SQL-like queries on extensive datasets, it owes its speed to a distributed architecture and columnar storage. However, relying solely on Google BigQuery’s default features isn’t enough to ensure an efficient ETL process. Familiarizing yourself with some of the best practices is essential for optimizing performance.

To enhance your ETL process, this article will explore the nine best practices for effectively utilizing Google BigQuery ETL.

Google BigQuery Overview

BigQuery is a cloud-based analytics and data warehousing solution provided by Google. It is widely known for its serverless architecture, which provides efficient data management capabilities to store, analyze, and process large amounts of data. BigQuery also lets you concentrate entirely on data analysis without worrying about infrastructure management.

What sets BigQuery apart is its robust processing capabilities. BigQuery allows you to execute complex queries on terabytes of data in seconds and petabytes of data in just a few minutes. This efficiency is a result of its columnar architecture and Google's extensive resources.

Here are some key features of Google BigQuery:

- SQL Compatibility: BigQuery uses a standard SQL dialect, so you can write queries in familiar SQL syntax. This makes BigQuery’s analytical capabilities easier to use.

- Data Integration: BigQuery offers federated queries and external tables for querying data where it lives, including Google Cloud Storage, Google Sheets, and external databases. Other Google Cloud services, such as Dataflow, can bring data from APIs and other systems into BigQuery.

- Data Transformation: BigQuery lets you manipulate and refine data with SQL queries, using operations such as filtering, aggregating, pivoting, and joining. This lets you turn raw data into actionable insights within a single environment.

Extract, Transform, Load (ETL) Explained

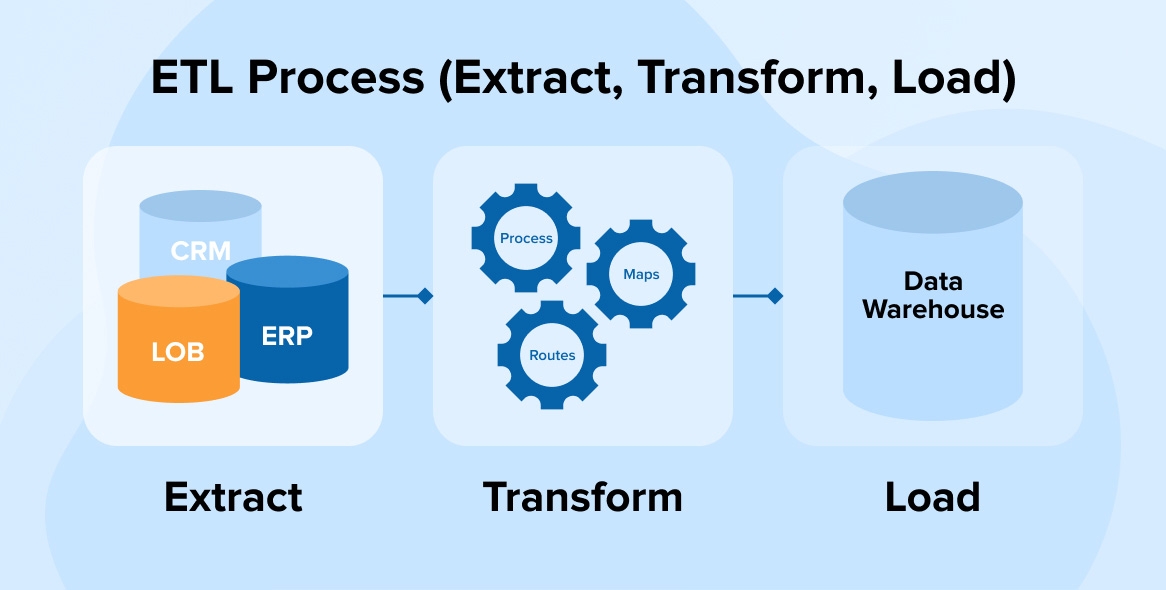

ETL is a three-step data integration process designed to combine data from several sources and load it into a centralized location, usually a data warehouse, for analytical workflows.

Here's an overview of the ETL process:

- Extraction: In the initial stage, the data is extracted from different data sources, such as databases, logs, spreadsheets, or external systems.

- Transformation: After the data is extracted, it undergoes a series of transformations, which can involve filtering, cleaning, joining, aggregating, or restructuring the data to ensure consistency. In this step, you can also enrich the data to add more information and increase the dataset's value, if needed.

- Loading: The transformed data is then loaded into the destination, where it is accessible for analysis, reporting, and decision-making.

These steps in ETL ensure that data is accurate, consistent, and structured in a way that can be used for business intelligence or data-driven tasks.
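The three steps above can be sketched in a few lines of plain Python. This is only an illustration: the CSV content, the column names, and the in-memory `warehouse` list are made up for the example and stand in for a real source and a real data warehouse.

```python
import csv
import io

# Hypothetical source data; the second row is missing its amount.
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.00,US
"""


def extract(text):
    """Extraction: read rows from a source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transformation: drop incomplete rows, fix types, normalize country codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows with a missing amount
        cleaned.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        })
    return cleaned


def load(rows, destination):
    """Loading: append the transformed rows to the destination (here, a list)."""
    destination.extend(rows)
    return len(rows)


warehouse = []
loaded = load(transform(extract(RAW_CSV)), warehouse)
print(f"loaded {loaded} rows")
```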

9 Best Practices for Optimizing Google BigQuery ETL

Performing ETL for Google BigQuery can greatly enhance your data processing capabilities. To ensure a successful implementation, follow these nine best practices:

Uploading Data to the Staging Area

The first step in BigQuery ETL is extracting data from different sources and either moving it to a staging area of your choice for processing or transforming it mid-stream. Staging centralizes the data before you perform complex transformations and analysis. You can move the data in one of the following ways:

- SaaS tool like Estuary: If data is stored in any cloud storage system or application, you can use SaaS tools like Estuary to extract data. Estuary Flow is a real-time data integration platform that offers a library of more than 150 native connectors and support for 500+ connectors from Airbyte, Meltano, or Stitch to connect various data sources to destinations including BigQuery. It takes care of many of the transformation tasks for you mid-stream, including data aggregation, joins, filtering, and manipulation, before loading into BigQuery. It’s easy to use, with no-code pipeline building, administration, and monitoring.

- Google Cloud: Google Cloud offers Storage Transfer Service to move data into the staging area you choose. However, this option may involve a learning curve, as it requires detailed knowledge of cloud platforms.

- gsutil for Server-to-GCS Uploads: To upload data to Google Cloud Storage (GCS) from different servers, you can also use gsutil, a Python application that allows you to upload data using the command line.

These strategies can help you successfully transfer data to Google Cloud Storage. The supported formats for loading data from GCS into BigQuery are Avro, Parquet, ORC, JSON, Cloud Firestore exports, Cloud Datastore exports, and Comma-separated Values (CSV).
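As a rough sketch of the gsutil route, the helper below builds a `gsutil cp` command for copying one local file into a staging prefix. The bucket name, the `staging` prefix, and the file path are hypothetical, and the command is only printed here; actually running it assumes gsutil is installed and authenticated.

```python
import shlex


def gsutil_upload_command(local_path, bucket, prefix="staging"):
    """Build the argv for copying one local file into a GCS staging prefix."""
    destination = f"gs://{bucket}/{prefix.strip('/')}/"
    return ["gsutil", "cp", local_path, destination]


# Hypothetical file and bucket names, for illustration only.
command = gsutil_upload_command("exports/orders.avro", "example-etl-bucket")
print(shlex.join(command))

# To execute the upload instead of printing it:
#   import subprocess
#   subprocess.run(command, check=True)
```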

Formatting Data the Right Way

Since BigQuery supports many data formats, it is crucial to select the right one to perform operations efficiently. To make the right choice, you should start by assessing your specific requirements. While formatting data, consider the following:

- Avro is the format that offers the fastest load performance. This row-based binary format can be read in parallel because the data is split into small blocks. An important thing to note here is that Avro with compressed data blocks is much faster to ingest than uncompressed Avro.

- Parquet and ORC are column-oriented binary formats. Each has its pros and cons, but both are the next most efficient to load after Avro.

- In terms of data loading speed, CSV and JSON are slower than the formats mentioned above, especially when compressed. This is because a Gzip file cannot be split: it is first loaded onto a single slot in BigQuery, where it is decompressed, and only then can the load be parallelized.

These guidelines can help you choose the right file format based on common use cases. However, your final choice in format should align with the type of table you want to create in BigQuery.
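One way to act on the format choice is to pick the load job's source format from the staged file's extension. The sketch below assumes the `google-cloud-bigquery` client library and default credentials for the actual load; the extension mapping and the function names are illustrative, not part of any official API.

```python
from pathlib import PurePosixPath

# File extension -> name of the matching bigquery.SourceFormat member.
SOURCE_FORMATS = {
    ".avro": "AVRO",
    ".parquet": "PARQUET",
    ".orc": "ORC",
    ".csv": "CSV",
    ".json": "NEWLINE_DELIMITED_JSON",
}


def source_format_for(uri):
    """Return the BigQuery source format name for a staged file URI."""
    suffix = PurePosixPath(uri).suffix.lower()
    if suffix not in SOURCE_FORMATS:
        raise ValueError(f"no known load format for {uri!r}")
    return SOURCE_FORMATS[suffix]


def load_from_gcs(uri, table_id):
    """Load one staged file into a table (needs google-cloud-bigquery and credentials)."""
    from google.cloud import bigquery  # imported lazily so the helper above works without it

    client = bigquery.Client()
    format_name = source_format_for(uri)
    job_config = bigquery.LoadJobConfig()
    job_config.source_format = getattr(bigquery.SourceFormat, format_name)
    if format_name in ("CSV", "NEWLINE_DELIMITED_JSON"):
        # Text formats carry no schema, so ask BigQuery to infer one.
        job_config.autodetect = True
    load_job = client.load_table_from_uri(uri, table_id, job_config=job_config)
    load_job.result()  # wait for the load to finish
    return client.get_table(table_id).num_rows


print(source_format_for("gs://example-etl-bucket/staging/orders.avro"))
```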

Partitioning and Clustering

Partitioning is breaking up large data tables into more manageable subsets based on a specific column, often time or date. With this division, query performance becomes more efficient as less data needs to be scanned while performing a database operation.

Clustering, on the other hand, works at a finer grain than partitioning: it sorts the data within a table, or within each partition, based on one or more columns (up to four). Clustering is most useful for queries that filter or group on those columns, because storing similar values together lets BigQuery skip data that does not match.

By applying partitioning and clustering strategies on Google BigQuery ETL, you can harness the full potential of BigQuery’s analytical capabilities. This approach is most useful when managing large-scale datasets.
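To make this concrete, the sketch below generates a `CREATE TABLE` statement that partitions on a date column and clusters on two others. The dataset, table, and column names are hypothetical, and the helper only builds the SQL text; you would run the result in BigQuery yourself.

```python
def partitioned_table_ddl(table, columns, partition_column, cluster_columns):
    """Build a CREATE TABLE statement with date partitioning and clustering.

    `columns` maps column names to BigQuery types. `partition_column` is
    assumed to be a DATE column.
    """
    if not 1 <= len(cluster_columns) <= 4:
        raise ValueError("expected between one and four clustering columns")
    column_lines = ",\n".join(f"  {name} {dtype}" for name, dtype in columns.items())
    return (
        f"CREATE TABLE `{table}` (\n{column_lines}\n)\n"
        f"PARTITION BY {partition_column}\n"
        f"CLUSTER BY {', '.join(cluster_columns)}"
    )


# Hypothetical table layout, for illustration only.
ddl = partitioned_table_ddl(
    "example_dataset.orders",
    {
        "order_id": "INT64",
        "customer_id": "STRING",
        "country": "STRING",
        "amount": "NUMERIC",
        "event_date": "DATE",
    },
    partition_column="event_date",
    cluster_columns=["customer_id", "country"],
)
print(ddl)
```

Queries that filter on `event_date` can then skip whole partitions, and filters on `customer_id` or `country` benefit from the clustering.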

Reduce Data Processing

By following the best practices of data compression, you can streamline data processing without losing valuable information. In BigQuery, the efficiency of this process is directly related to the formats you use. Key insights into data compression include:

- Avro, Parquet, and ORC are binary formats with built-in compression support, so data in these formats stays compact without extra steps. Parquet and ORC store data by column, while Avro stores it by row.

- Avro with compressed data blocks is the most efficient format to load.

- If you use CSV or JSON format, uncompressed files load faster than compressed files because they can be read in parallel.

- BigQuery manages the compression of its own storage, so to reduce processing further, prioritize the columns that are used most often in queries, for example by selecting only those columns or clustering on them.

Optimize Query Operations

Sometimes, SQL queries can be inefficient. However, you can optimize query performance in BigQuery with the following tips:

- Don't Use 'SELECT *': Avoid 'SELECT *' unless you need every column. Because BigQuery stores data by column, a query reads, and is billed for, only the columns it references, so selecting whole tables is slow and costly.

- Sorting and Filtering: Apply filtering conditions as early as possible to limit the amount of data a query processes, and sort only the final result, since sorting large intermediate results is expensive. Used this way, these conditions can drastically cut your query execution time and costs.

- BigQuery Optimization: The automatic query optimization capabilities of BigQuery are robust. They include features like query rewrites, automatic partition pruning, and dynamic filtering. Learning how these features work and monitoring query execution plans can make your BigQuery operations more effective.
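The tips above can be combined in a small sketch: name the columns you need, filter on the partition column, and use a dry run to see how much data the query would scan before you pay for it. The table and column names are hypothetical, and the dry-run helper assumes the `google-cloud-bigquery` client library with default credentials.

```python
def daily_orders_query(table, columns, day):
    """Select only the needed columns and filter on the date partition column."""
    if not columns:
        raise ValueError("name the columns you need instead of using SELECT *")
    return (
        f"SELECT {', '.join(columns)}\n"
        f"FROM `{table}`\n"
        f"WHERE event_date = DATE '{day}'"
    )


def estimate_bytes_processed(sql):
    """Dry-run a query and return the bytes it would scan (needs credentials)."""
    from google.cloud import bigquery  # imported lazily so the builder works without it

    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=job_config)
    return job.total_bytes_processed


# Hypothetical table and columns, for illustration only.
sql = daily_orders_query(
    "example_dataset.orders", ["order_id", "amount"], "2024-01-15"
)
print(sql)
```

The date is interpolated into the SQL text to keep the sketch short; a production pipeline would more likely pass it as a query parameter.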

Data Security

Following the best practices for using BigQuery isn’t of much benefit if your data is not secure. Here are some of the key strategies for securing your data in BigQuery:

- Use Google Cloud's Identity and Access Management (IAM) to manage access restrictions and permissions for BigQuery datasets and tables.

- In IAM, apply the principle of least privilege so that each user or service account has only the access it needs.

- Use encryption to protect your data at rest and in transit. BigQuery encrypts data at rest by default, and you can control the keys yourself with customer-managed encryption keys (CMEK) in Cloud KMS. Data in transit is protected with Transport Layer Security (TLS).
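As one possible illustration of least privilege, the sketch below grants a single user read-only access to one dataset rather than a broader project-level role. The dataset ID and email address are placeholders, and the grant itself assumes the `google-cloud-bigquery` client library with credentials that are allowed to update the dataset.

```python
def read_only_grant(email):
    """Describe a dataset-level grant that allows reading but not writing."""
    return {"role": "READER", "entity_type": "userByEmail", "entity_id": email}


def grant_dataset_read_access(dataset_id, email):
    """Append a read-only access entry to a dataset (needs credentials)."""
    from google.cloud import bigquery  # imported lazily so the helper above works without it

    client = bigquery.Client()
    dataset = client.get_dataset(dataset_id)
    entries = list(dataset.access_entries)
    entries.append(bigquery.AccessEntry(**read_only_grant(email)))
    dataset.access_entries = entries
    # Send only the changed field back to BigQuery.
    client.update_dataset(dataset, ["access_entries"])


# Placeholder address, for illustration only.
print(read_only_grant("analyst@example.com"))
```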

Schedule and Automate ETL Jobs

Scheduling and automating ETL workflows ensures the data is consistently transformed and loaded. Google Cloud offers tools such as Cloud Scheduler and Cloud Functions that allow you to trigger ETL jobs at predefined intervals.

Together, these tools ensure that data is loaded, transformed, and made available on a predefined schedule, helping you avoid manual intervention.

Additionally, avoiding repetitive ETL activities can help you focus on more strategic data management and analysis. This approach not only increases productivity but also enables more efficient decision-making processes.
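A scheduled job could look roughly like the sketch below: an HTTP-triggered Cloud Function that Cloud Scheduler calls on a cron schedule, and that processes the previous day's data on each run. The function name, the tables, and the SQL are hypothetical, and the BigQuery calls assume the `google-cloud-bigquery` client library is available in the function's environment.

```python
from datetime import date, datetime, timedelta, timezone


def partition_to_process(now):
    """Each run handles the previous UTC day, so a rerun targets the same data."""
    return (now.astimezone(timezone.utc) - timedelta(days=1)).date()


# Hypothetical transformation: aggregate one day of orders into a summary table.
DAILY_TOTALS_SQL = """
INSERT INTO `example_dataset.daily_totals` (event_date, customer_id, total_amount)
SELECT event_date, customer_id, SUM(amount)
FROM `example_dataset.orders`
WHERE event_date = @day
GROUP BY event_date, customer_id
"""


def run_daily_etl(request):
    """HTTP entry point; Cloud Scheduler would call this function's URL."""
    from google.cloud import bigquery  # imported lazily so the helper above works without it

    day = partition_to_process(datetime.now(timezone.utc))
    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("day", "DATE", day)]
    )
    client.query(DAILY_TOTALS_SQL, job_config=job_config).result()
    return f"processed {day.isoformat()}"


print(partition_to_process(datetime(2024, 3, 1, 0, 30, tzinfo=timezone.utc)))
```

Deriving the target day from the run time, rather than hard-coding it, keeps each scheduled run independent of the previous one.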

Data Validation

Data validation involves implementing systematic checks and validation processes to ensure that data stored in BigQuery meets your quality standards. It covers tasks such as checking for null or missing values, confirming data types and formats, and more. Below are some of the benefits of data validation in BigQuery:

- It reduces the risk of incorrect decisions by carefully checking data at each level of the ETL process.

- It prevents incomplete data from entering the analytical systems.

- Data validation in Google BigQuery ETL is a safeguard that ensures the information utilized for analysis is reliable and aligns with your objectives.
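A null check of the kind described above might be sketched as follows. The helper builds a query that counts missing values per required column, and a second helper reports which checks failed given the query's single result row. The table and column names are hypothetical, and the result row is represented here by a plain dict.

```python
def null_check_query(table, required_columns):
    """Build a query that counts NULLs in each required column."""
    checks = ",\n".join(
        f"  COUNTIF({col} IS NULL) AS {col}_nulls" for col in required_columns
    )
    return f"SELECT\n  COUNT(*) AS total_rows,\n{checks}\nFROM `{table}`"


def failed_checks(result_row):
    """Return the null-count fields that are greater than zero."""
    return sorted(
        name
        for name, value in result_row.items()
        if name.endswith("_nulls") and value > 0
    )


# Hypothetical table and columns, for illustration only.
validation_sql = null_check_query("example_dataset.orders", ["order_id", "amount"])
print(validation_sql)

# Example result row, as a validation run might return it.
print(failed_checks({"total_rows": 100, "order_id_nulls": 0, "amount_nulls": 3}))
```

A pipeline could stop the load, or route the batch for review, whenever `failed_checks` returns a non-empty list.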

Federated Tables for Ad Hoc Analysis

Federated tables provide access to external data. With federated tables, you don't have to import data that is stored outside BigQuery into its native storage. Instead, you can query it directly from sources such as Google Cloud Storage, Google Sheets, or even external databases such as MySQL on Cloud SQL.

This best practice is commonly used for ad hoc analysis since it allows you to explore and learn from data quickly. However, when compared to native BigQuery storage, the method has some limitations, including:

- Query performance is not as good as native BigQuery storage.

- Supported file formats vary by source, so confirm that the format you use (for example, Parquet or ORC) is supported before relying on this approach.

- Consistency is not guaranteed if the data changes while querying.

- The query result data is not cached.
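For files in Cloud Storage, a federated table can be defined with a `CREATE EXTERNAL TABLE` statement. The sketch below only builds that statement; the dataset, table, and bucket names are hypothetical, and CSV is used as the example format.

```python
def external_table_ddl(table, uris, file_format="CSV"):
    """Build a CREATE EXTERNAL TABLE statement over files in Cloud Storage."""
    if not uris:
        raise ValueError("at least one source URI is required")
    uri_list = ", ".join(f"'{uri}'" for uri in uris)
    return (
        f"CREATE EXTERNAL TABLE `{table}`\n"
        f"OPTIONS (\n"
        f"  format = '{file_format}',\n"
        f"  uris = [{uri_list}]\n"
        f")"
    )


# Hypothetical names, for illustration only.
external_ddl = external_table_ddl(
    "example_dataset.orders_external",
    ["gs://example-etl-bucket/staging/orders-*.csv"],
)
print(external_ddl)
```

Once the table exists, it can be queried like any other table, with the limitations listed above.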

The Takeaway

Throughout this article, we’ve delved into the nine Google BigQuery ETL best practices, providing valuable insight for data management and analytics. By following these practices, you can streamline your ETL process when working with BigQuery.

However, applying these best practices manually can be time-consuming and expensive. You might also need a dedicated team for the work, which can be inefficient.

By using SaaS tools like Estuary Flow, you can reduce manual effort and improve the effectiveness of your ETL process. It offers automation capabilities to build your pipeline, an easy-to-use interface to orchestrate workflows, and dedicated customer support when you need it.

Without requiring intensive code, you can set up a data pipeline in Flow for a fully automated and secure data migration process. Sign up here and start using Flow for your data integration needs.
