Any organization looking to use data for real-time analytics and decision-making often needs to integrate data across diverse systems. If your organization uses Apache Kafka, a robust stream-processing platform, for event streaming, consider connecting Kafka to MongoDB, a leading NoSQL database known for its flexible, scalable storage. This integration can improve data availability and real-time processing capabilities.

A Kafka to MongoDB connection is particularly beneficial if you need to process large volumes of data for complex querying and analysis. The setup helps you capture, store, and analyze data in real time for better decision-making and business outcomes.

Let’s quickly look at both platforms and their features before covering the different methods to integrate Kafka with MongoDB.

Apache Kafka – Source

Apache Kafka is a popular open-source data streaming platform primarily used to manage data from multiple sources and deliver it to diverse consumers. It enables efficient publication, storage, and processing of streams of records and allows the simultaneous streaming of massive data volumes in real time.
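The core abstraction behind this is an append-only log. The sketch below is a simplified, in-memory model (the `PartitionLog` class is hypothetical, not part of any Kafka client): each appended record receives a sequential offset, reading does not remove records, and each consumer keeps its own offset, so several consumers can process the same stream independently. Real Kafka stores these logs durably on brokers and tracks consumer-group offsets for you.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Hypothetical in-memory model of one Kafka topic partition."""

    records: list = field(default_factory=list)

    def append(self, value: str) -> int:
        """Append a record to the end of the log and return its offset."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records records starting at offset.

        Reading never removes records, so other consumers still see them.
        """
        return self.records[offset:offset + max_records]


log = PartitionLog()
for event in ["signup:alice", "signup:bob", "signup:carol"]:
    log.append(event)

# Each consumer tracks its own position in the same log.
analytics_offset = 0  # has not read anything yet
billing_offset = 2    # has already processed the first two records

print(log.read(analytics_offset))  # ['signup:alice', 'signup:bob', 'signup:carol']
print(log.read(billing_offset))    # ['signup:carol']
```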

Key Features of Apache Kafka

- Distributed Architecture: Kafka runs as a cluster of brokers and spreads each topic's partitions across them. Partitions can also be replicated to several brokers, so the cluster can handle vast amounts of data while tolerating the failure of individual nodes.

- High Throughput: Kafka excels in handling massive data volumes without compromising performance. This is achieved by distributing data across multiple partitions and allowing parallel processing, ensuring Kafka can maintain high throughput even as data volume increases.

- Connectivity: Through Kafka Connect, Kafka integrates with a wide range of external systems, such as databases, data lakes, and other applications, both as sources and as sinks. This lets Kafka act as a central hub for streaming and distributing data within an organization's infrastructure.
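The High Throughput point above relies on partitioning, which can be sketched in a few lines of Python. This is a simplified model, not Kafka's producer code: records with the same key always land in the same partition (preserving their relative order), while different keys spread across partitions that brokers and consumers can handle in parallel. The function names are hypothetical, and CRC32 stands in for the murmur2 hash that Kafka's Java producer uses by default for keyed records, so the partition numbers will not match a real cluster.

```python
import zlib
from collections import defaultdict


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition so the same key always gets the same partition.

    Stand-in hash: Kafka's Java producer uses murmur2 for keyed records, so real
    partition numbers will differ from the ones computed here.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(records, num_partitions):
    """Group (key, value) records by partition, keeping arrival order within each partition."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return dict(partitions)


events = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "purchase"),
    ("user-3", "login"),
]
by_partition = assign(events, num_partitions=3)
for partition, records in sorted(by_partition.items()):
    # Each partition could be read by a different consumer in parallel.
    print(partition, records)
```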

MongoDB – Destination

MongoDB is a scalable and flexible NoSQL document database designed to overcome the rigidity of traditional relational databases. Unlike relational databases, which require a predefined schema, MongoDB stores data as JSON-like documents (BSON) with a dynamic schema, so documents in the same collection can have different fields and structures. It handles data storage, management, and retrieval well and supports high-volume workloads.

In addition, MongoDB offers MongoDB Atlas, a fully managed cloud service that simplifies the deployment, management, and scaling of MongoDB databases.

Key Features of MongoDB

MongoDB provides several features that make it a good choice for a wide range of applications, including:

- Sharding: Sharding divides large datasets across multiple machines, called shards, and is how MongoDB scales horizontally. Each shard is an independent database (typically a replica set) that holds a portion of the data, determined by a shard key, while a query router (mongos) directs each operation to the shards that hold the relevant data.

- Replication: MongoDB uses replica sets to achieve replication. A primary server accepts all write operations, which are then replicated to secondary servers. If the primary fails, an eligible secondary is elected as the new primary.

- Database Triggers: Database triggers in MongoDB Atlas let you execute code in response to specific database events, such as document inserts, updates, or deletes. Scheduled triggers can also run code at predetermined times.

- Authentication: Authentication is a vital security feature in MongoDB, ensuring only authorized users can access the database. MongoDB offers various authentication mechanisms, with the Salted Challenge Response Authentication Mechanism (SCRAM) being the default.
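Sharding is easier to picture with a concrete routing rule. The sketch below models ranged sharding on a hypothetical `user_id` shard key: the key space is split into chunks, each chunk is owned by one shard, and a router (the role mongos plays in a real cluster) sends each query only to the shards whose chunks it touches. The split points and shard names are made up; in MongoDB, config servers track chunk ownership, the balancer moves chunks between shards, and hashed sharding is available as an alternative to ranges.

```python
import bisect

# Hypothetical ranged sharding on a "user_id" shard key.
# Chunk 0: user_id < 1000, chunk 1: 1000 <= user_id < 2000, chunk 2: user_id >= 2000.
SPLIT_POINTS = [1000, 2000]
CHUNK_OWNERS = ["shard-a", "shard-b", "shard-c"]  # one owning shard per chunk


def route(user_id: int) -> str:
    """Return the shard that owns the chunk containing user_id (a targeted lookup)."""
    return CHUNK_OWNERS[bisect.bisect_right(SPLIT_POINTS, user_id)]


def shards_for_range(low: int, high: int) -> set:
    """Return every shard a range query low <= user_id <= high has to visit."""
    first = bisect.bisect_right(SPLIT_POINTS, low)
    last = bisect.bisect_right(SPLIT_POINTS, high)
    return set(CHUNK_OWNERS[first:last + 1])


print(route(42))                            # shard-a
print(route(1500))                          # shard-b
print(sorted(shards_for_range(900, 1100)))  # ['shard-a', 'shard-b']
```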

Methods to Connect Kafka to MongoDB

Now, let’s look at the two ways to connect Kafka to MongoDB.

- Method 1: Use Estuary Flow to Connect Kafka to MongoDB

- Method 2: Use MongoDB as a Sink to Connect Kafka with MongoDB

Method 1: Use Estuary Flow to Connect Kafka to MongoDB

Estuary Flow is a versatile data integration platform with no-code automation capabilities. Its user-friendly interface and pre-built connectors move data from source to destination in real time, and its connector library covers both Kafka and MongoDB.

Key Benefits of Estuary Flow

Some of the top features of Flow include:

- No-code Connectivity: Estuary Flow provides 300+ ready-to-use connectors, so you can sync a source and destination with just a few clicks. Configuring these connectors requires no code.

- Streaming or Batch Processing: Estuary Flow supports both streaming and batch processing. You can transform and merge data from various sources before loading it into the destination (ETL), after loading (ELT), or both (ETLT). Flow supports streaming or batch transforms in SQL or TypeScript for ETL, and dbt for ELT.

- Real-time Data Processing: Estuary Flow supports real-time data streaming and migration, continuously capturing and replicating data across platforms with millisecond-level latency, so data is available for use without significant lag.

- Change Data Capture: Change Data Capture (CDC) keeps data synchronized across systems with low latency. Any new or changed data in the Kafka topic is automatically reflected in MongoDB without manual intervention.

- Scalability: Estuary Flow is structured for horizontal scaling, allowing it to handle large volumes of data efficiently. This scalability feature makes it suitable for organizations of all sizes, effectively accommodating small-scale and large-scale operations.
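The CDC behavior described above boils down to replaying an ordered stream of change events against the destination. Here is a minimal sketch under simplifying assumptions: the event shape (`op`, `_id`, `doc`) is hypothetical, the "collection" is a Python dict keyed by `_id`, and inserts and updates are applied as upserts. It illustrates the idea only; it is not how Estuary Flow or MongoDB implement it.

```python
from typing import Any


def apply_change(collection: dict[str, dict[str, Any]], event: dict[str, Any]) -> None:
    """Apply one hypothetical change event to an in-memory collection keyed by _id.

    Inserts and updates are treated as upserts (the latest version wins);
    deletes remove the document if it exists.
    """
    op = event["op"]
    doc_id = event["_id"]
    if op in ("insert", "update"):
        collection[doc_id] = event["doc"]
    elif op == "delete":
        collection.pop(doc_id, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


users: dict[str, dict[str, Any]] = {}
changes = [
    {"op": "insert", "_id": "u1", "doc": {"email": "a@example.com"}},
    {"op": "insert", "_id": "u2", "doc": {"email": "b@example.com"}},
    {"op": "update", "_id": "u1", "doc": {"email": "a.new@example.com"}},
    {"op": "delete", "_id": "u2"},
]
# Replaying the events in order leaves the destination matching the source.
for change in changes:
    apply_change(users, change)

print(users)  # {'u1': {'email': 'a.new@example.com'}}
```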

Prerequisites

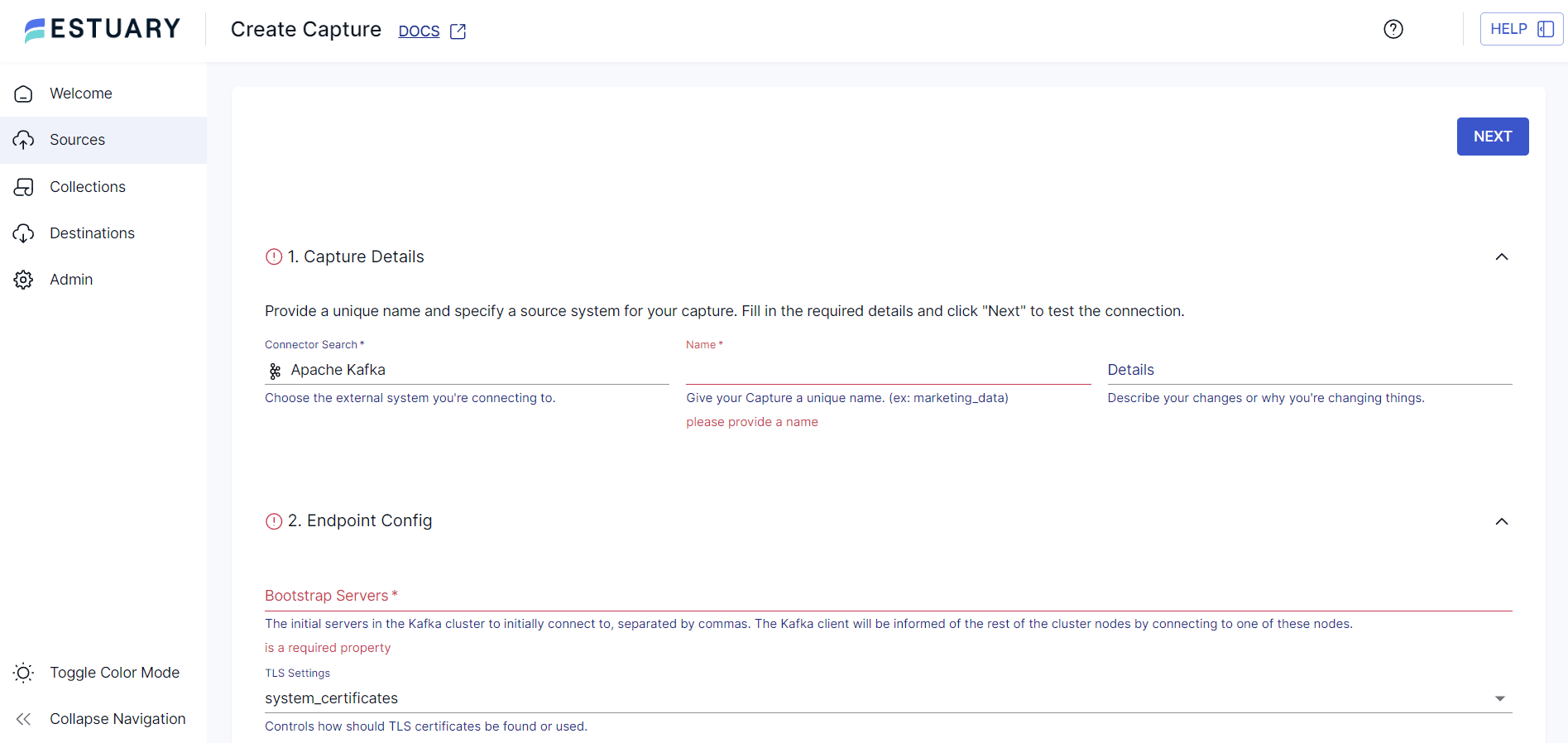

Step 1: Connect to Kafka as a Source

- Sign in to your Estuary account.

- To start configuring Kafka as the source end of the data pipeline, click Sources from the left-side navigation pane of the dashboard. Then, click the + NEW CAPTURE button.

- Type Kafka in the Search connectors field. When you see the Apache Kafka source connector in the search results, click its Capture button.

- On the Create Capture page, specify details like a unique Name, Bootstrap Servers, and TLS Settings. In the Credentials section, select your choice of authentication from SASL or AWS MSK IAM.

- To finish the configuration, click NEXT > SAVE AND PUBLISH. The connector will capture streaming data from your Kafka topics into Flow collections.

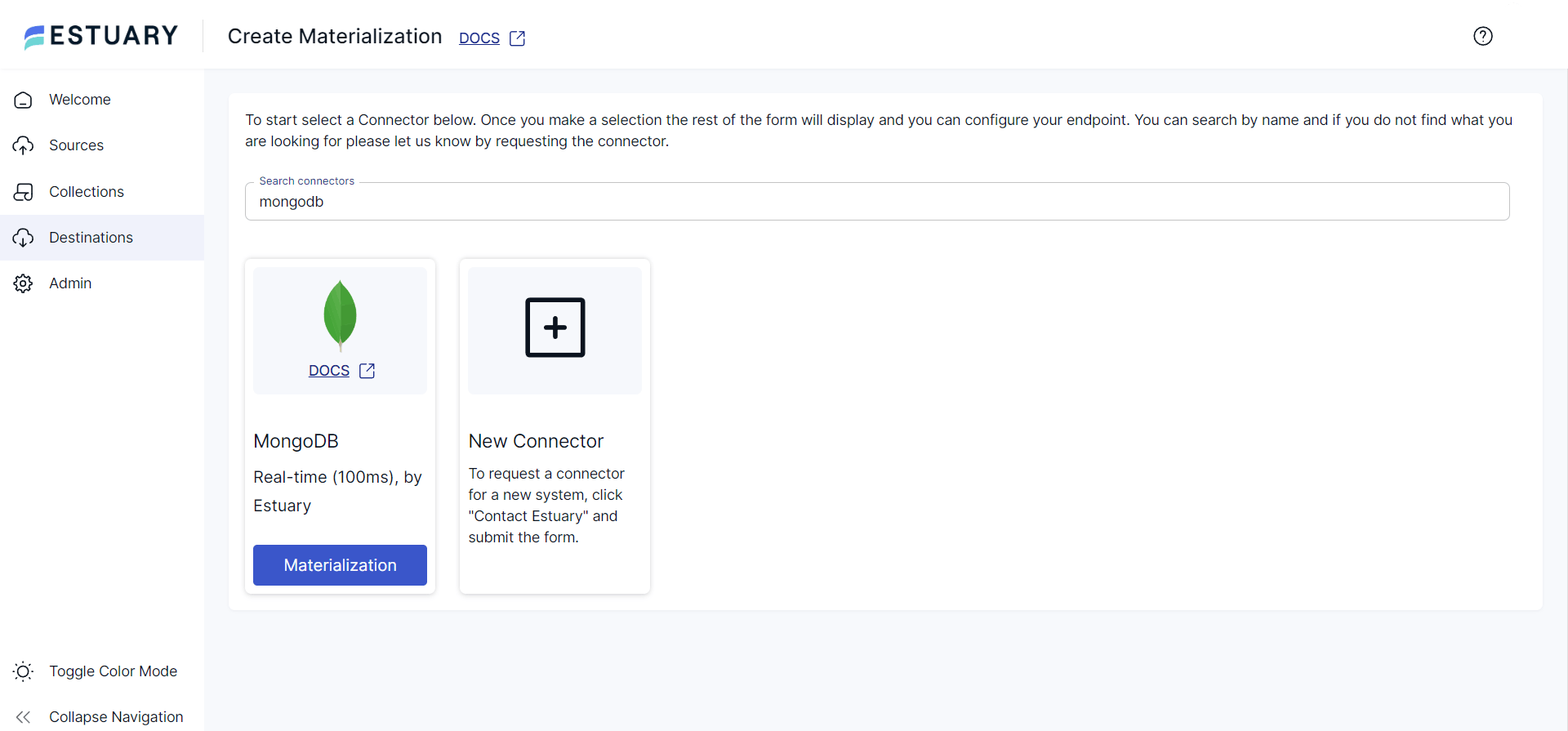
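The Bootstrap Servers value typically follows the same convention as Kafka's own `bootstrap.servers` setting: a comma-separated list of `host:port` pairs for one or more brokers, which the client uses to discover the rest of the cluster. A quick local check like the hypothetical helper below can catch typos, such as a missing port, before you publish the capture. It only validates the string's format; it does not confirm that the brokers are reachable.

```python
def parse_bootstrap_servers(value: str) -> list[tuple[str, int]]:
    """Split a comma-separated "host:port" list into (host, port) pairs.

    Raises ValueError for entries without a valid port or for an empty list.
    """
    servers = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing comma
        # rpartition splits on the last colon, so bracketed IPv6 hosts also work.
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        port_num = int(port)
        if not 0 < port_num < 65536:
            raise ValueError(f"port out of range in {entry!r}")
        servers.append((host, port_num))
    if not servers:
        raise ValueError("no bootstrap servers given")
    return servers


# Hypothetical broker addresses.
print(parse_bootstrap_servers("broker-1.example.com:9092, broker-2.example.com:9092"))
```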

Step 2: Connect to MongoDB as a Destination

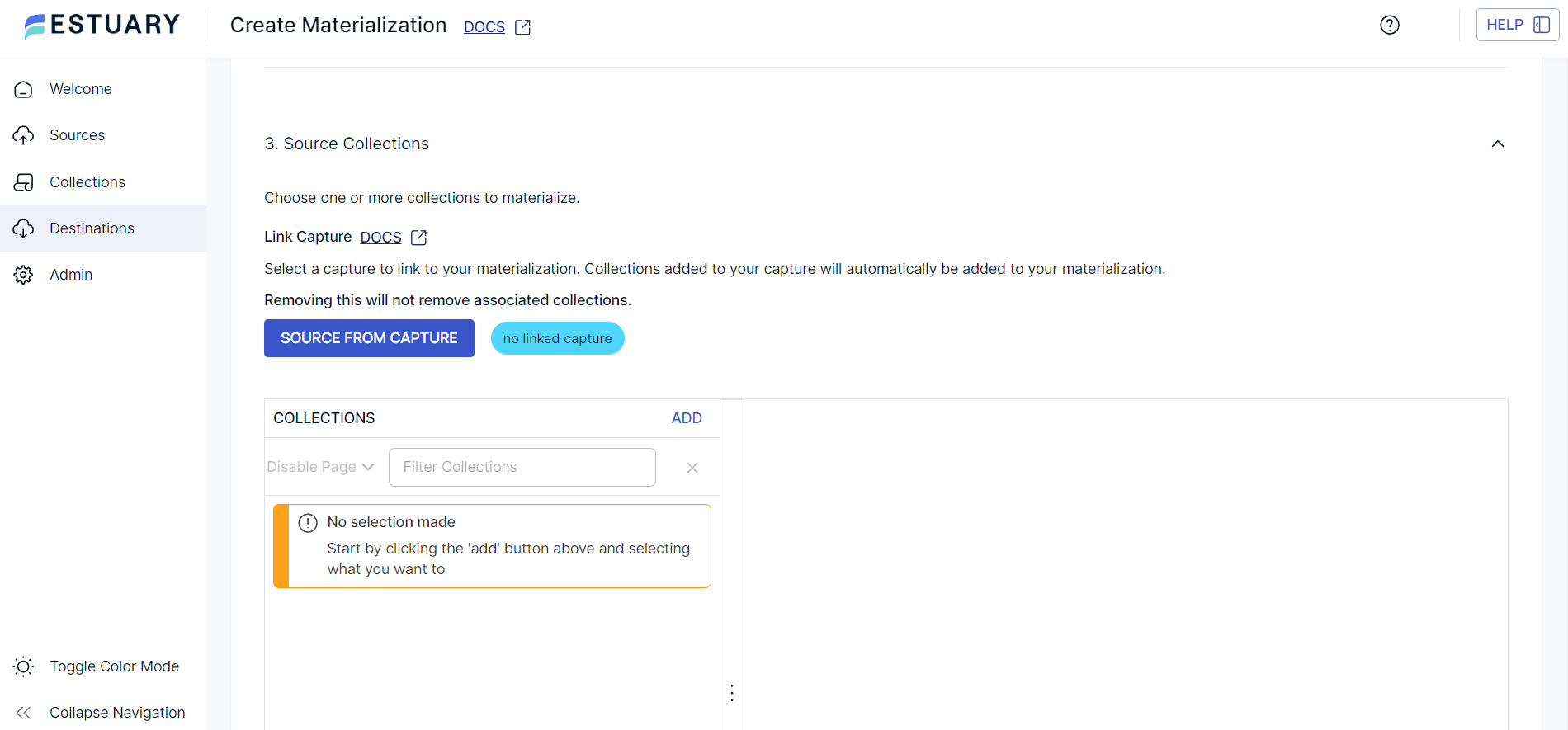

- To start configuring the destination end of the data pipeline, click the Destinations option on the main dashboard.

- Then click the + NEW MATERIALIZATION button.

- Now, search for MongoDB using the Search connectors field. Click the Materialization button of the MongoDB connector to start the configuration process.

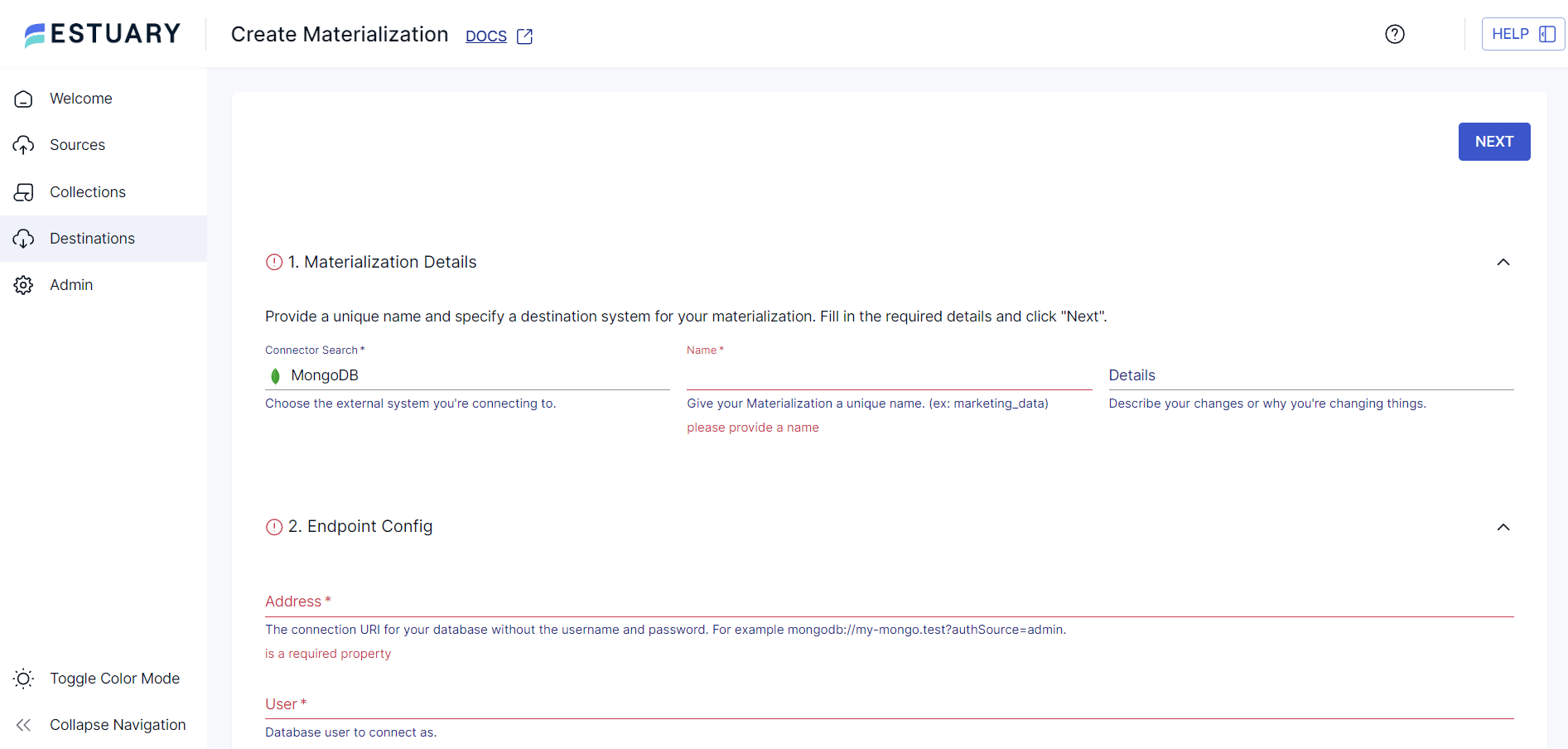

- On the Create Materialization page, fill in the details such as Name, Address, User, Password, and Database.

- Click the SOURCE FROM CAPTURE button in the Source Collections section to link a capture to your materialization.

- Click NEXT > SAVE AND PUBLISH to finish the destination configuration. The connector will materialize Flow collections into MongoDB collections.
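The materialization asks for the address, user, password, and database as separate fields. If you want to test the same credentials outside Flow (for example, with mongosh), you need to combine them into a connection string, and any special characters such as `@`, `:`, or `/` in the username or password must be percent-encoded. The helper below is a hypothetical sketch of that step; the host name and credentials in the usage line are placeholders.

```python
from urllib.parse import quote_plus


def build_mongodb_uri(address: str, user: str, password: str, database: str) -> str:
    """Combine separate connection fields into a MongoDB connection string.

    Username and password are percent-encoded; otherwise characters such as
    '@', ':' or '/' would make the URI parser split the string in the wrong place.
    An address that already carries a scheme (e.g. mongodb+srv://) keeps it.
    """
    if "://" in address:
        scheme, host = address.split("://", 1)
    else:
        scheme, host = "mongodb", address
    return f"{scheme}://{quote_plus(user)}:{quote_plus(password)}@{host}/{database}"


# Placeholder values for illustration only.
uri = build_mongodb_uri("cluster0.example.mongodb.net", "flow_user", "p@ss:word/1", "BigBoxStore")
print(uri)  # mongodb://flow_user:p%40ss%3Aword%2F1@cluster0.example.mongodb.net/BigBoxStore
```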

Method 2: Use MongoDB as a Sink to Connect Kafka with MongoDB

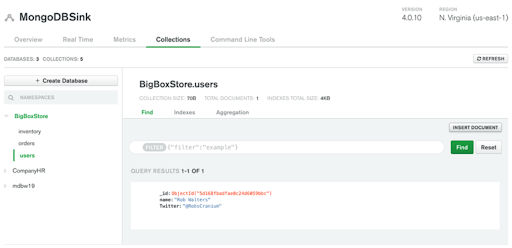

Let's consider a use case to better understand the MongoDB sink connector.

- When new users register on the website, multiple departments need their contact details.

- The contact information is stored in a Kafka topic named newuser for shared access.

- Subsequently, MongoDB is configured as a sink for the Kafka topic using the MongoDB Sink Connector. This setup allows the propagation of new user information to a users collection in MongoDB.

- To configure the MongoDB Sink Connector for this use case, issue a REST API call to the Kafka Connect service as follows:

```plaintext
curl -X PUT http://localhost:8083/connectors/sink-mongodb-users/config \
  -H "Content-Type: application/json" \
  -d '{
    "connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",
    "tasks.max": "1",
    "topics": "newuser",
    "connection.uri": "<>",
    "database": "BigBoxStore",
    "collection": "users",
    "key.converter": "org.apache.kafka.connect.json.JsonConverter",
    "key.converter.schemas.enable": "false",
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "false"
  }'
```

- To test this setup, use kafkacat (now distributed as kcat) to publish a test message to the newuser topic:

```plaintext
kafkacat -b localhost:9092 -t newuser -P <
```

- To verify that the message has been successfully transmitted to your MongoDB database, establish a connection to MongoDB using your preferred client tool and execute the db.users.find() command.

- If you wish to use MongoDB Atlas, you can navigate to the Collections tab to view the databases and collections present in your cluster.
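If you prefer to script the connector setup rather than run curl by hand, the same PUT request can be built with Python's standard library. This is a sketch, assuming a Kafka Connect worker on localhost:8083 as in the curl example; the connection URI is a hypothetical local placeholder, and the request is only constructed, with the line that actually sends it left commented out. PUT /connectors/{name}/config creates the connector if it does not exist and updates its configuration otherwise, so re-running the script is safe.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # Kafka Connect REST endpoint, as in the curl example


def build_sink_request(name: str, config: dict) -> urllib.request.Request:
    """Build (but do not send) a PUT /connectors/<name>/config request."""
    body = json.dumps(config).encode("utf-8")
    return urllib.request.Request(
        url=f"{CONNECT_URL}/connectors/{name}/config",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


sink_config = {
    "connector.class": "com.mongodb.kafka.connect.MongoSinkConnector",
    "tasks.max": "1",
    "topics": "newuser",
    "connection.uri": "mongodb://localhost:27017",  # hypothetical local URI
    "database": "BigBoxStore",
    "collection": "users",
    "key.converter": "org.apache.kafka.connect.json.JsonConverter",
    "key.converter.schemas.enable": "false",
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "false",
}

request = build_sink_request("sink-mongodb-users", sink_config)

# With schemas.enable=false, JsonConverter expects plain JSON message values such as
# this hypothetical new-user record, without a {"schema": ..., "payload": ...} envelope.
sample_value = json.dumps({"name": "Jane Doe", "email": "jane@example.com"})

# To submit the request against a running Kafka Connect worker:
# with urllib.request.urlopen(request) as response:
#     print(response.status, json.load(response))
```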

The MongoDB sink connector supports many use cases, from microservice patterns to event-driven architectures. See the MongoDB Kafka Connector documentation to learn more.

Limitations of Using MongoDB Sink Connector

- Need for Constant Monitoring: Despite the automation provided by the sink connector, constant monitoring is required to ensure the timely resolution of any errors.

- Increased Setup Time: Setting up the MongoDB sink connector involves time-consuming configuration steps and requires technical expertise in both Kafka and MongoDB.
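Monitoring can be partly automated. Kafka Connect exposes a status endpoint, GET /connectors/{name}/status, that reports the state of the connector and each of its tasks. The sketch below parses such a response and lists anything not in the RUNNING state; the sample body is illustrative (made-up worker addresses and error text, with the shape of Kafka Connect's status response), and the function name is hypothetical. In practice you would poll the endpoint on a schedule and alert on failures; a failed task can be restarted with POST /connectors/{name}/tasks/{id}/restart.

```python
import json

# Illustrative response body from GET /connectors/sink-mongodb-users/status.
status_json = """
{
  "name": "sink-mongodb-users",
  "connector": {"state": "RUNNING", "worker_id": "10.0.0.5:8083"},
  "tasks": [
    {"id": 0, "state": "FAILED", "worker_id": "10.0.0.5:8083",
     "trace": "org.apache.kafka.connect.errors.DataException: example failure"}
  ],
  "type": "sink"
}
"""


def unhealthy_parts(status: dict) -> list[str]:
    """Return a description of every connector or task that is not RUNNING."""
    problems = []
    connector_state = status["connector"]["state"]
    if connector_state != "RUNNING":
        problems.append(f"connector is {connector_state}")
    for task in status.get("tasks", []):
        if task["state"] != "RUNNING":
            problems.append(f"task {task['id']} is {task['state']}")
    return problems


print(unhealthy_parts(json.loads(status_json)))  # ['task 0 is FAILED']
```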

Conclusion

Moving data from Kafka to MongoDB offers several advantages, such as auditing capabilities, ad hoc queries, easier maintenance and scaling of data infrastructure, and the ability to accommodate growing data volumes and query loads. There are two approaches to connecting Kafka and MongoDB.

One of the methods is to use the MongoDB sink connector to transfer data from Kafka to MongoDB. However, this approach has limitations, such as being time-consuming, error-prone, and requiring continuous monitoring.

Consider using a data pipeline solution like Estuary Flow to avoid these drawbacks when replicating Kafka data to MongoDB. With ready-to-use connectors, an intuitive interface, and CDC capabilities, Estuary Flow streamlines the integration process.

Are you looking to migrate data between different platforms? With its impressive features and 300+ pre-built connectors, Estuary Flow is likely the best solution for your varied data integration needs. Sign up for your free account and get started!

Frequently Asked Questions

- Is there any performance impact when streaming data from Kafka to MongoDB?

Performance depends on factors such as data volume, network latency, hardware resources, and the efficiency of your data processing pipelines. Properly configured Kafka and MongoDB clusters, together with efficient data serialization and batching strategies, help minimize overhead and keep data streaming smoothly between Kafka and MongoDB.

- What are the benefits of using Kafka with MongoDB?

Using Kafka with MongoDB enables real-time data processing and analysis by streaming data directly into MongoDB. This ensures faster data insights and decision-making capabilities based on the latest data. It also provides scalability and fault tolerance, as Kafka can handle large volumes of data streams, and MongoDB can scale horizontally to accommodate growing data needs.
