Growing businesses have to deal with higher volumes of data, requiring effective storage and analytical solutions. One of the strategic moves for efficient data management and utilization is to migrate data from one database system to another. This allows enterprises to leverage more powerful capabilities, like advanced analytics and backups, of the destination database.

The migration from MariaDB to Postgres is a popular solution among organizations. The advanced querying capabilities and extensibility of Postgres are some of the driving factors behind this migration.

Whether you’re seeking to harness the vast potential of Postgres or upgrading your database infrastructure, there are different methods that can help you achieve a MariaDB to Postgres migration easily. Let’s look at an overview of both these platforms before getting to know the ways to migrate your data.

An Overview of MariaDB

MariaDB, an open-source relational database management system (RDBMS), was developed in 2009 by the original creators of MySQL.

It is primarily designed as a drop-in replacement for MySQL. Therefore, tools and applications that are compatible with MySQL don’t require any significant modifications to work with MariaDB. Similar to MySQL, MariaDB also supports external plugins to help enhance database functionalities. This allows you to extend the database and apply it in more use cases, such as data warehousing and logging applications.

However, MariaDB performs better than MySQL in querying views from the database. While MySQL queries all tables connected to the desired view, MariaDB only queries the tables specified in the query. Additionally, when compared to MySQL, MariaDB supports more storage engines, including TokuDB, Spider, and Connect.

An Overview of Postgres

PostgreSQL, commonly referred to as Postgres, is an open-source relational database management system (RDBMS) that was originally developed at the University of California, Berkeley. However, it is now being actively maintained and developed by a global community of developers.

Postgres supports both SQL (relational) and JSON (non-relational) querying, providing you the flexibility to handle structured and semi-structured data. For querying structured data, Postgres conforms with the SQL standard, allowing you to write queries that are compatible with other relational databases. And to handle semi-structured data, Postgres has native support for JSON and JSONB data types. It also provides a rich set of operators and functions for querying JSON data.

While Postgres supports a wide range of built-in data types, including integers, arrays, strings, XML, JSON, and geometrical data types, it also allows you to create custom data types. Apart from this, you can define custom operators, functions, and procedural languages by using extensions and user-defined functions.

Methods to Migrate Data from MariaDB to Postgres

There are three methods that will help you move your data from MariaDB to Postgres.

- Method #1: Using CSV files

- Method #2: Using pgloader

- Method #3: Using no-code ETL tools like Estuary Flow

Method #1: Using CSV Files to Manually Replicate Tables from MariaDB to Postgres

You can export your data from MariaDB as CSV files to upload to PostgreSQL. Here are the steps to get this done:

Step 1: Export data from MariaDB

MariaDB accepts SQL commands similar to MySQL. Use the INTO OUTFILE command to export your MariaDB table as a CSV file. Here’s an example of how you can use this command:

plaintextselect * from customers

INTO OUTFILE 'customers.csv'

FIELDS ENCLOSED BY '"'

TERMINATED BY ','

ESCAPED BY '"'

LINES TERMINATED BY '\n';This command will export data from the customers table as a customers.csv file.

Step 2: Import the CSV File to PostgreSQL

To import the CSV file into a Postgres database, you can use the COPY command as follows:

plaintextCOPY customers(first_name,last_name,contact_number,city)

FROM ‘C:customers.csv’

DELIMITER ‘,’

CSV HEADER;This command will copy data from the source file customers.csv to the target table named customers with the list of columns specified in parentheses.

You can apply the same procedure to copy data from MariaDB to Postgres for other tables as well. However, if you're dealing with a huge number of tables, the process of replicating data can become time-consuming.

To import multiple files into a table at once, you can follow the steps mentioned here.

Method #2: Using pgloader to Migrate Data from MariaDB to Postgres

Pgloader is an open-source command-line data-loading tool for loading data from databases like MySQL, MariaDB, SQLite, and SQL Server to PostgreSQL. Designed to handle large volumes of data, while loading data, it can also perform data transformations in parallel. Some of the data transformations that pgloader manages include schema differences, data encoding, data type conversions, and more.

Here are the steps involved in using pgloader for MariaDB to Postgres migration:

Step 1: Update and upgrade the apt package on your system with the following command:

plaintextapt update && apt upgrade -yStep 2: Ensure you have pgloader installed on your system. If you don’t have it yet, use the following command to install pgloader:

plaintextapt install pgloaderStep 3: To verify the installation is successful, check the version of pgloader installed by using the following command:

plaintextpgloader --versionStep 4: Ensure you have a PostgreSQL database where you want to load the data. Then, run the following command:

plaintextpgloader mysql://mysqluser:password@<mysql-server>:<mysql-port>/<source-database> postgresql://<pgsql-role>:password@<pgsql_server>:<postgresql-port>/<target-database>If pgloader is unable to perform the data type conversion successfully during the data loading process, you may witness an error. But you can fix it by executing the cast modifier commands first, followed by the pgloader command mentioned above. Here’s an example of the cast modifier commands:

plaintextpgloader --cast "type float to real drop typemod" \ --cast "type int to integer drop typemod" \ --cast "type bigint when (= 20 precision) to bigint drop typemod" \This method will replicate your data from MariaDB to Postgres without requiring any code for data transformations. However, if you need more frequent data updates, like every hour or daily, this can get exhausting.To manage this, you can consider setting up a cron job.

Method #3: Using No-Code ETL Tools like Estuary Flow for MariaDB to Postgres Migration

While the previous methods may migrate your data from MariaDB to Postgres, they may not be the most efficient in terms of time and effort, nor can they achieve real-time data migration or manage schema evolution on your behalf. Using no-code ETL tools can help you overcome these challenges.

Estuary Flow is one such tool that will extract your data from the desired source and load it into the destination without requiring a single line of code. Here are some key features of Estuary Flow:

- Built-in Connectors: Flow offers a range of pre-built connectors for widely used data sources and destinations to simplify the process of building a data pipeline. You can use these connectors to set up the data pipeline in just a few clicks. And if you can’t find the connector you’re looking for, you can submit a request to the Estuary team to build it for you.

- Scalability: Flow is designed to handle large data volumes and scale seamlessly for growing data needs. This ensures zero data loss and improved data integrity.

- Real-time Data Streaming: Flow captures data from the source and transfers it to the destination, all with millisecond latency. This makes sure that your data is always up-to-date for real-time analysis.

To get started with Estuary Flow for integrating MariaDB and Postgres, you must register for an account. If you already have one, then log in to your Estuary account. Here are the steps to follow after logging in:

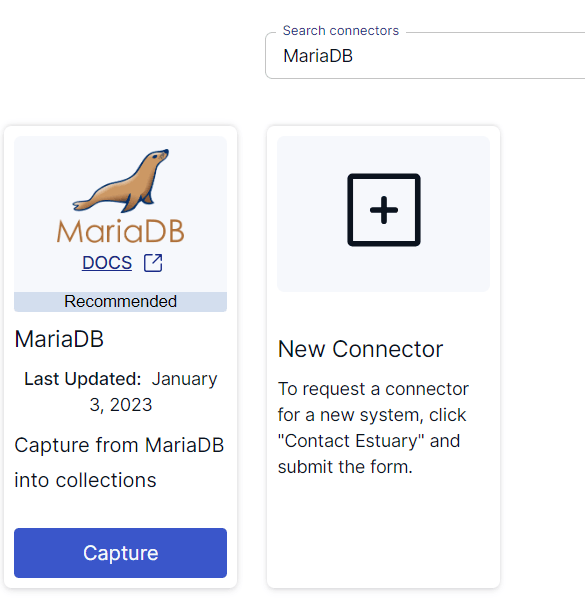

Step 1: Setting Up MariaDB as the Data Source

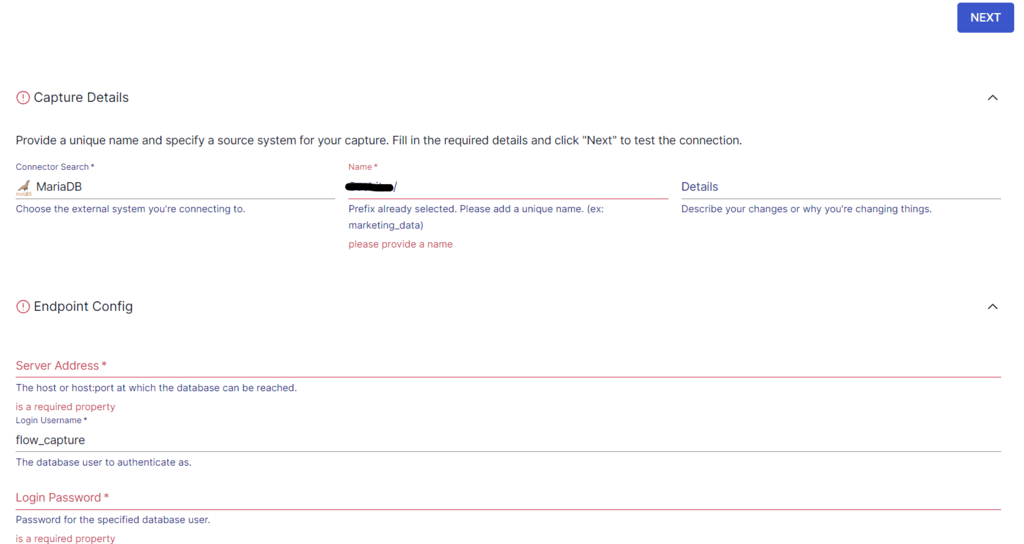

On the Estuary dashboard, click on Sources on the left-side pane. Then, click on + NEW CAPTURE and search for MariaDB in the Search connectors box. You will see the MariaDB connector appearing in the search results. Click on the connector’s Capture button.

There are certain prerequisites that you must fulfill before you use this connector. Read about the prerequisites here.

Specify the required details on the MariaDB connector page. These details include a Name for the connector, Server Address, and Login Password. After filling in the details, click on NEXT, then click on Save and Publish.

The MariaDB connector is a Change Data Capture (CDC) connector that captures change events from a MariaDB database via the Binary Log.

Step 2: Setting Up Postgres as the Destination

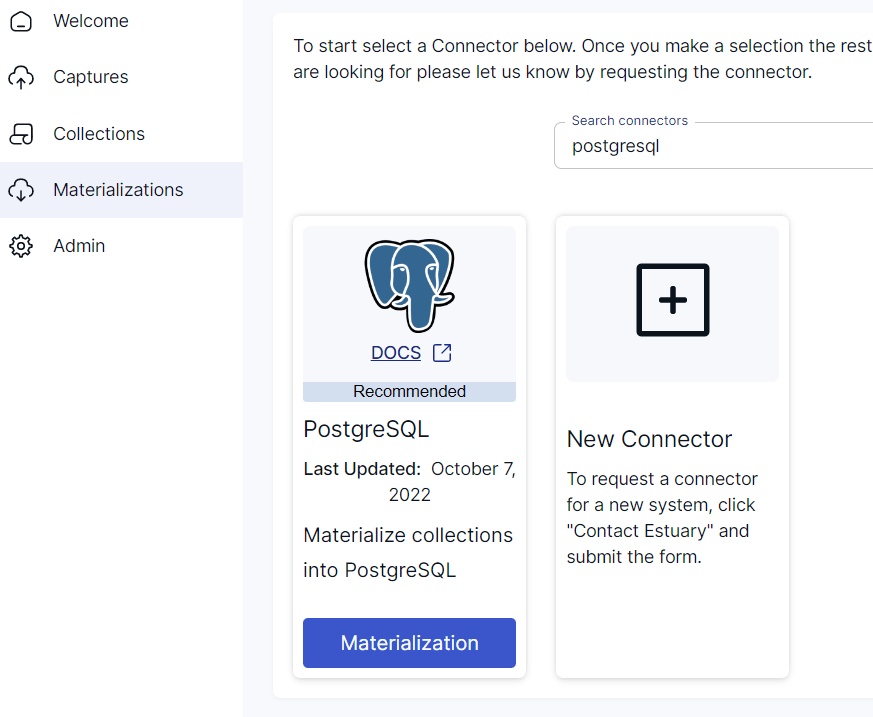

Now, you must set up the destination end of the pipeline. To do this, you can click on the Materialize Connections option that appears in the pop-up window following a successful capture. Alternatively, you can navigate to the Estuary dashboard and click on Destinations on the left-side pane. Next, click on the + NEW MATERIALIZATION button.

Search for Postgres in the Search connectors box. The search results will show the PostgreSQL connector, among other connector options. Click on the Materialization button of the connector.

Before you use this connector, ensure you complete the following prerequisites:

- A Postgres database and user credentials.

- At least one Flow collection.

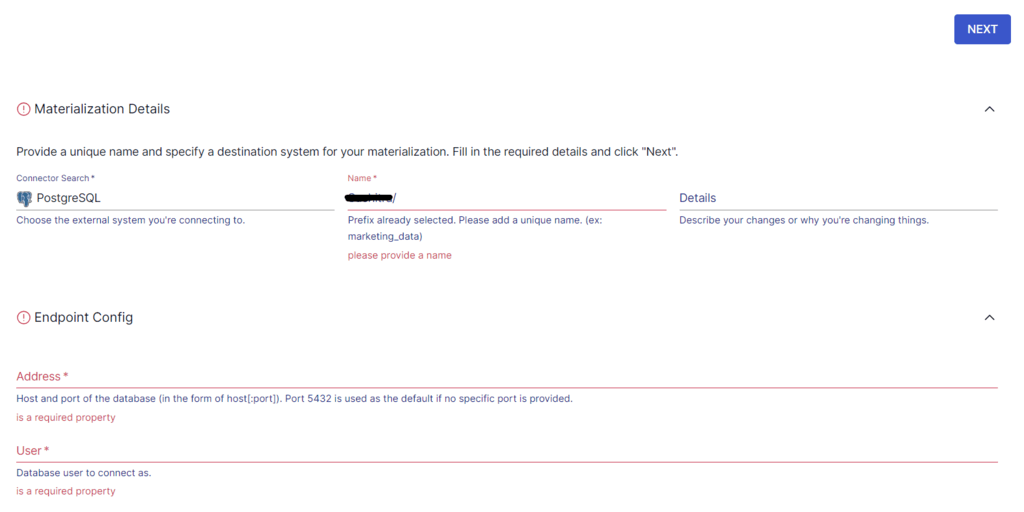

This will redirect you to the PostgreSQL connector page, where you must fill in some required details.

Specify the details, such as a Name for the connector, Address, User, and Password. While the Flow collections will be automatically selected for you, consider using the Source Collections feature to manually select collections you want to materialize into your Postgres database.

Click on NEXT, followed by Save and Publish. The connector will materialize Flow collections into tables in a PostgreSQL database.

For more information on the data migration process, read the Estuary documentation:

Conclusion

Moving data from MariaDB to Postgres helps unlock several benefits, such as the capabilities of advanced analytical querying, extensibility, and scalability. PostgreSQL is an appealing choice for your applications with complex data requirements.

There are three methods for migrating data from MariaDB to Postgres. You can use CSV files, pgloader, or no-code SaaS tools like Estuary Flow to complete this data migration. Among these methods, the one that requires minimal manual effort and maintenance and is the least time-consuming is the use of no-code tools.

Estuary Flow supports real-time data streaming and Change Data Capture (CDC) to capture change events in real time. This helps perform complex time-critical data analysis to gain actionable insights for improved data-driven decision-making. Register for your free Estuary account and start building your first data pipeline.

Author

Popular Articles