Businesses that use their data for real-time analytics and data governance often need robust data integration across different systems. If your business uses MySQL, a traditional relational database management system, consider connecting MySQL to Databricks, a unified platform for data, analytics, and AI.

Migrating data from MySQL to Databricks can significantly enhance your operational capabilities. The associated benefits include real-time analytics, improved data governance, and support for ETL and the orchestration of batch and streaming data. This integration helps facilitate efficient data processing and delivers crucial insights for decision-making.

This article explores two methods for connecting MySQL to Databricks.

An Overview of MySQL

MySQL is one of the most popular relational database management systems (RDBMS) available today, renowned for its reliability and performance. It organizes data into structured tables and lets you define relationships between those tables. The SQL in MySQL stands for Structured Query Language, which you use to create, modify, and extract data from relational databases.

Here are some key features of MySQL:

- High Performance: MySQL supports multiple storage engines, such as MyISAM and InnoDB, each optimized for different use cases. And, with efficient indexing of tables, MySQL enhances performance, speeding up data retrieval operations like SELECT queries. MySQL also employs various caching techniques to improve query response times.

- Robust Security Measures: MySQL provides robust security features, including host-based verification and support for secure password mechanisms. It also allows detailed control of user privileges that can be configured per host, per database, or even per table.

- Reliability: MySQL ensures data integrity and reliability with features like foreign key constraints and transactional support. Regular updates, bug fixes, and security patches help improve stability while keeping the system secure and up-to-date.

An Overview of Databricks

Databricks is a cloud-based platform that utilizes the Apache Spark big data processing engine to manage and analyze large datasets efficiently. It can handle diverse workloads, including batch processing, real-time streaming, and machine-learning tasks, making it highly versatile.

To handle such workloads, Databricks optimizes cloud resources with dynamic allocation and scaling to match your workload requirements. This ensures efficient data processing and helps in cost optimization.

Here are some of the key features of Databricks:

- Integrated Tools: Databricks offers comprehensive tools and services tailored for big data operations. These include support for multiple data formats, seamless integration with leading libraries and frameworks, and data analysis utilities.

- Data Governance: Databricks offers robust data governance services, including audits and compliance controls. These functionalities empower you to regulate data access, manage the data lifecycle, and fulfill your legal obligations and compliance objectives.

- Encryption: Databricks employs encryption to protect data at rest and in transit. The encryption measures cover data storage, network communication, and user authentication.

Why Migrate from MySQL to Databricks

Migrating from MySQL to Databricks can help unlock new data insights and improve decision-making. Let's examine some of the benefits of this migration.

- Unified Data Platform: Databricks offers a unified data platform that integrates all your data, analytics, and AI capabilities in a single environment. This simplifies data workflows, reduces integration complexity, and enables cross-functional collaboration.

- Build Machine Learning Models: Databricks provides cutting-edge tools like Databricks AutoML for automated ML model training and Unity Catalog for model management. You can utilize these tools for a streamlined, full-cycle development of machine learning models.

- Cost-Effective Scalability: Databricks optimizes resource allocation automatically, scaling up or down based on workloads. This helps reduce costs by ensuring you only pay for the resources you use.

Methods to Migrate Data from MySQL to Databricks

Here are the two ways to migrate data from MySQL to Databricks:

- Method #1: Using Estuary Flow to Load Data from MySQL to Databricks

- Method #2: Using CSV Export/Import to Move Data from MySQL to Databricks

Method #1: Using Estuary Flow to Load Data from MySQL to Databricks

Estuary Flow is a real-time ETL solution with low-code automation capabilities. It offers a user-friendly interface and pre-built connectors that help effortlessly transfer data between the source and destination in real time.

Some of the key features of Estuary Flow are:

- Transformation Options: Estuary Flow allows you to perform real-time or batch transformations using SQL or TypeScript. You can use these transformations for ELT or the traditional ETL approach with Estuary Flow.

- Change Data Capture (CDC): Estuary Flow leverages Change Data Capture (CDC) for real-time data processing. This low-latency technique helps track and capture the changes made to the source data, ensuring these updates are reflected in the destinations immediately.

- Real-time Integration: The real-time data integration feature helps build pipelines with millisecond latency between data extraction and loading. This supports immediate insights and swift decision-making since you can analyze and act upon the data almost as soon as it is generated.

- No-code Configuration: Estuary Flow offers 200+ pre-built connectors that can seamlessly synchronize any source and destination with just a few clicks. Configuring these connectors does not require writing a single line of code.
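To make the CDC idea above more concrete, here is a minimal Python sketch of how a stream of change events might be applied, in order, to a destination table keyed by primary key. It is an illustration only and not Estuary Flow's implementation; the event shape (`op`, `key`, `row`) and the function name `apply_changes` are assumptions made for this example.

```python
from typing import Any, Dict, Iterable

# Hypothetical change-event shape used only for this illustration:
# {"op": "insert" | "update" | "delete", "key": <primary key>, "row": {...}}
ChangeEvent = Dict[str, Any]


def apply_changes(table: Dict[Any, dict], events: Iterable[ChangeEvent]) -> Dict[Any, dict]:
    """Apply change events, in order, to an in-memory table keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Inserts and updates both become an upsert on the destination.
            table[key] = event["row"]
        elif op == "delete":
            # Deleting a key that is already gone is treated as a no-op.
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return table


destination = {1: {"id": 1, "name": "Ada"}}
events = [
    {"op": "insert", "key": 2, "row": {"id": 2, "name": "Grace"}},
    {"op": "update", "key": 1, "row": {"id": 1, "name": "Ada L."}},
    {"op": "delete", "key": 2},
]
apply_changes(destination, events)
print(destination)
```

Because only the changed rows travel through the pipeline, the destination can stay current without re-exporting the whole table, which is the main contrast with the CSV method described later.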

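Before you start using Estuary Flow to transfer data from MySQL to Databricks, make sure you have an Estuary Flow account, access to your MySQL database, and a Databricks account with a SQL warehouse and a personal access token.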

Step 1: Configure MySQL as the Source

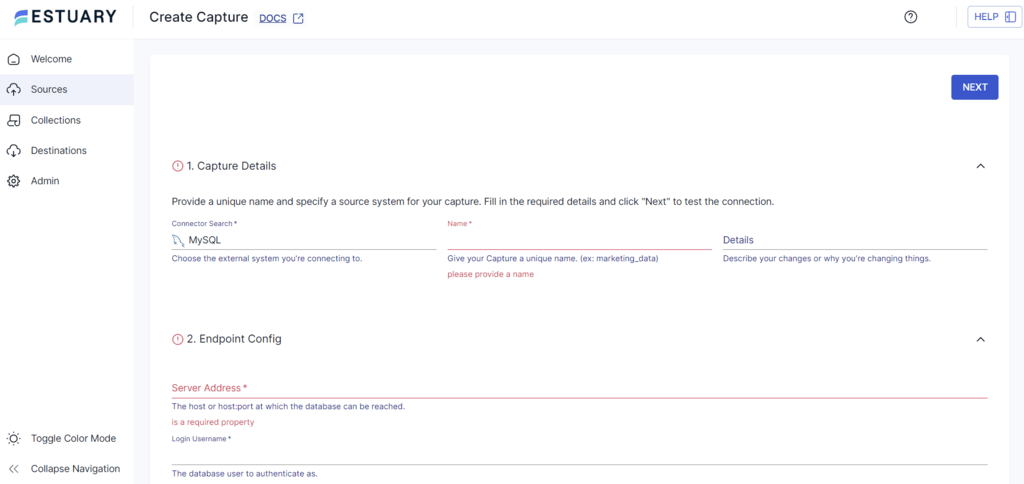

- Log in to your Estuary Flow account to access the dashboard.

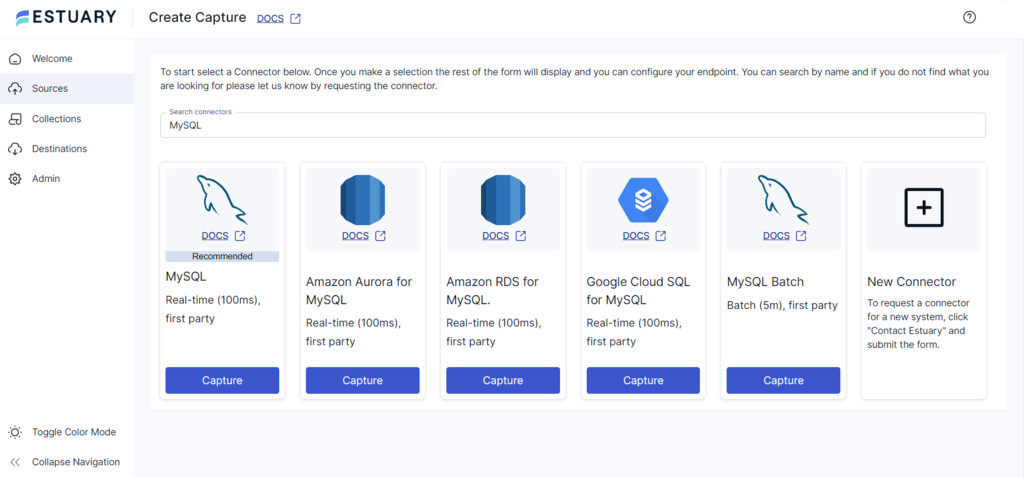

- Click the Sources option on the left-side pane of the dashboard.

- Click the + NEW CAPTURE button, and you will be redirected to the Create Capture page.

- On the Create Capture page, type MySQL in the Search connectors field. Click the Capture button of the real-time MySQL connector from the search results.

- On the MySQL connector configuration page, fill in the necessary details, such as Name, Server Address, Login Username, and Login Password.

- Finally, click NEXT on the top right corner and then SAVE AND PUBLISH to complete the source configuration.

This CDC connector will capture change events from your MySQL database via the Binary Log.
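Since the capture reads from the binary log, it can help to confirm that binary logging is enabled on the server before you publish. The Python sketch below checks two standard MySQL server variables, `log_bin` and `binlog_format`. Row-based logging is what CDC tools commonly expect, but treat that as an assumption and check the connector documentation for its exact requirements. The function name `check_binlog_settings` and the sample values are hypothetical.

```python
from typing import Dict, List


def check_binlog_settings(variables: Dict[str, str]) -> List[str]:
    """Return a list of problems found in MySQL server variables relevant to CDC."""
    problems = []
    if variables.get("log_bin", "").upper() != "ON":
        problems.append("log_bin is not ON: binary logging appears to be disabled")
    if variables.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog_format is not ROW: row-level changes are not logged")
    return problems


# Example values, as they might be returned by running this on the server:
#   SHOW VARIABLES WHERE Variable_name IN ('log_bin', 'binlog_format');
server_variables = {"log_bin": "ON", "binlog_format": "MIXED"}
for problem in check_binlog_settings(server_variables):
    print(problem)
```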

Step 2: Configure Databricks as the Destination

- To configure Databricks as the destination end of the integration pipeline, click MATERIALIZE COLLECTIONS in the pop-up window appearing after the successful capture.

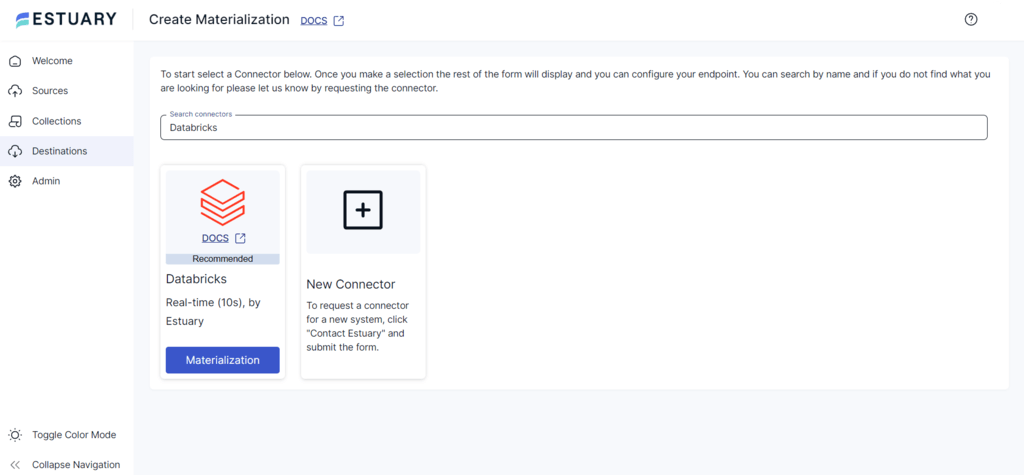

Alternatively, navigate to the dashboard and click Destinations > + NEW MATERIALIZATION. This will redirect you to the Create Materialization page.

- Type Databricks in the Search connectors field and click the connector’s Materialization button from the search results.

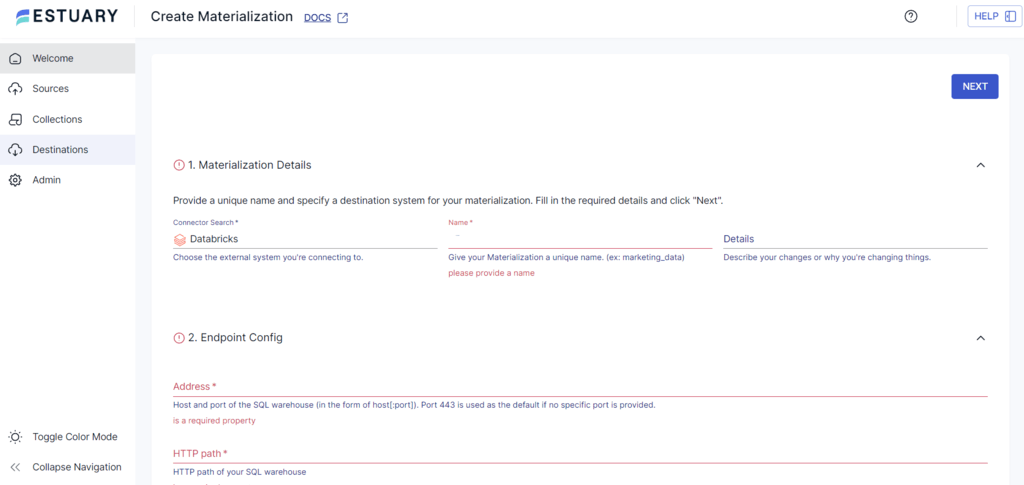

- On the Databricks connector configuration page, specify the necessary details, such as Name, Address, HTTP path, and Catalog Name. For authentication, specify the Personal Access Token details.

- Although collections added to your capture will automatically be included in your materialization, you can use the Source Collections section to manually link a capture to your materialization.

- Finally, click the NEXT > SAVE AND PUBLISH buttons to complete the destination configuration.

The Databricks connector will materialize Flow collections of your MySQL data into tables in your Databricks SQL warehouse. Initially, the connector will upload data changes to a Databricks Unity Catalog Volume. Following this, it transactionally applies the changes to the Databricks tables.

Ready to streamline your MySQL to Databricks data transfer? Sign up for your free Estuary account and start building today!

Method #2: Using CSV Export/Import to Move Data from MySQL to Databricks

This method involves extracting data from MySQL in CSV format and loading it into Databricks. Let’s look at the details of the steps involved in this method:

Step 1: Use MySQL Workbench to Extract MySQL Data as CSV Files

MySQL Workbench is a graphical tool for working with MySQL databases. Its Table Data Export Wizard lets you export tables through a graphical user interface (GUI) and supports the CSV and JSON formats. If you do not have MySQL Workbench installed, download it first.

Here are the steps to export MySQL data as CSV:

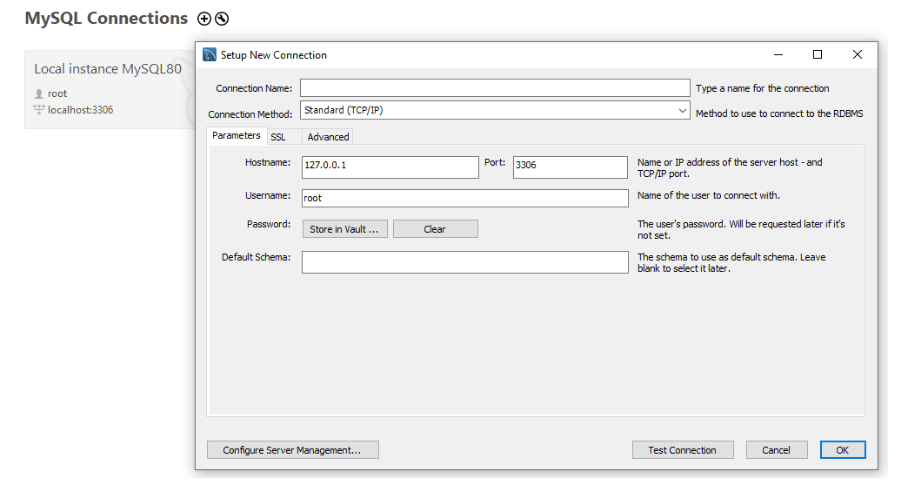

- Open MySQL Workbench. To connect to your MySQL database, click the + sign next to MySQL Connections on the home page.

- Provide the necessary details, such as Connection Name, Connection Method, Hostname, Port, Username, and Password.

- Click the Test Connection button to verify the details, then click OK to save the connection.

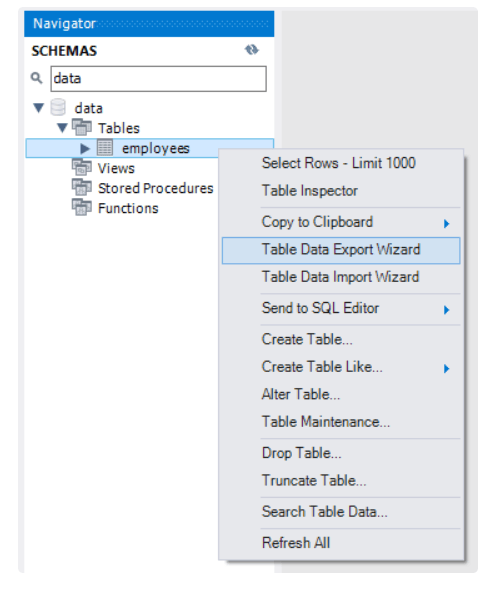

- To export MySQL data, right-click on the table containing the data you want to export and select the Table Data Export Wizard option.

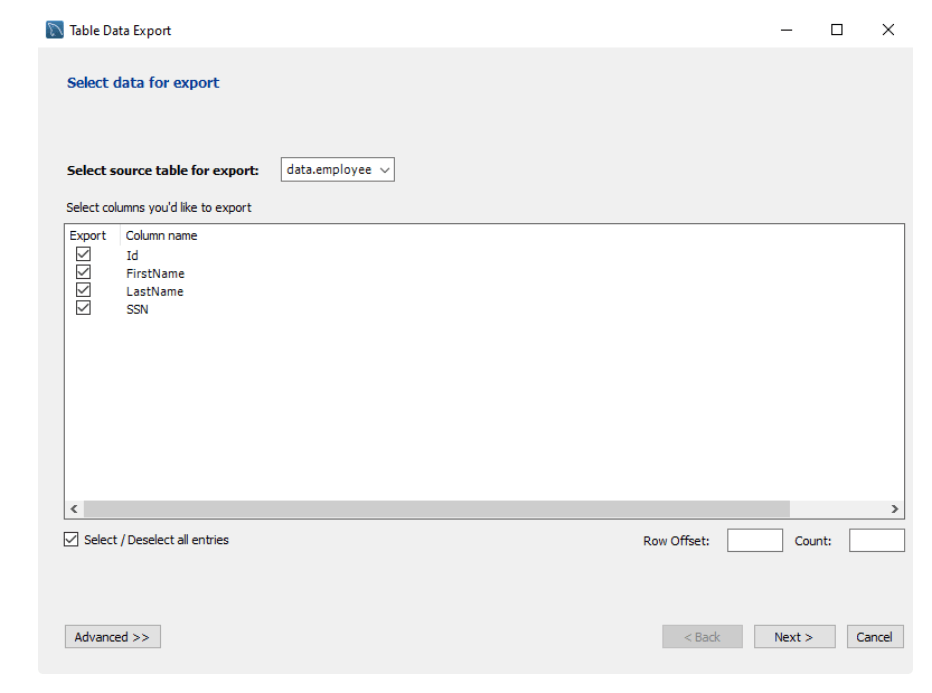

- Specify the data to be exported, including the Columns, Count (the number of rows to export), and Row Offset (the number of rows to skip from the top before the export starts).

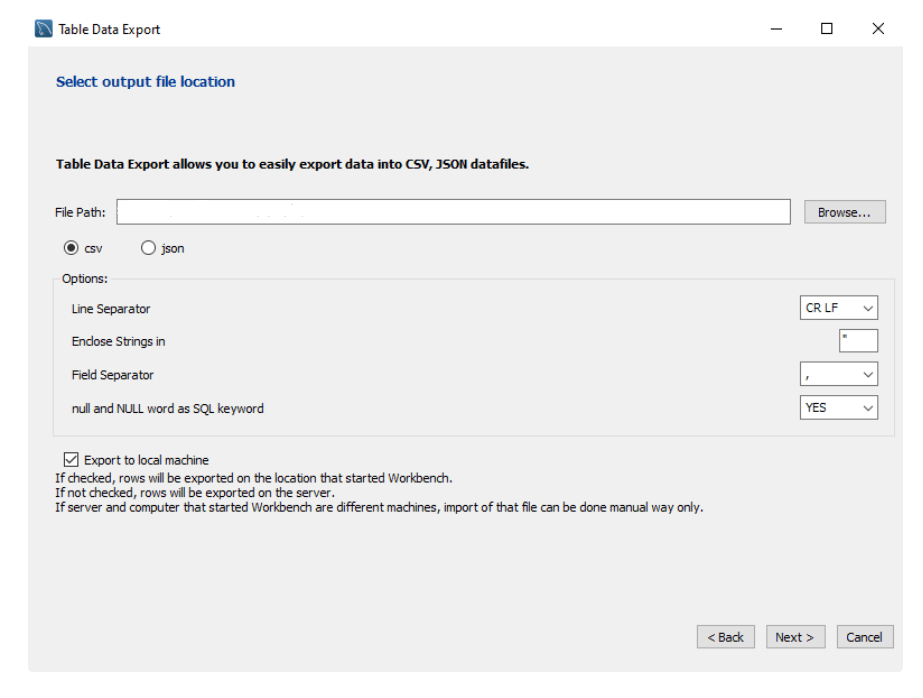

- Specify the File Path, the format (CSV or JSON), the Line Separator, the character used to enclose strings, and the Field Separator used to delimit values. You can also choose whether null and NULL are treated as SQL keywords. Then click the Next button to proceed.

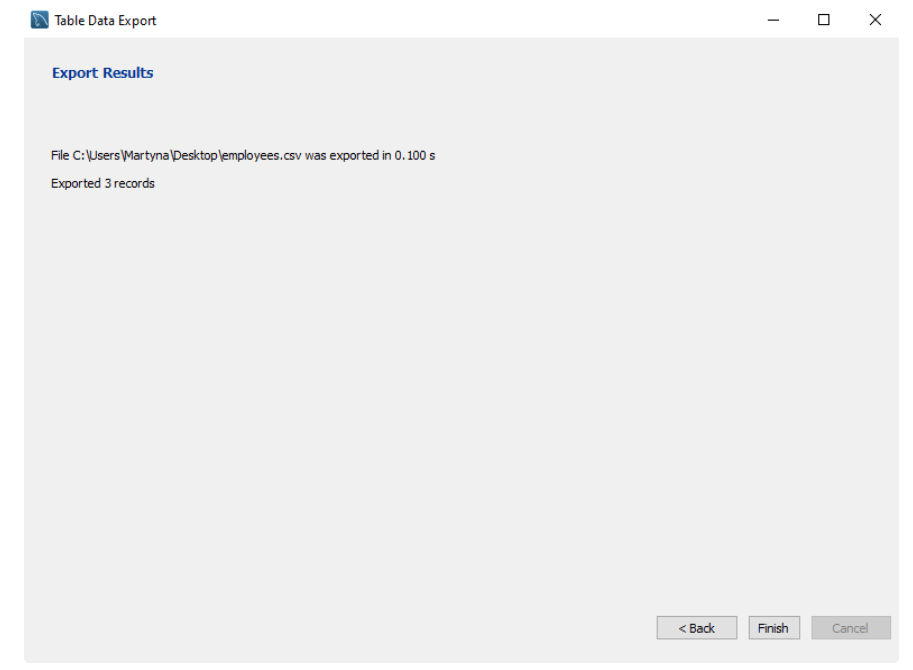

- Once the export is successful, you can see a message similar to the one below.

- You can find the CSV files in the mentioned path to process the data.
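If you would rather script the export than click through the wizard, the same steps can be sketched in Python. The function below works with any DB-API connection. To keep the example runnable without a MySQL server, it uses an in-memory SQLite database as a stand-in, so the `customers` table and its rows are made up. With MySQL you would pass a connection from a MySQL driver instead. The `count` and `row_offset` parameters mirror the wizard's Count and Row Offset fields.

```python
import csv
import os
import sqlite3
import tempfile


def export_table_to_csv(conn, table, path, order_by, count=None, row_offset=0):
    """Write rows of `table` to a CSV file with a header row; return rows written.

    `count` limits how many rows are exported and `row_offset` skips that many
    rows from the top first. Table and column names are interpolated into the
    SQL, so they must come from trusted code, never from user input.
    """
    if count is None and row_offset:
        raise ValueError("row_offset requires count in this sketch")
    query = f"SELECT * FROM {table} ORDER BY {order_by}"
    if count is not None:
        query += f" LIMIT {int(count)} OFFSET {int(row_offset)}"
    cursor = conn.cursor()
    cursor.execute(query)
    header = [column[0] for column in cursor.description]
    written = 0
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        while True:
            # Fetch in batches so large tables are not loaded into memory at once.
            rows = cursor.fetchmany(1000)
            if not rows:
                break
            writer.writerows(rows)  # NULL values are written as empty strings
            written += len(rows)
    return written


# Stand-in database with made-up data, used only to make the sketch runnable.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace"), (3, "Linus")],
)
csv_path = os.path.join(tempfile.gettempdir(), "customers.csv")
rows_written = export_table_to_csv(
    conn, "customers", csv_path, order_by="id", count=2, row_offset=1
)
print(rows_written, "rows written to", csv_path)
```

The explicit `ORDER BY` matters here: without it, the database is free to return rows in any order, so a row offset would not select a predictable slice.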

Step 2: Load CSV to Databricks

Follow the steps below to load a CSV file to Databricks:

- Log in to your Databricks account and navigate to the dashboard.

- In the Sidebar menu, click Data > Add Data > Create Table.

- Drag and drop the CSV files from your local directory and upload them.

- To access your data, click the Create Table with UI button.

- Now, you can read and modify the CSV data in Databricks.
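As an alternative to the upload UI, a notebook can read the uploaded file with PySpark and save it as a table. The sketch below is one possible way to do this, under the assumption that the CSV has a header row. The file path and table name in the usage comment are hypothetical placeholders that you would replace with your own.

```python
def load_csv_to_table(spark, csv_path, table_name):
    """Read a CSV file with a header row and save it as a table."""
    df = (
        spark.read
        .option("header", "true")       # first line holds the column names
        .option("inferSchema", "true")  # let Spark infer column types
        .csv(csv_path)
    )
    # "overwrite" replaces the table if it already exists.
    df.write.mode("overwrite").saveAsTable(table_name)
    return df


# In a Databricks notebook, `spark` is already defined. Both values below are
# hypothetical placeholders, not paths or names taken from this article:
# load_csv_to_table(spark, "/Volumes/main/default/uploads/customers.csv",
#                   "main.default.customers")
```

Schema inference is convenient for a first load, but it requires an extra pass over the file and can guess types incorrectly, so an explicit schema is usually safer for repeated loads.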

Challenges of Using CSV Export/Import to Move Data from MySQL to Databricks

While the CSV export/import process is effective for a MySQL-Databricks integration, there are some associated limitations, including:

- Time-consuming: Manually extracting MySQL data as CSV and then loading it into Databricks is a time-consuming process. This method can be slow, especially when dealing with large volumes of data and limited network bandwidth.

- Risk of Errors: The CSV export/import method requires manual intervention to execute and monitor various tasks. This can impact the data integrity and make it more error-prone, affecting the reliability of the transferred data.

- Lack of Automation: The CSV export/import method lacks automation capabilities, making it difficult to streamline repetitive data transformation tasks. Maintaining accuracy, consistency, and efficiency throughout the migration process can be difficult without automation.

- Lack of Real-Time Integration: Manual migration typically involves batch processing, with data transferred on an as-needed basis. There can be a significant delay between data updates in the source and destination systems, so the method lacks real-time integration capabilities.

Final Thoughts

By connecting Databricks to MySQL, you gain multiple benefits, including rapid data processing, advanced machine learning modeling, and the flexibility to adapt to varying data requirements. To migrate data from MySQL to Databricks, you can either use the manual CSV export/import method or Estuary Flow.

The manual CSV export/import method can be time-consuming and require extensive technical expertise. Consider using Estuary Flow to help overcome these limitations. With ready-to-use connectors and an intuitive interface, Estuary Flow supports effortless data migration from source to destination with negligible latencies.

Would you like to transfer data between different data sources and destinations? To get started, log in or register for free here.

Frequently Asked Questions

- What are the top three ETL tools for transferring data from MySQL to Databricks?

The top three ETL tools to transfer data from MySQL to Databricks include Estuary Flow, Stitch, and Fivetran. These tools help extract data from MySQL, transform it, and load it into Databricks to enhance data management capabilities.

- Are there any best practices for optimizing Databricks data migration performance?

Best practices include parallelizing data transfer and transformation tasks, optimizing network bandwidth usage, caching intermediate results, and leveraging Databricks’ distributed processing capabilities for efficient data processing.

- What are the post-migration steps to ensure a successful migration to Databricks?

Post-migration steps include verifying data completeness and correctness within Databricks by comparing it with the source system. Ensure data pipelines, analytical tasks, and machine learning algorithms function properly. Conduct thorough testing of data processing and analytical workflows to validate their accuracy and reliability.
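One simple way to compare the source and destination, sketched below in Python, is to compute a row count and an order-independent fingerprint on each side and check that they match. This is an illustrative approach rather than a built-in feature of either system, and the function name `table_fingerprint` is hypothetical. It compares the text form of each value, so it assumes both systems render values identically (for example, `1` versus `1.0` would count as a mismatch), and it treats NULL and the empty string as equal.

```python
import hashlib
from typing import Iterable, Sequence, Tuple


def table_fingerprint(rows: Iterable[Sequence]) -> Tuple[int, str]:
    """Return (row_count, order-independent digest) for an iterable of rows."""
    count = 0
    combined = 0
    for row in rows:
        # Hash a canonical text form of each row, with a unit separator
        # between values so that ("ab", "c") and ("a", "bc") differ.
        text = "\x1f".join("" if value is None else str(value) for value in row)
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        # Sum the hashes (mod 2**256) so that row order does not matter and
        # duplicate rows do not cancel each other out.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, f"{combined:064x}"


# Made-up rows standing in for query results from each system.
source_rows = [(1, "Ada"), (2, "Grace")]
destination_rows = [(2, "Grace"), (1, "Ada")]
print(table_fingerprint(source_rows) == table_fingerprint(destination_rows))
```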

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
