How Does Query Processing Happen in Snowflake

Architecture, processing, and best practices... Looking to become a better data professional by understanding Snowflake query processing? Start here.

Overview of Snowflake and query processing

If you’re asking yourself, “How does query processing happen in Snowflake?” You’ve come to the right place.

Let’s start with a summary of Snowflake: It’s a self-managed, cloud-based tool used for processing, warehousing & analyzing large amounts of data. Snowflake follows ANSI SQL standards and has a hybrid architecture (more on this later) to handle query processing. They self-identify as a “data platform as a self-managed service.” Flexibility and ease of use are their most important offerings to data teams. We’ll dive further into exactly how they support those goals through their approach to query processing.

Importance of understanding query processing in Snowflake

Understanding query processing is important for a number of reasons. Fundamentally, you’ll become a better data professional for it. It offers greater visibility into the entire lifecycle of the data you’re working with. This will help you understand where to go next in the midst of troubleshooting performance issues.

If you’re comfortable with SQL fundamentals and want to upskill, this is your next step. You’ll also be able to make more informed decisions about schema and database design. These topics are the bread and butter of data, and essential to know for professional development.

Architecture of Snowflake

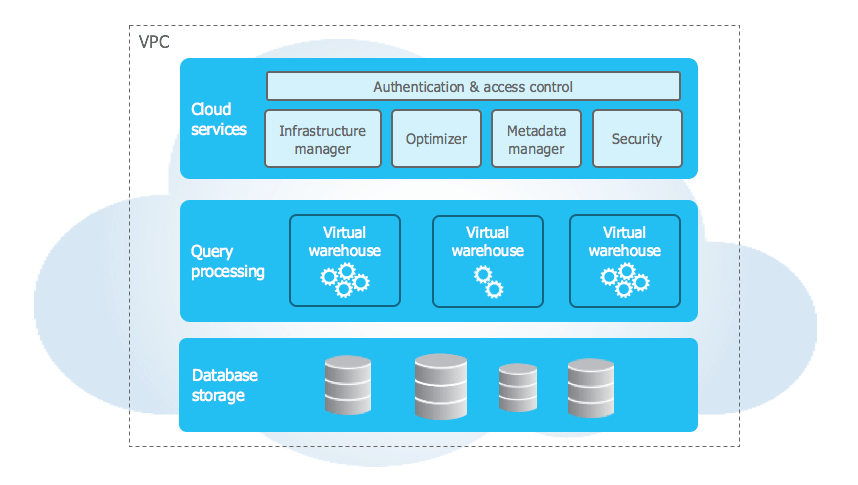

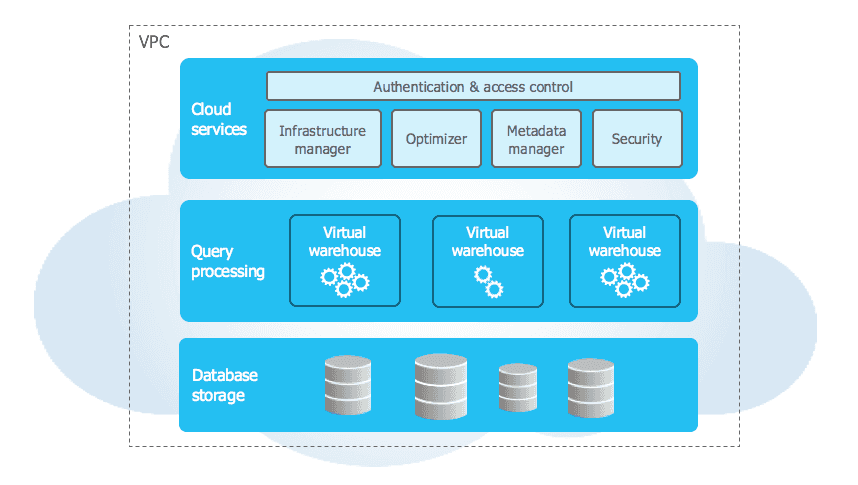

Snowflake’s architecture is a unique hybrid of traditional shared-disk and shared-nothing architecture. It resembles shared-disk because all persisted data is stored in a central repository that all compute nodes can access. It resembles shared-nothing because it processes queries in a way that each node within a particular cluster stores a portion of the data locally. Each update request is handled by a single node, which eliminates contention between them.

See the image above, courtesy of Snowflake. At a more granular level, it consists of key layers: database storage, query processing, and cloud services. When data is persisted into Snowflake, it reorganizes it at the database storage level in a way that is optimized for complex query processing. Queries are then processed in virtual warehouses (i.e compute nodes). These compute cluster sizes are defined by the user when they are initially created, but are capable of auto-scaling, and auto-resuming. The cloud services layer is responsible for handling authentication, access control, query parsing and optimization, and more.

Multi-cluster shared data architecture

A multi-cluster architecture means that Snowflake distributes compute resources across multiple clusters. This enables users to concurrently process and query data across different resources. This is especially useful during peak business hours, and handling high user volumes. For example, this would allow you to have Airflow orchestrating tables updates into Snowflake, analysts querying data, and a team of Engineers validating data in a dev environment simultaneously with no performance issues. This means you can allocate dedicated compute resources for each of these scenarios, making consistent and predictable performance achievable. The rest of this article will dive a little further into exactly how that is done.

Separation of compute and storage

Snowflake is able to offer this to users because their compute and storage are separate. In typical warehousing systems, processing power and storage capacity are tightly coupled. There are a number of benefits from having the two separate.

First, this allows you to spin up multiple virtual warehouses at a time. For example, your analytics team would be querying data using one warehouse, and your engineering team would be querying data using a separate warehouse. You can adjust these compute resources separately to best complement various workload requirements. This flexibility is also cost-effective because you’re only paying for the resources you need.

Snowflake's virtual warehouses

Virtual warehouses are an important part of Snowflake’s architecture. A virtual warehouse is a compute resource that allows you to work with the data you want.

Snowflake offers different sizes of warehouses that you can choose from based on the workload requirements. The sizes correspond to the amount of CPU and memory you need in each particular warehouse. For example, your analytics and engineering teams likely have different requirements, and therefore need different virtual warehouses to be assigned to them. You can scale each warehouse up and down as needed, and enable auto-suspend and auto-resume policies as mentioned earlier. This ensures each warehouse is paused when not in use, and resumes when required without manual intervention.

As your organization continues to scale, you can also consider enabling Snowflake’s Multi-Cluster Warehouse (MCW) feature. This allows you to group multiple virtual warehouses together under a single name, which can be easier to manage at scale.

Query processing in Snowflake

Steps in query processing

As discussed earlier, Snowflake’s query processing layer is separate from the disk storage layer. At a high level, here are the steps of query processing in Snowflake:

- A SQL query is submitted by a user either in the Snowflake UI (via Worksheets), a third-party tool with appropriate user access permissions (dbt, Azure, PowerBI, etc), or with SnowSQL.

- Snowflake then validates the query’s syntax. If it passes without error, it’s converted into a different representation that is further processed by Snowflake downstream.

- The query is then optimized, taking into account the table size, available system resources, data distribution and complexity, among other considerations.

- Snowflake retrieves metadata about the table schema, and is then used to further optimize the given query.

- Snowflake then considers what compute resources are necessary (and what is available) to execute the query. These virtual warehouses are autoscaled for this reason.

- The query is then executed against the data in question, and made available to the requesting user.

- Snowflake then collects data on the query performance and metrics. This information helps to optimize following queries, and identify potential bottleneck performance issues.

- The query & its output are stored (i.e. cached), which is accessible to users if they need to rerun it.

Parsing and validating queries

Parsing and validating queries is an important step of how Snowflake handles data processing.

Snowflake’s query parser verifies the given query adheres to ANSI SQL standard, checks availability of the database objects and resources in question, searches for any defined constraints, and more.

If no errors occur, it turns that given SQL query into a data structure called an abstract syntax tree (AST). Essentially, it becomes a version that’s functionally equivalent to what the user inputted, but is better optimized for Snowflake to use. If errors are found, the parser outputs a detailed error code for the user to resolve the issue manually before trying again.

Query optimization

One of the main goals of the Snowflake parser is to generate cost- and performance-efficient execution plans for queries. Once the query is parsed and validated, the parser generates a number of possible execution plans and chooses the most cost-efficient one.

Snowflake offers a query-optimizing tool for enterprise accounts, which continuously monitors query performance, and adjusts execution plans based on their runtime results. It also offers tools for enterprise-level clients like search optimization and acceleration services that can aid in this process.

Execution and caching

Snowflake caches queries, which allows previously-run queries to execute without needing to re-computing the data. If you’re running a particular query often, this is helpful for performance and cost.

When a query is executed, the resulting cache is in-memory, meaning that it lives in the virtual warehouse it was executed in. Before running any given query, Snowflake checks to see if a fingerprint (i.e. a hash value) already exists for said query. If a match exists, Snowflake will return the results from cache. If it does not exist, a new fingerprint is created and stored. When the cache limit is met, the oldest fingerprints are dropped. This feature assists users with cost-management because it avoids reusing resources where not required.

Optimizing query performance in Snowflake

Best practices for designing tables and schemas

Following best practices around tables and schemas helps support data integrity, and performance. Here are some tips to consider while designing your own resources:

Using appropriate data types and file formats

When you create a table or view in Snowflake, you must assign a particular data type for each column. Snowflake supports a wide variety of types including boolean, integer, timestamp, varchar, etc. Varchar is known as the “catch-all” type because it can hold numbers, letters, and special characters. Although it may be tempting to assign each column to this type because it’s easier, it may require more storage, impact your performance, or be inappropriate in the context of stakeholder needs or downstream application dependencies.

Optimizing query syntax

Beyond using correct data and file types, there are a couple of different ways to further optimize queries. Arguably, designing your Snowflake resources in a way that expects increased data and complexity is the most important.

You should consider how often you expect the table to be queried against, how the table is updated and at what interval, retention policies (i.e. Time Travel and Fail Safe featuring), and more. If you don’t consider the future while designing these resources, problems, and needs are often unacknowledged until an issue appears in production, or your stakeholder voices concern. One of the best ways to have optimally performing queries is to design them with long-term scalability in mind.

Further, being intentional about what tools and approaches you take with loading data is important. No amount of monitoring, or refactoring SQL can fix the problems incurred by using the wrong tool for the job.

There are a number of ways to load data into Snowflake, and each of them depends on your use case. For example, if you have a complex, high-volume table that needs to be accessible for near real-time analytics, batch loading this data may not be the most suitable option. Instead, it may be wise to consider streaming the data with Snowpipe, a managed service, or a combination of open-source tooling.

You can also improve query processing time with complex or large data by implementing compression on the data where appropriate. For example, say you have a column with high cardinality, meaning its values are unique. This could be something like a shopping cart id or user id. One way you could compress this would be through columnar compression. Applying this to data where it makes sense would significantly improve query performance, and help you better understand the data itself.

Using appropriate virtual warehouses

Choosing the correct virtual warehouse size for your use case depends on a number of factors we’ve discussed such as volume of data, performance requirements, and overall complexity. Warehouse sizes are abstracted as t-shirt sizes, but just mean how many compute nodes are available. The most important part of choosing a warehouse size comes down to understanding your requirements, and continuously monitoring how it performs at a given scale. If you’re experiencing any performance issues, scaling this horizontally is typically the fastest way to resolve them.

Monitoring and troubleshooting queries

Query history and profile

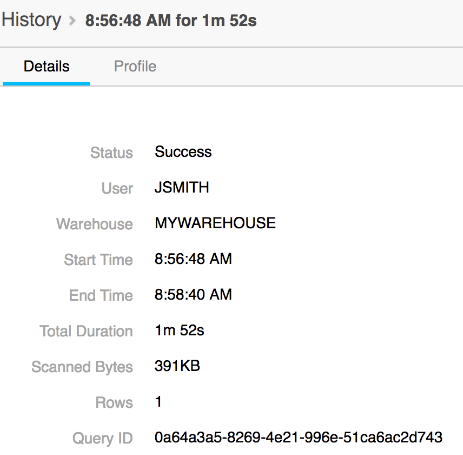

Snowflake stores information about the queries you run such as start and end times, and how much compute resource was required to execute them. This data is accessible to users and is important for performance monitoring schemas and warehouses.

Snowflake offers a tool called the Query Profile, which helps you understand what’s happening “underneath the hood.” It helps you identify mistakes in your SQL queries, but also any compute bottlenecks that may be affecting performance downstream. You can easily access this tool on the Snowflake UI by clicking on any given query ID. In the photo below courtesy of Snowflake, you’ll see the details tab as mentioned.

Query performance metrics

Query compilation time, CPU and memory usage, queue time, concurrency, and volume of data processed are some examples of query performance metrics that Snowflake tracks.

Identifying and resolving query issues

Identifying and resolving performance bottlenecks can be simplified with continued observation over time. For example, let’s say you have a SQL query with multiple sub-joins. You’re querying against multiple tables that are small right now, but are expected to grow over time. You can observe the query compilation time over time to help you identify what, if anything, needs to be changed about your SQL query or resource utilization so you can maintain performance standards.

Let’s say that over the course of 6 months, you’ve observed performance metrics for this table have significantly worsened. You notice the volume of data has increased and will continue to do so. You’re noticing that some of your other queries have been stuck in the queue for execution longer than normal, which has impacted time of delivery for downstream analytics.

As mentioned, the quickest approach would be to increase the size of your warehouse, which would take just a few minutes. The long-term approach would be to consider refactoring your SQL query and look into other areas of performance that could be addressed by using the tools Snowflake already offers.

Conclusion

Snowflake is a self-managed data warehousing tool comprised of a hybrid, three-tier architecture. It has a multi-clustered infrastructure that offers customization and cost-effectiveness for users. They also offer a number of resources to help data professionals understand query performance to better resolve performance bottlenecks.

Importance of query processing in Snowflake for data professionals

Understanding how query processing happens in Snowflake is important to understanding the end-to-end lifecycle of the data you’re working with, develop yourself professionally, and best support stakeholders.

Future of Snowflake's query processing capabilities

It’s difficult to predict exactly what Snowflake will do to continue improving query processing abilities for their users. Simply being speculative, they could continue allocating resources towards their query parser to better adjust to changing data and their respective patterns. Snowflake could further develop their analytic offerings to help clients better understand, or possibly predict potential issues before they occur.

Want to learn more about Snowflake? Check out these other articles…

Author

Popular Articles