In the era of big data, managing and analyzing data is crucial for any organization. This article explores two methods of loading data from Amazon Simple Queue Service (SQS) to Amazon Redshift using Amazon’s Lambda Function and a no-code tool like Estuary Flow. By the end of this article, you will have a thorough understanding of how to leverage the power of SQS, Redshift, and integration tools like Estuary Flow.

What is Amazon SQS?

Amazon Simple Queue Service is a fully managed, scalable message queuing service provided by Amazon. It enables you to store and retrieve messages of any size between various software components without additional services. The service also ensures that the messages are delivered in a fault-tolerant way; even if a component fails or becomes unavailable, the messages are not lost.

Amazon SQS provides two distinct types of queues—Standard Queue and FIFO Queue.

The Standard Queue service is designed to guarantee at least once delivery; each message will be delivered at least once. On the other hand, FIFO Queue provides exactly-once processing. This ensures that each message is processed a single time, eliminating the risks of duplicates and maintaining the order in which they are sent.

Now, let’s look at some of the key features of Amazon SQS.

- Security: Amazon SQS lets you securely send sensitive data between applications and manage your encryption tool easily with AWS Keys Management. It also helps protect your data with encryption, authentication, and access control policies.

- Scalability: It provides excellent scalability by processing all buffered messages simultaneously. SQS offers unlimited message queuing and can scale independently to handle unexpected traffic spikes.

- Reliability: Amazon SQS uses a message-locking mechanism during the processing and ensures that messages are delivered correctly with no duplicates, especially for FIFO queues.

What is Redshift?

Redshift is a highly efficient, cloud-based data warehousing service offered by Amazon.

Its most notable feature is its exceptional scalability, allowing you to start with just a few hundred gigabytes of data and scale it up to a petabyte or more. Redshift also employs an advanced column-based storage architecture, which reduces the overall data scan size during queries, leading to faster read times and optimized storage and compression. The robust security features, such as encryption and network isolation with Amazon VPC (Virtual Private Cloud) and AWS Identity and Access Management (IAM) make Redshift a compelling choice for data storage.

Let’s look at some key features of Amazon Redshift.

- Massively Parallel Processing (MPP): Redshift uses MPP architecture to distribute and query data across several nodes. Each node processes the data subset separately and in parallel, significantly boosting query performance and scalability. This architecture ensures that query speed remains consistent even as the volume of data increases, making it ideal for large-scale data analysis.

- Advanced Compression Techniques: Redshift utilizes advanced compression algorithms that reduce storage requirements and improve query performance. The data warehouse automatically samples your data and selects the most appropriate compression scheme. This helps in reducing the amount of I/O needed for data processing.

- Advanced Query Accelerator (AQUA): Redshift uses a high-speed cache layer that accelerates data queries. AQUA uses an innovative hardware-accelerated cache to deliver up to 10 times faster query performance than other enterprise warehouses, making it the top choice for data-intensive analytics.

Loading Data from Amazon SQS to Redshift

Method 1: Load Data from Amazon SQS to Redshift using Manual Method

Transferring data from Amazon SQS to Redshift involves a multi-step process that uses other AWS services, such as Amazon S3 and AWS Lambda Functions. Here are the detailed steps.

Prerequisites

- Redshift Cluster

- Amazon SQS

- AWS Lambda Function

- IAM Roles

- Amazon S3

Step 1: Set Up the AWS Lambda Function

- Create a Lambda Function: Create an AWS Lambda function to extract messages from your SQS queue.

- Permissions and Access Management: Ensure the necessary IAM roles and permissions are set up for the Lambda function to access SQS and S3 storage and for Redshift to access S3 storage.

- Scripting the function: Write a script within this Lambda function (you can use languages like Python, Java, etc.) to poll the messages and process the data.

Step 2: Store Data in Amazon S3

- Upload to Amazon S3 Storage: Use the created Lambda function to upload the data to the Amazon S3 bucket. Ensure that the data is in a format compatible with Redshift (like CSV, JSON, Parquet, etc.)

Note: You might need to implement additional scripting in the Lambda function to facilitate this transfer.

Step 3: Load Data into Redshift

- Prepare Redshift Table: Prepare a table in your Redshift cluster that matches the structure of the data you import from S3. This involves defining the table schema to align with the data format stored in S3.

- Redshift COPY Command: Use the COPY command in Redshift to load data from the S3 bucket into the Redshift table. This command should specify the path to the S3 bucket and any other options, such as data format, error handling, etc.

- Data Validation: After loading the data, validate the data in Redshift to ensure the load was successful and the data is accurate.

These steps can help to load data from Amazon SQS to Redshift. However, there are several limitations associated with this method, including:

- Complexity: Implementing a data pipeline using AWS services like Lambda Functions, SQS, S3, and Redshift requires a deep understanding of each service and how they interact. This complexity can be challenging for someone without extensive knowledge of the AWS ecosystem.

- Maintenance: You must conduct regular maintenance to ensure the data pipelines function correctly, including script updates, handling service changes, and monitoring data flow.

- Scalability Concerns: AWS services are generally scalable, but manual scaling of each component in the pipeline can be challenging. Handling large volumes of data can make optimizing Lambda function timeouts or memory difficult.

- Error Handling: Manual pipelines often require custom error-handling mechanisms. Ensuring robust error detections and recovery processes in multi-step pipelines— transferring data from SQS to Redshift can be complex and time-consuming.

- Cost Management: The involvement of multiple AWS services can make it challenging to keep track of costs. Unexpected increases in data volume or frequency can lead to higher costs. So, careful monitoring is necessary to avoid expenses escalating quickly.

- Data Consistency and Integrity: Ensuring data consistency and integrity throughout the pipeline can be a significant challenge, especially while dealing with large volumes of data or complex transformations. Any process failure or data format discrepancies can lead to data integrity issues.

Method 2: Migrate Amazon SQS to Redshift Using No Code Pipeline Tool

You can easily load data from Amazon SQS to Redshift using user-friendly no-code ETL tools, even without technical knowledge. One of the best tools to use is Estuary Flow, which streamlines data replication from Amazon SQS to Redshift. Here is a step-by-step guide.

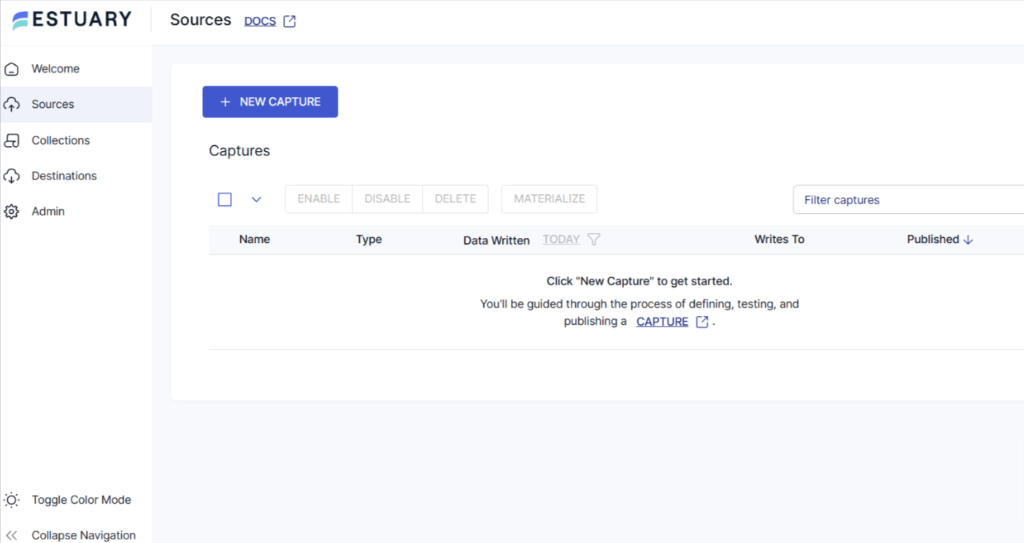

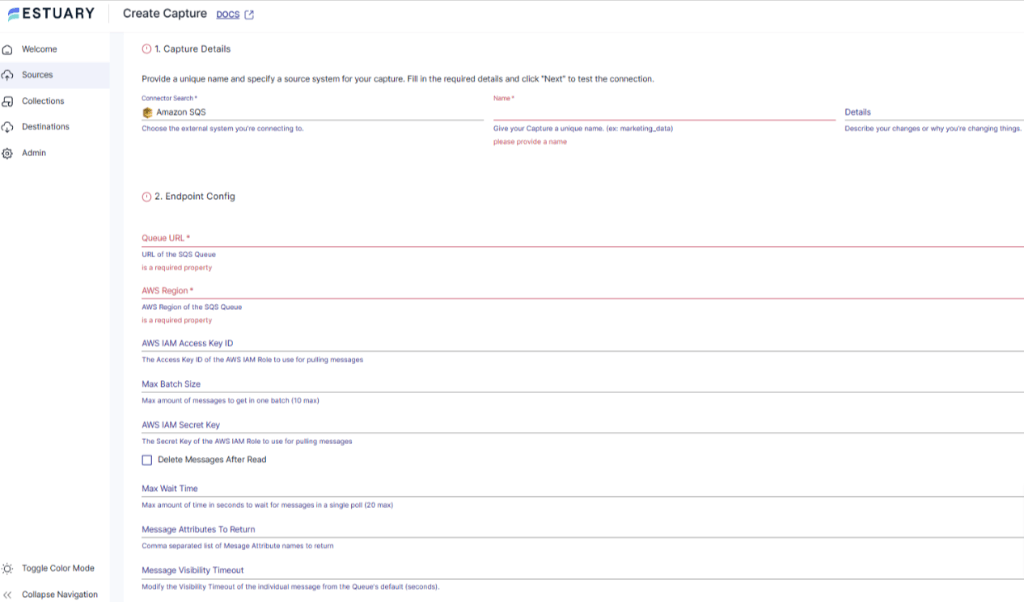

Step 1: Configure Amazon SQS as Source

- Create an account or log in to your existing Estuary Flow account.

- Click on the Sources tab on the left navigation pane.

- Click the + NEW CAPTURE option.

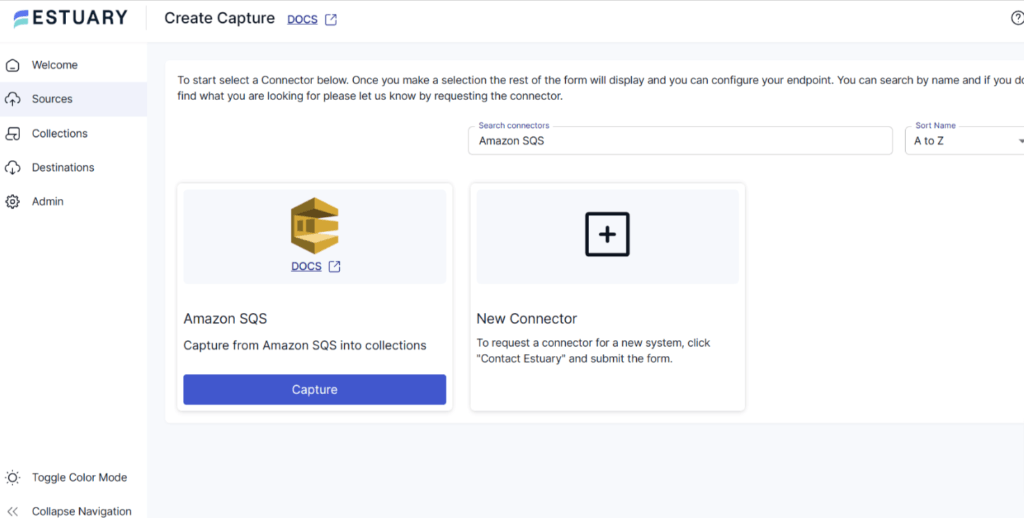

- Next, search for Amazon SQS in the search field and click the Capture button to begin configuring it as the data source.

- On the Create Capture page, enter the specified details like Name, Queue URL, AWS Region, AWS IAM Access Key ID, Message Visibility Timeout, and more.

- After filling in the required fields, click on NEXT > SAVE AND PUBLISH. This will capture data from Amazon SQS into Flow collections.

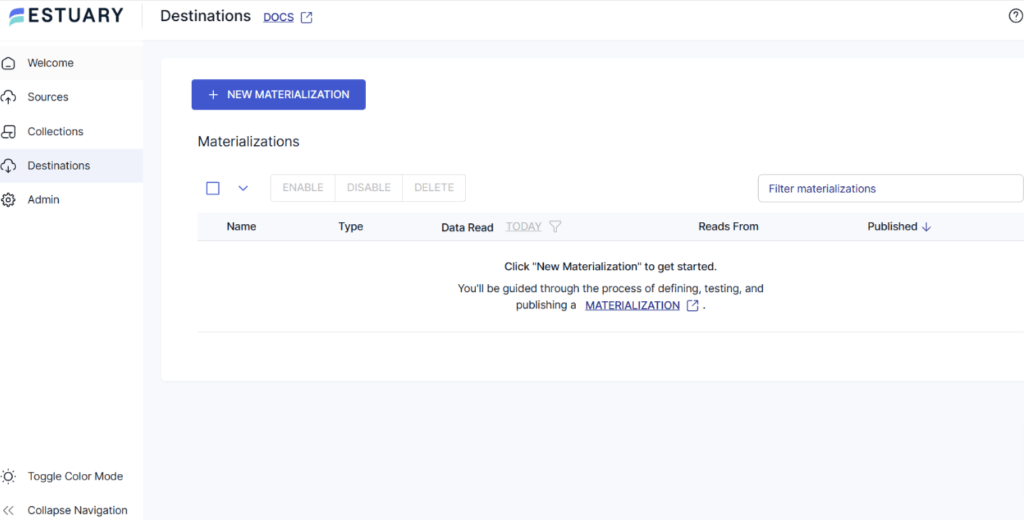

Step 2: Configure Redshift as the Destination

- Once the source is set, click the Destinations option on the dashboard.

- Click on the + NEW MATERIALIZATION button on the Destinations page.

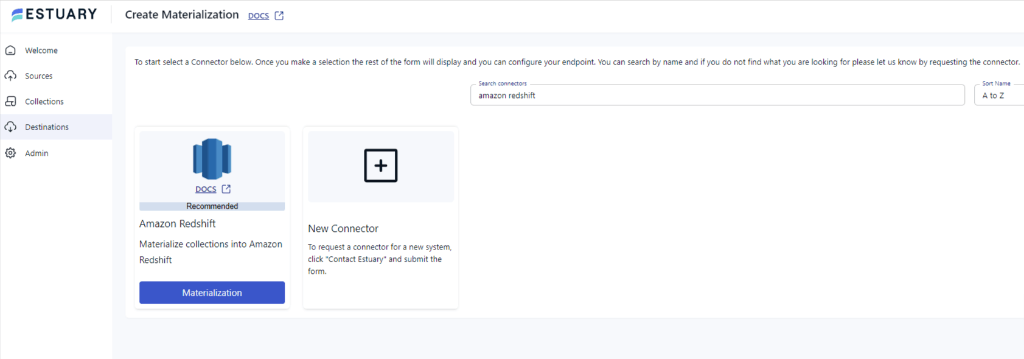

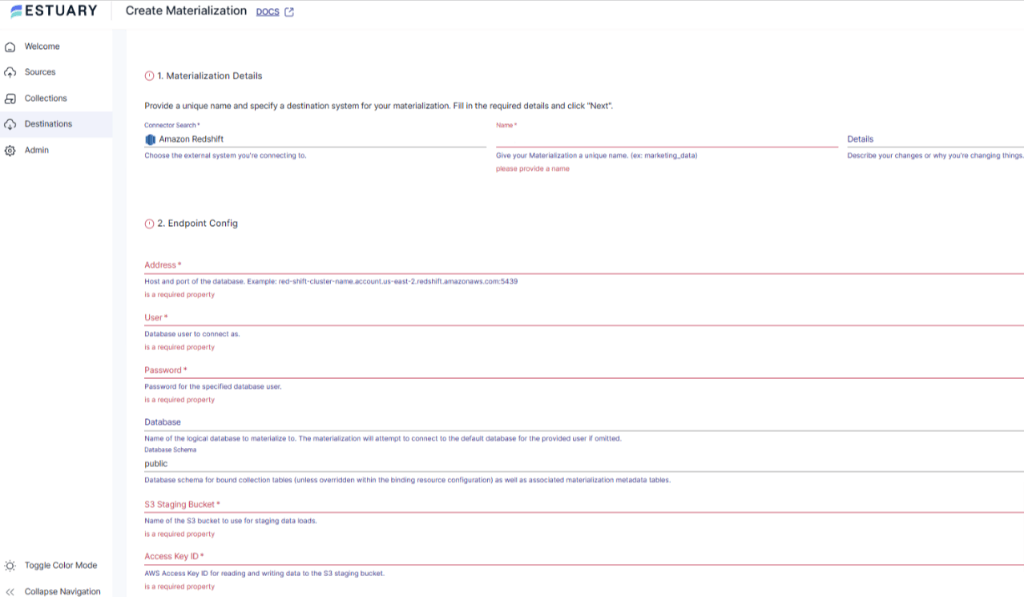

- Type Amazon Redshift in the Search connectors box and click on the Materialization button of the connector when it appears in the search results.

- On the Create Materialization page, enter the details like Address, User, Password, S3 Staging Bucket, Access Key ID, and Bucket Path.

- If your collection of data from Amazon SQS isn’t filled in automatically, you can add it manually using the Link Capture button in the Source Collections section.

With the source and destination configured, Estuary will now begin loading data from Amazon SQS to Redshift.

Benefits of Using Estuary Flow

Here are some of the benefits of using Estuary Flow:

- Zero Technical Expertise Required: No-code tools like Estuary Flow are designed to be user-friendly and do not require extensive technical expertise. You can execute the entire data migration process in just a few clicks, making the entire process streamlined.

- Real-time data processing with CDC: Estuary flow leverages Change Data Capture(CDC) for real-time data processing. This helps maintain data integrity and reduces latency.

- Automated Workflows: ETL tools like Estuary Flow automate the data migration process, reducing manual work for data ingestion. Such automation reduces the risk of errors and creates robust and complex workflows.

- Efficient Data Transformations: It provides data cleaning capabilities, which allow you to clean, filter, and validate data during the transformation step.

- Protection Against Failures: Estuary Flow ensures resilience against failures by storing captured data in cloud-backed data collections. This process can be understood as a backup measure, offering exactly one-semantic and distributed storage.

Conclusion

When it comes to loading data from SQS to Redshift, businesses generally weigh between traditional manual methods or modern solutions like Estuary Flow. The first method involves using the Lambda function to load data from SQS to the S3 bucket and then migrate it to Redshift using the built-in COPY command. The second method involves the use of Estuary Flow—an automated ETL tool that automates the entire workflow in just a few clicks.

While using the manual method requires technical expertise and is time-consuming, it can be used effectively for simpler workflows. However, when it comes to more complex multi-step workflows, it may not be the best option. Both methods have their pros and cons, so pick the one that suits your needs.

Estuary Flow provides an extensive and growing list of connectors, robust functionalities, and a user-friendly interface. Sign up today to simplify and automate the loading of data from Amazon SQS to Redshift.

Frequently Asked Questions (FAQs)

Can I use Amazon SQS with other AWS services?

Yes, you can use Amazon SQS with other AWS services, such as Amazon EC2, Amazon Elastic Container Service (ECS), and AWS Lambda, enhancing application flexibility. Amazon SQS also works with Amazon DynamoDB and Amazon Simple Storage Service (S3).

Is Amazon SQS reliable enough to store my data?

Yes, Amazon SQS is primarily a message queuing service that ensures high availability by storing messages across multiple redundant Availability Zones (AZs) within a single AWS region. This ensures that no single computer, network, or AZ failure can make messages inaccessible. However, it isn’t designed for long-term data storage, with a maximum storage time of 14 days.

Author

Popular Articles