The phrase “extract, transform, load” explains most of the philosophy behind ETL: it picks up data from one system, transforms it, and then loads it into another system. In more depth, ETL pipelines are a series of processes that help a business collect data from different sources and move it to destination systems, usually a data storage system such as a data warehouse, while transforming it along the way.

For professionals who count on business intelligence and data-driven insights, these pipelines are the make-or-break element, not to mention essential for data scientists and analysts.

If ETL pipelines are new ground for you, you have landed in the right spot. This article covers ETL pipelines, the processes involved, their advantages, and some real-life use cases.

By the time you finish reading, you will have a full grasp of what ETL pipelines do. Not only that, you’ll have gained insights into the difference ETL can make to your business and tools that will help you stay ahead of the competition.

What Are ETL Pipelines?

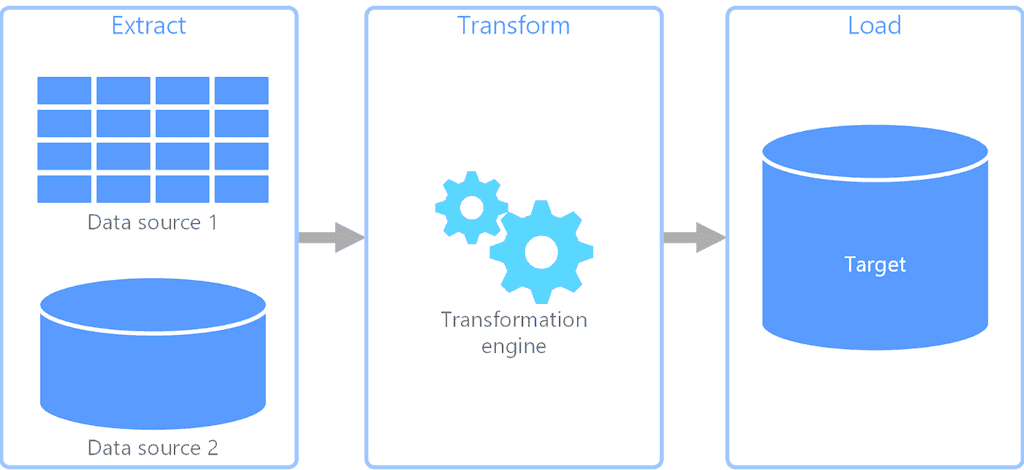

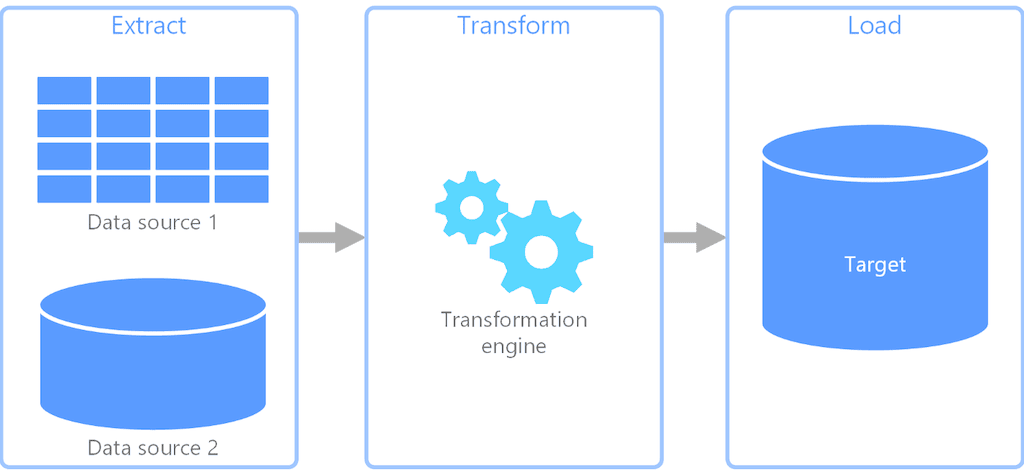

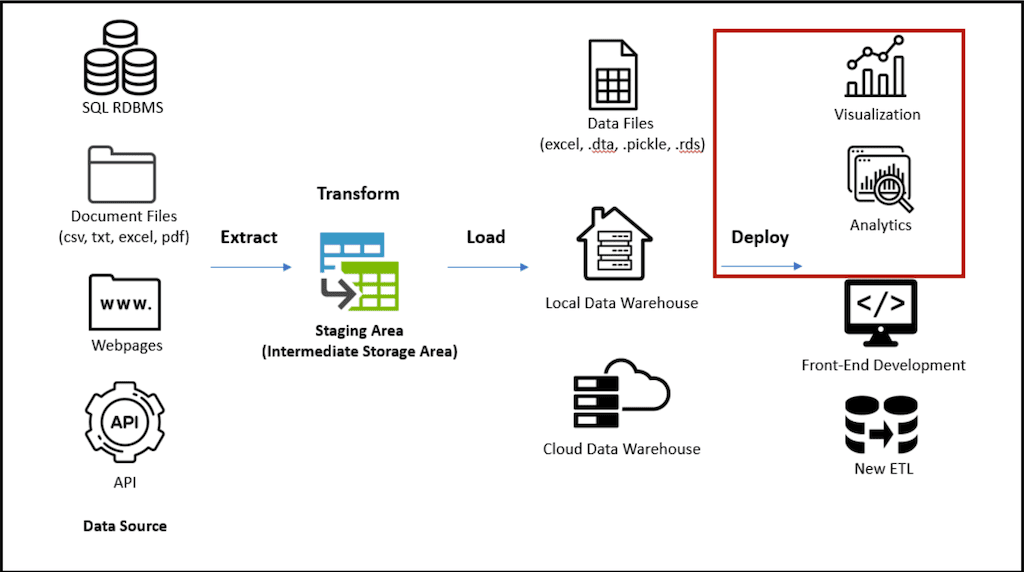

ETL stands for extract, transform, and load. The term refers to the set of processes that moves data from a source to a destination system.

Moving data around a company is extremely important. A modern enterprise typically has many disparate sources of data, as well as various goals that require data to be consumed in different ways and by different systems.

For example, you might have security measures, marketing and sales goals, financial optimization needs, and organization-level decisions to make. All of these things require data, in many places and in many forms.

How do you make sure data is in the right place in the right format?

That’s where data pipelines, including ETL pipelines, come in.

They allow businesses to move data between systems in an automated fashion, with data transformations included along the way.

Now, let’s take a look at the three steps of ETL in depth.

Extract

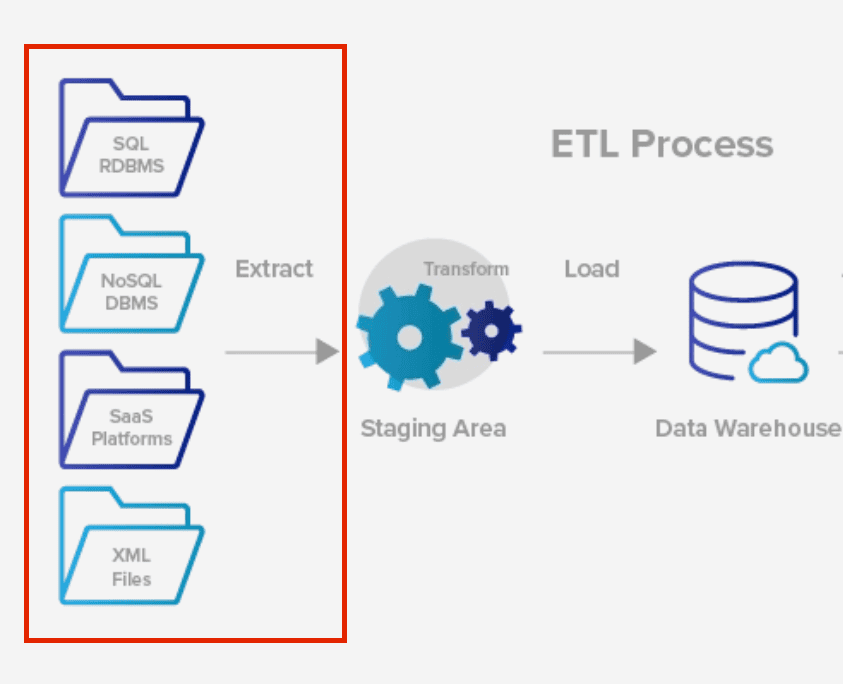

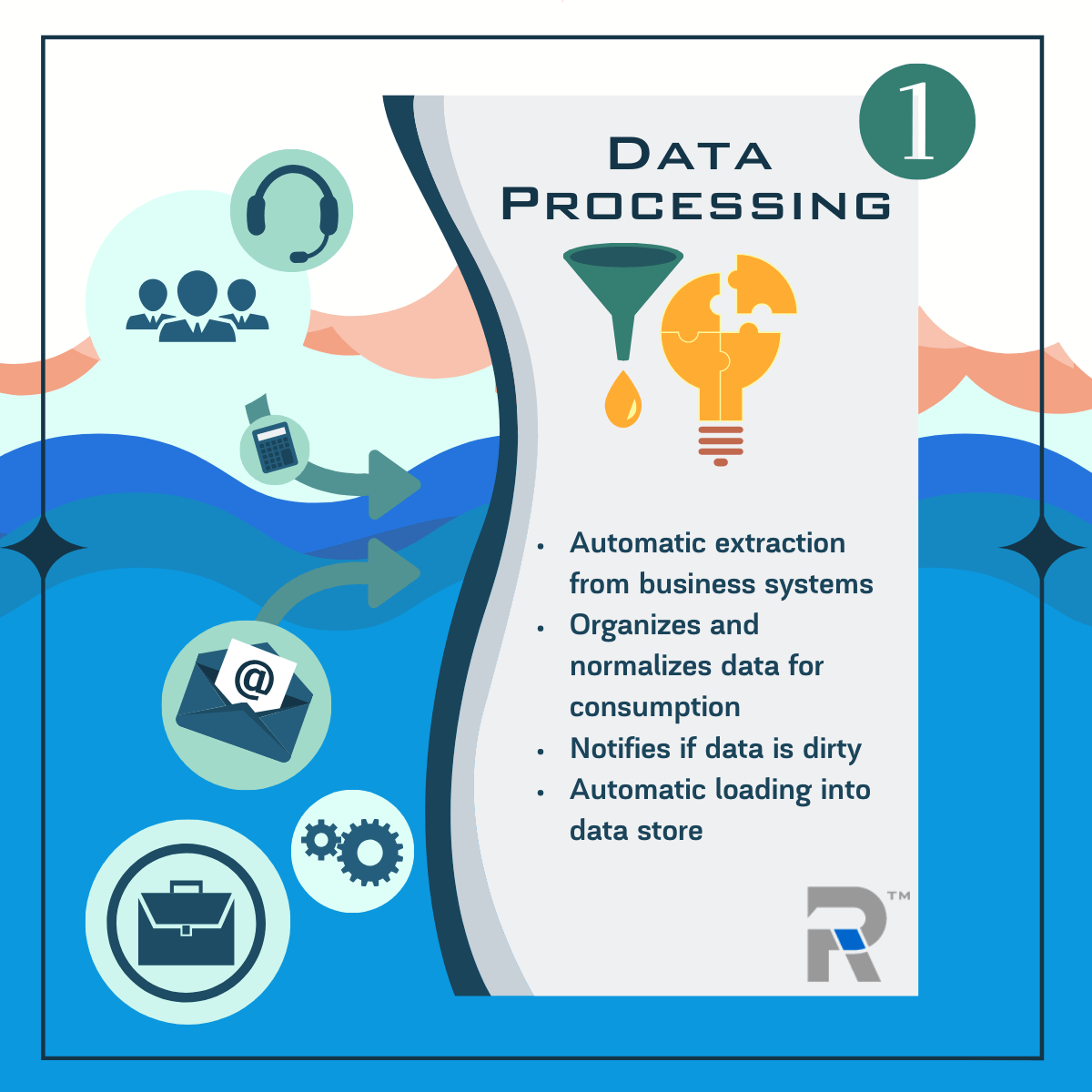

The pipeline must first extract, or ingest, information and data from a source or multiple sources.

Data source systems often include:

- APIs

- Websites

- Data lakes

- SaaS applications

- Relational databases, or transactional databases

How does data extraction actually work?

The technical details depend on the exact pipeline architecture. Because the pipeline is a separate type of data system from the source, it requires some sort of interface to communicate: an integration.

A company might purchase an ETL tool from a vendor. In this case, integrations with different source systems are usually included with the tool. In other cases, data engineers employed at the company might build the ETL pipeline in-house, including the necessary integrations with sources.

Pipelines may extract source data in real-time, meaning as soon as it appears in the source. They can also use a batch processing workflow, meaning that new changes are picked up at a set time interval.
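To make the batch workflow concrete, here is a minimal sketch of incremental extraction. It assumes a hypothetical `orders` table in an in-memory SQLite database standing in for the source system, and it tracks a "watermark" (the highest `id` seen so far) so that each run picks up only new rows. The table, column names, and `extract_batch` function are illustrative, not part of any particular ETL tool.

```python
import sqlite3


def extract_batch(conn, last_seen_id):
    """Return rows added to the source since the previous run."""
    cursor = conn.execute(
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id",
        (last_seen_id,),
    )
    return cursor.fetchall()


# Hypothetical source system: an in-memory SQLite database.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
source.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("ada", 19.99), ("bob", 5.00)],
)

# First scheduled run: everything is new.
first_batch = extract_batch(source, last_seen_id=0)
watermark = first_batch[-1][0]  # remember the highest id extracted

# A new row appears in the source between runs.
source.execute(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)", ("cy", 42.0)
)

# Second scheduled run: only the new row is picked up.
second_batch = extract_batch(source, last_seen_id=watermark)
```

A real-time pipeline would replace the scheduled query with a continuous feed of changes, but the idea of tracking what has already been extracted stays the same.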

Transform

Transformation is the second step of the ETL process. In this step, raw data extracted from the source is transformed into data that is compatible with the specific destination and use case.

For example, your business intelligence tool might require JSON data that conforms to a certain schema, but your source data is in CSV format. The transformation step of your ETL pipeline could change the data from one format to another as it passes through.
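As a rough illustration of that CSV-to-JSON case, the sketch below uses only Python's standard `csv` and `json` modules. The field names (`order_id`, `customer`, `amount`) and the target schema are assumptions made up for the example.

```python
import csv
import io
import json


def csv_to_json_records(csv_text):
    """Parse CSV text and emit a JSON array with typed fields."""
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        records.append(
            {
                "order_id": int(row["order_id"]),    # CSV values arrive as strings
                "customer": row["customer"].strip(),  # trim stray whitespace
                "amount": float(row["amount"]),
            }
        )
    return json.dumps(records)


# Hypothetical raw input from the source system.
raw_csv = "order_id,customer,amount\n1, ada ,19.99\n2,bob,5\n"
json_payload = csv_to_json_records(raw_csv)
```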

That’s just one example, and use cases vary widely. But one thing is almost always true: you must transform data at some point during its lifecycle. In an ETL pipeline, that transformation happens almost immediately after the data is extracted.

What types of data processing happen during the transformation step?

Most often, they include simple processes like:

- Filtering

- Aggregation

- Data cleaning

- Feature extraction

- Re-shaping data to conform to a schema

These relatively simple transformations have a critical purpose. They ensure that when the dataset arrives in storage or another application, it’s clean, consistent, and won’t cause errors. It’s ready to be used to meet business goals.
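The following sketch chains three of the transformations listed above (data cleaning, filtering, and aggregation) in plain Python. The input rows, the `status` field, and the `total_paid` output column are hypothetical; a production pipeline would more likely express the same steps in SQL or a dataframe library.

```python
from collections import defaultdict

# Hypothetical raw rows as they might arrive from extraction.
raw_rows = [
    {"customer": " Ada ", "amount": "19.99", "status": "paid"},
    {"customer": "bob", "amount": "5.00", "status": "refunded"},
    {"customer": "ADA", "amount": "10.01", "status": "paid"},
    {"customer": "cy", "amount": "", "status": "paid"},  # missing amount
]


def clean(row):
    """Normalize names and types; return None for rows that cannot be repaired."""
    amount = row["amount"].strip()
    if not amount:
        return None
    return {
        "customer": row["customer"].strip().lower(),
        "amount": float(amount),
        "status": row["status"],
    }


def transform(rows):
    # Data cleaning: drop unusable rows and normalize the rest.
    cleaned = [c for c in (clean(r) for r in rows) if c is not None]
    # Filtering: keep only paid orders.
    paid = [r for r in cleaned if r["status"] == "paid"]
    # Aggregation: total paid amount per customer.
    totals = defaultdict(float)
    for r in paid:
        totals[r["customer"]] += r["amount"]
    return [
        {"customer": name, "total_paid": round(total, 2)}
        for name, total in sorted(totals.items())
    ]


summary = transform(raw_rows)
```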

The fact that they are simple also makes them easy to automate. This saves data team members time from a task that would otherwise be quite tedious.

By contrast, more complex, exploratory data transformations and deep analysis can occur later in the data life cycle. This work is more complex and usually requires human attention. But automating the easy stuff up-front allows you to arrive here faster.

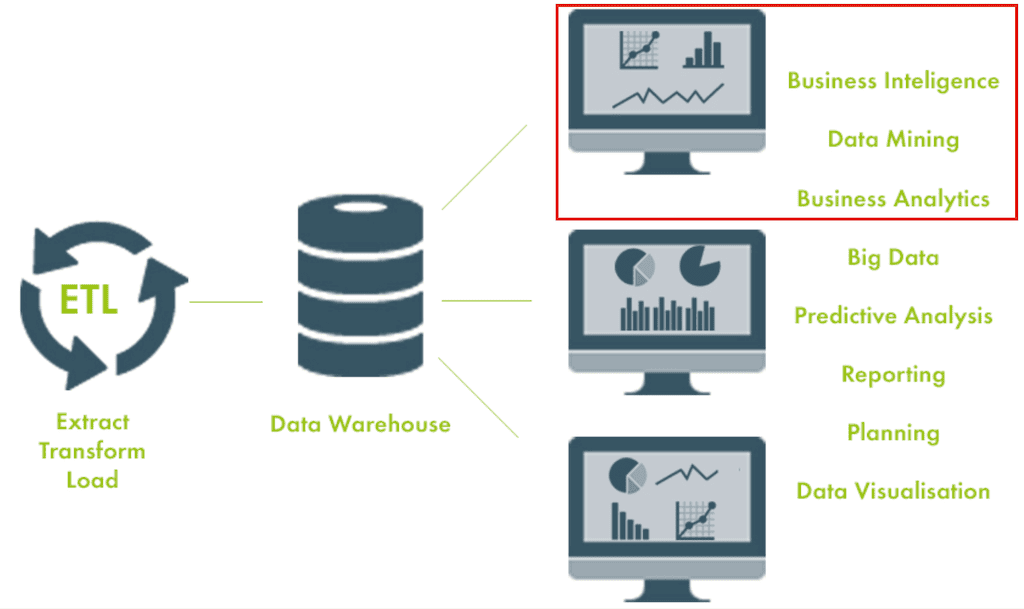

Load

The last step of the ETL process is loading the data into the target system, or destination. It’s the inverse of extraction.

In a typical, popular use case, the destination is a data warehouse or some other type of data store designed to power data analysis. Once in the warehouse, all sorts of analytic workflows can take place: business intelligence, machine learning, exploratory analytics, and more.

But use cases vary widely, and there are many other possible destinations. Generally speaking, destination systems can include:

- Data warehouses

- SaaS applications

- Operational business systems

- Visualizations and dashboards

Just as with the source, the pipeline needs to integrate with the destination system to load data. The technical details of the loading process depend on whether you use an ETL tool, what tool that is, or whether your ETL pipeline was built in-house.
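As a small sketch of the load step, the example below writes transformed rows into an in-memory SQLite database that stands in for a data warehouse. The `customer_totals` table and the `load` function are assumptions for illustration. `INSERT OR REPLACE` is used so that re-running the load for the same customer overwrites the old row instead of duplicating it.

```python
import sqlite3


def load(conn, rows):
    """Write transformed rows to the destination, replacing existing keys."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_totals ("
        "customer TEXT PRIMARY KEY, total_paid REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customer_totals (customer, total_paid) "
        "VALUES (:customer, :total_paid)",
        rows,
    )
    conn.commit()


# Hypothetical destination: SQLite standing in for a warehouse.
warehouse = sqlite3.connect(":memory:")
load(
    warehouse,
    [
        {"customer": "ada", "total_paid": 30.0},
        {"customer": "bob", "total_paid": 5.0},
    ],
)
# A later run carries an updated total for an existing customer.
load(warehouse, [{"customer": "ada", "total_paid": 42.5}])

loaded = warehouse.execute(
    "SELECT customer, total_paid FROM customer_totals ORDER BY customer"
).fetchall()
```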

Once data is loaded, you can start to notice the latency of the data flow; that is, the amount of time it takes the ETL data to move from source to destination.

Depending on the pipeline, latency can be in the hours, minutes, or, if real-time data streaming is used, down to the millisecond. We’ll discuss whether real-time ETL is possible toward the end of this article.
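One simple way to reason about latency is to compare the time a record was created in the source with the time it landed in the destination. The sketch below assumes both timestamps are available on each record; the timestamps and the `latency_seconds` helper are hypothetical.

```python
from datetime import datetime, timezone


def latency_seconds(created_at, loaded_at):
    """End-to-end pipeline latency for a single record, in seconds."""
    return (loaded_at - created_at).total_seconds()


# Hypothetical timestamps for one record in a batch pipeline.
created = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
loaded_time = datetime(2024, 1, 1, 12, 15, 0, tzinfo=timezone.utc)

batch_latency = latency_seconds(created, loaded_time)
```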

ETL Pipeline Vs Data Pipeline

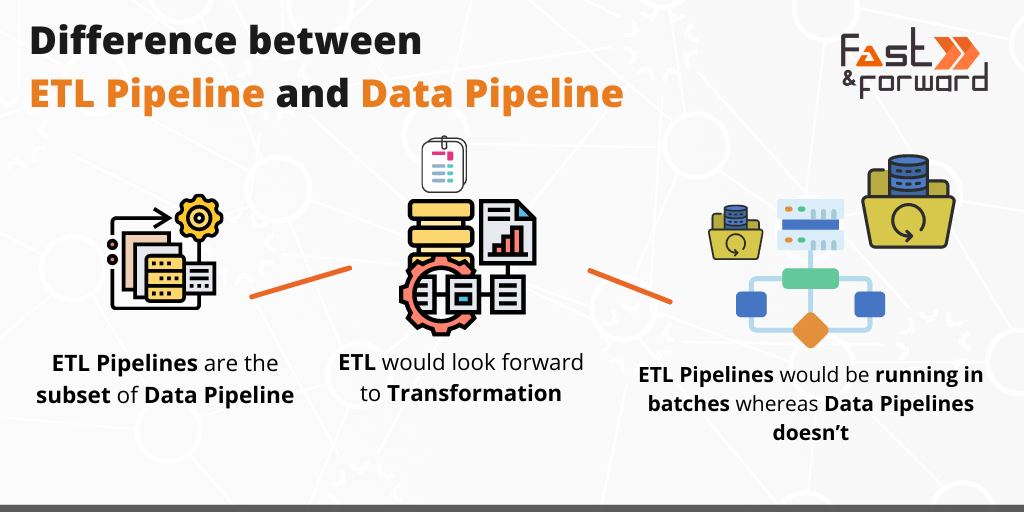

ETL pipelines fall under the category of data integration: they are data infrastructure components that integrate disparate data systems.

More specifically, ETL pipelines are a subset of data pipelines. Simply put, a data pipeline is any system that takes data from its various sources and funnels it to its destination.

But it’s not really that simple.

Every company’s data infrastructure and strategy are complex. Companies have different sources, destinations, and goals for their data. As a result, when you look closer, you’ll find that every data pipeline is unique.

ETL pipelines are a sub-category of data pipelines designed to serve a subset of the tasks performed by data pipelines, in general.

If you’re familiar with the world of data, you may have heard “data pipeline” and “ETL pipeline” used interchangeably, although they are different. This usage pattern has to do with the history of data pipelines.

ETL was among the first data pipeline architectures to gain popularity in the enterprise. This is because it was fairly simple for a data engineer to build a basic on-premises ETL pipeline specifically for every one of their employer’s use cases.

In those days, you couldn’t purchase an ETL tool; you had to build your own.

Over the years, data infrastructure has moved into the cloud, compute has grown more powerful, and service providers started to sell different types of tools. As a result, the possibilities for data pipelines have grown complex.

Despite all this, it’s very possible to home in on a few things that set ETL pipelines apart from other types of data pipelines.

Transformation Isn’t Necessarily Done In Data Pipelines

Data pipelines don’t, by definition, have a transformation step. They almost always should, for reasons we discussed previously: Most source data is messy and needs to be cleaned up.

Data that isn’t minimally aggregated or shaped to a schema will quickly become unmanageable and useless.

But let’s imagine a perfect world for a moment. Let’s say you had a perfect set of JSON data that you wanted to move from a cloud storage provider to an operational system that happened to honor that exact format. In theory, you wouldn’t need to transform it. The “T” in “ETL” would be unnecessary.

ETL Pipelines End With Loading

In the same vein, ETL pipelines explicitly require that transformation occurs between extraction and loading. Loading is the last step. This isn’t always the case for data pipelines.

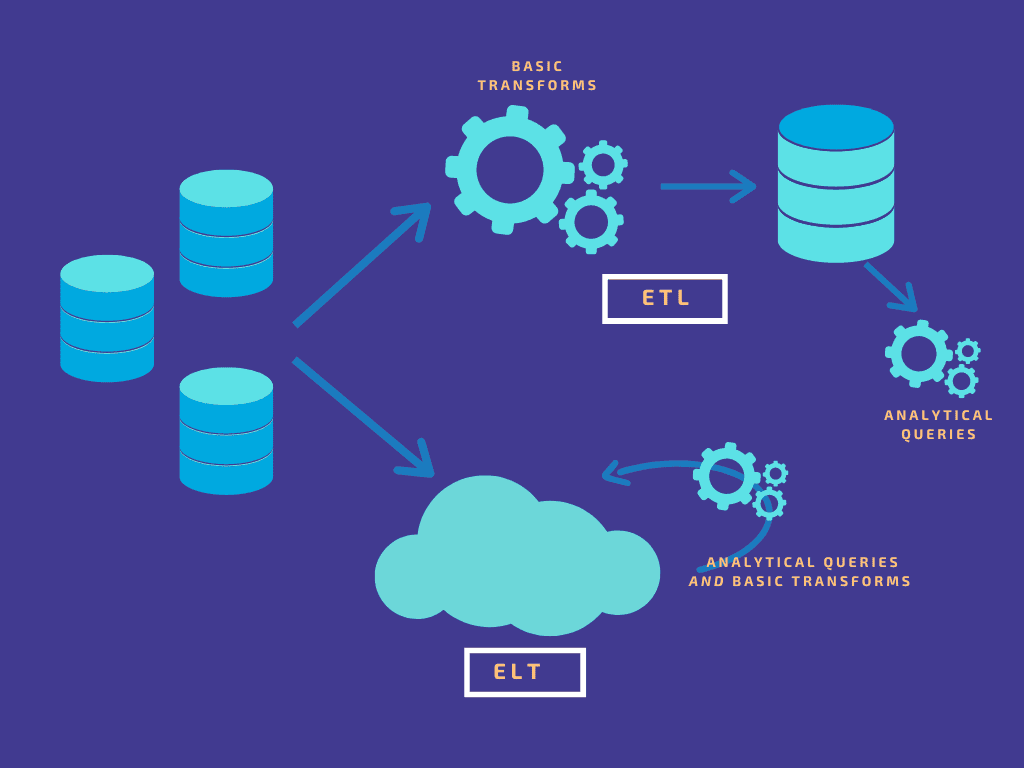

For example, the transformation could occur after loading (an ELT pipeline), or the pipeline could be more complex, with even more steps.

ETL vs ELT

Now, we’d be remiss if we didn’t compare ETL to ELT pipelines.

ELT pipelines are another well-known category of data pipelines. When you know what ETL stands for, it’s pretty easy to figure out what ELT means.

“E” still stands for extract. “L” still stands for load. And “T” still stands for transform.

An ELT pipeline is simply a data pipeline that loads data into its destination before applying any transformations.

In theory, the main advantage of ELT over ETL is time. With most ETL tools, the transformation step adds latency.

On the flip side, ELT has its drawbacks.

With ELT, you’re essentially sending data straight to the destination and putting off the transformations until later. By dumping disorganized, unclean data into your data store, you risk bogging your systems down and even storing corrupt data that you’ll never be able to recover.
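To show the difference in ordering, here is a minimal ELT sketch: raw rows are loaded into the destination untouched, and the transformation runs afterward as SQL inside the destination. An in-memory SQLite database stands in for the warehouse, and the `raw_orders` and `customer_totals` tables are hypothetical.

```python
import sqlite3

# Hypothetical raw data, loaded exactly as extracted (amounts are still text).
raw_rows = [
    ("ada", "19.99", "paid"),
    ("bob", "5.00", "refunded"),
    ("ada", "10.01", "paid"),
]

warehouse = sqlite3.connect(":memory:")

# Load first: the raw data lands in the destination without any cleanup.
warehouse.execute(
    "CREATE TABLE raw_orders (customer TEXT, amount TEXT, status TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform later: filtering and aggregation run inside the destination.
warehouse.execute(
    """
    CREATE TABLE customer_totals AS
    SELECT customer,
           ROUND(SUM(CAST(amount AS REAL)), 2) AS total_paid
    FROM raw_orders
    WHERE status = 'paid'
    GROUP BY customer
    """
)

elt_result = warehouse.execute(
    "SELECT customer, total_paid FROM customer_totals ORDER BY customer"
).fetchall()
```

Note that the unclean `raw_orders` table stays in the destination, which is the trade-off described above. In an ETL pipeline, only the transformed result would arrive.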

6 Proven & Tested Benefits Of ETL Pipelines

Ultimately, ETL pipelines are vital to companies because they collect and prepare data for analytics and operational workflows. By doing so, they ensure businesses of all industries can effectively use their data to function in the modern world.

ETL pipelines share all the benefits of data pipelines in general, as well as having some special benefits of their own. Let’s break that down.

Collecting Data From Various Sources

As businesses scale, they inevitably start to generate all different types of data, which comes from an equally wide array of sources.

Databases, APIs, websites, CRMs and other apps… the list goes on.

ETL pipelines provide a reliable way of centralizing data from all these different sources.

Reduced Time For Data Analysis

Data arrives at the target system transformed and ready to use.

Sure, transforming the data along the way can add a bit of time to the pipeline. But once it’s loaded, it’s ready to go.

You can transform your data however you need — so that it’s not just compatible with the target system, but also easy to use for human analysts and data scientists. If your destination is a data warehouse, as is often the case, this reduces the time to actionable insights.

Deeper Analytics And Business Intelligence

By automating the more tedious transformations — aggregation, filtering, re-shaping — ETL pipelines don’t just save time. They also allow the humans that consume data to take their analysis even deeper.

ETL eliminates manual work and human error from the early transforms, allowing analysts to innovate.

Easy Operationalization

The ETL pipeline’s destination isn’t always a warehouse. In some cases, you’ll pipe data straight from the source to applications like business intelligence software or an automated marketing platform.

This is known as data operationalization: putting data to work in the business’s day-to-day operations. Once you know what transformations are required to operationalize, you can create a direct path for data with an ETL pipeline.

Ample Support And Tooling

ETL isn’t the only kind of data pipeline, but it’s one of the easiest to set up. This is because it’s been around for a long time.

Many data engineers have years of experience building ETL pipelines… but you might not even need to hire an engineer. Companies that sell ETL tools have proliferated in recent years. Most of these tools are extremely easy to use.

Data Quality Assurance

Transforming before loading ensures that only high-quality data arrives in the target systems. This prevents nasty surprises like corrupt data or incorrect insights.

You can also add automated testing to your ETL pipeline for an extra level of assurance that your transformations are succeeding.
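As one possible shape for such a check, the sketch below validates a batch of transformed rows before they are loaded. The rules (a required, unique `order_id` and a non-negative numeric `amount`) are assumptions chosen for the example. Real pipelines would define rules that match their own schema.

```python
def validate(rows):
    """Return a list of problems found; an empty list means the batch passed."""
    problems = []
    seen_ids = set()
    for index, row in enumerate(rows):
        order_id = row.get("order_id")
        if order_id is None:
            problems.append(f"row {index}: missing order_id")
        elif order_id in seen_ids:
            problems.append(f"row {index}: duplicate order_id {order_id}")
        else:
            seen_ids.add(order_id)

        amount = row.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            problems.append(f"row {index}: invalid amount {amount!r}")
    return problems


# Hypothetical batches: one clean, one with several defects.
good_batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": 5.0},
]
bad_batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 1, "amount": -3.0},  # duplicate id and negative amount
    {"amount": "n/a"},                # missing id and non-numeric amount
]

good_problems = validate(good_batch)
bad_problems = validate(bad_batch)
```

A pipeline could refuse to load any batch for which `validate` returns a non-empty list, or route the failing rows to a separate table for review.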

Disadvantages of ETL Pipelines

The main disadvantage of ETL pipelines is that — at least traditionally — they are not real-time data pipelines.

Rather, ETL pipelines rely on batch processing. This type of processing is relatively easy to implement, but it introduces some amount of delay.

As we progress through the 2020s, real-time data is quickly becoming the norm. Especially for operational data workflows, we expect the systems we use to reflect new updates immediately.

A few examples of when a delay in the data pipeline is not acceptable include:

- When you’re detecting financial fraud.

- When you’re managing inventory in a fast-paced warehouse.

- When you need to capture a customer for an online sale before they leave your site.

What About Real-time ETL?

Real-time data pipelines are possible, and some of them include transformations.

These pipelines rely on a data streaming infrastructure and apply transformations on the fly. There are no data batches, and as a result, the time from source to destination is a matter of milliseconds.
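The idea of transforming records on the fly can be sketched with Python generators, where each event is transformed and delivered as soon as it arrives rather than waiting for a batch. Here the `event_stream` generator is a hypothetical stand-in for a real streaming source such as a message broker, and a plain list stands in for the destination.

```python
def event_stream():
    """Hypothetical stand-in for a streaming source."""
    yield {"type": "purchase", "user": "Ada", "amount": 19.99}
    yield {"type": "page_view", "user": "Bob"}
    yield {"type": "purchase", "user": "Cy", "amount": 5.0}


def transform_stream(events):
    """Filter and reshape each event as it passes through."""
    for event in events:
        if event["type"] != "purchase":
            continue  # filtering happens per event, not per batch
        yield {
            "user": event["user"].lower(),
            "amount_cents": round(event["amount"] * 100),
        }


def load_stream(records, sink):
    """Deliver each transformed record to the destination immediately."""
    for record in records:
        sink.append(record)


destination = []
load_stream(transform_stream(event_stream()), destination)
```

Because generators are lazy, no event is held back to wait for others. Each one flows through the transform and into the destination before the next is read.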

You won’t hear these kinds of pipelines called “ETL,” because, on a technical level, they are very different processes. But they ultimately achieve the same goal: getting data from source to destination, while transforming it along the way.

9 Easy-To-Use ETL Pipeline Tools To Test Out

Here are the best ETL tools and services you can use to set up your ETL pipelines:

1. Estuary Flow

First up, we have Estuary Flow. We provide an easy-to-use and scalable streaming ETL solution that allows you to efficiently capture data from sources such as databases and SaaS applications.

Flow supports real-time CDC (Change Data Capture) and offers real-time integrations for apps that support streaming. It can also integrate any Airbyte connector to access over 200 batch-based endpoints for long-tail SaaS.

2. AWS Glue

AWS Glue is Amazon’s serverless data integration service that simplifies the process of ETL pipeline development. AWS Glue uses Apache Spark as its processing engine which provides a scalable and distributed computing framework for data processing. It automatically generates ETL code by analyzing the source and destination data structures.

It has support for over 70 different data sources and provides a GUI-based interface, called the AWS Glue Studio, which you can use to visually create, run, and monitor ETL pipelines.

3. Portable

Portable.io is a cloud-based ETL tool that allows users to extract, transform, and load data into a data warehouse. Portable.io offers a no-code solution, which means users do not need to write code to connect to data sources and build data pipelines. It can connect to a wide variety of data sources and warehouses. Portable offers a free trial and hands-on support.

4. Azure Data Factory

Microsoft’s offering for data integration is Azure Data Factory. It is an extremely versatile tool and streamlines hybrid data integration with a fully managed, serverless service. It enables users to build code-free ETL and ELT pipelines using over 90 built-in connectors, simplifying data ingestion from diverse sources.

The platform also offers autonomous ETL which empowers citizen integrators to accelerate data transformation and drive valuable business insights.

5. Google Cloud Dataflow

Google Cloud Dataflow offers a unified, serverless solution for both stream and batch data processing. The platform simplifies the creation of ETL pipelines and ensures fast, cost-effective data processing with features such as automated resource management and autoscaling.

It also offers real-time AI capabilities which allow advanced analytics use cases, including predictive analytics and anomaly detection.

6. Integrate.io

Integrate.io is an easy-to-use no-code data pipeline platform and offers a complete set of ETL tools and connectors that simplify the building and management of data pipelines. Its feature set includes an intuitive drag-and-drop interface, fast ELT data replication, and automated REST API generation.

The platform supports over 150 data sources and destinations, ensuring seamless data integration across your entire ecosystem.

7. IBM DataStage

DataStage is IBM's offering for developing both ETL and ELT pipelines. It is available for on-premises deployment or on IBM Cloud Pak for Data, which offers automated integration capabilities in a hybrid or multi-cloud environment. The tool provides a scalable and parallel processing environment for ETL operations which enables users to process large volumes of data efficiently.

Key features of IBM DataStage include:

- Parallel engine

- Metadata support

- Automated load balancing

- Prebuilt connectors and stages

- In-flight data quality management

8. Oracle Data Integrator

Oracle Data Integrator (ODI) is a comprehensive data integration platform that provides a new declarative design approach to define data transformation and integration processes. This results in easy maintenance and development. It can work with a wide range of data sources and targets, including relational databases, flat files, XML files, and cloud-based systems such as Amazon S3 and Microsoft Azure.

The ODI platform is integrated with the Fusion Middleware platform and consists of components for enterprise-scale deployments, hardened security, scalability, and high availability.

9. Matillion

Matillion is an ETL/ELT tool designed specifically for cloud database platforms. It supports Snowflake, Delta Lake on Databricks, Amazon Redshift, Google BigQuery, and Azure Synapse Analytics. It features a modern, browser-based UI with powerful, push-down ETL/ELT functionality that enables users to set up and start developing ETL jobs within minutes.

Now that we know about some prominent ETL tools and services, let’s take a look at ETL pipeline examples to see how they’re beneficial in real life.

7 Real-World Use Cases Of ETL Pipelines

ETL pipelines have many use cases across various industries and domains. Here are some of the many potential applications for you to consider using in your business:

Online Review Analysis

One of the most important applications of ETL pipelines is the sentiment analysis of online reviews.

But how can ETL pipelines help with this?

More and more people leave reviews online these days for everything from the restaurants they eat at to the apps they use and the products they buy.

ETL pipelines can process large volumes of these customer reviews. Running advanced machine learning algorithms on this data enables businesses to analyze the sentiment of their customers, identify trends, and make improvements to their products and services.

Analytics For Ride-Hailing & Taxi Services

Transportation services such as taxis, ride-hailing platforms, and even public transit can use ETL pipelines to process their real-time data. This can provide valuable insights into usage patterns, peak hours, and other relevant metrics. This information can be used to optimize routes, improve efficiency, and enhance customer satisfaction.

Aviation Data Analysis

Another interesting use case of ETL pipelines is in aviation data analysis. ETL pipelines are used to collect, clean, and consolidate data from various sources within the aviation industry like flight schedules, passenger information, and airport operations.

Data analysis can then be carried out on this integrated data to gain meaningful insights to optimize operations, improve safety, and enhance the overall passenger experience.

Oil & Gas Industry Analytics

For the oil and gas industry, ETL pipelines can process real-time data streaming from sensors on oil rigs. This enables efficient monitoring of oil well performance, equipment health, and safety conditions on the rig. If used properly, this information optimizes production and maintenance schedules and leads to reduced downtime and operational costs.

Social Media & Video Platform Analytics

Social media and video platform analytics are huge areas of application for ETL pipelines. These platforms generate large volumes of structured and semi-structured data that need to be transformed and loaded into large data warehouses.

That’s where ETL pipelines come in. The processed and stored information helps these platforms understand the behavior of their users and their preferences. This allows them to optimize their service, recommend relevant content to users, and carry out targeted marketing.

Retail & eCommerce Analytics

ETL pipelines can be used by brick-and-mortar stores and eCommerce platforms alike. For instance, eCommerce and retail platforms like Walmart and Amazon use ETL pipelines (as well as ELT pipelines) to process large amounts of data, transform it and load it into a data warehouse.

But how does this help retailers? These companies can use this data to analyze user behavior, purchase patterns, and sales performance. This helps them identify trends, optimize inventory management, and improve overall business performance.

Healthcare Data Analytics

Healthcare provides a unique use case for data analysis because it requires a unified analysis of historical as well as real-time data. ETL pipelines can be employed in various use cases in healthcare data systems to improve patient care and optimize healthcare operations.

A practical application of ETL pipelines in the healthcare system is for warehousing patient and medication data from multiple healthcare facilities after processing. Healthcare facilities can also use streaming ETL pipelines to process real-time data from patient monitoring systems to create alerts when patients need urgent medical attention.

Conclusion

ETL pipelines started as a special piece of technology built by engineers. Today, easy ETL tools put them within the reach of many more stakeholders. And that group of stakeholders has grown huge.

Business leaders, IT professionals, salespeople, and many, many more types of workers are impacted by ETL pipelines and other types of data pipelines.

In this article, you learned what ETL means, the role of ETL pipelines, and how they differ from data pipelines in general. We also covered each step of the process in detail and discussed the advantages of using ETL pipelines for data integration.

Most importantly, we discussed the importance of transformation in data integration. We live in the age of “big data.” Organizations need to keep their massive amounts of data in order if they want to derive useful insights from it.

Finally, we discussed 9 ETL pipeline tools and took a look at the applications of these pipelines in real life.

After reading about ETL pipelines, where do you think they fit in your business process? Do you use ETL pipelines or a different architecture? Let us know in the comment section below.

_______________________________________

Today, you can achieve the same benefits of traditional ETL, but in real time.

Estuary Flow is a tool that helps you create real-time data pipelines. With Flow, you can add transformations before loading data into your destination systems. But it’s not traditional ETL — it’s a data streaming solution with its own, unique process.

You can learn more about how Flow works here, or use it for free.
