As the volume of generated data grows, managing it is becoming increasingly complex and difficult for businesses. However, with the emergence of streaming analytics, businesses can now process and analyze data in real time.

Organizations use streaming analytics to get a crystal-clear real-time view of the data that matters, from social media feeds and IoT sensors to sales operations. Rather than relying on traditional batch analysis techniques, this powerful tool allows organizations to quickly process incoming data across multiple sources simultaneously – providing valuable insights for making informed decisions in no time.

In this article, we will explore the basics of streaming analytics, its differences from traditional data analysis approaches, and its benefits. We will also examine the most popular streaming analytics tools, including Microsoft Azure, AWS, and Apache Kafka, and discuss how you can integrate data in real-time using Estuary, the no-code real-time data pipeline solution.

By the time you are done reading this guide, you’ll have a solid understanding of streaming analytics and its benefits. You will also be familiar with the most popular tools available for streaming analytics and know how to choose the right platform for your needs.

What Is Streaming Analytics?

Streaming data is the future of digital communication, business intelligence, and operations. It is data that is continuously generated by thousands of sources at once and processed as it arrives, allowing us to stay up to date with user activity on our applications and websites, buying habits on eCommerce stores, gaming progress, device geolocations, social media interactions, and more.

Once analyzed, all these small bits of information give valuable insights into consumer behavior that can help companies make smarter decisions faster than ever before. This is known as streaming analytics or event stream processing.

Companies can now access a wide range of analytics such as correlations, aggregation, filtering, and sampling to gain better insight into their business operations.

By analyzing social media streams, they can also measure public sentiment around their brand or product, which lets them respond quickly when the need arises.

Because events are processed as they arrive, streaming analytics typically relies on lightweight, incremental computations rather than heavy batch jobs, which keeps latency in the millisecond range. To sustain that, it requires scalable, fault-tolerant, and reliable systems.
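To make the idea of sampling a stream concrete, here is a minimal Python sketch of reservoir sampling, one common technique for keeping a uniform random sample from a stream whose length is not known in advance. The article does not name a specific sampling method, so treat this as one possible approach; the event fields (`user_id`, `action`) are hypothetical.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, rng: random.Random) -> List[T]:
    """Keep a uniform random sample of k items from a stream of unknown length."""
    reservoir: List[T] = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Keep the new item with probability k / (i + 1).
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = item
    return reservoir


# Hypothetical click events, produced lazily like a real stream.
events = ({"user_id": n, "action": "click"} for n in range(10_000))
sample = reservoir_sample(events, k=5, rng=random.Random(42))
```

The sample uses a fixed amount of memory no matter how many events pass through, which is why this kind of incremental computation suits streaming workloads.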

Workflow Of Streaming Analytics – Understanding The Mechanism

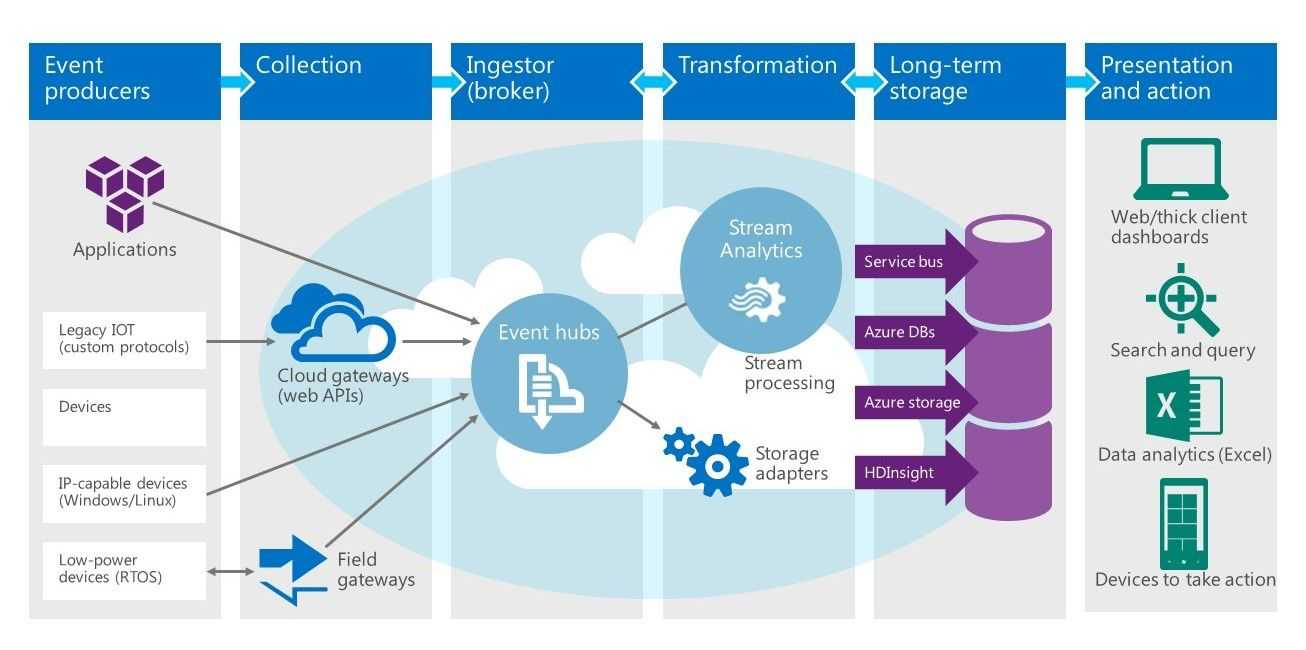

The general workflow of streaming analytics is as follows:

A. Data Ingestion

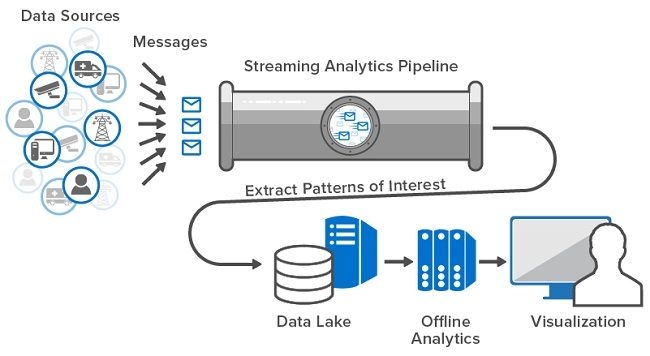

A key part of any data analytics project is how you get the necessary information. Data ingestion involves doing just that – pulling in all sorts of information from sensors, applications, and more into an easily accessible streaming platform.

It needs to be fast, reliable, and able to handle large volumes at a quick pace – gathering everything together so your real-time data analysis can take place quickly.
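As a rough illustration of ingestion, the sketch below merges two time-ordered sources into a single stream using Python's standard library. Real platforms use a message broker for this step; the in-memory lists, the `ts` field, and the source names here are assumptions made purely for the example.

```python
import heapq
from typing import Any, Dict, Iterator, List

Event = Dict[str, Any]


def merge_sources(*sources: List[Event]) -> Iterator[Event]:
    """Merge several time-ordered sources into one stream ordered by timestamp."""
    return heapq.merge(*sources, key=lambda event: event["ts"])


# Hypothetical inputs: each source is already sorted by its "ts" field.
sensor_events = [
    {"ts": 1, "source": "sensor", "value": 21.5},
    {"ts": 4, "source": "sensor", "value": 22.0},
]
app_events = [
    {"ts": 2, "source": "app", "value": "login"},
    {"ts": 3, "source": "app", "value": "purchase"},
]

unified = list(merge_sources(sensor_events, app_events))
```

Because `heapq.merge` is lazy, it reads from each source only as needed, which mirrors how an ingestion layer hands events downstream without loading everything first.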

B. Stream Processing

Data processing is a crucial step when it comes to analyzing data. It involves applying logic and transformations that filter, join, aggregate, or enrich the streams of data so they are ready for analysis.

Complex queries such as windowing, grouping, and pattern matching must be handled properly while making sure any issues with missing or duplicate records don’t slip through – all this on top of having fault tolerance mechanisms in place should something go wrong.
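The following sketch shows what windowing, grouping, and duplicate handling can look like in plain Python. It counts page views per fixed (tumbling) window and skips records whose ID has already been seen. The field names (`id`, `ts`, `page`) are hypothetical, and a production stream processor would also need to bound the deduplication state and deal with late events, which this example does not attempt.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Set, Tuple


def tumbling_window_counts(
    events: Iterable[Dict[str, Any]], window_seconds: int
) -> Dict[Tuple[int, str], int]:
    """Count events per (window start, page), ignoring duplicate event IDs."""
    seen_ids: Set[str] = set()
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for event in events:
        if event["id"] in seen_ids:
            continue  # duplicate delivery, skip it
        seen_ids.add(event["id"])
        # Align the timestamp to the start of its window.
        window_start = (event["ts"] // window_seconds) * window_seconds
        counts[(window_start, event["page"])] += 1
    return dict(counts)


clicks = [
    {"id": "a", "ts": 0, "page": "/home"},
    {"id": "b", "ts": 5, "page": "/home"},
    {"id": "b", "ts": 5, "page": "/home"},  # duplicate of the previous record
    {"id": "c", "ts": 12, "page": "/cart"},
    {"id": "d", "ts": 14, "page": "/home"},
]
result = tumbling_window_counts(clicks, window_seconds=10)
```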

C. Data Analytics

After cleaning the data, it is time to get your analytical engine running. By applying analytics and machine learning models to your data streams, you can look for anomalies, detect sentiment trends, or make predictions with ease.

Furthermore, because the models run continuously on incoming data, their results update in real time, so you can respond as soon as a pattern changes.
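As a simple illustration of anomaly detection on a stream, the sketch below flags a reading when it is far from the mean of the most recent values, measured in standard deviations (a rolling z-score). This is only one basic approach, chosen here for brevity; the window size, threshold, and sample readings are assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class RollingZScoreDetector:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window_size: int = 20, threshold: float = 3.0) -> None:
        self.window: Deque[float] = deque(maxlen=window_size)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.window) >= 2:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # The value joins the window either way, so the baseline keeps adapting.
        self.window.append(value)
        return is_anomaly


detector = RollingZScoreDetector(window_size=10, threshold=3.0)
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 19.9, 20.3, 35.0, 20.0]
flags = [detector.observe(r) for r in readings]
```

In this run only the reading of 35.0 is flagged. Each call does a small, bounded amount of work, so the check can keep pace with incoming data.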

D. Data Visualization

Once you have real-time analytics, it is time to visualize them using specialized tools. Visualization helps communicate the insights gained from streaming analytics to stakeholders clearly and concisely.

E. Action & Feedback

Now comes the final part of the streaming analytics process, taking actions based on the insights gained. This may involve triggering alerts, sending notifications, or initiating automated actions.

Feedback is also collected to evaluate the effectiveness of the actions taken and to improve the streaming analytics process.
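The action and feedback step could be sketched as follows: a threshold rule sends a notification when a metric crosses a limit, and recorded feedback shows how often alerts were actually useful. The class name, metric name, and threshold are hypothetical, and the `notify` callback stands in for whatever alerting channel you use.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ThresholdAlert:
    """Fire a notification when a metric exceeds a threshold, and track feedback."""

    threshold: float
    notify: Callable[[str], None]
    feedback: List[bool] = field(default_factory=list)

    def check(self, metric_name: str, value: float) -> bool:
        """Send a notification and return True if the value exceeds the threshold."""
        if value > self.threshold:
            self.notify(f"{metric_name} exceeded {self.threshold}: {value}")
            return True
        return False

    def record_feedback(self, was_useful: bool) -> None:
        """Store whether a fired alert turned out to be useful."""
        self.feedback.append(was_useful)

    def useful_ratio(self) -> float:
        """Share of alerts marked useful, or 0.0 if no feedback exists yet."""
        return sum(self.feedback) / len(self.feedback) if self.feedback else 0.0


sent: List[str] = []  # stand-in for an email, chat, or paging channel
alert = ThresholdAlert(threshold=0.9, notify=sent.append)
alert.check("cpu_utilization", 0.95)  # fires
alert.check("cpu_utilization", 0.40)  # does not fire
alert.record_feedback(True)
alert.record_feedback(False)
```

A low `useful_ratio()` is a signal that the threshold or the rule itself needs tuning, which is the feedback loop described above.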

How Can Businesses Benefit From Streaming Analytics?

Streaming analytics offers several concrete advantages for businesses. We've put together this list to show you the most important ones.

1. Instant Business Insights

Streaming analytics lets you tap into data you were not using before: you can ingest data from multiple streams and extract new types of insights. For example, streaming analytics can help businesses monitor and respond to customer behavior, preferences, and feedback in real time, enhancing customer satisfaction and loyalty.

2. Business Competitiveness

Streaming analytics can help businesses gain a competitive edge by analyzing trends and benchmarks as they emerge. For example, streaming analytics can help businesses identify new opportunities, trends, and patterns in real time, enabling them to create new products, services, and strategies.

3. Creating New Opportunities

Analyzing real-time data allows you to detect significant business events, predict future trends and find untapped streams, which lead to cost-cutting solutions, new revenue streams, and product innovations. For example, streaming analytics can help businesses optimize their resources and processes, reducing waste and inefficiency.

4. Enhanced Security & Compliance

Streaming analytics can help businesses protect their data and comply with regulations by applying rules and policies in real time. For example, streaming analytics can help businesses encrypt, mask, or delete sensitive data, or alert them of any breaches or violations in real time, ensuring data privacy and security.

Now that we are familiar with streaming analytics and how it can be used to analyze continuous data patterns, let’s see how it fares against traditional data analytics.

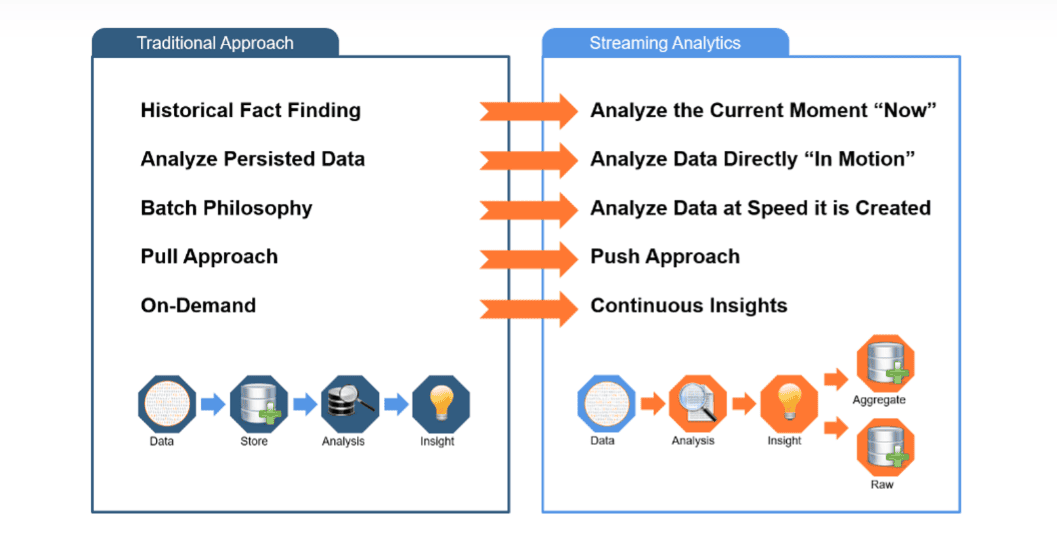

Streaming Analytics Vs Traditional Data Analytics – Everything You Need To Know

Now if you are wondering whether you really need to replace traditional data analytics with streaming analytics, well the answer is pretty simple. You don’t necessarily need to choose one over the other. Both techniques complement each other. Conventional data analytics relies on the batch processing approach and it differs quite a bit from streaming analytics.

So in this section, let’s level things up and see the end-to-end differences between traditional data analytics and streaming analytics.

I. Data Processing

The main difference between the two approaches lies in how the data processing occurs. Traditional data analytics employs the store-and-analyze workflow. First, the data stays in a data warehouse for some time and is then processed. In comparison, streaming analytics processes data as soon as it arrives – much like preparing notes as the teacher writes on the board.
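The contrast between the two workflows can be sketched in a few lines of Python. The batch function needs the full stored dataset before it produces an answer, while the streaming function updates its answer with every new value. The order amounts are made up for illustration.

```python
from typing import Iterable, Iterator, List


def batch_average(stored: List[float]) -> float:
    """Store-and-analyze: compute once over the complete dataset."""
    return sum(stored) / len(stored)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Process-on-arrival: emit an updated average after every value."""
    count = 0
    total = 0.0
    for value in values:
        count += 1
        total += value
        yield total / count


orders = [10.0, 20.0, 30.0, 40.0]  # hypothetical order amounts
batch_result = batch_average(orders)
running_results = list(streaming_average(orders))
```

Both approaches end at the same value, but the streaming version produced a usable answer after the first order instead of waiting for the last one.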

II. Latency

The second major difference between the two techniques is processing time. Since traditional or batch processing deals with large quantities of data at once, the overall time or latency can be in minutes, hours, or even days. On the other hand, streaming analytics can achieve sub-second latencies because each event, or a small window of events, is processed as soon as it arrives instead of waiting for a large batch to accumulate.

III. Dataset

Batch processing is an efficient way to handle a large amount of data at once, as all information needed for analysis is known in advance. On the other hand, the data streaming process enables the real-time analytic study of incoming data without having any previous knowledge about its size or content.

IV. Hardware

When it comes to processing large data sets, hardware requirements can make a big difference. Batch jobs can usually run on standard hardware at scheduled times, while stream processing generally calls for always-on infrastructure and an architecture built for low latency, because it has to keep up with fluctuating volumes of information as they arrive.

Nevertheless, both options utilize considerable resources for their distinct tasks in working with extensive amounts of data.

V. Use Case

Batch processing is a great option if you don't need instant answers, for example when backing up data, mining data, or warehousing information. Meanwhile, stream processing can provide you with real-time insights that are essential for activities such as fraud detection, analyzing social media activity, or understanding what your sensors have to say.

Now that we are aware of the differences between streaming analytics and traditional data analytics, let’s see how you can use Kafka for this.

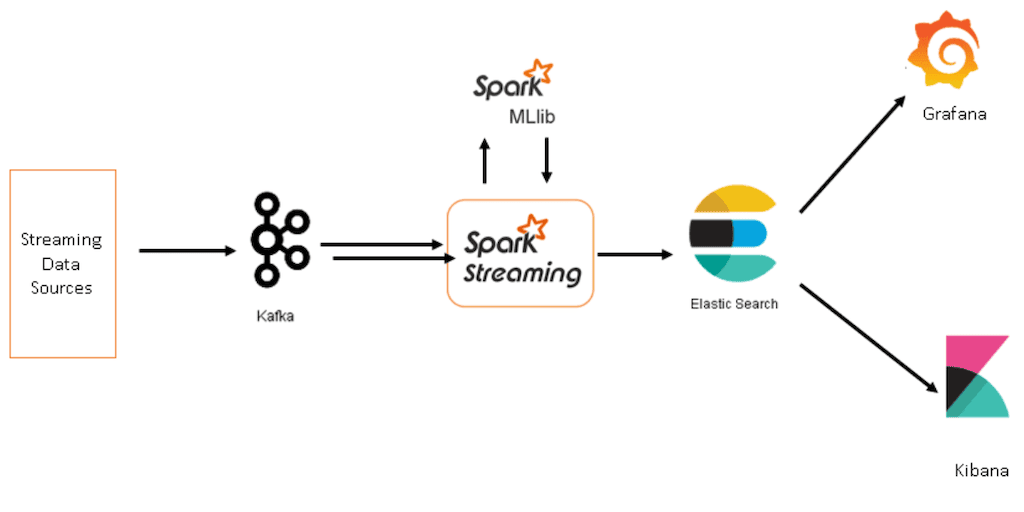

Streaming Analytics With Kafka

Apache Kafka is an open-source distributed event streaming platform. It was originally developed at LinkedIn, is now maintained under the Apache Software Foundation, and is often used alongside the Hadoop ecosystem. In such a setup, Kafka handles data ingestion and works with other Apache projects such as Apache Spark for data processing and Apache Hive for analytics.

With Kafka, you can build robust, fault-tolerant, and scalable data pipelines for streaming analytics. The platform provides four key capabilities for streaming analytics (messaging, storage, processing, and integration) that allow users to:

- Publish and subscribe to streams of data, such as events, logs, metrics, etc., using a pub/sub model.

- Store streams of data in a distributed, replicated, and fault-tolerant way, using a commit log data structure.

- Process streams of data as they occur, using Kafka Streams, a lightweight library for stream processing.

- Integrate Kafka with various data sources and sinks, such as databases, applications, cloud services, etc., using Kafka Connect or third-party connectors.
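To illustrate the pub/sub and commit log ideas from the list above, here is a deliberately simplified in-memory model in Python. It is not Kafka's API and leaves out partitions, replication, and persistence; the class and method names are invented for this example. What it does show is the core idea: records are appended to a log, and each consumer group reads at its own pace by tracking its own offset.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple


class ToyCommitLog:
    """In-memory illustration of an append-only log with per-group offsets."""

    def __init__(self) -> None:
        self._topics: Dict[str, List[Any]] = defaultdict(list)
        self._offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, record: Any) -> int:
        """Append a record to the topic and return its offset."""
        self._topics[topic].append(record)
        return len(self._topics[topic]) - 1

    def poll(self, group: str, topic: str, max_records: int = 10) -> List[Any]:
        """Return the next records for this group and advance its offset."""
        start = self._offsets[(group, topic)]
        batch = self._topics[topic][start:start + max_records]
        self._offsets[(group, topic)] = start + len(batch)
        return batch


log = ToyCommitLog()
for n in range(3):
    log.publish("orders", {"order_id": n})

# Two independent consumer groups read the same records at their own pace.
analytics_batch = log.poll("analytics", "orders")
billing_first = log.poll("billing", "orders", max_records=1)
billing_rest = log.poll("billing", "orders")
```

Because reading does not remove records from the log, adding a new consumer group never interferes with existing ones.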

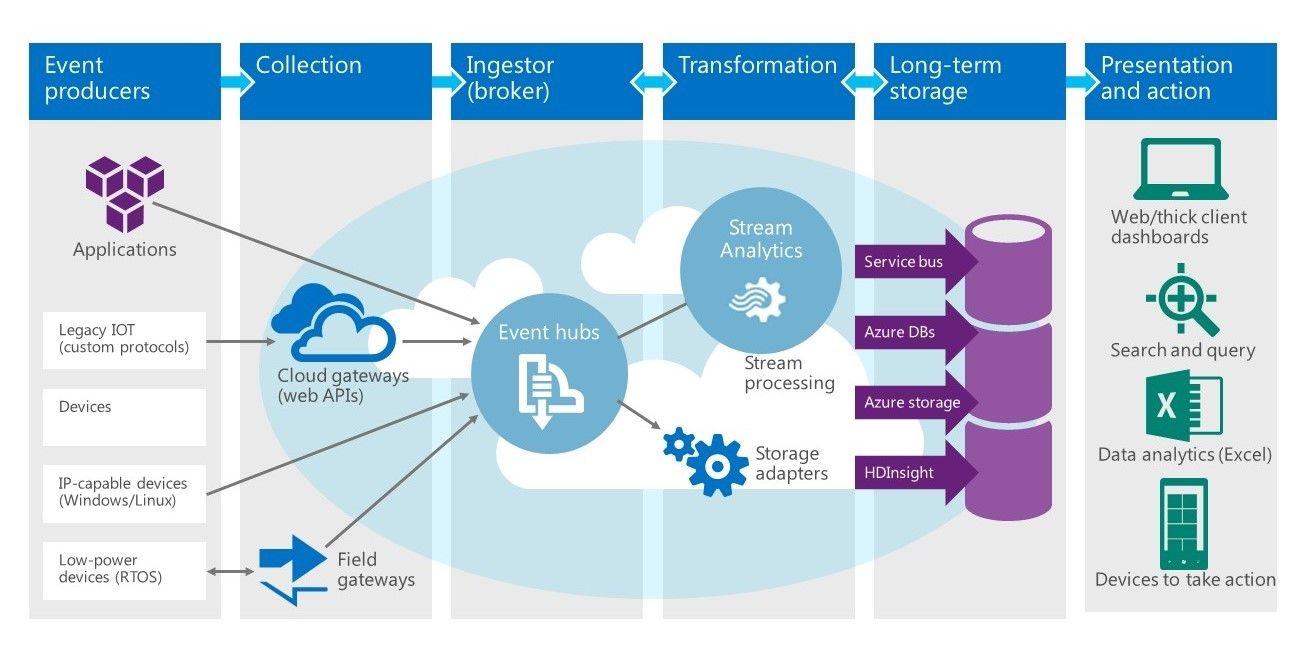

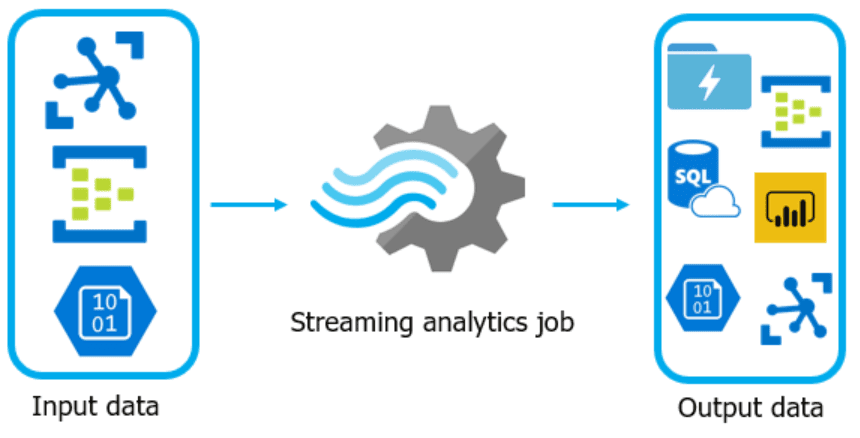

Streaming Analytics With Azure

Microsoft Azure is a cloud computing platform whose PaaS (Platform-as-a-Service) offerings include a range of services and features that support streaming analytics, starting with Azure Stream Analytics. Azure Stream Analytics is Microsoft Azure's managed service that lets users easily build and run real-time streaming analytics pipelines.

It supports a variety of input sources, such as Azure Event Hubs, Azure IoT Hub, or Azure Blob Storage, and output sinks, such as Azure SQL Database, Azure Cosmos DB, or Power BI. Users can also write SQL-like queries to analyze the data and apply machine learning models and custom code to enrich the data.

Azure Stream Analytics offers several benefits, such as:

- Scalability: Azure Stream Analytics can scale up or down automatically based on the data throughput and query complexity without requiring any infrastructure management or configuration.

- Reliability: It guarantees at least 99.9% availability and provides built-in disaster recovery and fault tolerance features.

- Security: Azure Stream Analytics provides strong encryption tools to protect data at rest and in transit. It also supports role-based access control and auditing.

- Cost-effectiveness: Azure Stream Analytics follows a pay-as-you-go pricing model which is very cost-effective, especially for beginners.

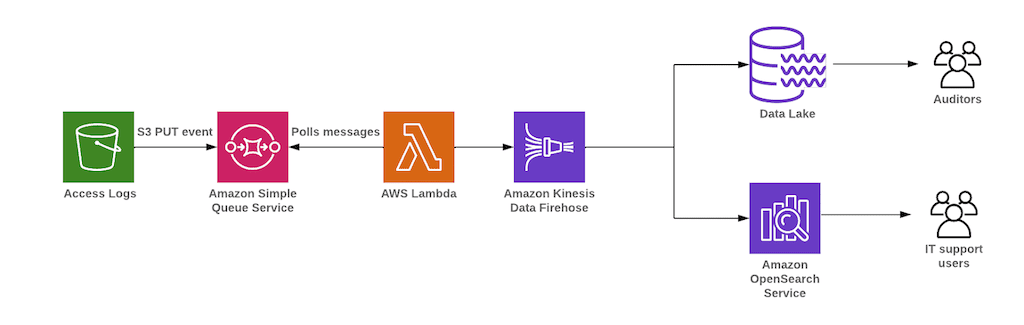

Streaming Analytics With AWS

AWS is a juggernaut when it comes to cloud computing and offers numerous services for streaming analytics.

Some of the key AWS services for streaming analytics are:

a. Amazon Kinesis

Amazon Kinesis is a streaming analytics platform that provides 4 services for working with streaming data:

- Kinesis Data Streams allows users to capture, store, and process streaming data from various sources, such as applications, devices, sensors, etc.

- Kinesis Data Firehose allows users to transfer streaming data into AWS data stores, such as Amazon S3 and Amazon Redshift, for near real-time analytics.

- Kinesis Data Analytics is a fully managed Apache Flink service on AWS that allows users to perform real-time analytics on streaming data.

- Kinesis Video Streams allows users to capture, process, and analyze video streams for applications such as security, smart home, and machine learning.

b. Amazon MSK

Amazon MSK makes it simple to take advantage of Apache Kafka – an open-source platform that connects streams of data, also called event streams, across applications.

MSK takes care of much of the provisioning, configuration, and maintenance of Kafka clusters for you. It also integrates with other AWS services such as CloudWatch, CloudFormation, and IAM for monitoring, infrastructure automation, and access control.

c. AWS Lambda

AWS Lambda is a serverless computing service by Amazon. It can run code with ease without the hassle of managing a server. It’s also great for processing streaming data from Amazon Kinesis, DynamoDB, or S3 to transform it into usable insights by performing activities like enrichment, filtering, and aggregation.

Plus, AWS Lambda scales automatically, charges only for the compute time consumed, and supports multiple programming languages.
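As a sketch of how a Lambda function might filter and enrich records from a Kinesis stream, consider the Python handler below. Kinesis delivers each record's payload base64-encoded under `Records[].kinesis.data`; the payload format (JSON with a `temperature_c` field), the 30-degree threshold, and the returned summary are assumptions made for this example. The `_fake_record` helper exists only so the handler can be tried locally without AWS.

```python
import base64
import json
from typing import Any, Dict, List


def handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Decode Kinesis records, keep hot readings, and tag them with an alert flag."""
    kept: List[Dict[str, Any]] = []
    for record in event["Records"]:
        # Kinesis payloads arrive base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        reading = json.loads(payload)
        if reading.get("temperature_c", 0) > 30:  # hypothetical field and threshold
            reading["alert"] = True  # enrichment step
            kept.append(reading)
    return {"received": len(event["Records"]), "kept": len(kept)}


def _fake_record(body: Dict[str, Any]) -> Dict[str, Any]:
    """Build a minimal Kinesis-style record for local testing (hypothetical helper)."""
    data = base64.b64encode(json.dumps(body).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


sample_event = {
    "Records": [
        _fake_record({"sensor": "a", "temperature_c": 35}),
        _fake_record({"sensor": "b", "temperature_c": 22}),
    ]
}
summary = handler(sample_event, None)
```

In a real deployment the filtered readings would be written to a downstream store or another stream rather than only counted.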

d. AWS Glue

With AWS Glue, you can get a data catalog and a serverless ETL (extract, transform, and load) service without managing any infrastructure.

You can use AWS Glue to find, catalog, and prepare streaming data for analysis. It can also run streaming ETL jobs that continuously clean, enrich, and load streaming data into AWS data stores, such as Amazon S3 and Amazon Redshift.

Now that we have discussed Azure, AWS, and Kafka for their streaming analytics support, it’s time to see how you can pick the right platform for your business needs.

Choosing The Right Platform For Your Streaming Analytics Needs

Before looking at the criteria that make one platform stand out, recall the functionality that AWS, Azure, and Kafka each offer, as covered in the sections above.

Each option has its strengths and weaknesses, so pinpointing the right analytics tool is essential. Here are some pointers to steer you in the right direction:

- Identify the use case: The first step is to identify the use case. You should determine the type of data you need to process, the volume of data, and the required latency for processing the data.

- Evaluate features: After figuring out your use case, examine the features of each streaming analytics tool and see which one fits your needs. Consider elements such as scalability, fault tolerance, flexibility, and performance.

- Keep the infrastructure in mind: Consider the infrastructure compatibility of your streaming analytics tool. It should be compatible with the existing infrastructure and not require significant changes.

- Evaluate the cost: Cost is often the deciding factor. Therefore, you should consider the upfront and ongoing costs, including licensing, maintenance, and support.

Upgrading Your Streaming Analytics With Estuary Flow

Streaming analytics is essential for any business that wants to leverage real-time data and gain a competitive edge. While Azure, AWS, and Kafka are powerful solutions, they can also be complex and costly to set up and maintain.

That’s why you should consider our Estuary Flow product, an open-source DataOps platform that simplifies streaming analytics and integrates all of your systems.

Estuary Flow lets you build, test, and scale real-time pipelines that continuously capture, transform, and materialize data across all of your systems, using batch and streaming paradigms.

So, how does Flow fit into streaming analytics?

The platform offers a unique approach:

- Real-time transformations: Flow lets you transform data on the fly between source and destination systems. You can use SQL or other languages to define these data transformations, known as “derivations.”

- Integrate with real-time data warehouses: You can easily move data from any supported source to a storage system designed for real-time analytics, such as Rockset. You can also sync data to a traditional data warehouse, like Snowflake or BigQuery, effectively turning any data warehouse into a real-time data warehouse.

- Integrate with Kafka, Kinesis, and other streaming platforms. Easily plug into your existing streaming infrastructure and expand it with a few clicks.

You can also monitor and troubleshoot your pipelines using a web-based interface, and scale your pipelines automatically to handle any data volume or velocity.

Its event-based architecture helps keep latency to a minimum. Moreover, Flow is open-source, so you can access its source code and modify it to meet organizational requirements.

If you want to find out if Estuary Flow fits your needs, check this out.

Conclusion

Streaming analytics is a game-changer for businesses that want to leverage real-time data and gain a competitive edge. By analyzing data streams as they arrive, streaming analytics can provide insights into consumer behavior, market trends, and operational efficiency, helping businesses make smarter decisions faster than ever before.

Dedicated tools and services available on Microsoft Azure, AWS, and Apache Kafka offer scalability, reliability, and security, allowing organizations to process, transform, and analyze data in real time. These tools can handle large volumes and velocities of data and support various data formats and sources. They also offer features such as fault tolerance, data encryption, and data retention.

If you are looking for a simple and powerful way to stream and analyze your data in real-time, you should give Estuary Flow a try. It’s a no-code GUI-based solution that’s super easy to use and deploy. Plus, it works really well with services on AWS, Azure, and Kafka. So let’s start by signing up for our beta program.
